Updated March 20, 2023

What is the Apache Hadoop Ecosystem?

The Apache Hadoop Ecosystem is an open-source framework, or data platform, designed to store and analyze very large collections of unstructured data. Digital media around the world generate enormous volumes of data, and big data technology has grown up to handle it. Apache Hadoop was among the first platforms to ride this wave of innovation.

What Comprises the Hadoop Data Architecture/Ecosystem?

The Hadoop Ecosystem is not a programming language or a service; it is a framework, or platform, that addresses big data problems. You can think of it as a suite that bundles several services, such as ingesting, storing, maintaining, and analyzing data. The sections below give a brief idea of how these services work individually and together. The Apache Hadoop architecture consists of various technologies and Hadoop components through which even complex data problems can be solved efficiently.

Each part is described below:

- NameNode: It directs how data is handled across the cluster and keeps track of where each piece of data is stored.

- DataNode: It writes the data to local storage. Keeping all data in a single place is not recommended, since an outage could then cause data loss.

- TaskTracker: It accepts the tasks assigned to a slave node.

- Map: It takes data from a stream and processes each line by splitting it into separate fields.

- Reduce: Here the fields obtained through Map are grouped or combined with one another.
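
The last two steps in the list above (splitting each line into fields, then grouping the fields) can be sketched in plain Python. This is a minimal single-process illustration, not Hadoop's Java API; the comma-separated `city,amount` input format and the function names `map_line` and `reduce_pairs` are assumptions made for the example.

```python
from collections import defaultdict


def map_line(line):
    """Map step: split one comma-separated line into fields, emit (key, value)."""
    city, amount = line.strip().split(",")
    return city, float(amount)


def reduce_pairs(pairs):
    """Reduce step: group the values by key and combine them (here, by summing)."""
    totals = defaultdict(float)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


# Hypothetical input: one record per line, as it might arrive from a stream.
lines = ["paris,10.0", "berlin,4.5", "paris,2.5"]
totals = reduce_pairs(map_line(line) for line in lines)
print(totals)
```

In a real cluster the map calls would run in parallel on many nodes and the framework would route all pairs with the same key to the same reducer; here both steps run in one process to keep the idea visible.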

Apache Hadoop Ecosystem – Step-by-Step

Each element of the Hadoop ecosystem has its own distinct role. A holistic view of the Hadoop architecture gives prominence to Hadoop Common, the Hadoop Distributed File System (HDFS), Hadoop YARN, and Hadoop MapReduce. Hadoop Common provides the Java libraries, utilities, OS-level abstraction, necessary Java files, and scripts needed to run Hadoop; Hadoop YARN is a framework for job scheduling and cluster resource management. In the Hadoop architecture, HDFS provides high-throughput access to application data, and Hadoop MapReduce provides YARN-based parallel processing of large data sets.

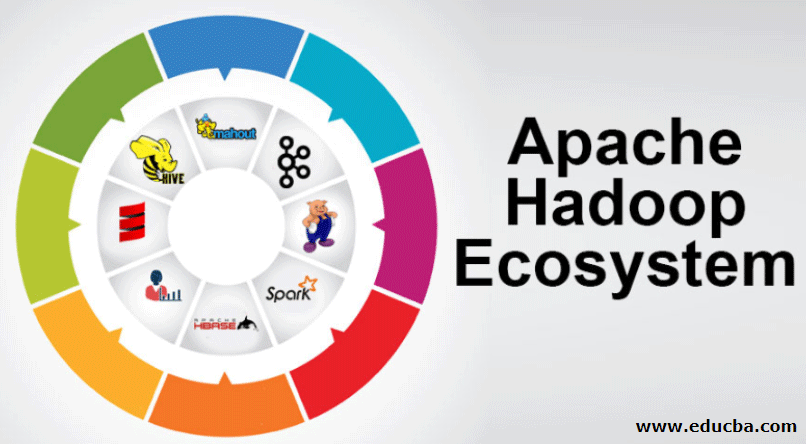

Apache Hadoop Ecosystem Overview:

It helps to understand the components below before you begin working with the Hadoop Ecosystem.

Below are the essential components:

- HDFS: This is the core component of the Hadoop Ecosystem, and it can store enormous amounts of structured, semi-structured, and unstructured data.

- YARN: It acts as the brain of the Hadoop ecosystem. All processing is coordinated here, including resource allocation, job scheduling, and processing activities.

- MapReduce: It combines two processes, Map and Reduce, and forms the core processing component, which handles large data sets using parallel and distributed algorithms inside the Hadoop ecosystem.

- Apache Pig: It is a procedural language used for parallel processing applications that handle large data sets in the Hadoop environment, and it serves as an alternative to Java programming.

- HBase: It is an open-source, non-relational (NoSQL) database. It supports all data types and so can handle any kind of data inside a Hadoop system.

- Mahout, Spark MLlib: Mahout is used for machine learning and provides an environment for developing machine learning applications. Spark MLlib is the machine learning library that ships with Apache Spark.

- ZooKeeper: To manage clusters, one can use ZooKeeper, also known as the king of coordination, which provides reliable, fast, and organized operational services for Hadoop clusters.

- Oozie: Apache Oozie handles job scheduling and works as an alarm and clock service inside the Hadoop Ecosystem.

- Ambari: It is a project of the Apache Software Foundation that makes the Hadoop ecosystem more manageable.
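
To make the HDFS entry above more concrete, the sketch below shows how a file might be cut into fixed-size blocks with several copies of each block spread over different DataNodes, so that losing one node does not lose data. It is a simplified model under stated assumptions: the 128 MB block size and three replicas are used as example values, the round-robin placement is a stand-in for HDFS's real placement policy, and `place_blocks` is a hypothetical helper, not part of any Hadoop API.

```python
import math

BLOCK_SIZE_MB = 128  # assumed block size for this sketch
REPLICATION = 3      # assumed number of copies kept of each block


def place_blocks(file_size_mb, datanodes,
                 block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return {block_index: [datanode, ...]} using simple round-robin placement."""
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    n_blocks = math.ceil(file_size_mb / block_size_mb)
    placement = {}
    for block in range(n_blocks):
        # Consecutive nodes (wrapping around) guarantee distinct replicas.
        placement[block] = [
            datanodes[(block + r) % len(datanodes)] for r in range(replication)
        ]
    return placement


# A hypothetical 300 MB file on a four-node cluster needs three blocks.
plan = place_blocks(300, ["dn1", "dn2", "dn3", "dn4"])
for block, nodes in plan.items():
    print(block, nodes)
```

The mapping returned here is the kind of bookkeeping the NameNode is responsible for, while the DataNodes hold the block contents themselves.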

Hadoop YARN

Think of YARN as the brain of your Hadoop Ecosystem. It carries out all of your processing activities by allocating resources and scheduling tasks.

It has two major components: ResourceManager and NodeManager.

- ResourceManager: It is the main node on the processing side. It receives processing requests and passes parts of them to the corresponding NodeManagers, where the actual processing takes place.

- NodeManagers: These are installed on every DataNode. Each one is responsible for executing tasks on its DataNode.
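
The division of labour between the two components can be illustrated with a toy scheduler. The classes below only borrow the names `ResourceManager` and `NodeManager`; they are not YARN's API, and the "pick the node with the most free slots" rule and the slot counts are assumptions chosen to keep the sketch short.

```python
class NodeManager:
    """Toy per-node agent that runs tasks up to a fixed number of slots."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.running = []

    def free_slots(self):
        return self.capacity - len(self.running)

    def launch(self, task):
        self.running.append(task)


class ResourceManager:
    """Toy central scheduler that hands each request to a NodeManager."""

    def __init__(self, node_managers):
        self.node_managers = node_managers

    def submit(self, task):
        # Only nodes with spare capacity are candidates for the task.
        candidates = [nm for nm in self.node_managers if nm.free_slots() > 0]
        if not candidates:
            return None  # cluster is full; a real scheduler would queue the task
        target = max(candidates, key=NodeManager.free_slots)
        target.launch(task)
        return target.name


rm = ResourceManager([NodeManager("node-a", 2), NodeManager("node-b", 1)])
assignments = [rm.submit(f"task-{i}") for i in range(4)]
print(assignments)
```

The point of the sketch is the shape of the interaction: requests arrive at one central place, and the actual work is carried out on the nodes that hold the capacity.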

How does Apache Hadoop work?

- Hadoop is designed to scale up from a single server to thousands of machines, each offering local computation and storage. Rather than relying on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, delivering a highly available service on top of a cluster of computers, each of which may be prone to failure.

- Look further, however, and there is even more going on. Hadoop is almost completely modular, which means that you can swap out nearly any of its components for a different software tool. That makes the architecture very flexible as well as robust and efficient.
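
Handling failures at the application layer, as described in the first point above, can be sketched as a simple retry on another machine. This is an illustration of the idea only: `run_with_failover` and `flaky_run` are hypothetical helpers, the node names are made up, and Hadoop's real recovery logic is considerably more involved.

```python
def run_with_failover(task, nodes, run_on):
    """Try the task on each node in turn; return (node, result) on first success."""
    errors = {}
    for node in nodes:
        try:
            return node, run_on(node, task)
        except RuntimeError as exc:
            # Record the failure and move on to the next machine.
            errors[node] = str(exc)
    raise RuntimeError(f"task {task!r} failed on all nodes: {errors}")


def flaky_run(node, task):
    """Stand-in executor: pretend that node-1 is down."""
    if node == "node-1":
        raise RuntimeError("node unreachable")
    return f"{task} done"


node, result = run_with_failover("count-words", ["node-1", "node-2"], flaky_run)
print(node, result)
```

Because the retry lives in software, the cluster can be built from ordinary machines that are individually allowed to fail.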

Apache Hadoop Spark:

- Apache Spark is a framework for real-time data analytics in a distributed computing environment. It performs computations in memory to increase the speed of data processing.

- It is faster at processing large-scale data because it uses in-memory computation and other optimizations. As a result, it requires considerable processing power.
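
Why keeping data in memory speeds things up can be shown with a toy model in plain Python. This is not Spark's API: the `Dataset` class, its `keep_in_memory` flag, and the `load_squares` source are all invented for the illustration. The sketch counts how often the source has to be recomputed when two separate analyses read the same data.

```python
class Dataset:
    """Toy dataset that is computed on demand and optionally kept in memory."""

    def __init__(self, compute, keep_in_memory=False):
        self._compute = compute
        self._keep = keep_in_memory
        self._cached = None
        self.compute_calls = 0

    def records(self):
        if self._cached is not None:
            return self._cached      # served straight from memory
        self.compute_calls += 1      # stands in for an expensive read or job
        data = list(self._compute())
        if self._keep:
            self._cached = data
        return data


def load_squares():
    """Hypothetical expensive source."""
    return (n * n for n in range(1000))


recomputed = Dataset(load_squares)
in_memory = Dataset(load_squares, keep_in_memory=True)
for ds in (recomputed, in_memory):
    total = sum(ds.records())    # first analysis
    largest = max(ds.records())  # second analysis over the same data

print(recomputed.compute_calls, in_memory.compute_calls)
```

The cached variant pays for the computation once and trades memory for speed, which is the trade-off the two points above describe.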

How does Apache Pig work?

- Apache Pig is a convenient tool developed by Yahoo for analyzing very large data sets efficiently and easily. It provides a high-level data flow language, Pig Latin, which is optimized, extensible, and easy to use.

- The most notable feature of Pig programs is that their structure is open to substantial parallelization, which makes it easy to handle large data sets.

Pig Use Case:

- An individual's healthcare information is private and should not be disclosed to others.

- This information should be masked to maintain confidentiality, but healthcare data sets are so large that identifying and removing personal healthcare information becomes a critical task. Apache Pig can be used in such circumstances to de-identify health information.
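
The de-identification step in this use case can be sketched as a per-record transformation. The example is written in Python rather than Pig Latin, and everything specific in it is an assumption: the field names (`patient_id`, `name`, `phone`, `diagnosis`), the choice of a salted SHA-256 token, and the `deidentify` helper itself. Replacing an ID with a hash is pseudonymization and may not be sufficient on its own for real compliance requirements.

```python
import hashlib

# Hypothetical fields that directly identify a person and must be dropped.
DIRECT_IDENTIFIERS = {"name", "phone"}


def deidentify(record, salt):
    """Drop direct identifiers and replace the patient ID with a salted token."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    raw = (salt + str(record["patient_id"])).encode("utf-8")
    cleaned["patient_id"] = hashlib.sha256(raw).hexdigest()[:12]
    return cleaned


record = {
    "patient_id": 1042,
    "name": "Jane Doe",
    "phone": "555-0100",
    "diagnosis": "asthma",
}
safe = deidentify(record, salt="example-salt")
print(sorted(safe))
```

Because each record is handled independently, this kind of job parallelizes well across a cluster, which is what makes it a natural fit for a tool like Pig.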

Conclusion

Hadoop is designed to scale up from a single server to thousands of machines, each offering local computation and storage. Beyond that, it is almost completely modular, which means that you can swap out nearly any of its parts for a different software tool. That makes the architecture very flexible as well as robust and efficient.
