Updated March 21, 2023

Introduction to the Classification of Neural Networks

Neural networks are one of the most effective ways (yes, you read that right) to solve real-world problems in Artificial Intelligence. They are also one of the most extensively researched areas in computer science, so much so that a new form of neural network has probably been developed while you are reading this article. There are hundreds of neural networks designed to solve problems specific to different domains. Here we walk you through the basic types of neural networks in order of increasing complexity.

Different Types of Basic Neural Networks

The basic types of neural networks can be classified as follows:

1. Shallow Neural Networks (Collaborative Filtering)

Neural networks are made of groups of perceptrons that simulate the neural structure of the human brain. Shallow neural networks have a single hidden layer of perceptrons. One common example of a shallow neural network is collaborative filtering, where the hidden layer is trained to represent the similarities between entities (for example, users and products) in order to generate recommendations. The recommendation systems of Netflix, Amazon, YouTube, etc. use a version of collaborative filtering to recommend products according to each user's interests.
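To make the idea concrete, here is a minimal sketch of collaborative filtering as matrix factorization, assuming explicit 1-5 ratings. It can be read as a shallow network: a user or item index selects a row of a single hidden layer of latent factors, and the predicted rating is the dot product of the two rows. The names `train_mf` and `predict`, the toy ratings, and the hyperparameters are hypothetical, and bias terms and regularization are left out for brevity; real recommenders are far more elaborate.

```python
import random

def train_mf(ratings, n_users, n_items, k=2, lr=0.01, epochs=5000, seed=0):
    """Learn a k-dimensional latent vector per user and per item with SGD.

    ratings is a list of (user, item, rating) triples; a predicted rating
    is the dot product of the user's vector and the item's vector.
    """
    rng = random.Random(seed)
    users = [[rng.uniform(0.1, 1.0) for _ in range(k)] for _ in range(n_users)]
    items = [[rng.uniform(0.1, 1.0) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - sum(a * b for a, b in zip(users[u], items[i]))
            for f in range(k):
                uf, itf = users[u][f], items[i][f]  # use old values for both updates
                users[u][f] += lr * err * itf
                items[i][f] += lr * err * uf
    return users, items

def predict(users, items, u, i):
    return sum(a * b for a, b in zip(users[u], items[i]))

# Hypothetical toy ratings: user 1 has not rated item 1 yet.
ratings = [(0, 0, 5), (0, 1, 4), (0, 2, 1),
           (1, 0, 5), (1, 2, 1),
           (2, 0, 1), (2, 1, 1), (2, 2, 5)]
users, items = train_mf(ratings, n_users=3, n_items=3)
# User 1 rated items 0 and 2 like user 0 did, so item 1 should score high.
print(round(predict(users, items, 1, 1), 2))
```

The similarity between users 0 and 1 is never stated explicitly; it emerges in the learned latent vectors, which is what lets the model fill in the missing rating.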

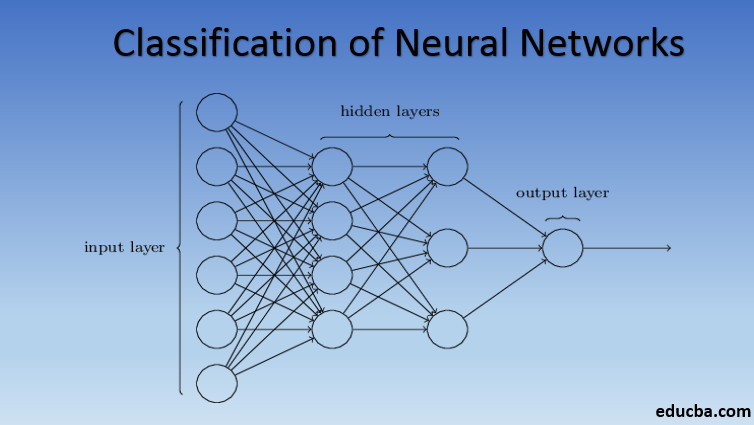

2. Multilayer Perceptron (Deep Neural Networks)

Neural networks with more than one hidden layer are called deep neural networks. Spoiler alert! All of the following neural networks are forms of deep neural networks tweaked or improved to tackle domain-specific problems. In general, they help us achieve universality: given enough hidden neurons, a neural network can approximate any continuous function, which is what lets it model complex real-world problems.

The Universal Approximation Theorem is the theoretical core of why deep neural networks can be trained to fit almost any model. A multilayer perceptron is built from fully connected layers, each computing a matrix multiplication followed by a nonlinear activation function, and the network's weights are optimized with the backpropagation algorithm. We will now look at the improvements that led to different forms of deep neural networks.
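As a small illustration of fully connected layers, the sketch below computes an MLP forward pass for XOR, a function that no single-layer perceptron can represent. The weights are hand-picked for readability (a hypothetical example); in practice backpropagation would learn them from data. Each layer is a matrix multiplication plus a bias, and the hidden layer applies a ReLU nonlinearity.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def dense(x, weights, bias):
    """Fully connected layer: y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def mlp(x):
    # Hidden layer: 2 inputs -> 2 ReLU units (hand-picked, not learned)
    h = relu(dense(x, [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]))
    # Output layer: 2 hidden units -> 1 linear output
    return dense(h, [[1.0, -2.0]], [0.0])[0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, mlp(x))  # outputs 0.0, 1.0, 1.0, 0.0 (XOR)
```

The second hidden unit only switches on when both inputs are 1, and the output layer uses it to cancel the first unit; that interaction between layers is what a single layer cannot express.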

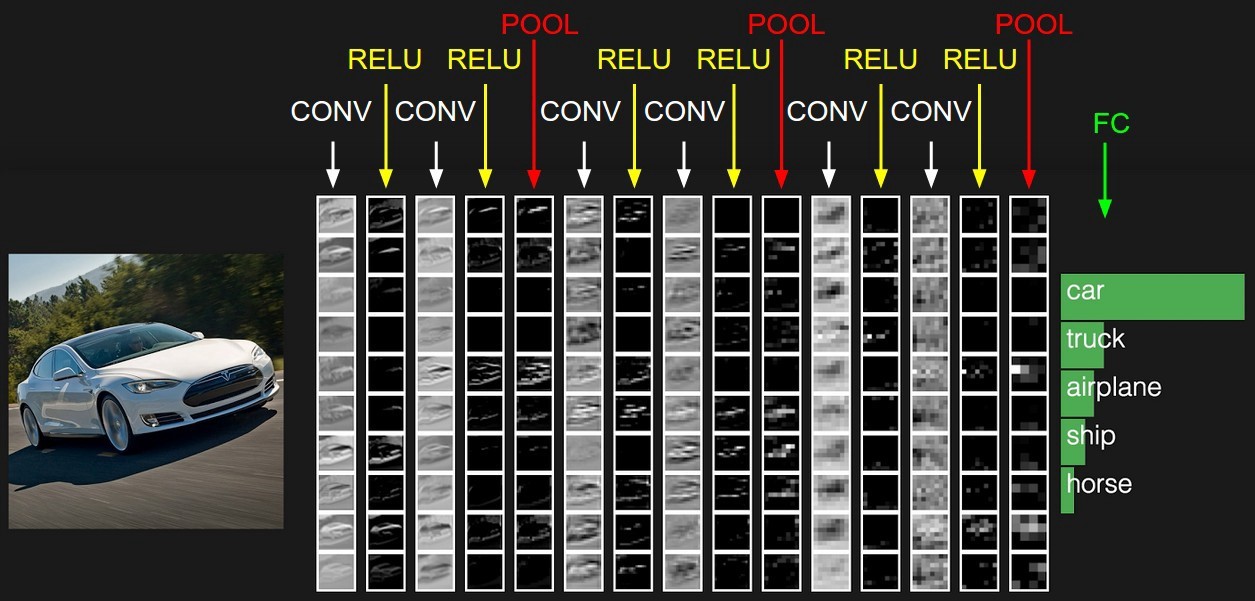

3. Convolutional Neural Network (CNN)

CNNs are the most mature form of deep neural networks and produce highly accurate results in computer vision, in some tasks even better than human results. CNNs are made of convolutional layers that scan every pixel of the images in a dataset with small filters. As the data passes through layer after layer, CNNs start recognizing patterns and, from those patterns, the objects in the images. These recognized objects are used extensively in applications for identification, classification, etc. Recent practices like transfer learning have led to significant improvements in the accuracy of CNN models. Google Translate and Google Lens are among the most state-of-the-art examples of CNNs.

The applications of CNNs are growing rapidly, as they are even used to solve problems that are not primarily related to computer vision. A very simple but intuitive explanation of CNNs can be found here.
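The scanning operation at the heart of a CNN can be sketched in plain Python as below. This is a minimal illustration, not how libraries implement it: `conv2d`, `relu2d`, and `max_pool2d` are hypothetical helpers, the 4x4 image and 2x2 vertical-edge filter are made up, and, like most deep learning frameworks, the "convolution" is computed as cross-correlation (the kernel is not flipped). In a real CNN the filter values are learned rather than hand-written.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation): slide the kernel over every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu2d(fmap):
    return [[max(0, v) for v in row] for row in fmap]

def max_pool2d(fmap, size):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Hypothetical image: dark left half, bright right half
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
vertical_edge = [[-1, 1],
                 [-1, 1]]

fmap = relu2d(conv2d(image, vertical_edge))
print(fmap)                 # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
print(max_pool2d(fmap, 2))  # [[2]]
```

The feature map lights up only in the column where dark meets bright, which is the kind of low-level pattern that deeper layers combine into object parts.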

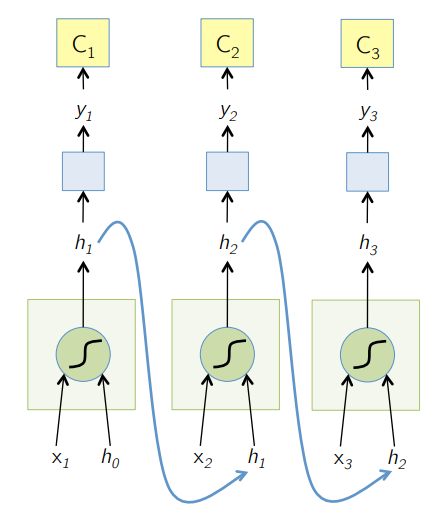

4. Recurrent Neural Network (RNN)

RNNs are a form of deep neural network widely used for solving problems in NLP. Simply put, an RNN feeds the output (hidden state) of its hidden layer back in together with the next input, carrying information forward from one time step of the input sequence to the next. This memory of earlier inputs helps the model refine its predictions as it reads the sequence. Such models are very helpful in understanding the semantics of text in NLP tasks. There are different variants of RNNs, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU). In the diagram below, the activations from h1 and h2 are fed together with inputs x2 and x3, respectively.

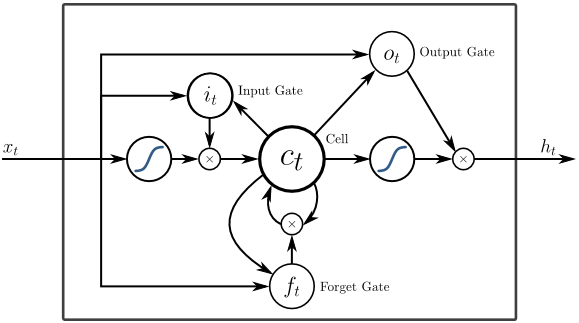
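The feedback loop in the diagram can be sketched as a scalar Elman-style RNN, assuming a single hidden unit and a tanh activation; `rnn_forward` and its parameter names are hypothetical, chosen for illustration. Each hidden state is computed from the current input and the previous hidden state.

```python
import math

def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h, states = h0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)  # previous state is fed back in
        states.append(h)
    return states

# With recurrence: the first input still influences later states.
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
# Without recurrence (w_h = 0): states drop to zero as soon as the input does.
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.0, b=0.0))
```

Comparing the two calls shows that the recurrent weight `w_h` is what gives the network its memory; a trained RNN learns this weight (a matrix, in the multi-unit case) by backpropagation through time.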

5. Long Short-Term Memory (LSTM)

LSTMs are designed specifically to address the vanishing gradient problem in RNNs. Vanishing gradients occur in large neural networks (including RNNs unrolled over long sequences) when the gradients of the loss function shrink toward zero, which effectively stops the network from learning. LSTM mitigates this problem by keeping a cell state that has no squashing activation function on its recurrent path, so stored values can be carried from step to step largely unchanged unless the gates modify them. This small change gave big improvements in the final model, resulting in tech giants adopting LSTM in their solutions. Over to the “simplest, self-explanatory” illustration of an LSTM:

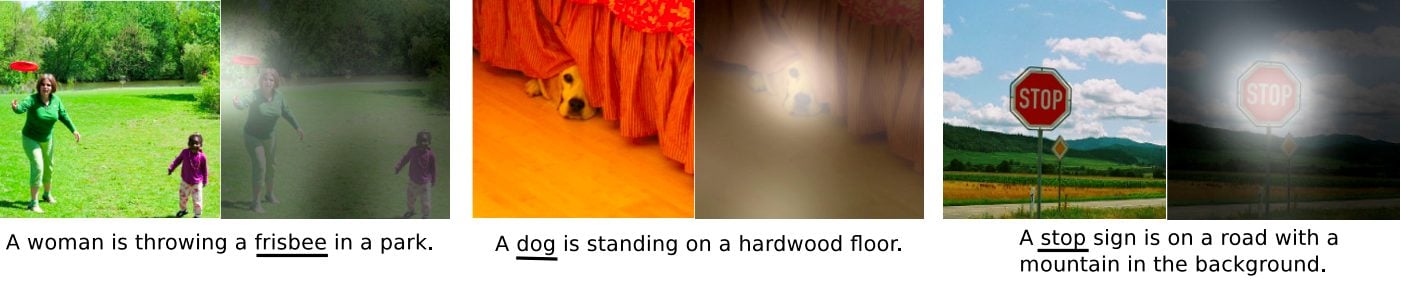
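The sketch below shows one scalar LSTM step and why its cell path helps with vanishing gradients. It assumes hypothetical gate parameters: the forget gate is biased open, the input gate is biased shut, and every gate ignores `h`, so the product of forget-gate values is the exact gradient of the final cell state with respect to the initial one. For comparison, it tracks the same gradient through a plain tanh RNN with a recurrent weight of 0.5, where each step multiplies the gradient by at most 0.5. The names `lstm_step` and `params` are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step; p maps each gate to a (w_x, w_h, b) triple."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b
    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate values to write
    c = f * c_prev + i * g           # additive update, no squashing on the c path
    h = o * math.tanh(c)
    return h, c, f

# Hypothetical parameters: forget gate biased open, input gate biased shut.
params = {"forget": (0.0, 0.0, 4.0), "input": (0.0, 0.0, -4.0),
          "output": (0.0, 0.0, 0.0), "candidate": (0.0, 0.0, 0.0)}

T = 20
h, c = 0.0, 1.0
lstm_grad = 1.0             # d c_T / d c_0 = product of forget gates here
rnn_h, rnn_grad = 0.0, 1.0  # plain RNN for comparison, w_h = 0.5
for _ in range(T):
    h, c, f = lstm_step(0.3, h, c, params)
    lstm_grad *= f
    rnn_h = math.tanh(0.5 * rnn_h + 0.3)
    rnn_grad *= 0.5 * (1.0 - rnn_h ** 2)  # d h_t / d h_{t-1} = w_h * tanh'(.)

print(f"LSTM cell-path gradient after {T} steps: {lstm_grad:.3f}")
print(f"RNN hidden-path gradient after {T} steps: {rnn_grad:.2e}")
```

After 20 steps the RNN gradient has shrunk by more than six orders of magnitude, while the LSTM cell-path gradient stays of order one because the forget gate sits close to 1.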

6. Attention-based Networks

Attention models are gradually taking over from even the newer RNNs in practice. Attention models work by focusing on a subset of the information they are given, thereby filtering out the overwhelming amount of background information that is not needed for the task at hand. They can use soft attention, hard attention, or a combination of both; soft attention is differentiable, so it can be fitted by backpropagation. Multiple attention layers stacked hierarchically form a Transformer. Because transformers process the positions of a sequence in parallel, they are efficient to train and produce state-of-the-art results with comparatively less training time. An attention distribution becomes very powerful when used with a CNN or RNN and can produce a text description of an image, as follows.

Tech giants like Google and Facebook are quickly adopting attention models to build their solutions.

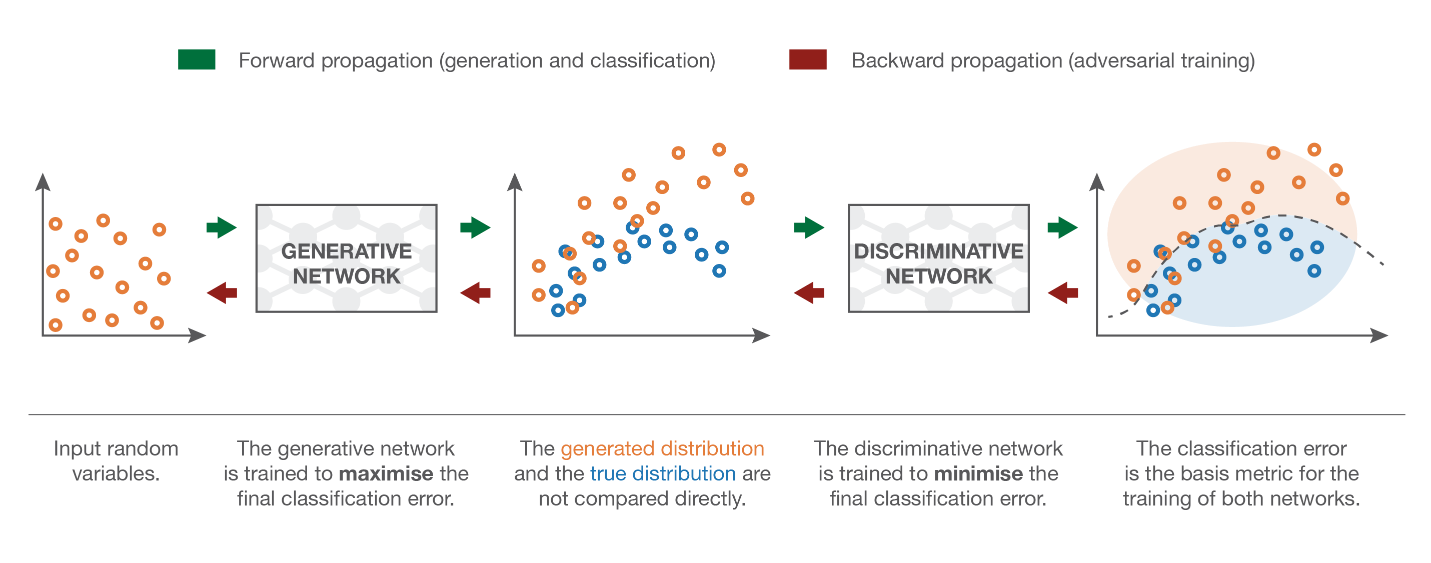
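Soft attention can be sketched as scaled dot-product attention, the form used in Transformers: each key is scored against a query, the scores are normalized with a softmax, and the output is the weighted average of the values. This is a minimal single-query version in plain Python; `attention`, `softmax`, and the toy vectors are illustrative assumptions, and real implementations batch many queries and use learned query, key, and value projections.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Soft (scaled dot-product) attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the attention-weighted average of the value vectors
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return out, weights

keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [0.0, 0.0]]
out, weights = attention([4.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
print([round(v, 2) for v in out])
```

The query aligns with the first key, so most of the weight lands there and the output is dominated by the first value; the other inputs are down-weighted rather than discarded, which is what makes soft attention differentiable.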

7. Generative Adversarial Network (GAN)

Although deep learning models provide state-of-the-art results, they can be fooled by humans who add carefully crafted noise to real-world data. GANs are a more recent development in deep learning that helps tackle such scenarios. GANs use unsupervised learning: a generator network produces synthetic data, and a discriminator network is trained on this generated data along with the actual dataset to tell the two apart. The generated (adversarial) data is designed to fool the discriminator, and as the two networks compete, both improve. The resulting model tends to be a better approximation that can overcome such noise. Research interest in GANs has led to more sophisticated implementations like Conditional GAN (CGAN), Laplacian Pyramid GAN (LAPGAN), Super Resolution GAN (SRGAN), etc.
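The competition between generator and discriminator is usually written as a minimax game over the value function V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The sketch below only estimates that value from a few hypothetical discriminator outputs; it does not train either network, and `gan_value` is an illustrative name.

```python
import math

def gan_value(d_real, d_fake):
    """Estimate V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))] from samples.

    d_real: discriminator outputs (probabilities) on real samples x
    d_fake: discriminator outputs on generated samples G(z)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that confidently separates real from fake scores close to 0 ...
print(gan_value([0.99, 0.98], [0.01, 0.02]))
# ... while a fooled discriminator that outputs 0.5 everywhere scores -log 4.
print(gan_value([0.5, 0.5], [0.5, 0.5]), -math.log(4))
```

When the generator's samples are indistinguishable from real data, the best the discriminator can do is output 0.5 everywhere, and the value settles at -log 4, the theoretical optimum of this standard GAN objective.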

Conclusion

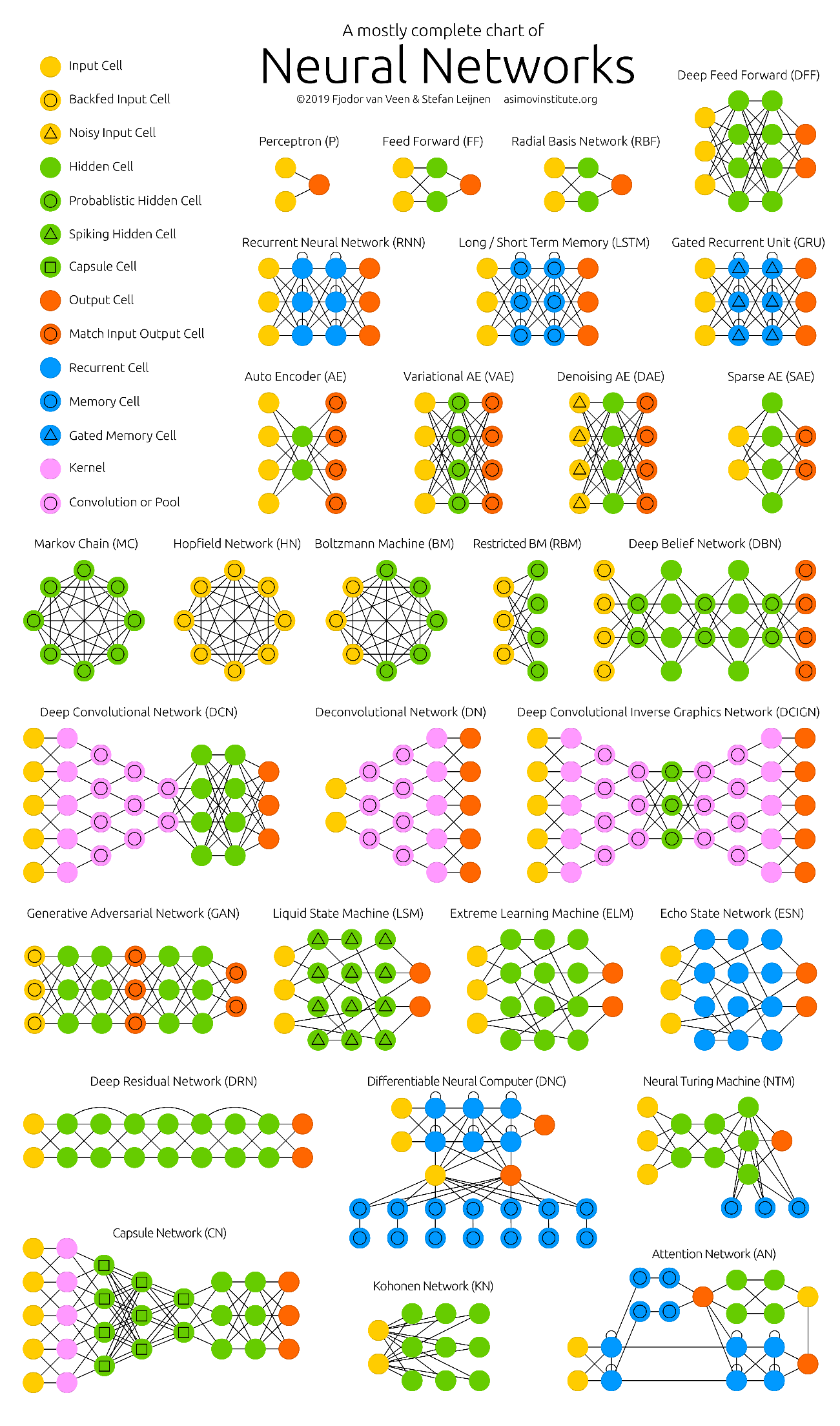

Deep neural networks have been pushing the limits of computers. They are not limited to classification (CNN, RNN) or prediction (collaborative filtering); they can even generate data (GAN). The generated data ranges from beautiful works of art to controversial deepfakes, and these models are surpassing humans at one more task every day. Hence, we should also consider AI ethics and impacts while working hard to build efficient neural network models. Time for a neat infographic about neural networks.
