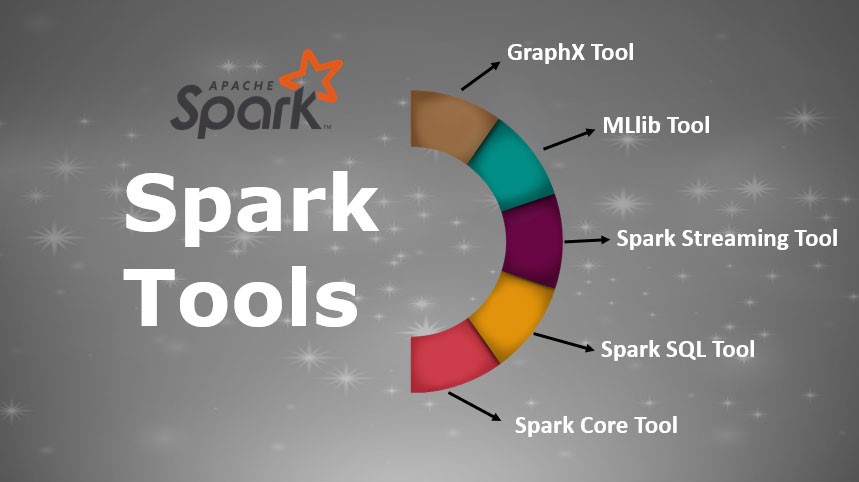

Introduction to Spark Tools

Spark tools are the major components of the Spark framework, used for efficient and scalable data processing in big data analytics. The Spark framework is available as open-source software under the Apache license. It comprises five important data processing tools: GraphX, MLlib, Spark Streaming, Spark SQL, and Spark Core. GraphX processes and manages graph data. MLlib implements machine learning on distributed datasets. Spark Streaming handles stream data processing, while Spark SQL is the primary tool for structured data analysis. Spark Core manages the Resilient Distributed Dataset, known as RDD.

Top 5 Spark Tools

The five Spark tools are GraphX, MLlib, Spark Streaming, Spark SQL, and Spark Core.

1. GraphX Tool

- GraphX is the Spark API for graphs and graph-parallel computation. It provides the Resilient Distributed Property Graph, an extension of the Spark RDD.

- GraphX has a growing collection of graph algorithms and builders that simplify graph analytics tasks.

- This tool builds and manipulates graph data to perform comparative analytics. It transforms and merges structured data at high speed.

- GraphX is used programmatically through its API rather than through a graphical interface. You can pick from its fast-growing collection of algorithms or develop custom algorithms, for example with its Pregel API, as part of an ETL and analysis workflow.

- The separate GraphFrames package lets you perform graph operations on DataFrames, including leveraging the Catalyst optimizer for graph queries. GraphX itself ships with a selection of distributed algorithms.

- GraphX’s distributed algorithms process graph structures and include an implementation of Google’s PageRank algorithm. They use Spark Core’s RDD approach to model the underlying data.
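
To make the PageRank idea mentioned above more concrete, here is a minimal sketch in plain Python. It does not use GraphX or Spark; the function name `pagerank`, its parameters, and the sample edge list are all illustrative assumptions. It uses the normalized variant of the algorithm in which the scores sum to 1, which may differ from the scaling a particular library applies.

```python
def pagerank(edges, damping=0.85, iterations=20):
    """Iteratively compute PageRank for a directed graph given as (src, dst) pairs.

    Illustrative helper, not part of any Spark API.
    """
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    n = len(nodes)
    ranks = {node: 1.0 / n for node in nodes}  # start from a uniform distribution
    for _ in range(iterations):
        # Every node gets a small base score, then shares flow along the edges.
        new_ranks = {node: (1.0 - damping) / n for node in nodes}
        for src in nodes:
            # A node with no outgoing links spreads its rank over all nodes.
            targets = out_links[src] or nodes
            share = damping * ranks[src] / len(targets)
            for dst in targets:
                new_ranks[dst] += share
        ranks = new_ranks
    return ranks


# Hypothetical sample graph: a -> b -> c -> a, plus d -> a.
edges = [("a", "b"), ("b", "c"), ("c", "a"), ("d", "a")]
ranks = pagerank(edges)
```

In this sample, node `d` has no incoming links, so it ends up with the lowest score. A distributed engine would run the same per-iteration update across partitions of the edge list instead of in a single loop.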

2. MLlib Tool

- MLlib is a library of basic machine learning services. It offers various machine learning algorithms that support many operations on data, with the aim of obtaining meaningful insights.

- The Spark platform bundles libraries to apply graph analysis techniques and machine learning to data at scale.

- MLlib has a framework for developing machine learning pipelines, enabling simple implementation of transformations, feature extraction, and feature selection on any structured dataset. It includes common machine learning methods for collaborative filtering, regression, classification, and clustering.

- However, facilities for modeling and training deep neural networks are not available. MLlib supplies robust algorithms and the speed needed to build and maintain machine learning models that drive business intelligence.

- It also runs natively on top of Apache Spark, delivering quick and highly scalable machine learning.
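
The pipeline idea described above (a chain of transformations followed by a model) can be sketched in plain Python. This is not MLlib code: the classes `Standardize`, `LinearFit`, and `Pipeline` are hypothetical stand-ins, and the design is simplified so that each stage is fitted in place rather than returning a separate fitted model.

```python
from statistics import mean, pstdev


class Standardize:
    """Feature transformation: rescale values to zero mean and unit variance."""

    def fit(self, xs, ys=None):
        self.mu = mean(xs)
        self.sigma = pstdev(xs) or 1.0  # avoid dividing by zero for constant input
        return self

    def transform(self, xs):
        return [(x - self.mu) / self.sigma for x in xs]


class LinearFit:
    """Regression stage: ordinary least squares for y = slope * x + intercept."""

    def fit(self, xs, ys):
        mx, my = mean(xs), mean(ys)
        var = sum((x - mx) ** 2 for x in xs)
        self.slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var
        self.intercept = my - self.slope * mx
        return self

    def transform(self, xs):
        return [self.slope * x + self.intercept for x in xs]


class Pipeline:
    """Fit the stages in order, feeding each stage the previous stage's output."""

    def __init__(self, stages):
        self.stages = stages

    def fit(self, xs, ys):
        for stage in self.stages:
            stage.fit(xs, ys)
            xs = stage.transform(xs)
        return self

    def transform(self, xs):
        for stage in self.stages:
            xs = stage.transform(xs)
        return xs


# Hypothetical training data that follows y = 2x + 1 exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
model = Pipeline([Standardize(), LinearFit()]).fit(xs, ys)
predictions = model.transform([5.0])
```

The benefit of the pipeline shape is that new data passes through exactly the same transformations that were learned during training, which is the property a pipeline framework is meant to guarantee.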

3. Spark Streaming Tool

- This tool processes live streams of data in real time, as the data is produced by different sources. Examples of such data include status-update messages posted by visitors, log files, and others.

- Spark Streaming leverages Spark Core’s fast scheduling capability to execute streaming analytics. It ingests data in mini-batches and performs RDD (Resilient Distributed Dataset) transformations on them. Spark Streaming enables fault-tolerant, high-throughput processing of live data streams. The core stream unit is the DStream.

- A DStream is a series of Resilient Distributed Datasets that represents real-time data. Spark Streaming extends the Apache Spark paradigm of batch processing to streaming: it uses the Apache Spark API to break the stream into multiple micro-batches and manipulate them. Spark Streaming is the engine behind robust applications that need real-time data.

- Spark Streaming inherits the big data platform’s reliable fault tolerance, which makes it attractive for development. It brings interactive analytics to live data from almost any common source.
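
The micro-batch model described above can be illustrated without Spark. The following plain-Python sketch cuts a stream into small batches and applies the same transformation to each one. The helpers `micro_batches` and `count_words` and the sample events are hypothetical, and batches are cut by element count here purely for simplicity, whereas a streaming engine would typically cut them by time interval.

```python
from collections import Counter
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield consecutive lists of up to batch_size items from an iterable stream."""
    it = iter(stream)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def count_words(batch):
    """Batch-style transformation applied independently to each micro-batch."""
    return Counter(word for line in batch for word in line.split())


# Hypothetical log messages standing in for a live source.
events = ["error disk full", "ok", "error timeout", "ok", "ok"]
per_batch = [count_words(b) for b in micro_batches(events, batch_size=2)]

# Combining the per-batch results gives a running total across the stream.
running_total = sum(per_batch, Counter())
```

Because every micro-batch is processed with ordinary batch logic, code written for batch jobs can be reused for streaming with little change, which is the appeal of this design.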

4. Spark SQL Tool

This module combines the platform’s functional programming interface with relational processing. It supports querying data through both standard SQL and the Hive Query Language.

Spark SQL consists of 4 libraries:

- SQL Service

- Interpreter and Optimizer

- DataFrame API

- Data Source API

This tool’s function is to work with structured data. It gives integrated access to the most common data sources, including JDBC, JSON, Hive, Avro, and more. The tool organizes data into rows and named columns, a form well suited to returning the results of high-speed queries. Spark SQL integrates smoothly with both new and existing Spark programs, resulting in low computing costs and superior performance.

Apache Spark employs a query optimizer named Catalyst, which analyzes data and queries to create an efficient query plan that takes computation and data locality into account. The plan then executes the necessary calculations across the cluster. The current advice for development is to use the Spark SQL interface of Datasets and DataFrames.
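
To show the kind of relational query over named columns that the text describes, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in engine. Spark is not involved, and the table `visits`, its columns, and the sample rows are made up for illustration. The `SELECT` statement itself is ordinary standard SQL of the sort a SQL-capable engine is expected to accept.

```python
import sqlite3

# In-memory database standing in for a structured data source (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE visits (page TEXT, visitor TEXT, seconds INTEGER)")
conn.executemany(
    "INSERT INTO visits VALUES (?, ?, ?)",
    [("home", "ann", 30), ("home", "bob", 50), ("docs", "ann", 120)],
)

# Aggregate by a named column, the typical shape of a structured-data query.
rows = conn.execute(
    "SELECT page, COUNT(*) AS hits, AVG(seconds) AS avg_seconds "
    "FROM visits GROUP BY page ORDER BY page"
).fetchall()
conn.close()
```

The query states what result is wanted and leaves the execution strategy to the engine, which is the property that lets an optimizer choose an efficient plan.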

5. Spark Core Tool

- This is the basic building block of the platform. Among other things, it consists of components for memory management, job scheduling, and others. Spark Core hosts the API that defines the RDD and provides the APIs to build and manipulate data in RDDs.

- The core also provides distributed task dispatching and fundamental I/O functionality. Compared with Apache Hadoop components, the Spark Application Programming Interface is simple and easy for developers to use.

- The API conceals a large part of the complexity involved in a distributed processing engine behind relatively simple method invocations.

- Spark operates in a distributed way by combining a driver process, which splits a Spark application into multiple tasks, with many executor processes that receive those tasks and perform the work. The executors can be scaled up or down depending on the application’s requirements.

- All the tools in the Spark ecosystem interact smoothly and run with minimal overhead. This makes Spark both an extremely scalable and a very powerful platform. Work is ongoing to improve the tools in terms of both performance and usability.
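
The split between a coordinating process and the workers that run tasks, described above, can be sketched on a single machine with Python's standard library. This is an analogy rather than Spark code: the functions `split_into_partitions`, `task`, and `run_job` are hypothetical, and a thread pool stands in for the processes of a real cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Coordinator side: cut the input into roughly equal chunks, one task each."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def task(partition):
    """Worker side: the unit of work applied to one partition."""
    return sum(x * x for x in partition)


def run_job(data, num_workers):
    """Dispatch one task per partition to the pool, then combine partial results."""
    partitions = split_into_partitions(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(task, partitions))
    return sum(partial_results)


data = list(range(1, 11))
total = run_job(data, num_workers=3)
```

Changing `num_workers` changes how the work is divided but not the result, which mirrors how the number of workers can be scaled to match an application's needs.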
