Updated March 23, 2023

Introduction to Data Lake Architecture

A data lake extends the traditional data warehouse concept in the source types, processing types, and data structures it supports for business analytics solutions. Data lakes are mostly implemented through cloud providers and are architected with several data storage and data processing tools, with managed services used to process and maintain the data infrastructure. Supporting new data variants through iterative enhancements of the architecture adds value to the organization that implements a data lake.

There is a well-known analogy between a data lake and a real lake from Pentaho CTO James Dixon, who coined the term Data Lake. The data lake resembles a lake where water comes in from various sources and stays in its native form, whereas a packaged bottle of water resembles a data mart: the water undergoes several filtration and purification processes, just as data is processed for a data mart.

Data Lake Architecture

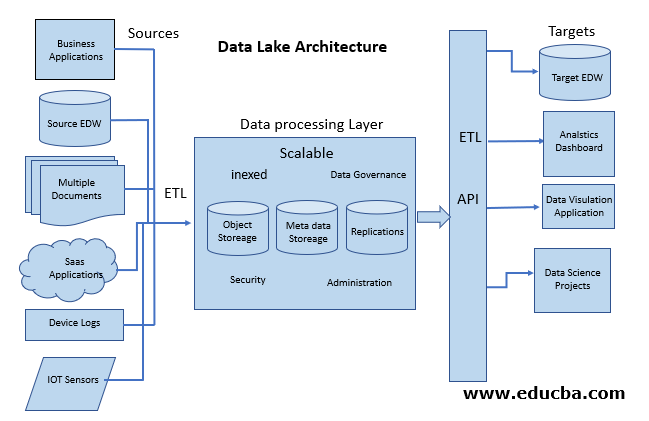

Let us understand what a data lake comprises by discussing the data lake architecture. The diagram represents a high-level data lake architecture with standard terminology.

A data lake architecture generally comprises three components or layers:

- Sources

- Data Processing Layer

- Target

1. Sources

We will discuss the sources from a data lake perspective.

- Sources are the providers of the business data to the data lake.

- ETL or ELT tools are used to retrieve data from the various sources for further data processing.

- Sources are categorized into two types based on their structure and formats for the ETL process:

a. Homogeneous sources

- These share similar data types or structures.

- The data is easy to join and consolidate.

- Example: Sources from MS SQL Server databases.

b. Heterogeneous sources

- These have different data formats and structures.

- It is tricky for ETL professionals to aggregate the sources into consolidated data for processing.

- Example: Sources such as flat files, NoSQL databases, RDBMS, and industry-standard formats like HL7, SWIFT, and EDI, which have predefined data formats.
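
The consolidation problem with heterogeneous sources can be illustrated with a minimal sketch. It assumes two hypothetical exports of the same kind of order record, one as a CSV flat file and one as JSON with different field names; the sample data, field names, and helper functions are illustrative assumptions, not part of any specific ETL tool.

```python
import csv
import io
import json

# Hypothetical sample data: the same kind of order record arriving from
# two heterogeneous sources, a CSV flat file and a JSON document store.
CSV_EXPORT = "order_id,amount\n1001,250.00\n1002,99.50\n"
JSON_EXPORT = '[{"id": 1003, "total": "75.25"}]'


def from_csv(text):
    """Map CSV rows onto the common (order_id, amount, source) shape."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"order_id": int(row["order_id"]),
               "amount": float(row["amount"]),
               "source": "csv"}


def from_json(text):
    """Map JSON records, which use different field names, onto the same shape."""
    for rec in json.loads(text):
        yield {"order_id": int(rec["id"]),
               "amount": float(rec["total"]),
               "source": "json"}


def consolidate(*streams):
    """Merge the normalized streams into one list sorted by order_id."""
    merged = [rec for stream in streams for rec in stream]
    return sorted(merged, key=lambda r: r["order_id"])


orders = consolidate(from_csv(CSV_EXPORT), from_json(JSON_EXPORT))
for order in orders:
    print(order)
```

Each source gets its own small mapping function, so adding another format only means adding another mapper rather than changing the consolidation step.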

Data lake architectures mostly use sources from the following:

Business Applications

- These are transactional business applications like ERP, CRM, SCM, or accounting systems, which are used to capture business transactions.

- They are mainly database or file-based data store applications that store transaction data.

- The data lake connects to these applications through connectors, adapters, APIs, or web services for ETL.

- Example: SAP ERP, Oracle Apps, QuickBooks.

EDW

- The data lake may source data from an existing enterprise data warehouse (EDW) to create a consolidated data reference together with other sources of data.

- These can be standard RDBMS-based EDWs or cloud-based data warehouses.

- In most scenarios, ETL tools connect to the relevant databases through connectors or ODBC or JDBC drivers to extract data from the EDW.

- Example: The sales data EDW of a particular country can be used as a source for a data lake that is built for customer 360 analysis.
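
As a rough sketch of the extraction step, the example below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for an EDW. A real setup would connect through the warehouse's own ODBC or JDBC driver or connector; the `sales` table, its columns, and the `extract_sales` helper are assumptions made for illustration.

```python
import sqlite3

# Stand-in for an EDW: an in-memory SQLite database. A real setup would
# connect through the warehouse's ODBC/JDBC driver or vendor connector.
edw = sqlite3.connect(":memory:")
edw.execute("CREATE TABLE sales (country TEXT, customer_id INTEGER, amount REAL)")
edw.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("IN", 1, 120.0), ("IN", 2, 80.0), ("US", 3, 200.0), ("IN", 1, 30.0)],
)


def extract_sales(conn, country):
    """Extract one country's sales rows as dictionaries for the data lake."""
    cursor = conn.execute(
        "SELECT customer_id, amount FROM sales WHERE country = ? ORDER BY rowid",
        (country,),
    )
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor]


india_sales = extract_sales(edw, "IN")
print(india_sales)
```

The query is parameterized so the country filter is passed as data rather than concatenated into the SQL string.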

Multiple Documents

- These are flat files that are relevant to the business use case of the data lake.

- Several business transactions and other relevant data are stored in flat files in the organization.

- Data lakes prefer certain file formats.

- Example: .csv and .txt are the most widely used flat file formats.

- Also, several semi-structured formats such as XML, JSON, and Avro are used in data lake projects.

SaaS Applications

- These days organizations prefer SaaS-based applications over on-premises applications.

- These applications are cloud-based and managed by the provider.

- Example: Salesforce CRM, Microsoft Dynamics CRM, SAP Business ByDesign, SAP Cloud for Customer, Oracle CRM On Demand.

Device Logs

- Logs are captured from various devices for data lake processing.

- Example: System or server log data is useful for cluster performance analytics.
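
To make the log example concrete, here is a small sketch that counts error entries per host. The "timestamp host level message" line layout and the sample lines are made up for illustration; real device and server logs vary widely in format.

```python
from collections import Counter

# Hypothetical log lines in a made-up "timestamp host level message" layout;
# real device and server logs vary widely in format.
RAW_LOGS = """\
2023-03-23T10:00:01 node-1 INFO job started
2023-03-23T10:00:05 node-2 ERROR disk latency high
2023-03-23T10:00:09 node-1 ERROR task failed
2023-03-23T10:00:12 node-2 INFO job finished
"""


def parse_line(line):
    """Split one log line into its four fields."""
    timestamp, host, level, message = line.split(" ", 3)
    return {"timestamp": timestamp, "host": host, "level": level, "message": message}


def errors_per_host(raw):
    """Count ERROR entries per host as a simple cluster health signal."""
    entries = (parse_line(line) for line in raw.splitlines() if line.strip())
    return Counter(e["host"] for e in entries if e["level"] == "ERROR")


error_counts = errors_per_host(RAW_LOGS)
print(dict(error_counts))
```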

IoT Sensors

- Several data streams are captured from IoT sensors and are generally processed in real time through the data lake setup.

- Example: An aircraft engine sends sensor data to a server, and data lake components like Apache Kafka capture it and route it in real time.
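
The routing idea in this example can be sketched without a message broker. The code below is a plain-Python stand-in, not the Apache Kafka API: topic names, the sample readings, and the temperature threshold are all hypothetical, and an in-memory dictionary plays the role of the topics.

```python
from collections import defaultdict

# Hypothetical sensor readings; in the aircraft example these would arrive
# continuously from engine sensors.
READINGS = [
    {"sensor": "engine-1", "metric": "temperature", "value": 640.0},
    {"sensor": "engine-1", "metric": "vibration", "value": 0.8},
    {"sensor": "engine-2", "metric": "temperature", "value": 910.0},
]

TEMPERATURE_LIMIT = 900.0  # assumed alert threshold, for illustration only


def route(reading):
    """Pick a topic name for a reading as it arrives."""
    if reading["metric"] == "temperature" and reading["value"] > TEMPERATURE_LIMIT:
        return "alerts"
    return "telemetry." + reading["metric"]


def process_stream(readings):
    """Route each reading one at a time, as a streaming consumer would."""
    topics = defaultdict(list)
    for reading in readings:
        topics[route(reading)].append(reading)
    return dict(topics)


topics = process_stream(iter(READINGS))
for name, items in topics.items():
    print(name, len(items))
```

Because `process_stream` only iterates over its input, it works the same way on a finite list and on a generator that yields readings as they arrive.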

2. Data Processing Layer

Given below are the characteristics of the data processing layer of a data lake architecture:

- The data processing layer of a data lake comprises the data store, the metadata store, and replication to support high availability (HA) of data.

- Indexes are applied to the data to optimize processing.

- Best practices include using a cloud-based cluster for the data processing layer.

- The data processing layer is efficiently designed to support the security, scalability, and resilience of the data.

- Also, proper business rules and configurations are maintained through the administration.

- There are several tools and cloud providers that support this data processing layer.

- Example: Apache Spark, Azure Databricks, Data lake solutions from AWS.
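
How the data store, metadata store, replication, and index fit together can be shown with a toy in-memory model. This is only a sketch under simplifying assumptions: the `MiniLake` class and its methods are hypothetical, dictionaries stand in for storage nodes, and it does not reflect how Apache Spark, Azure Databricks, or AWS services are implemented.

```python
import json


class MiniLake:
    """Toy processing-layer store: replicated data, metadata catalog, index."""

    def __init__(self, replicas=2):
        # Each replica is a separate dict standing in for a storage node.
        self.replicas = [{} for _ in range(replicas)]
        self.metadata = {}   # object key -> descriptive metadata
        self.index = {}      # source name -> list of object keys

    def put(self, key, record, source):
        payload = json.dumps(record)
        for replica in self.replicas:      # write to every replica for HA
            replica[key] = payload
        self.metadata[key] = {
            "source": source,
            "size_bytes": len(payload.encode("utf-8")),
        }
        self.index.setdefault(source, []).append(key)

    def get(self, key):
        # Read from the first replica that still holds the object.
        for replica in self.replicas:
            if key in replica:
                return json.loads(replica[key])
        raise KeyError(key)

    def keys_for_source(self, source):
        # The index avoids scanning every stored object.
        return list(self.index.get(source, []))


lake = MiniLake()
lake.put("orders/1001", {"order_id": 1001, "amount": 250.0}, source="erp")
lake.put("logs/1", {"host": "node-1", "level": "ERROR"}, source="device_logs")

lake.replicas[0].clear()            # simulate losing one storage node
print(lake.get("orders/1001"))      # still served from the second replica
print(lake.keys_for_source("erp"))
```

Clearing the first replica simulates a node failure; the read still succeeds because every write went to both replicas.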

3. Targets for the Data Lake

After the processing layer, the data lake provides the processed data to the target systems or applications. Several systems consume data from the data lake through an API layer or through connectors.

The following systems use the data lake:

EDW

After consolidating the data from various sources, a new EDW can be created based on the business use case.

Analytics Dashboards

- Custom analytics applications are built on the data lake data.

- APIs act as the primary channels from the data lake processing layer to custom applications.

Data Visualization Tools

- Several well-known enterprise BI tools like Tableau, MS Power BI, and SAP Lumira consume the data lake data to create advanced analytics graphs and charts.

Machine Learning Projects

- Machine learning projects use the raw data from the data lake to build optimized ML models that add value to business scenarios.

- ML tools such as R and Python accept data in a structured format that is created through the data lake processing layer.
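
The step from raw lake data to a structured format for ML can be sketched as follows. The raw events, the two feature columns, and the default for missing amounts are assumptions chosen for illustration; a real project would pick features and imputation rules based on the use case.

```python
# Hypothetical raw events as they might sit in the lake, with missing and
# string-typed values that a model cannot consume directly.
RAW_EVENTS = [
    {"customer": "c1", "amount": "120.5", "channel": "web"},
    {"customer": "c2", "amount": None, "channel": "store"},
    {"customer": "c3", "amount": "80", "channel": "web"},
]

FEATURE_COLUMNS = ["amount", "is_web"]


def to_feature_row(event, default_amount=0.0):
    """Turn one raw event into a fixed-order list of numeric features."""
    amount = float(event["amount"]) if event["amount"] is not None else default_amount
    is_web = 1.0 if event["channel"] == "web" else 0.0
    return [amount, is_web]


feature_matrix = [to_feature_row(e) for e in RAW_EVENTS]
print(FEATURE_COLUMNS)
for row in feature_matrix:
    print(row)
```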

Purpose of a Data Lake in Business

- A data lake works as an enabler for businesses for data-driven decision-making or insights.

- It is very useful for reducing the time to market of analytics solutions.

- It supports IT-driven business processes.

- A cloud-based data lake implementation helps the business make cost-effective decisions.

In a data lake implementation, business decisions on use case selection are more crucial than, and should come before, technical decisions about tools and technologies. Data engineers, DevOps engineers, data analysts, and data scientists team up to create a successful data lake implementation for the business.

Conclusion

The data lake is a comparatively new concept that is evolving with the popularity of cloud, data science, and AI applications. It has gained strong interest in the industry because of its flexible architecture and the range of applications and data types it supports, which help the business consolidate a holistic view of its data patterns.
