Updated April 12, 2023

Introduction to PySpark foreach

This article explains PySpark foreach. foreach is an action in Spark, available on DataFrames and RDDs in PySpark, that iterates over every element of the dataset. (The typed Dataset API exists only in Scala and Java, not in PySpark.) It applies a function to each element purely for its side effects, such as printing, logging, or writing to an external system. foreach does not filter the data and does not return any elements: the call itself evaluates to None. A related method, foreachPartition, applies a function once per partition instead of once per element. We can define any Python function and pass it to foreach to have it applied to every element. In this topic, we are going to learn about PySpark foreach.
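To make the "no return value" point concrete, here is a minimal sketch, assuming PySpark is installed and can run in local mode. The names `announce` and `run_demo` and the app name are illustrative choices, not part of PySpark.

```python
def announce(row):
    """Side-effect function; whatever it returns is discarded by foreach."""
    print(f"processing {row}")
    return "ignored"


def run_demo():
    """Run foreach on a tiny DataFrame and return what foreach itself returns."""
    # Imported here so that `announce` can be tried without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[2]")
        .appName("foreach-return-demo")  # hypothetical app name
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame([("SAM",), ("JOHN",)], ["Name"])
        result = df.foreach(announce)  # action: the job runs immediately
        return result  # None, regardless of what announce returned
    finally:
        spark.stop()
```

Calling `run_demo()` should return `None` even though `announce` returns a string, which is why assigning the result of foreach to a variable is not useful.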

Syntax for PySpark foreach

The syntax for the PySpark foreach function is:

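def function(x): ...  # function definition

Dataframe.foreach(function)

Code:

def f(x): print(x)

b = a.foreach(f)

Because foreach returns nothing, b is None here; the assignment is kept only to match the examples that follow.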

Working of PySpark foreach

Let us see how the foreach function works in PySpark:

The foreach function in PySpark works on every element of a DataFrame or RDD. We supply a function, and Spark applies it to each element.

The function is executed once for each element. Because foreach is an action, calling it triggers the job immediately.

Each element is passed to the given function on the executors, where the data lives. Nothing is returned to the driver, so the function is useful only for its side effects.
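Since nothing comes back from foreach, one common way to get a small piece of information to the driver is a Spark accumulator. The sketch below assumes PySpark running in local mode; `make_counter` and `count_rows_with_foreach` are hypothetical helper names.

```python
def make_counter(acc):
    """Build a foreach function that adds 1 to `acc` for every row it sees."""
    def count_row(row):
        acc.add(1)  # accumulator updates are merged back on the driver
    return count_row


def count_rows_with_foreach():
    """Count the rows of a small DataFrame using foreach and an accumulator."""
    # Imported here so that `make_counter` can be tried without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[2]")
        .appName("foreach-accumulator-demo")  # hypothetical app name
        .getOrCreate()
    )
    try:
        names = [("SAM",), ("JOHN",), ("AND",), ("ROBIN",), ("ANAND",)]
        df = spark.createDataFrame(names, ["Name"])
        seen = spark.sparkContext.accumulator(0)
        df.foreach(make_counter(seen))  # runs on the executors
        return seen.value  # read on the driver after the action finishes
    finally:
        spark.stop()
```

With the five names above, `count_rows_with_foreach()` should return 5. An ordinary Python variable would not work for this, because each executor works on its own copy of the function and its captured variables.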

The work is split into stages and tasks, with each task processing one partition. Within a partition, elements are taken one at a time and the function is applied to each. No predicate is involved: every element is processed, and any value the function returns is discarded.

The number of times the function is called equals the number of elements in the data.

If the RDD or DataFrame is empty, the function is never called.

The same applies to both RDDs and DataFrames in PySpark.
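As a rough illustration of the DataFrame, RDD, and per-partition variants side by side, the sketch below assumes PySpark in local mode. `handle_partition` and `run_partition_demo` are hypothetical names; the returned count exists only to make the helper easy to try locally, since foreachPartition ignores it.

```python
def handle_partition(rows):
    """Receive an iterator over one partition's elements and process them as a batch."""
    batch = list(rows)
    print(f"partition with {len(batch)} element(s): {batch}")
    return len(batch)  # ignored by foreachPartition


def run_partition_demo():
    """Call foreach on a DataFrame and an RDD, then foreachPartition on the DataFrame."""
    # Imported here so that `handle_partition` can be tried without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[2]")
        .appName("foreach-variants-demo")  # hypothetical app name
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame([("SAM",), ("JOHN",), ("AND",)], ["Name"])
        df.foreach(print)                      # DataFrame: function gets a Row
        spark.sparkContext.parallelize([1, 2, 3, 4], 2).foreach(print)  # RDD element
        df.foreachPartition(handle_partition)  # function gets an iterator of Rows
    finally:
        spark.stop()
```

foreachPartition tends to be the better fit when the function needs expensive setup, such as opening a connection, because the setup then happens once per partition rather than once per element.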

Example of PySpark foreach

Let us see some examples of how the PySpark foreach function works:

Example #1

Create a DataFrame in PySpark:

Let’s first create a DataFrame.

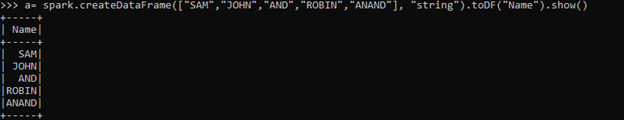

spark.createDataFrame is used to create the DataFrame:

a = spark.createDataFrame(["SAM","JOHN","AND","ROBIN","ANAND"], "string").toDF("Name")

a.show()

Now let’s create a simple function that prints the element it receives, and pass it to foreach.

def f(x): print(x)

This is a simple print function; foreach will call it once for every row of the DataFrame.

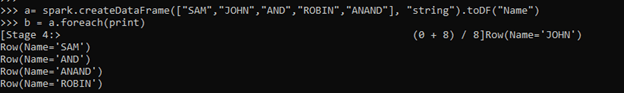


Let’s iterate over all the elements using foreach.

b = a.foreach(f)

This simple foreach statement iterates over the DataFrame and prints every row.

Stages are defined and the action is performed. The rows may be printed in any order, because the partitions are processed in parallel.

[Stage 3:> (0 + 8) / 8]

Row(Name='ROBIN')

Row(Name='ANAND')

Row(Name='AND')

Row(Name='JOHN')

Row(Name='SAM')
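The output above appears in the console because the example runs locally; on a cluster, print inside foreach writes to the executors' output, not the driver's. When the data is small and the goal is just to display it on the driver in a predictable order, one option is to sort and collect the rows instead. This is a sketch assuming PySpark in local mode; `format_row` and `print_on_driver` are hypothetical names.

```python
def format_row(row):
    """Turn a row-like object with a Name field into a display string."""
    return f"Name={row['Name']}"


def print_on_driver():
    """Sort the rows, bring them to the driver, and print them there."""
    # Imported here so that `format_row` can be tried without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[2]")
        .appName("collect-print-demo")  # hypothetical app name
        .getOrCreate()
    )
    try:
        a = spark.createDataFrame(
            ["SAM", "JOHN", "AND", "ROBIN", "ANAND"], "string"
        ).toDF("Name")
        # collect() moves every row to the driver, so use it only for small data.
        for row in a.orderBy("Name").collect():
            print(format_row(row))
    finally:
        spark.stop()
```

This trades the parallelism of foreach for deterministic, driver-side output, which is usually acceptable only for inspection and debugging.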

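The built-in print function can also be passed to foreach directly, without a wrapper function:

a = spark.createDataFrame(["SAM","JOHN","AND","ROBIN","ANAND"], "string").toDF("Name")

b = a.foreach(print)

Example #2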

Let us check the type of the elements inside a DataFrame. For this, we will use the same DataFrame as above and pass a function that prints the type of each element.

Create a DataFrame in PySpark:

Let’s first create a DataFrame with spark.createDataFrame, as before. Note that show() returns None, so it is called on its own line and not chained onto the assignment; otherwise a would be None and the later call to foreach would fail.

a = spark.createDataFrame(["SAM","JOHN","AND","ROBIN","ANAND"], "string").toDF("Name")

a.show()

Let’s create a function that prints the type of its argument. This is an ordinary Python function (not a Spark SQL UDF) that a user can write as required.

def f(x): print(type(x))

Let’s use foreach to print the type of each element in the DataFrame.

b = a.foreach(f)

Output:

This prints the type of every element it iterates over. Each element of a DataFrame is a Row, so every line of output is <class 'pyspark.sql.types.Row'>.

We can also write more complex functions and pass them to foreach in PySpark.
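As one possible example of a slightly richer function, the sketch below reads a field from each row and prints a short report line. It assumes PySpark in local mode; `name_length_report`, `log_name_length`, and `run_report` are hypothetical names.

```python
def name_length_report(row):
    """Build a report line from a row-like object exposing a Name field."""
    name = row["Name"]
    return f"{name} has {len(name)} characters"


def log_name_length(row):
    """Function passed to foreach: prints the report line as a side effect."""
    print(name_length_report(row))


def run_report():
    """Apply log_name_length to every row of the example DataFrame."""
    # Imported here so the helpers above can be tried without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[2]")
        .appName("foreach-report-demo")  # hypothetical app name
        .getOrCreate()
    )
    try:
        a = spark.createDataFrame(
            ["SAM", "JOHN", "AND", "ROBIN", "ANAND"], "string"
        ).toDF("Name")
        a.foreach(log_name_length)  # line order is not guaranteed
    finally:
        spark.stop()
```

Keeping the formatting logic in a separate pure function makes it easy to test without a Spark session, while the function given to foreach stays a thin wrapper around the side effect.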

From the above examples, we saw the use of the foreach function in PySpark.

Note:

- foreach is used to iterate over every element of a PySpark DataFrame or RDD.

- We can pass any Python function that operates on a single element.

- foreach is an action in Spark.

- It has no return value; the call evaluates to None.

Conclusion

From the above article, we saw the use of foreach in PySpark. Through several examples, we tried to understand how the foreach method works in PySpark and where it is used at the programming level.

We also saw its internal working and its usage with Spark DataFrames for various programming purposes. The syntax and examples helped us understand the function more precisely.
