Updated April 18, 2023

Introduction to PySpark OrderBy Descending

PySpark orderBy is a Data Frame method that sorts the rows of a PySpark Data Frame by one or more columns. Sorting is ascending by default. The desc function marks a column for descending order, so it lets us sort the elements of a PySpark Data Frame from the highest value to the lowest.

The orderBy clause returns the rows in sorted order and guarantees a total order of the output. It can sort on a single column or on several columns. The direction is chosen per column, with the asc function for ascending order or the desc function for descending order. In descending order, the row with the highest value in the column comes first, followed by the second highest, and so on down to the lowest.

Syntax:

The syntax for the PySpark orderBy descending function is:

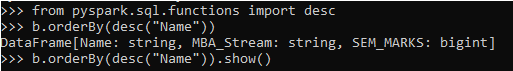

from pyspark.sql.functions import desc

b.orderBy(desc("col_Name")).show()ScreenShot:

- desc: The Descending Function to be Imported.

- orderBy: The Order By Function in PySpark.

- b: The Data Frame where the operation needs to be done.

Working of OrderBy in PySpark

orderBy is a sorting clause used to sort the rows of a Data Frame. Sorting means arranging the elements in a defined order, either ascending or descending, as chosen by the user. The default order is ascending (ASC). To sort in descending order, we import the desc function from pyspark.sql.functions and apply it to the column.

We sort by passing one or more columns of the Data Frame to orderBy. Each argument is a column name (or a column expression) and is used as a sort key. Internally, orderBy creates a Sort logical operator with the global flag set, which means the rows are ordered across the whole Data Frame rather than only within each partition.

This is how ORDER BY works in PySpark.

Examples

Let us see some examples of how the PySpark orderBy function works.

Example #1

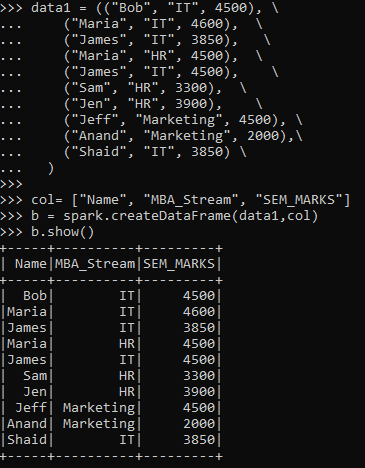

Let’s start by creating a PySpark Data Frame. A data frame of students with the concerned Dept. and overall semester marks are taken for consideration and a data frame is made upon that.

Code:

data1 = (("Bob", "IT", 4500), \

("Maria", "IT", 4600), \

("James", "IT", 3850), \

("Maria", "HR", 4500), \

("James", "IT", 4500), \

("Sam", "HR", 3300), \

("Jen", "HR", 3900), \

("Jeff", "Marketing", 4500), \

("Anand", "Marketing", 2000),\

("Shaid", "IT", 3850) \

)

col= ["Name", "MBA_Stream", "SEM_MARKS"]

b = spark.createDataFrame(data1,col)

b.show()Screenshot:

Example #2

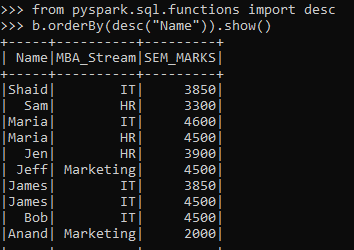

Let’s try doing the Order By operation using the descending function.

We will import the SQL Function Desc to use orderBy in Descending order.

from pyspark.sql.functions import desc

b.orderBy(desc("Name")).show()This will orderby Name in descending order.

Screenshot:

The same can be done with other columns also in the data Frame. We can order by the same using MBA_Stream Column and SEM_MARKS Column.

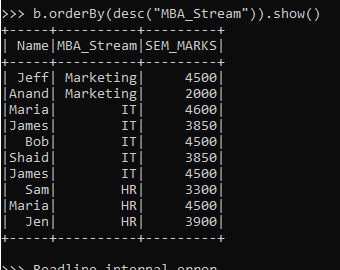

b.orderBy(desc("MBA_Stream")).show()This sorts according to MBA_Stream.

Screenshot:

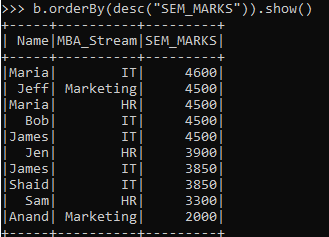

b.orderBy(desc("SEM_MARKS")).show()This will sort according to SEM_MARKS Column in the Data Frame.

Screenshot:

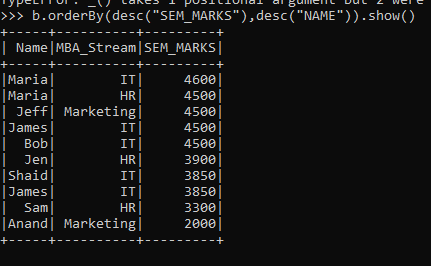

The same order can be used with Multiple Conditions also over the column.

b.orderBy(desc("SEM_MARKS"),desc("NAME")).show()It will sort according to the columns provided.

Screenshot:

The same sorting can also be done through Spark SQL by registering the Data Frame as a temporary table. The temporary table can then be queried with the spark.sql function, where the ORDER BY clause is available. The DESC keyword sorts the result in descending order.

From the above examples, we saw the use of the orderBy function in PySpark.

Conclusion

From the above article, we saw the use of orderBy in PySpark. Through various examples, we tried to understand how orderBy with desc works in PySpark and how it is used at the programming level. The working section explained the internals of the function, and the syntax section showed the exact parameters it takes.

We also saw the internal working of orderBy on a Spark Data Frame and its usage for various programming purposes. The syntax and examples helped us understand the function more precisely.
