Updated April 20, 2023

Introduction to TensorFlow Dataset

TensorFlow is a machine learning framework, and TensorFlow Datasets is a collection of different kinds of datasets that are ready to use with it. The main purpose of the TensorFlow dataset API is to help us build the input pipeline of a machine learning application.

Without the TensorFlow dataset API, building such a pipeline is a time-consuming and painful task. The API helps us build asynchronous pipelines that keep the GPU from starving for data. Normally, TensorFlow loads the data from the local disk, in either text or image format, applies transformations, creates batches, and sends them to the GPU.
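The load, transform, batch, and send steps described above can be sketched with the tf.data API. This is a minimal sketch, not the article's own code: the in-memory tensors stand in for data read from disk, the normalize function and its scaling constant are hypothetical, and tf.data.AUTOTUNE assumes TensorFlow 2.4 or later.

```python
import tensorflow as tf

# Stand-in for data loaded from disk: 8 "images" of 4 pixels each, with labels.
images = tf.reshape(tf.range(32, dtype=tf.float32), (8, 4))
labels = tf.constant([0, 1, 0, 1, 0, 1, 0, 1])


def normalize(image, label):
    # Transformation step: scale the pixel values into [0, 1].
    return image / 31.0, label


dataset = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .map(normalize, num_parallel_calls=tf.data.AUTOTUNE)
    .batch(4)
    # Prepare the next batch in the background while the current one is consumed.
    .prefetch(tf.data.AUTOTUNE)
)

# Each element is now a batch of 4 images with 4 pixels each.
batch_shapes = [x.shape.as_list() for x, _ in dataset]
print(batch_shapes)
```

The prefetch step is what makes the pipeline asynchronous: input preparation overlaps with the work done on the previous batch.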

What is TensorFlow Dataset?

- Deep learning is a subfield of machine learning: a set of algorithms inspired by the structure and function of the brain. TensorFlow is a machine learning framework that gives a straightforward way to implement these concepts.

- You can use the TensorFlow library for numerical computations, which in itself doesn’t seem all that novel, but these computations are done with data flow graphs. In these graphs, the nodes represent mathematical operations, while the edges represent the data, usually multidimensional data arrays or tensors, that is communicated between the nodes.
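To make the idea of nodes and edges concrete, the sketch below traces a small function into a graph and lists the operation type of each node. It assumes TensorFlow 2.x; the affine function and its input shapes are hypothetical names chosen only for illustration.

```python
import tensorflow as tf


@tf.function
def affine(x, w, b):
    # Two operation nodes: a matrix multiplication followed by an addition.
    return tf.matmul(x, w) + b


# Tracing the function builds the data flow graph without running it.
concrete = affine.get_concrete_function(
    tf.TensorSpec([None, 2], tf.float32),
    tf.TensorSpec([2, 1], tf.float32),
    tf.TensorSpec([1], tf.float32),
)

# Each graph operation is a node; the tensors passed between them are the edges.
op_types = [op.type for op in concrete.graph.get_operations()]
print(op_types)

# Running the function feeds real tensors along those edges.
result = affine(
    tf.constant([[1.0, 2.0]]),
    tf.constant([[3.0], [4.0]]),
    tf.constant([0.5]),
)
print(result.numpy())
```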

TensorFlow Dataset Example Model

Let’s see an example of the TensorFlow dataset:

Datasets are another way to create input pipelines for TensorFlow models. This API is considerably more performant than using feed_dict or the queue-based pipelines, and it is cleaner and simpler to use.

The Dataset API has the following high-level classes:

- Dataset: The base class. It contains the methods needed to create and transform datasets, and it also lets you initialize a dataset from data in memory.

- TextLineDataset: Reads lines from text files.

- TFRecordDataset: Reads records from TFRecord files.

- FixedLengthRecordDataset: Reads fixed-size records from binary files.

- Iterator: Provides access to the dataset elements one at a time.

For the example, we need to create a CSV file that stores the following columns: SepalLength, SepalWidth, SetosLength, SetosWidth, and FlowerType.
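As a rough illustration of how these classes fit together, the sketch below writes a tiny CSV file with the five-column layout described above, reads it line by line with TextLineDataset, and iterates over the result. The file name flowers.csv and the two data rows are made up for the example, and the code assumes TensorFlow 2.x, where a dataset can be iterated directly.

```python
import os
import tempfile

import tensorflow as tf

# Made-up sample content: a header line followed by two data rows.
csv_text = (
    "SepalLength,SepalWidth,SetosLength,SetosWidth,FlowerType\n"
    "5.1,3.5,1.4,0.2,0\n"
    "7.0,3.2,4.7,1.4,1\n"
)
path = os.path.join(tempfile.mkdtemp(), "flowers.csv")
with open(path, "w") as f:
    f.write(csv_text)

# TextLineDataset yields one string tensor per line; skip(1) drops the header.
lines = tf.data.TextLineDataset(path).skip(1)

# Iterating the dataset returns its elements one at a time.
rows = [line.numpy().decode("utf-8") for line in lines]
print(rows)
```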

Explanation:

- The values listed above are stored in the CSV file, and we read the data from that file. The first four columns are the input values for a single row, and FlowerType is the label, that is, the output value. The input values are floats and the output values are integers.

- We also need to label the data so that we can easily recognize the category.
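One way to turn each CSV line into float inputs and an integer label is tf.io.decode_csv, sketched below. This is an assumption about how the parsing could be done, not the article's own function: parse_line is a hypothetical helper, and the two lines are made-up sample rows standing in for the file contents.

```python
import tensorflow as tf

feature_names = ["SepalLength", "SepalWidth", "SetosLength", "SetosWidth"]

# Made-up sample rows standing in for lines read from the CSV file.
lines = tf.data.Dataset.from_tensor_slices(
    ["5.1,3.5,1.4,0.2,0", "7.0,3.2,4.7,1.4,1"]
)


def parse_line(line):
    # Four float defaults for the inputs, one int default for the label.
    fields = tf.io.decode_csv(
        line, record_defaults=[[0.0], [0.0], [0.0], [0.0], [0]]
    )
    features = dict(zip(feature_names, fields[:-1]))
    label = fields[-1]
    return features, label


parsed = lines.map(parse_line)
first_features, first_label = next(iter(parsed))
print(first_features["SepalLength"].numpy(), first_label.numpy())
```

The types of the record_defaults entries decide the output types, so the inputs come back as floats and the label as an integer.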

Let’s see how we can represent the dataset as follows:

Code:

types_name = ['SepalLength', 'SepalWidth', 'SetosLength', 'SetosWidth']

- After describing the training dataset, we need to read the data, so we create a function as follows:

Code:

def in_value():

…………………

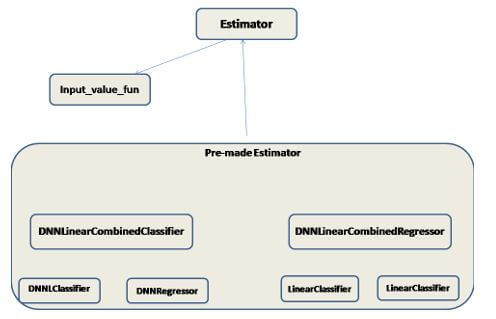

    return({'SepalLength':[values], '……….'})

Class Diagram for Estimators

Let’s see the class diagram for the Estimators as follows:

Estimators are a high-level API that reduces much of the boilerplate code you previously needed to write when training a TensorFlow model. Estimators are also very flexible, allowing you to override the default behavior if you have specific requirements for your model.

There are two ways to build a model with Estimators:

- Pre-made Estimator: A predefined Estimator class that is used for a specific type of model.

- Base Class: It provides complete control over the model.

Representing our Dataset

Let’s see how we can represent the dataset.

A dataset can be represented with numerical data, categorical data, or ordinal data; we can use whichever fits our requirement.
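The three kinds of data are typically encoded differently before they reach a model. The sketch below shows one common convention for each, assuming TensorFlow 2.x; the column values, the colors vocabulary, and the size_rank mapping are all hypothetical.

```python
import tensorflow as tf

# Numerical data: used as-is.
heights_cm = tf.constant([170.0, 182.5])

# Categorical data (no inherent order): one-hot encode over a fixed vocabulary.
colors = ["red", "green", "blue"]
color_ids = [colors.index(c) for c in ["green", "red"]]
color_one_hot = tf.one_hot(color_ids, depth=len(colors))

# Ordinal data (ordered categories): map each level to its rank.
size_rank = {"small": 0, "medium": 1, "large": 2}
size_ids = tf.constant([size_rank[s] for s in ["large", "small"]])

print(color_one_hot.numpy())
print(size_ids.numpy())
```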

Code:

import pandas as pd_obj

data_info = pd_obj.read_csv("emp.csv")

row1 = data_info.sample(n = 1)

row1

row2 = data_info.sample(n = 1)

row2

Explanation:

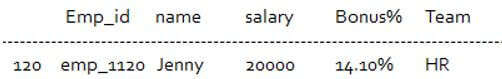

- In the above example, we fetch the dataset. First we import pandas, then we read the data from the emp.csv file, and finally we draw one random row from it with sample(n = 1).

- The final output is illustrated in the screenshot.

Output:

Similarly, row2 holds another randomly sampled row, and we can display it in the same way.

Importing Data TensorFlow Dataset

Let’s see how we can import the Data TensorFlow dataset as follows:

Code:

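import tensorflow as tf_obj

tf_obj.compat.v1.disable_eager_execution()  # sessions need graph mode on TensorFlow 2.x

A = tf_obj.constant([4,5,6,7])

B = tf_obj.constant([7,4,2,3])

res = tf_obj.multiply(A, B)  # element-wise product, not evaluated yet

se = tf_obj.compat.v1.Session()

print(se.run(res))  # the session runs the graph and returns the values

se.close()

Explanation: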

- In the above example, we first import TensorFlow and then define two constant tensors, A and B. Next, we multiply the two element-wise and store the result in the res variable. We also create a session to run the operation, and after the operation completes, we close the session.

- The final output is shown below.

Output:

[28 20 12 21]

Freebies TensorFlow Dataset

- TensorFlow Datasets is an open-source collection of datasets that can be used directly with machine learning frameworks such as TensorFlow and JAX. Every dataset is exposed as a tf.data.Dataset, so it can be configured with TensorFlow as required.

- It also helps us build high-performance input pipelines.

Conclusion

In this article, we covered the essential idea of the TensorFlow dataset and saw how a dataset is represented. We also saw how and when to use the TensorFlow dataset.
