Updated March 10, 2023

What is a Dataset in Machine Learning?

A dataset in machine learning is a collection of data points that a computer can treat as a single unit for statistical analysis and prediction. This means the collected data should be consistent in format and readable by a machine, which does not interpret data the way people do.
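In practice, a tabular dataset is often held in a pandas DataFrame, where each row is one example and each column is one feature with a single, consistent type. The column names and values below are hypothetical and only illustrate this layout.

```python
import pandas as pd

# Hypothetical records: each row is one example, each column one feature
dataset = pd.DataFrame({
    "age": [25, 32, 47, 51],
    "income": [38000.0, 52000.0, 61000.0, 58000.0],
    "purchased": [0, 1, 1, 0],  # target the model should learn to predict
})

# A homogeneous dataset: every column holds values of a single dtype
print(dataset.dtypes)

# Number of examples (rows) and columns
print(dataset.shape)  # (4, 3)
```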

How to create a dataset in machine learning?

Machine learning projects need a lot of data, because ML/AI models cannot be trained without it. Gathering and preparing the dataset is one of the most important parts of building an ML/AI project: if the dataset is not correctly prepared and pre-processed, the models built on it will not perform well. Developers rely on the dataset throughout the development of an ML project.

Preparing a dataset for machine learning in Python

We use the Python programming language to build a clean, well-structured dataset. Preparing a dataset involves the following steps:

• Get the dataset.

• Import the libraries and load the dataset.

• Handle missing data.

• Encode categorical data.

• Split the dataset into training and test sets.

• Apply feature scaling if the columns are not on the same scale.
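The steps above can be sketched end to end with pandas and NumPy. This is a minimal illustration under stated assumptions: the table and its column names are hypothetical, missing ages are filled with the column mean, the split is 75/25 with a fixed seed, and standardization (subtracting the mean and dividing by the standard deviation) stands in for feature scaling. Libraries such as scikit-learn offer ready-made tools for each of these steps.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with a missing value and a categorical column
raw = pd.DataFrame({
    "country": ["France", "Spain", "Germany", "Spain",
                "France", "Germany", "Spain", "France"],
    "age": [44.0, 27.0, 30.0, 38.0, np.nan, 35.0, 48.0, 50.0],
    "salary": [72000.0, 48000.0, 54000.0, 61000.0,
               58000.0, 52000.0, 79000.0, 83000.0],
    "purchased": [0, 1, 0, 0, 1, 1, 0, 1],
})

# 1. Handle missing data: replace NaN in "age" with the column mean
raw["age"] = raw["age"].fillna(raw["age"].mean())

# 2. Encode categorical data: one-hot encode "country" into 0/1 columns
encoded = pd.get_dummies(raw, columns=["country"], dtype=int)

# Separate the features (X) from the target (y)
X = encoded.drop(columns="purchased")
y = encoded["purchased"]

# 3. Split into training and test sets (75% / 25%) using a seeded shuffle
rng = np.random.default_rng(0)
shuffled = rng.permutation(len(X))
n_train = int(0.75 * len(X))
train_idx, test_idx = shuffled[:n_train], shuffled[n_train:]
X_train, X_test = X.iloc[train_idx].copy(), X.iloc[test_idx].copy()
y_train, y_test = y.iloc[train_idx], y.iloc[test_idx]

# 4. Feature scaling: standardize numeric columns with training-set statistics only
numeric = ["age", "salary"]
mean = X_train[numeric].mean()
std = X_train[numeric].std()
X_train[numeric] = (X_train[numeric] - mean) / std
X_test[numeric] = (X_test[numeric] - mean) / std

print(X_train.shape, X_test.shape)  # (6, 5) (2, 5)
```

The scaling statistics come from the training set only, so no information from the test set leaks into training.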

Getting a dataset

The first step is to create a project directory and move into it:

```shell
mkdir predata
cd predata
```

Next, create a Python file and import the required libraries:

```python
import numpy as np               # numerical arrays
import matplotlib.pyplot as plt  # plotting
import pandas as pd              # tabular data (DataFrames)
```
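With the libraries imported, the next step is usually to load the dataset and separate the input features from the target column. The sketch below assumes the data is a CSV file whose last column is the target; to stay self-contained it reads a small hypothetical CSV from an in-memory string via `io.StringIO`, whereas in practice you would pass a file path such as `pd.read_csv("data.csv")` (the file name is hypothetical).

```python
import io

import pandas as pd

# Hypothetical CSV contents; replace the StringIO with a real file path in practice
csv_text = """country,age,salary,purchased
France,44,72000,No
Spain,27,48000,Yes
Germany,30,54000,No
Spain,38,61000,No
"""

dataset = pd.read_csv(io.StringIO(csv_text))

# Independent variables (features): every column except the last
X = dataset.iloc[:, :-1]

# Dependent variable (target): the last column
y = dataset.iloc[:, -1]

print(X.columns.tolist())  # ['country', 'age', 'salary']
print(y.tolist())          # ['No', 'Yes', 'No', 'No']
```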

Example

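```python
import numpy as np
import pandas as pd

# Town names used as the row labels (index) of the dataset
towns = ['Oslo', 'Bergen', 'Stavanger',
         'Drammen', 'Alesund', 'Trondheim',
         'Manland', 'Riser', 'Sandnes',
         'Skien', 'Tromso', 'Karlsruhe']

n = len(towns)

# Seeded generator so the random values are reproducible
rng = np.random.default_rng(42)

# Synthetic weather features drawn from normal distributions: normal(mean, std_dev, size)
dtt = {'Temp': rng.normal(22, 2, n),
       'Humi': rng.normal(68, 1.5, n),
       'Wind': rng.normal(15, 4, n)}

# One row per town, one column per feature
df = pd.DataFrame(data=dtt, index=towns)

# In a Jupyter notebook, writing `df` on its own line also displays the table
print(df)
```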
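Once the DataFrame exists, it is common to save it so that later steps, or other people, can reuse exactly the same dataset. A minimal sketch, assuming CSV as the storage format; the file name `weather.csv` is hypothetical, and a temporary directory is used only so the snippet cleans up after itself. It rebuilds a smaller version of the weather data so it runs on its own.

```python
import os
import tempfile

import numpy as np
import pandas as pd

towns = ["Oslo", "Bergen", "Stavanger"]
rng = np.random.default_rng(42)
df = pd.DataFrame(
    {"Temp": rng.normal(22, 2, len(towns)),
     "Humi": rng.normal(68, 1.5, len(towns)),
     "Wind": rng.normal(15, 4, len(towns))},
    index=towns,
)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "weather.csv")  # hypothetical file name
    # Write the dataset; the index (town names) is stored as the first column
    df.to_csv(path)
    # Read it back, restoring the first column as the index
    loaded = pd.read_csv(path, index_col=0)

print(loaded.index.tolist())  # ['Oslo', 'Bergen', 'Stavanger']
```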

Features of a dataset in machine learning

Data has always been the foundation of business. Factors such as what customers purchased, how attractive the products were, and seasonal patterns in customer traffic have long shaped company decisions. With machine learning, however, this information must be organised into datasets. If an application has enough data, the resulting dataset lets us evaluate trends, uncover hidden patterns, and make informed decisions.
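As an illustration of organising raw business records into a dataset, the sketch below aggregates a hypothetical purchase log into one row per customer. The column names and the chosen features (purchase count, total spend, and number of distinct months with a purchase as a rough seasonality signal) are assumptions for this example.

```python
import pandas as pd

# Hypothetical raw purchase log: one row per transaction
transactions = pd.DataFrame({
    "customer": ["c1", "c1", "c2", "c3", "c3", "c3"],
    "amount": [20.0, 35.0, 12.5, 8.0, 8.0, 40.0],
    "date": pd.to_datetime(["2023-01-05", "2023-03-12", "2023-01-20",
                            "2023-02-02", "2023-02-15", "2023-06-30"]),
})

# Calendar month of each purchase
transactions["month"] = transactions["date"].dt.month

# One row per customer, one column per derived feature
features = transactions.groupby("customer").agg(
    n_purchases=("amount", "count"),
    total_spend=("amount", "sum"),
    active_months=("month", "nunique"),
)

print(features)
```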

Two factors determine the quality of a dataset: relevance (the data matches the problem being solved) and coverage (the data spans the range of cases the model will encounter).

When collecting data for a machine learning project, quality is the most important factor to consider. Although there are techniques to clean data and make it uniform and manageable before annotation and training, the best option is to collect data that already contains every required feature.
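One way to check that collected data adheres to a list of required features is to compare its columns against that list and measure how much of each required column is missing. The helper `check_required_features`, its 5% threshold, and the sample table are hypothetical; this is a sketch, not a standard API.

```python
import pandas as pd


def check_required_features(df, required, max_missing=0.05):
    """Hypothetical helper: report required columns that are absent or too sparse.

    A column counts as too sparse when its fraction of missing values
    exceeds max_missing.
    """
    absent = [col for col in required if col not in df.columns]
    present = [col for col in required if col in df.columns]
    missing_share = df[present].isna().mean()
    too_sparse = missing_share[missing_share > max_missing].index.tolist()
    return {"absent": absent, "too_sparse": too_sparse}


# Hypothetical collected data with one missing product value
collected = pd.DataFrame({
    "customer_id": [1, 2, 3, 4],
    "product": ["A", "B", None, "A"],
    "month": [1, 2, 2, 3],
})

report = check_required_features(collected, ["customer_id", "product", "month", "price"])
print(report)  # {'absent': ['price'], 'too_sparse': ['product']}
```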

Three important steps

There are three crucial steps in the process of building a dataset:

- Data gathering

- Data cleaning

- Data labelling

Data gathering

Finding datasets that can be used to train machine learning models is part of the data gathering process.

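There are two main approaches:

• Data collection: gathering new data, for example from logs, sensors, surveys, or public repositories.

• Data enrichment: improving existing data by adding attributes from other sources.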
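Data enrichment often means joining newly collected records with extra attributes from another source. A minimal sketch with pandas `merge`, assuming both tables share a key column; the tables, column names, and values are hypothetical.

```python
import pandas as pd

# Collected data: hypothetical sales records
sales = pd.DataFrame({
    "store_id": [1, 2, 3, 1],
    "units_sold": [120, 80, 95, 130],
})

# Enrichment source: hypothetical store attributes from another system
stores = pd.DataFrame({
    "store_id": [1, 2, 3],
    "city": ["Oslo", "Bergen", "Stavanger"],
    "floor_area_m2": [450, 300, 380],
})

# Left join keeps every sales record and adds the matching store attributes;
# validate="many_to_one" raises an error if a store_id repeats in `stores`
enriched = sales.merge(stores, on="store_id", how="left", validate="many_to_one")

print(enriched.columns.tolist())
# ['store_id', 'units_sold', 'city', 'floor_area_m2']
```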

Data Cleaning

After the data has been collected, it must be cleaned and prepared for the ML model. This involves resolving issues such as outliers, inconsistencies, and missing values.
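The cleaning issues named above can each be handled in a line or two of pandas. This sketch uses a hypothetical table with an inconsistent spelling, a duplicated row, a missing value, and an outlier. Filling with the median and the 1.5 × IQR outlier rule are common conventions chosen here for illustration, not the only options.

```python
import numpy as np
import pandas as pd

# Hypothetical readings with typical quality problems
df = pd.DataFrame({
    "city": ["Oslo", "oslo ", "Bergen", "Bergen", "Stavanger",
             "Tromso", "Sandnes", "Oslo"],
    "temp": [21.5, 22.0, 19.0, 19.0, np.nan, 20.5, 23.0, 95.0],
})

# Inconsistency: trim whitespace and normalize capitalization ("oslo " -> "Oslo")
df["city"] = df["city"].str.strip().str.title()

# Duplicates: drop rows that exactly repeat an earlier row
df = df.drop_duplicates()

# Missing values: fill NaN temperatures with the column median
df = df.assign(temp=df["temp"].fillna(df["temp"].median()))

# Outliers: keep rows within 1.5 * IQR below the first or above the third quartile
q1, q3 = df["temp"].quantile([0.25, 0.75])
iqr = q3 - q1
df = df[df["temp"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)]

print(df)
```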

Data Labelling

Data labelling is a crucial part of data preparation: it gives raw digital data meaning by attaching tags to it. For classification, each input example is labelled with its output, the class it belongs to.
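For a classification dataset, labelling means pairing each input with its output class. A minimal sketch, assuming the labels have already been assigned (for example by human annotators); the messages and the spam/ham classes are hypothetical. The class names are then mapped to integer ids, since most models expect numeric targets.

```python
import pandas as pd

# Inputs paired with labels assigned by annotators (hypothetical examples)
labelled = pd.DataFrame({
    "text": ["Win a free prize now", "Meeting moved to 3 pm",
             "Claim your reward today", "See you at lunch"],
    "label": ["spam", "ham", "spam", "ham"],
})

# Map each class name to an integer id, in sorted order so the mapping is stable
classes = sorted(labelled["label"].unique())
class_to_id = {name: i for i, name in enumerate(classes)}
labelled["label_id"] = labelled["label"].map(class_to_id)

print(class_to_id)                    # {'ham': 0, 'spam': 1}
print(labelled["label_id"].tolist())  # [1, 0, 1, 0]
```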

A high-quality training dataset improves the accuracy and speed of decision-making while putting less strain on the organization’s resources. The more reliable the training data, the better the resulting classifier.

List of datasets in machine learning

Machine learning relies heavily on datasets. Advances in learning algorithms can drive significant progress in the field, but those algorithms still need suitable data to train on. Because each use case poses its own challenges, obtaining datasets that are relevant to the use case is critical. A dataset is characterised by its flexibility and its size, where flexibility is the number of tasks it can support.

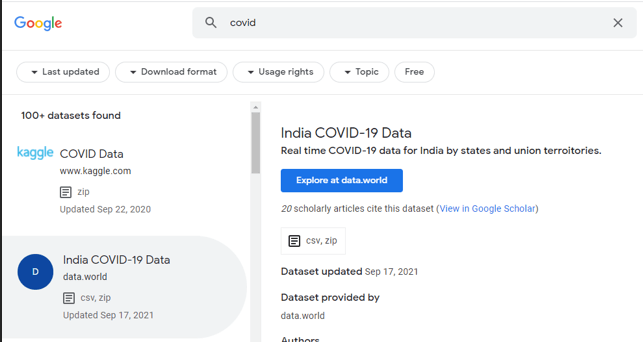

1. Google Dataset Search

Google, the search engine giant, has helped ML practitioners by doing what it does best: helping us find things, in this case datasets. Dataset Search retrieves datasets matching your keywords from a variety of sources, including government websites, Kaggle, and other open-source repositories.

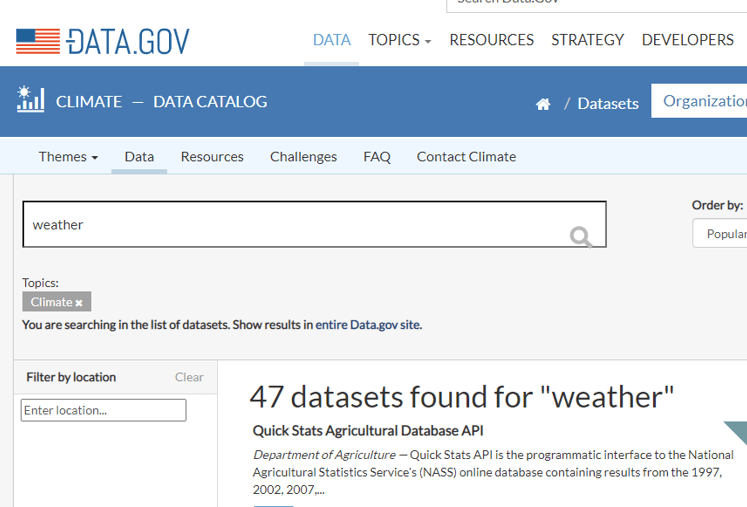

2. Government datasets

Government data can be obtained from many sources. Many countries publish data collected by their ministries, both to improve public awareness of government activity and to encourage new uses of the data. Some government dataset sources:

• Indian government datasets

• United States government datasets

• Northern Ireland public sector datasets

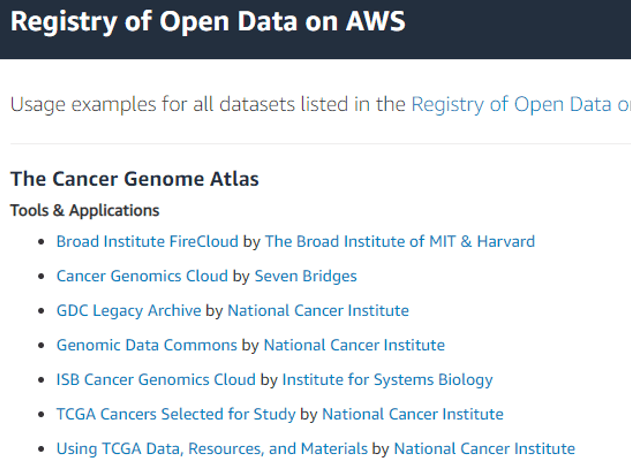

3. Amazon datasets

Amazon has made some of the datasets stored on its servers publicly available. Because these datasets are already hosted on AWS, calibrating and tuning models on AWS resources, rather than downloading the data first, can speed up data loading by tens of times. The registry organises its datasets by application area, such as satellite imagery and natural resources. Anyone can use AWS resources to analyse this shared data and build services on top of it. Because the data is already in the cloud, users can spend more time on analysis and less on data collection.

Top datasets for training machine learning algorithms that are easily accessible online

• ImageNet

• COCO dataset

• Iris flower dataset

• Breast Cancer Wisconsin (Diagnostic) dataset

• Twitter sentiment analysis dataset

• MNIST dataset (handwritten digits, built from data collected by the US National Institute of Standards and Technology)

• Fashion-MNIST dataset

• Amazon review dataset

• Spam email dataset

• SMS spam dataset

• CIFAR-10 dataset

• YouTube dataset

Conclusion

A good dataset should meet specific quality and quantity requirements. For smooth and fast training, make sure the dataset is relevant and well balanced. When possible, use live data, and consult domain experts about how much data to collect and which sources it should come from. The computations in machine learning are sophisticated, and their results depend on how and under what circumstances the data was obtained. Based on the condition of the data, you then pre-process it and split it into training and test sets.
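Checking balance and splitting into training and test sets can be sketched with pandas alone. Sampling within each class (a stratified split) keeps class proportions the same in both sets, which matters when the data is imbalanced. The toy data, the 80/20 ratio, and the seed are assumptions for illustration.

```python
import pandas as pd

# Hypothetical imbalanced dataset: 10 examples of class 0, 5 of class 1
data = pd.DataFrame({
    "feature": range(15),
    "label": [0] * 10 + [1] * 5,
})

# Check balance: fraction of examples in each class
print(data["label"].value_counts(normalize=True))

# Stratified split: draw 80% of each class for training (seeded for reproducibility)
train = data.groupby("label").sample(frac=0.8, random_state=0)

# Every row not sampled for training becomes the test set
test = data.drop(train.index)

print(len(train), len(test))  # 12 3
```

Both sets keep the original 2:1 ratio of class 0 to class 1, which a plain random split on a small dataset does not guarantee.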


This is a guide to Dataset in Machine Learning. Here we discussed what a dataset in machine learning is and how to create one.