Updated March 17, 2023

Introduction to Scikit Learn Linear Regression

Scikit learn is a Python library that implements a wide range of machine-learning algorithms, including linear regression. Linear regression is a supervised learning algorithm used for regression tasks: it predicts a continuous value from a dataset. In other words, it determines the relationship between the value being forecast and the input variables.

Key Takeaways

- Linear regression is easy to estimate, and its procedure is simple to follow.

- It is less complex when we know the relationship between the dependent and independent variables.

- Regularized variants reduce the effect of noisy data, which can improve the model's performance.

What is Scikit Learn Linear Regression?

Scikit learn groups its machine-learning functionality into Classification, Regression, Clustering, Dimensionality reduction, Model selection, and Preprocessing. Here we look at regression, which is a form of Supervised learning.

In Supervised learning, again, we have different types of models, so let’s see Linear Models as follows:

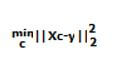

In linear regression, we fit a linear model whose coefficients minimize the residual sum of squares between the observed targets in the dataset and the targets predicted by the model. Mathematically, the problem has the form below.

$$\min_{C} \lVert XC - Y \rVert_2^2$$

Where,

- C – Is the vector of coefficients.

- X and Y are the input data and the observed target values.

The coefficient estimates for Ordinary Least Squares rely on the independence of the features. When features are correlated, and the columns of the design matrix have an approximately linear dependence, the design matrix becomes close to singular. As a result, the least-squares estimate becomes highly sensitive to random errors in the observed target, producing a large variance. This situation of multicollinearity can arise, for example, when data is collected without an experimental design.
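The sensitivity described above can be sketched with synthetic data. The example below is only an illustration under assumed values: the sample size, the noise levels, and the helper name `coefficient_spread` are not from the article. It compares two independent features with two nearly identical ones and measures how much the fitted coefficients move when only the noise in the target changes.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
n_samples = 200  # assumed sample size, chosen for illustration

x1 = rng.normal(size=n_samples)
x2_independent = rng.normal(size=n_samples)
# Second feature is almost a copy of the first: a near-linear dependence.
x2_collinear = x1 + 1e-4 * rng.normal(size=n_samples)

X_independent = np.column_stack([x1, x2_independent])
X_collinear = np.column_stack([x1, x2_collinear])

# A large condition number means the design matrix is close to singular.
cond_independent = np.linalg.cond(X_independent)
cond_collinear = np.linalg.cond(X_collinear)


def coefficient_spread(X, n_repeats=20):
    """Largest standard deviation of the fitted coefficients over noisy refits."""
    coefs = []
    for _ in range(n_repeats):
        # Same underlying relationship each time; only the random error changes.
        y = X[:, 0] + X[:, 1] + rng.normal(scale=0.1, size=len(X))
        coefs.append(LinearRegression().fit(X, y).coef_)
    return np.std(coefs, axis=0).max()


spread_independent = coefficient_spread(X_independent)
spread_collinear = coefficient_spread(X_collinear)

print(f"condition number: {cond_independent:.1f} vs {cond_collinear:.1f}")
print(f"coefficient spread: {spread_independent:.4f} vs {spread_collinear:.4f}")
```

With the nearly duplicated feature, both the condition number and the spread of the coefficients should come out far larger, which is the large variance the paragraph refers to.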

How to Run Scikit Learn Linear Regression?

Let’s see how we can run the Scikit learn linear regression as below:

Before running anything, recall the prerequisite: linear regression is a machine learning algorithm based on supervised learning, and it determines the relationship between the input variables and the expected result. So, first, we need to import all the required libraries as mentioned below.

Code:

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

In the second step, we need to read the dataset from the CSV file; if we don't have the dataset, we need to download it first.

Code:

dataset = pd.read_csv('specified file name')

Here we can inspect the loaded data, and if we want to chart it, we can draw a scatter plot. In the next step, we need to clean the data to eliminate null values, that is, missing inputs. Then, if we don't already have a separate training dataset, we split the data and train the model on the training part. Finally, we can display the predicted values in a different color from the observed values to explore the result.
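The steps above can be sketched end to end as follows. Because the article does not name a real CSV file, this sketch assumes a small synthetic dataset in its place; the column names `hours` and `score`, the true slope and intercept, and the positions of the missing values are all made up for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for pd.read_csv(...): score is roughly 5 * hours + 20.
rng = np.random.default_rng(42)
hours = rng.uniform(0, 10, size=100)
score = 5.0 * hours + 20.0 + rng.normal(scale=2.0, size=100)
dataset = pd.DataFrame({"hours": hours, "score": score})
dataset.loc[[3, 17, 58], "score"] = np.nan  # simulate missing inputs

# Clean the data: drop every row that contains a null value.
dataset = dataset.dropna()

# Split into training and test parts, then fit on the training part only.
X = dataset[["hours"]]
y = dataset["score"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = LinearRegression()
model.fit(X_train, y_train)

# Predict on unseen data and report R^2 on the test part.
y_pred = model.predict(X_test)
r2 = model.score(X_test, y_test)
print(f"slope={model.coef_[0]:.2f}, intercept={model.intercept_:.2f}, R^2={r2:.3f}")
```

To explore the result visually, the observed test points and `y_pred` could be passed to `plt.scatter` with two different `color` values.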

Scikit Learn Linear Regression Model

Let’s see the linear regression model in Scikit learn as below:

The linear model consists of the different regression methods as follows:

- Ordinary Least Squares: LinearRegression fits the coefficients so that they minimize the residual sum of squares between the observed and predicted target values.

- Non-Negative Least Squares: In linear regression, we can constrain all coefficients to be non-negative, which is useful when they represent natural or physical quantities that cannot be negative.
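As a small sketch of the two variants above, `LinearRegression` accepts a `positive=True` parameter that enforces the non-negativity constraint. The synthetic data and the chosen true coefficients are assumptions made for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data with non-negative true coefficients (assumed for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
true_coef = np.array([2.0, 0.0, 1.0])
y = X @ true_coef + rng.normal(scale=0.5, size=200)

# Ordinary Least Squares: coefficients are unconstrained.
ols = LinearRegression().fit(X, y)

# Non-Negative Least Squares: every coefficient is forced to be >= 0.
nnls = LinearRegression(positive=True).fit(X, y)

print("OLS coefficients: ", ols.coef_)
print("NNLS coefficients:", nnls.coef_)
```

The unconstrained fit may give a slightly negative estimate for the coefficient whose true value is zero, while the constrained fit cannot.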

Let’s see ridge regression and its classification as follows:

First, ridge regression helps us overcome some drawbacks of least squares by imposing a penalty on the size of the coefficients; the ridge coefficients minimize a penalized residual sum of squares.

Let’s see what classification in ridge is as follows:

Ridge regression has a classifier variant, RidgeClassifier. It first converts the binary target into the values -1 and 1 and then treats the problem as a regression task. The predicted class corresponds to the sign of the regression prediction; if we have more than two classes, the problem is treated as multi-output regression, and the predicted class is the output with the highest value.
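The link between the regression output and the predicted class can be sketched as below. The dataset comes from `make_classification` with assumed settings, and the variable name `pred_from_sign` is introduced only for this illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import RidgeClassifier

# Synthetic binary problem with class labels 0 and 1 (assumed for illustration).
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

clf = RidgeClassifier(alpha=1.0).fit(X, y)

# decision_function returns the underlying regression score for each sample.
scores = clf.decision_function(X)

# A positive score maps to class 1, a non-positive score to class 0.
pred_from_sign = (scores > 0).astype(int)

print("training accuracy:", clf.score(X, y))
print("matches predict():", np.array_equal(pred_from_sign, clf.predict(X)))
```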

- Ridge Complexity: Ridge has the same order of computational complexity as Ordinary Least Squares.

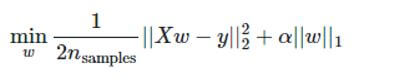

- Lasso: This is another linear model, which estimates sparse coefficients. It tends to prefer solutions with fewer non-zero coefficients, which reduces the number of features the solution depends on. For this reason, Lasso is widely used in compressed sensing.

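Mathematically, with n samples and a regularization strength α ≥ 0, we can represent it as below:

$$\min_{C} \frac{1}{2n} \lVert XC - Y \rVert_2^2 + \alpha \lVert C \rVert_1$$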
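The effect of the two penalties can be sketched side by side. The data below is synthetic, with only three of ten features actually influencing the target, and the `alpha` values are arbitrary choices for illustration rather than recommended settings.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression, Ridge

# Synthetic data: only the first 3 of 10 features matter (assumed for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))
true_coef = np.zeros(10)
true_coef[:3] = [3.0, -2.0, 1.5]
y = X @ true_coef + rng.normal(scale=0.5, size=100)

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=10.0).fit(X, y)   # squared (L2) penalty shrinks coefficients
lasso = Lasso(alpha=0.1).fit(X, y)    # absolute (L1) penalty can zero them out

print("OLS   coefficient norm:", np.linalg.norm(ols.coef_))
print("Ridge coefficient norm:", np.linalg.norm(ridge.coef_))
print("Lasso non-zero coefficients:", np.count_nonzero(lasso.coef_), "of", len(lasso.coef_))
```

Ridge shrinks the coefficients but generally keeps all of them non-zero, while Lasso is expected to set some of the irrelevant ones exactly to zero.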

Scikit Learn Linear Regression Method

There are two methods we use in Scikit Learn as follows:

- Gradient Descent: This is a straightforward and efficient method for fitting linear classifiers and regressors, including linear support vector machines and logistic regression. Gradient Descent is typically used for large-scale machine-learning problems.

- Matrix Method: We know that in linear regression, we have a linear model that fits the coefficients to minimize the sum of squares between the dataset and the predicted target. With this method, we solve for the coefficients directly using matrix operations.
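The two methods can be compared on a small problem. The sketch below uses plain NumPy rather than scikit-learn estimators so that both computations are visible; the data, the learning rate, and the iteration count are assumptions for illustration. Note that it uses full-batch gradient descent, whereas scikit-learn's `SGDRegressor` implements the stochastic variant.

```python
import numpy as np

# Synthetic data: y = 0.3 + 1.5 * x1 - 0.7 * x2 + noise (assumed for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = X @ np.array([1.5, -0.7]) + 0.3 + rng.normal(scale=0.1, size=200)

# Add a column of ones so that the intercept is learned as a coefficient.
A = np.column_stack([np.ones(len(X)), X])

# Matrix method: solve the least-squares problem directly.
coef_matrix, *_ = np.linalg.lstsq(A, y, rcond=None)

# Gradient descent: repeatedly step against the gradient of the mean squared error.
coef_gd = np.zeros(A.shape[1])
learning_rate = 0.1
for _ in range(2000):
    gradient = 2.0 / len(y) * A.T @ (A @ coef_gd - y)
    coef_gd -= learning_rate * gradient

print("matrix method:   ", coef_matrix)
print("gradient descent:", coef_gd)
```

Both approaches minimize the same sum of squares, so they should agree closely; gradient descent trades the direct solve for many cheap steps, which is what makes it attractive on large datasets.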

Example of Scikit Learn Linear Regression

Given below is the example of Scikit Learn Linear Regression:

Code:

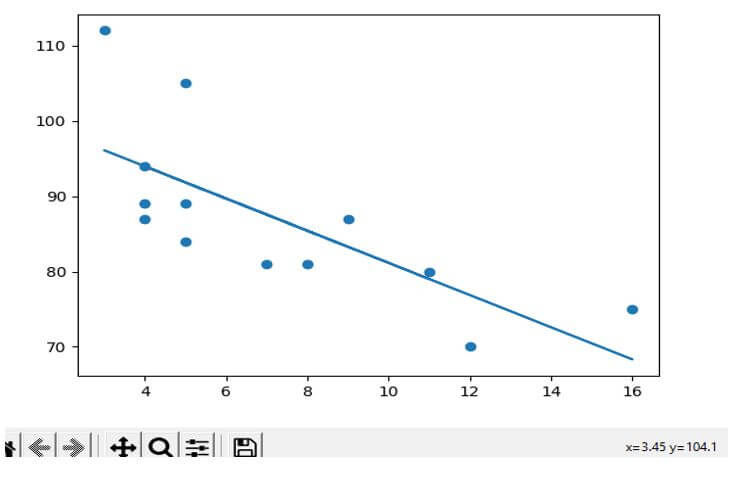

import matplotlib.pyplot as plt

from scipy import stats

x = [4,4,5,8,3,11,5,9,4,12,16,7,5]

y = [87,89,89,81,112,80,105,87,94,70,75,81,84]

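slope, intercept, r_value, p_value, std_err = stats.linregress(x, y)  # linregress returns five values

def m_func(x):

    return slope * x + intercept  # point on the fitted line

m_model = list(map(m_func, x))  # predicted y for each x

plt.scatter(x, y)  # observed points

plt.plot(x, m_model)  # fitted regression line

plt.show()

Explanation: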

- In the above example, we fit a simple linear regression with SciPy's stats.linregress and plot the fitted line over the scatter of data points.

- The result of the above implementation is shown in the below screenshot.

Output:
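For comparison, the same fit can be sketched with scikit-learn's own `LinearRegression` estimator. This is an illustrative alternative to the SciPy call above, not the article's original code; it reuses the same `x` and `y` values and leaves out the plotting step.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

x = [4, 4, 5, 8, 3, 11, 5, 9, 4, 12, 16, 7, 5]
y = [87, 89, 89, 81, 112, 80, 105, 87, 94, 70, 75, 81, 84]

# scikit-learn expects a 2-D feature array: one row per sample, one column per feature.
X = np.array(x).reshape(-1, 1)

model = LinearRegression().fit(X, y)
slope = model.coef_[0]
intercept = model.intercept_

# Predicted y for each x, equivalent to m_model in the SciPy version.
predicted = model.predict(X)

print(f"slope={slope:.3f}, intercept={intercept:.3f}")
```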

FAQ

Other FAQs are mentioned below:

Q1. What is the purpose of Scikit Linear Regression?

Answer:

It is used to find the relationship between the variables in the dataset and the target value, and to perform the regression task of predicting that value.

Q2. What is the strength of Scikit linear regression?

Answer:

There are three major strengths of Scikit Linear Regression: it helps determine the strength of the predictors, it estimates or forecasts their effect on the result, and it supports formulating the prediction values.

Q3. What is Scikit linear regression?

Answer:

It uses the data points and the relationship between them to draw a straight line through the points, which can then be used for prediction.

Conclusion

In this article, we explored Scikit Learn linear regression. We saw its basic ideas, the linear models and fitting methods it offers, and a basic implementation.
