Updated March 17, 2023

Introduction to Scikit Learn Pipeline

The following article provides an outline for Scikit Learn Pipeline. Scikit learn offers different types of features to the user; the pipeline is one of the features that scikit has. The pipeline assembles the various steps while setting different parameters for cross-validations. In other words, we can say that it activates the setting of parameters for their respective steps with name and parameter name separated with the help of an underscore.

Key Takeaways

- It allows us to gather and process the data; it may be real-time or collected from different sources.

- It can be applied in the order of steps.

- It supports the slack notification.

- It allows us to implement distributed computing.

Overview of Scikit Learn Pipeline

An AI pipeline can be made by assembling a grouping of steps to prepare an AI model. It tends to be utilized to computerize an AI work process. The pipeline can include pre-handling, highlight choice, grouping/relapse, and post-handling. More complicated applications might have to fit in other essential strides inside this pipeline.

By improvement, we mean tuning the model for the best presentation. The progress of any learning model lies in choosing the ideal boundaries that give the best outcomes. Enhancement can be taken a gander at as far as an inquiry calculation, which strolls through a space of boundaries and chases down the best out of them.

How to Use Scikit Learn Pipeline?

For the implementation of the pipeline, we need to follow the different steps as follows:

First, we need to gather the data; basically, gathering the dataset depends on the project. Sometimes we require real-time data, or we need to collect data from various resources such as files, databases, and surveys, as shown below code.

Code:

import pandas as pd

import numpy as np

info = pd.read_csv("D:/Work From Home/Other/Scikit learn pipeline/bikesample.csv")

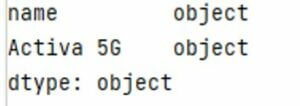

print(info.dtypes)Output:

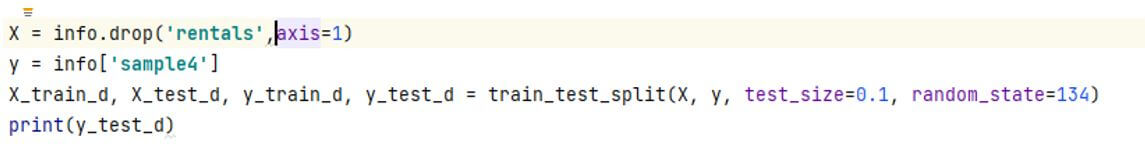

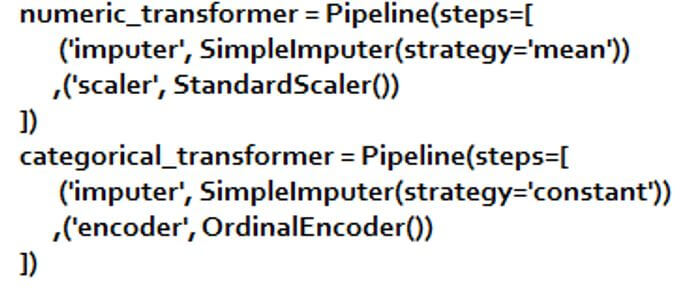

In the second step, we need to split and pre-process the data. As a rule, inside the gathered information, there is missing information, incredibly huge qualities, sloppy text information, or uproarious information and consequently can’t be utilized straightforwardly inside the model; subsequently, the information requires some pre-handling before entering the model.

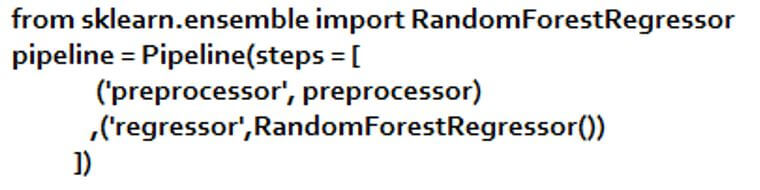

In the third step, we need to train and test the model. Once our data is ready for processing, we need to put these data into the machine learning model. Before that, it is essential to consider what model to be utilized to give a decent presentation yield. The informational collection is partitioned into three critical areas: preparation, approval, and testing. The principal point is to prepare information in the train set, tune the boundaries utilizing the ‘approval set,’ and test the presentation test set afterward.

In the fourth step, we need to evaluate the result. Assessment is a piece of the model improvement process. It assists with finding the best model that addresses the information and how well they picked model functions from here on out.

Scikit Learn Pipeline API

It empowers setting boundaries of the different advances utilizing their names and the boundary name isolated by a ‘__,’ as in the model beneath. Inside the step, we need to set boundary values for specified steps execution.

While creating API for the pipeline, we need to consider different parameters as follows:

- Steps: This is nothing but the list of steps we will execute in sequential order, and the last step must be an estimator.

- Memory: It is generally used for transformers of the pipeline by default; there is no cache. On the off chance that a string is given, it is the way to the reserving catalog. Utilize the trait named_steps or move toward investigating assessors inside the pipeline.

- Verbose: If we set it as accurate, each step is printed as completed.

The next parameter of API is attributed as follows:

- Steps_name: It is used to access steps with a name.

- Classes: This is nothing but the labels of class.

- N_features: It shows the number of features fitted in specified steps.

- Feature_name: Is used to indicate names of the feature that are fitted in specified steps.

Scikit Learn Pipeline Modeling

For pipeline modeling, we need to follow the different steps as follows:

First, as shown in the code, we need to load the dataset as per our requirement, either from the dataset or a file database.

Code:

from sklearn.datasets import load_iris

iriss = load_iris()After execution, we get some output that defines a specified data sequence. Inside the data, the last column represents the class label, so here we need to separate the features from the label of the class and split the data as per our requirement, as shown below code.

Code:

X_train_d, X_test_d, y_train_d, y_test_d = train_test_split(X,y,test_size=1/3, random_state=0)Now we need to set the machine learning pipeline as follows:

- First, we need to select the scaler for pre-processing data; after that, we need to use the features selector for the variance threshold.

- The next step of modeling is optimizing and tuning the training dataset. We can look for the best scalers. Rather than simply the StandardScaler(), we can attempt MinMaxScaler(), Normalizer() and MaxAbsScaler().

- We can look for the best change limit in the selector, i.e., VarianceThreshold().

- We can look for the best worth of k for the KNeighborsClassifier().

Example of Scikit Learn Pipeline

Given below is the example of Scikit Learn Pipeline:

Code:

from sklearn.svm import SVC

from sklearn.preprocessing import StandardScaler

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.pipeline import Pipeline

x, y = make_classification(random_state=1)

x_train_d, x_test_d, y_train_d, y_test_d = train_test_split(x, y, random_state=1)

pipel= Pipeline([('scaler', StandardScaler()), ('svc', SVC())])

print(pipel.fit(x_train_d, y_train_d))

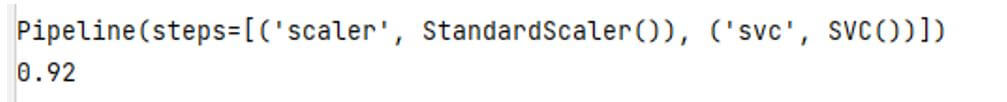

print(pipel.score(x_test_d, y_test_d))Explanation:

- In the above example, we try to implement a pipeline; after execution, we get the following result, as shown in the below screenshot.

Output:

FAQ

Other FAQs are mentioned below:

Q1. What is the scikit learn pipeline in machine learning?

Answer:

The pipeline is used for the end-to-construction flow of data, output, and machine learning model and includes the raw data, features, and prediction outputs.

Q2. What are the advantages of a pipeline?

Answer:

By using a pipeline, we can make the easy implementation of a machine learning algorithm as well as can quickly move into production.

Q3. How do pipeline algorithms work in Scikit learn?

Answer:

In the pipelined algorithm, we have different steps, which we need to occupy with some work, or we can say it depends on the requirement.

Conclusion

In this article, we are trying to explore the Scikit Learn pipeline. In this article, we saw the basic ideas of the Scikit Learn pipeline and the uses and features of these Scikit Learn pipelines. Another point from the article is how we can see the basic implementation of the Scikit Learn pipeline.

Recommended Articles

This is a guide to Scikit Learn Pipeline. Here we discuss the introduction, API & modeling, examples, and how to use the scikit learn pipeline with FAQ. You may also have a look at the following articles to learn more –