What is Aggregation in Data Mining

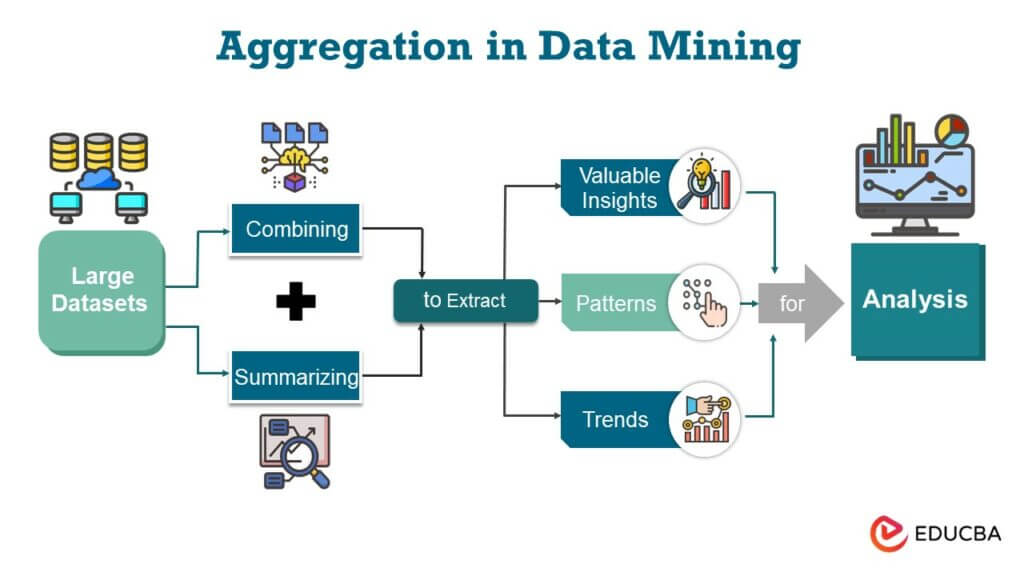

Aggregation in data mining refers to the process of summarizing and combining large volumes of data into a more concise and meaningful form for analysis. It involves grouping data points or values based on specific attributes or criteria, often using mathematical functions like sum, average, or count to derive relevant insights. Aggregation helps in simplifying complex data sets, revealing patterns, and facilitating decision-making by providing a more comprehensive overview of the information contained within the data.

Key Takeaways

- Aggregation is the process of summarizing and condensing large datasets for analysis.

- It involves grouping data based on specific attributes or criteria.

- Mathematical functions like sum, average, or count are commonly used for aggregation.

- Aggregation simplifies complex data, making it easier to identify patterns and make informed decisions.

- It provides a comprehensive overview of data, revealing essential insights for data analysis and decision-making.

Significance of Aggregation in Data Analysis

Aggregation plays a crucial role in data analysis and can be summarized through several key points:

- Simplifies Complex Data: Aggregation condenses large and intricate datasets into manageable summaries, making it easier to grasp the overall picture.

- Enhances Data Exploration: It enables analysts to examine data at different levels of granularity, uncovering hidden patterns and trends.

- Facilitates Decision-Making: Aggregated data provides a basis for making informed decisions, offering a high-level overview of critical metrics.

- Supports Reporting: Summarized data is valuable for generating reports and visualizations, enabling effective stakeholder communication.

- Reduces Data Storage and Processing Costs: Aggregation minimizes the volume of data, saving storage space and decreasing computational demands, particularly relevant in big data environments.

- Aids Anomaly Detection: It assists in identifying outliers and anomalies by providing context for understanding normal data behavior.

- Improves Privacy and Security: Aggregation can help protect sensitive information by presenting summarized data instead of detailed records.

- Enables Predictive Modeling: Aggregated historical data is often used to build predictive models and identify trends that support forecasting.

- Optimizes Resource Allocation: Aggregation is crucial for resource allocation decisions, such as budgeting, inventory management, and staffing.

- Enhances Scalability: It allows data analysis to scale efficiently to handle large datasets and evolving data sources.

Working of Data Aggregators

Data aggregators operate through three main steps:

- Collection of Data: Data aggregators gather data from various sources, including websites, APIs, databases, sensors, social media, or other data repositories. They use web scraping, APIs, or data feeds to collect structured and unstructured data. This data can be in different formats, such as text, images, or numerical values.

- Processing of Data: Once the data is collected, data aggregators process it to make it usable and relevant. Data cleaning, transformation, and standardization are necessary steps to ensure the accuracy and consistency of the data. Aggregators may employ data enrichment techniques to enhance the quality and completeness of the information. They may then filter the data to the relevant records and apply aggregation functions such as summation, averaging, and counting to summarize and organize it.

- Presentation of Data: Data aggregators present the processed data in a format that is understandable and actionable. This can include generating reports, charts, graphs, dashboards, or structured datasets. The presentation of data aims to make the insights easily accessible to end-users, decision-makers, and analysts. Data may be delivered through web interfaces, APIs, or other means based on user preferences.
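The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two in-memory sources, the field names `region` and `sales`, and the helper functions `collect`, `process`, and `present` are hypothetical, and a real aggregator would read from APIs, files, or databases instead.

```python
from collections import defaultdict

# Hypothetical raw records from two sources; field names are illustrative.
SOURCE_A = [{"region": "north", "sales": "120.5"}, {"region": "South ", "sales": "80"}]
SOURCE_B = [{"region": "NORTH", "sales": "39.5"}, {"region": "south", "sales": None}]


def collect(*sources):
    """Collection: merge records from every source into one list."""
    return [record for source in sources for record in source]


def process(records):
    """Processing: drop incomplete rows, standardize names, sum per region."""
    totals = defaultdict(float)
    for record in records:
        if record["sales"] is None:  # cleaning: skip rows with no value
            continue
        region = record["region"].strip().lower()  # standardization
        totals[region] += float(record["sales"])  # aggregation (summation)
    return dict(totals)


def present(totals):
    """Presentation: render the summary as a small text report."""
    return "\n".join(
        f"{region}: {total:.2f}" for region, total in sorted(totals.items())
    )


totals = process(collect(SOURCE_A, SOURCE_B))
report = present(totals)
print(report)
# north: 160.00
# south: 80.00
```

Keeping the three stages as separate functions mirrors the description above and makes it easy to swap one stage (for example, a different presentation format) without touching the others.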

Types of Data Aggregation

- Time Aggregation: Aggregating data over specific time intervals, such as daily, weekly, or monthly, to analyze trends and patterns over time.

- Spatial Aggregation: Combining data based on geographical regions or spatial units, facilitating geographic analysis and visualization.

Time intervals for the data aggregation process

- Reporting Period: A reporting period is the time interval over which data is summarized and reported. For example, a monthly reporting period may be used to provide a summary of sales figures for each month. It helps in creating regular reports for stakeholders and decision-makers.

- Polling Period: The polling period is the frequency at which data is collected or sampled. For instance, a system might poll sensor data every 15 minutes to monitor environmental conditions. The polling period affects the real-time nature of data analysis.

- Granularity: Granularity refers to the level of detail at which data is aggregated. A finer granularity provides more detailed insights, while a coarser granularity offers a broader view. For instance, in stock market data, intraday granularity (minute-by-minute) provides detailed trading insights, while daily granularity offers a broader perspective.
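To make the relationship between polling period and granularity concrete, here is a minimal sketch that takes readings polled every 15 minutes and coarsens them to hourly averages. The readings, the start time, and the function name `aggregate_by_hour` are made up for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical temperature readings polled every 15 minutes.
start = datetime(2024, 1, 1, 9, 0)
values = [20.0, 21.0, 22.0, 23.0, 24.0, 24.0, 26.0, 26.0]
readings = [(start + timedelta(minutes=15 * i), v) for i, v in enumerate(values)]


def aggregate_by_hour(samples):
    """Coarsen 15-minute samples to hourly granularity by averaging."""
    buckets = defaultdict(list)
    for timestamp, value in samples:
        # Truncate each timestamp to the start of its hour.
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(value)
    return {hour: mean(vals) for hour, vals in sorted(buckets.items())}


hourly = aggregate_by_hour(readings)
for hour, avg in hourly.items():
    print(hour.isoformat(), avg)
# 2024-01-01T09:00:00 21.5
# 2024-01-01T10:00:00 25.0
```

Eight fine-grained samples become two hourly values: the broader view is cheaper to store and report, at the cost of the within-hour detail.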

Techniques for Aggregation

A. Aggregation Functions

- Summation: This function adds up values within a group, providing the total. It helps calculate sums, like total sales revenue.

- Averaging: Averages the values within a group, yielding the mean. This is commonly used for finding average temperatures or ratings.

- Counting: Counts the number of occurrences within a group, often used for tallying events like website visits or product sales.

- Max and Min: These functions find the highest (max) and lowest (min) values within a group, helping identify extremes, such as peak or minimum temperatures.
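The functions listed above can be tried on a small grouped dataset. The sketch below uses only the Python standard library; the `sales` rows and category names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales rows: (product category, sale amount).
sales = [
    ("books", 12.0),
    ("books", 8.0),
    ("toys", 30.0),
    ("toys", 10.0),
    ("toys", 20.0),
]

# Group the amounts by category first.
groups = defaultdict(list)
for category, amount in sales:
    groups[category].append(amount)

# Then apply each aggregation function to every group.
summary = {
    category: {
        "sum": sum(amounts),
        "avg": mean(amounts),
        "count": len(amounts),
        "max": max(amounts),
        "min": min(amounts),
    }
    for category, amounts in groups.items()
}

for category, stats in summary.items():
    print(category, stats)
```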

B. Aggregation Methods

- Grouping and Group-by Operations: Records are partitioned into groups that share the same value for one or more attributes, such as product category, and an aggregation function is then applied to each group. This is what the GROUP BY clause does in SQL queries.

- Roll-up and Drill-down: In hierarchical data structures, roll-up involves aggregating data from lower to higher levels, while drill-down does the opposite—breaking aggregated data into more detailed levels.

- Cube Aggregation: This method is used in multidimensional data analysis. It involves aggregating a measure across combinations of dimensions of a data cube, so that the same data can be viewed from different perspectives (for example, totals by region, by product, or by both).

- Hierarchical Aggregation: In hierarchical data, this method summarizes data at different levels of a hierarchy, allowing analysis at various levels of granularity, from broad to detailed views.

These aggregation functions and methods are central to simplifying data for analysis and decision-making in data mining and analytics.
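As a rough illustration of group-by, roll-up, and cube aggregation together, the sketch below sums a measure for every subset of two dimensions. The rows, the dimension names, and the helpers `group_sum` and `cube` are hypothetical; OLAP engines and SQL databases provide optimized equivalents.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows: two dimensions (region, product) and one measure.
rows = [
    {"region": "EU", "product": "A", "amount": 10},
    {"region": "EU", "product": "B", "amount": 5},
    {"region": "US", "product": "A", "amount": 7},
]
DIMENSIONS = ("region", "product")


def group_sum(rows, dims):
    """Group-by: sum `amount` per distinct combination of the given dimensions."""
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[d] for d in dims)] += row["amount"]
    return dict(totals)


def cube(rows, dims):
    """Cube: run group_sum for every subset of dims, from all of them to none."""
    return {
        subset: group_sum(rows, subset)
        for size in range(len(dims), -1, -1)
        for subset in combinations(dims, size)
    }


result = cube(rows, DIMENSIONS)
print(result[("region", "product")])  # most detailed level
print(result[("region",)])            # rolled up over product
print(result[()])                     # grand total
```

Moving from `result[("region", "product")]` to `result[("region",)]` is a roll-up; reading the same two entries in the opposite order is a drill-down.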

Applications of Aggregation in Data Mining

- Summarizing and Reporting: Aggregation is widely used to create concise and informative summaries of large datasets. It allows data analysts and decision-makers to get a high-level overview of the data. For instance, sales data can be aggregated to provide monthly or yearly summaries for reporting purposes. Summarized data is easier to visualize and communicate to stakeholders, facilitating informed decision-making.

- Data Reduction: Data aggregation reduces the volume of data by replacing many detailed records with fewer summary records, making it more manageable while retaining essential information. By aggregating data, you can significantly decrease storage and processing requirements. This is particularly valuable in big data scenarios, where handling vast amounts of information efficiently is crucial.

- Pattern Discovery: Aggregation can reveal hidden patterns and trends within data. By summarizing data at various levels of granularity, patterns may become more apparent. For example, by aggregating web traffic data, you can discover peak usage times or recurring visitor behavior, which can inform content optimization and marketing strategies.

- Anomaly Detection: Aggregation plays a role in identifying anomalies or outliers in data. By aggregating data and comparing individual data points to the aggregated values, unusual deviations from the expected behavior can be flagged. This is essential in fraud detection, network security, and quality control applications, where detecting irregularities is crucial.

- Predictive Analytics: Aggregated data can be input for predictive analytics models. By summarizing historical data, analysts can identify trends and patterns that can be used to build predictive models. For example, by aggregating customer purchase history, businesses can predict future buying behavior and tailor marketing strategies accordingly.
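The anomaly detection idea above, comparing individual data points to aggregated values, can be sketched as follows. This is one simple approach among many, not a recommended fraud-detection method: it flags values that lie more than a chosen number of standard deviations from their group's mean. The transaction data, the threshold of 2.0, and the function name `flag_outliers` are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical transaction amounts per account.
transactions = [
    ("acct-1", 50), ("acct-1", 52), ("acct-1", 48), ("acct-1", 51),
    ("acct-1", 49), ("acct-1", 50), ("acct-1", 200),
    ("acct-2", 10), ("acct-2", 11), ("acct-2", 9), ("acct-2", 10), ("acct-2", 10),
]


def flag_outliers(records, threshold=2.0):
    """Flag values far from their group's mean, measured in standard deviations."""
    groups = defaultdict(list)
    for key, value in records:
        groups[key].append(value)

    flagged = []
    for key, vals in groups.items():
        center, spread = mean(vals), pstdev(vals)  # per-group aggregates
        if spread == 0:  # all values identical: nothing can deviate
            continue
        flagged.extend(
            (key, v) for v in vals if abs(v - center) / spread > threshold
        )
    return flagged


outliers = flag_outliers(transactions)
print(outliers)  # [('acct-1', 200)]
```

Note that a large outlier also inflates the mean and standard deviation it is compared against, so robust aggregates such as the median are often preferred in practice.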

Tools and Technologies

A. Software and Tools for Data Aggregation

Various commercial and proprietary tools are available for data aggregation. Some popular options include:

- Microsoft Excel: Often used for simple data aggregation and reporting tasks.

- Tableau: A data visualization tool that can also perform data aggregation.

- Power BI: A business analytics service by Microsoft that supports data aggregation and reporting.

- QlikView and Qlik Sense: Business intelligence platforms for data visualization and analysis.

- SAS (Statistical Analysis System): Offers data management and analytics tools for data aggregation and reporting.

B. Open-source Libraries and Frameworks

Open-source libraries and frameworks are widely used for data aggregation, providing flexibility and customization. Some notable open-source tools for data aggregation include:

- Pandas: A Python library that offers powerful data manipulation and aggregation capabilities, making it popular among data scientists.

- Apache Spark: A distributed computing framework designed for big data, supporting analysis and aggregation across clusters of machines.

- Hadoop: An open-source framework that provides distributed storage and processing for large datasets, suitable for data aggregation tasks.

- R: A statistical programming language that includes packages and functions for data aggregation.

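- SQL (Structured Query Language): SQL is a query language rather than a single tool, but SQL databases, including open-source ones such as PostgreSQL and MySQL, are commonly used for data aggregation through queries with GROUP BY and aggregate functions.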

- Elasticsearch and Kibana: These tools are often used for log and event data aggregation and analysis.

Challenges and Considerations

- Data Quality and Preprocessing: Data quality is a critical challenge in data aggregation. Aggregating inaccurate or incomplete data can produce misleading results and erroneous conclusions. To ensure reliable and meaningful data aggregation, preprocessing steps such as cleaning, normalization, and outlier detection are essential.

- Computational Complexity: Aggregating large datasets can be computationally intensive. As the volume of data grows, the time and resources required for aggregation can become a bottleneck. Efficient algorithms and hardware are necessary to handle the computational complexity of aggregation, particularly in big data scenarios.

- Privacy and Security Concerns: Combining data from various sources can pose a threat to privacy and security, because sensitive information may be exposed or individuals may become identifiable once their records are linked. That’s why it’s essential to have robust security measures, anonymize data, and comply with data protection regulations like GDPR or HIPAA.

- Handling Missing Data: In real-world datasets, missing data is common. Aggregation techniques need to account for missing values appropriately. Depending on the situation, missing data can be ignored, imputed, or handled in a way that doesn’t distort the aggregated results. Dealing with missing data is a key consideration to avoid bias.

- Aggregation Bias: Aggregation bias occurs when aggregated results do not accurately represent the underlying data. Common causes include unequal weighting, selection bias, and an inappropriate choice of aggregation method. It’s essential to be aware of the potential for bias and to choose aggregation methods that minimize it.
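Two of the pitfalls above, missing data and unequal weighting, are easy to reproduce on a toy dataset. In the sketch below the store names and ratings are invented, and `None` is assumed to mark a missing value.

```python
from statistics import mean

# Hypothetical ratings per store; None marks a missing value.
ratings = {
    "store_a": [5.0, 5.0],
    "store_b": [2.0, 2.0, 2.0, 2.0, None, 2.0, 2.0, 2.0, 2.0],
}


def clean(values):
    """Ignore missing values instead of treating them as zeros."""
    return [v for v in values if v is not None]


# Missing data: filling None with 0 drags store_b's average below 2.0.
zero_filled_b = mean([v if v is not None else 0.0 for v in ratings["store_b"]])

# Unequal weighting: averaging the per-store means gives each store the same
# weight, even though store_b has four times as many ratings.
store_means = {store: mean(clean(vals)) for store, vals in ratings.items()}
mean_of_means = mean(list(store_means.values()))

# Averaging all individual ratings weights each rating equally instead.
all_values = [v for vals in ratings.values() for v in clean(vals)]
overall_mean = mean(all_values)

print(zero_filled_b)   # about 1.78
print(mean_of_means)   # 3.5
print(overall_mean)    # 2.6
```

Neither 3.5 nor 2.6 is wrong in itself; they answer different questions (average store versus average rating), which is why the aggregation method should be chosen deliberately.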

Future Trends and Innovations

Several trends and innovations are likely to shape data aggregation and data mining in the coming years:

- AI-Driven Aggregation: Integrating artificial intelligence and machine learning to automate data aggregation, improving accuracy and efficiency.

- Real-time Data Aggregation: Shift towards real-time data aggregation to support instant decision-making and analysis.

- Edge Computing: Aggregating data closer to data sources at the edge to minimize latency and manage vast amounts of data supplied by IoT devices.

- Privacy-Preserving Aggregation: Enhanced techniques for aggregating data while preserving privacy, complying with strict data protection regulations.

- Blockchain for Data Provenance: Blockchain technology can help verify the integrity and traceability of data in aggregated datasets.

- Federated Learning: Training models collaboratively across decentralized devices or organizations by aggregating model updates rather than raw data, which helps preserve data privacy.

- Graph-Based Aggregation: Utilizing graph databases and analytics for aggregating and analyzing interconnected data, such as social networks and supply chains.

- Explainable AI in Aggregation: Developing methods to make aggregated data and insights more interpretable and transparent.

- Quantum Computing: Quantum computing may eventually speed up data aggregation and analysis for certain complex problems.

- Data Trusts: Emergence of platforms and frameworks for secure and ethical data sharing and aggregation.

Conclusion

Aggregation in data mining is a pivotal technique that simplifies complex data, supports practical analysis, and facilitates informed decision-making. It serves as a bridge between vast datasets and actionable insights, enabling the discovery of patterns, trends, and anomalies. As the volume and complexity of data increase, aggregation remains essential. The future promises even more innovations, such as real-time and privacy-preserving aggregation, demonstrating its enduring importance in the data-driven world. Aggregation is an indispensable tool that empowers organizations to harness the true potential of their data for enhanced understanding and strategic advantages.

Frequently Asked Questions (FAQs)

Q1. How is data aggregation used in real-world applications?

Answer: Data aggregation is utilized in various domains, such as retail sales analysis, healthcare data analysis, network traffic monitoring, social media engagement tracking, and financial risk assessment.

Q2. How can data aggregation benefit businesses and organizations?

Answer: Data aggregation enables organizations to gain a competitive edge, make data-driven decisions, and optimize resource allocation by uncovering valuable insights and patterns.

Q3. How can I ensure that my data aggregation is unbiased and accurate?

Answer: To minimize aggregation bias, choose appropriate aggregation methods and ensure data preprocessing, quality checks, and adherence to best practices. It is also helpful to validate results with domain experts.

Q4. Is there a role for data aggregation in machine learning?

Answer: Yes. Preprocessing data for machine learning models often involves aggregation to create summary features, such as per-customer totals or averages, that can improve predictive performance.
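As a small illustration of Q4, the following sketch aggregates a purchase log into per-customer features that a model could consume. The log, the reference date `AS_OF`, the feature names, and the function `customer_features` are all hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical purchase log: (customer id, purchase date, amount).
purchases = [
    ("c1", date(2024, 1, 5), 20.0),
    ("c1", date(2024, 2, 10), 40.0),
    ("c2", date(2024, 1, 20), 15.0),
]
AS_OF = date(2024, 3, 1)  # assumed reference date for the recency feature


def customer_features(log, as_of):
    """Aggregate raw purchases into one feature row per customer."""
    by_customer = defaultdict(list)
    for customer, day, amount in log:
        by_customer[customer].append((day, amount))

    features = {}
    for customer, items in by_customer.items():
        amounts = [amount for _, amount in items]
        last_day = max(day for day, _ in items)
        features[customer] = {
            "n_purchases": len(amounts),                 # count
            "total_spent": sum(amounts),                 # sum
            "avg_spent": sum(amounts) / len(amounts),    # average
            "days_since_last": (as_of - last_day).days,  # recency
        }
    return features


features = customer_features(purchases, AS_OF)
for customer, row in features.items():
    print(customer, row)
```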
