Updated December 5, 2023

Difference Between Mutex and Spinlock

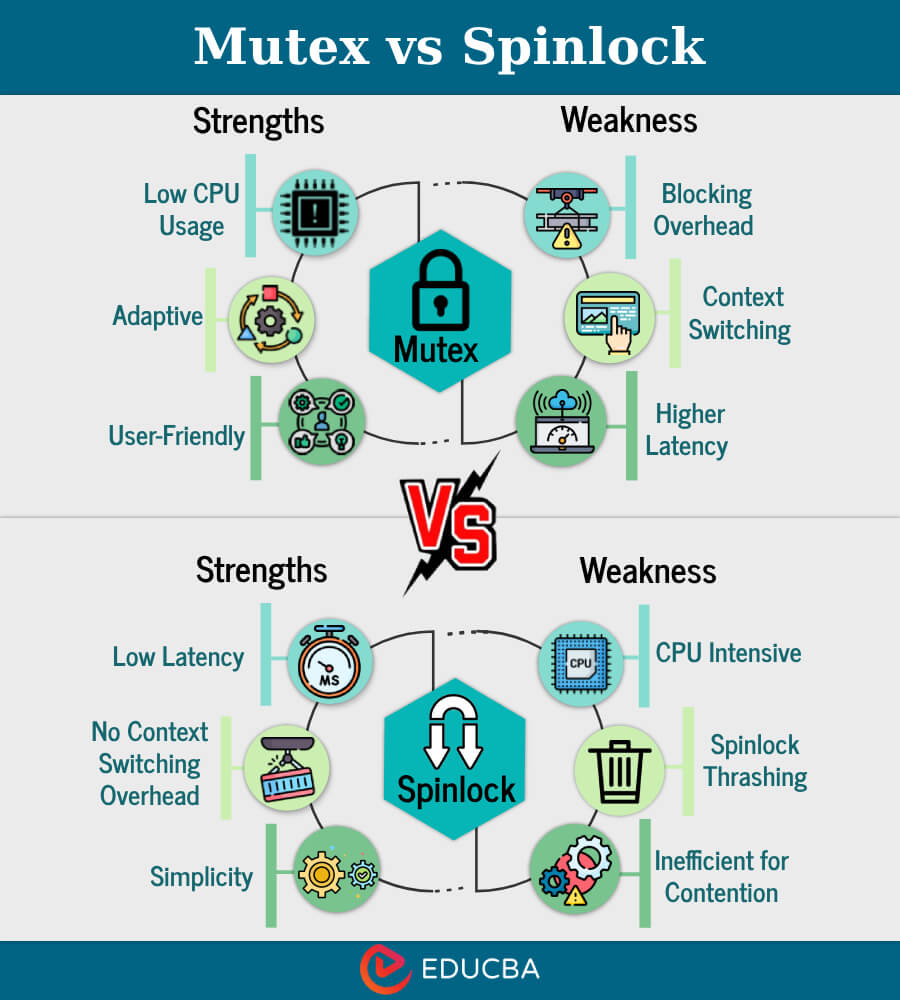

In concurrent programming, Mutex and Spinlock are synchronization mechanisms for managing shared resources. Mutex, short for Mutual Exclusion, ensures exclusive access to a resource, preventing multiple threads from modifying it simultaneously. It uses a wait-and-signal approach: a thread that cannot get the lock waits until the lock is released. Spinlock, on the other hand, employs a busy-waiting strategy, continuously checking whether the lock is available. While Mutex offers simplicity and reliability, Spinlock, though faster in specific scenarios, may lead to higher CPU usage. Understanding the differences between Mutex and Spinlock, and the use cases for each, is essential for effective concurrent programming and optimal resource utilization.

What is Mutex?

A mutex, short for mutual exclusion, is a synchronization mechanism used in concurrent programming to guarantee that only one thread at a time may access a shared resource or critical section of code. It prevents data corruption or inconsistencies caused by multiple threads attempting to modify the resource simultaneously. A Mutex typically provides a lock that a thread must acquire before entering the critical section and release after completing its operation. If a thread attempts to acquire the lock while another thread already holds it, it is blocked until the lock becomes available. This ensures orderly access and avoids race conditions.

How Does a Mutex Work?

A mutex ensures that only one thread at a time can access a shared resource or critical section of code. The fundamental idea is a lock associated with the resource. Here’s a step-by-step description of how a Mutex works:

- Initialization: The Mutex is initialized and associated with the critical section or resource that needs protection.

- Acquiring the Lock: A thread must acquire the Mutex lock before entering the critical section. If no other thread currently holds the lock, the requesting thread acquires it and proceeds to execute the critical section.

- Blocking on Contention: The requesting thread is blocked if another thread already holds the lock. It enters a waiting state until the lock becomes available.

- Releasing the Lock: Once a thread completes its work in the critical section, it releases the Mutex lock. This action signals to waiting threads that they can now attempt to acquire the lock.

- Ensuring Mutual Exclusion: The Mutex provides mutual exclusion by allowing only one thread to hold the lock at any given time. This prevents race conditions and data corruption from multiple threads modifying shared resources simultaneously.

- Priority and Fairness: Mutex implementations may incorporate strategies for thread priority and fairness to avoid scenarios where specific threads monopolize the lock, leading to the potential starvation of others.
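
The steps above can be sketched in C++ with `std::mutex` from the standard library. This is a minimal illustration, not a definitive implementation: the function name `count_with_mutex` and the shared counter are assumptions made up for the example. Several threads increment one counter, and each increment happens while holding the lock.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical helper: each of `threads` workers increments a shared
// counter `iterations` times, holding a mutex around every increment.
long count_with_mutex(int threads, int iterations) {
    long counter = 0;  // the shared resource
    std::mutex m;      // initialization: the lock that guards `counter`

    auto worker = [&] {
        for (int i = 0; i < iterations; ++i) {
            // Acquire the lock; the thread blocks here if another holds it.
            std::lock_guard<std::mutex> guard(m);
            ++counter;  // critical section
        }               // guard goes out of scope and releases the lock
    };

    std::vector<std::thread> pool;
    for (int t = 0; t < threads; ++t) pool.emplace_back(worker);
    for (auto& th : pool) th.join();
    return counter;
}
```

Calling `count_with_mutex(4, 10000)` should return 40000 on every run. Without the lock, the unsynchronized increments would be a data race and the total could come out lower.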

Limitations and Considerations of Mutex

Mutexes are a valuable tool for synchronization. Still, they have limitations that developers need to consider when implementing them into their code:

- Potential for Deadlocks: Improper use of Mutexes can lead to deadlocks, where threads are indefinitely blocked because they wait for each other to release locks.

- Performance Overhead: Mutexes may introduce performance overhead due to thread contention and context switching, particularly when blocked threads must be put to sleep and woken up again.

- Priority Inversion: Mutexes can lead to priority inversion, where a higher-priority thread is blocked by a lower-priority thread holding the lock.

- Complexity and Correctness: Implementing Mutexes correctly requires careful consideration of potential issues, making the code more complex and increasing the risk of errors.

- Scope of Protection: Mutexes protect specific critical sections, and if not used judiciously, other areas of the code might remain unprotected, leading to potential issues.

- Resource Ownership: Determining the ownership of resources protected by Mutexes can be challenging, especially in larger systems with multiple components.

- Limited Scalability: In scenarios with many threads contending for locks, Mutexes may exhibit limited scalability, impacting overall system performance.

- Programming Discipline: Correct usage of Mutexes relies on disciplined programming practices, and violations can result in subtle bugs that are challenging to identify and fix.

- Recursive Locking: Some Mutex implementations support recursive locking, but excessive use can make code more complicated to understand and maintain.

- Interrupt Handling: Mutexes might not be suitable for all scenarios, particularly in environments where interrupt handling or asynchronous events play a significant role.
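
The deadlock risk in the list above typically shows up when two threads take the same pair of locks in opposite order. One common way to avoid it in C++ is `std::lock`, which acquires several mutexes using a deadlock-avoidance algorithm. The sketch below assumes a made-up `Account` type and `transfer` function purely for illustration.

```cpp
#include <mutex>
#include <thread>

// Hypothetical account type used only for this illustration.
struct Account {
    std::mutex m;
    long balance = 0;
};

// Moves `amount` between accounts while holding both locks.
// std::lock acquires both mutexes without deadlocking, even when another
// thread calls transfer with the arguments in the opposite order.
void transfer(Account& from, Account& to, long amount) {
    if (&from == &to) return;  // never lock the same mutex twice
    std::lock(from.m, to.m);
    // adopt_lock: the guards take over the already-held locks and
    // release them when they go out of scope.
    std::lock_guard<std::mutex> g1(from.m, std::adopt_lock);
    std::lock_guard<std::mutex> g2(to.m, std::adopt_lock);
    from.balance -= amount;
    to.balance += amount;
}

// Runs opposing transfers in parallel and returns the combined balance.
long run_transfers(int rounds) {
    Account a, b;
    a.balance = 1000;
    b.balance = 1000;
    std::thread t1([&] { for (int i = 0; i < rounds; ++i) transfer(a, b, 1); });
    std::thread t2([&] { for (int i = 0; i < rounds; ++i) transfer(b, a, 1); });
    t1.join();
    t2.join();
    return a.balance + b.balance;
}
```

If `transfer` instead locked `from.m` and then `to.m` with two separate `lock()` calls, the two threads in `run_transfers` could each hold one mutex and wait forever for the other.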

What is Spinlock?

A Spinlock is a synchronization mechanism in concurrent programming where a thread repeatedly checks for the availability of a lock instead of entering a waiting state. Unlike Mutex, it employs a busy-waiting strategy, continuously spinning until the lock is acquired. Spinlocks are lightweight and efficient in low-contention scenarios but may lead to increased CPU usage and performance degradation under high contention. They are suitable for short critical sections where waiting for a lock might introduce more overhead than repeatedly checking for availability, making them beneficial in specific low-latency and real-time applications.

How Does a Spinlock Work?

Spinlocks work by utilizing a busy-waiting strategy, where a thread repeatedly checks for the availability of a lock instead of entering a waiting state. Here’s a step-by-step explanation of how a Spinlock typically works:

- Initialization: The Spinlock is initialized, usually with a state variable indicating whether the lock is currently held or available.

- Acquiring the Lock: When a thread wants to enter a critical section, it attempts to acquire the Spinlock by repeatedly checking the lock’s state in a tight loop. If the lock is available, the thread atomically sets it to indicate it is now held and proceeds into the critical section.

- Busy-Waiting: If another thread already holds the lock, the requesting thread enters a busy-waiting loop, continuously checking the lock’s state.

- Releasing the Lock: When the thread finishes its work in the critical section, it releases the Spinlock, making it available for other threads. Any thread that was busy waiting for the lock can now attempt to acquire it.

- Atomic Operations: The operations to check and set the lock’s state are typically atomic to avoid race conditions where multiple threads might simultaneously try to acquire the lock.

- Low Overhead: Spinlocks have low overhead when contention is low because threads can quickly acquire the lock without entering a waiting state.

- High Contention Considerations: In scenarios with high contention, where multiple threads are vying for the lock, spinlocks may increase CPU usage and reduce overall performance due to continuous spinning.

- Priority Inversion: Like Mutexes, spinlocks can also suffer from priority inversion, where a higher-priority thread might be delayed by a lower-priority thread holding the lock.
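
The acquire, busy-wait, and release steps above can be sketched with `std::atomic_flag`, whose `test_and_set` operation checks and sets the state in one atomic step. The class name `SpinLock` and the helper `count_with_spinlock` are illustrative names, not part of any library, and the sketch leaves out refinements that production spinlocks usually add.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// A minimal test-and-set spinlock sketch (the class name is illustrative).
class SpinLock {
    std::atomic_flag flag = ATOMIC_FLAG_INIT;  // clear means "available"
public:
    void lock() {
        // test_and_set atomically sets the flag and returns its old value.
        // An old value of true means another thread holds the lock: spin.
        while (flag.test_and_set(std::memory_order_acquire)) {
            // busy-wait: keep checking instead of going to sleep
        }
    }
    void unlock() {
        flag.clear(std::memory_order_release);  // make the lock available
    }
};

// Hypothetical helper: several threads increment one counter under the lock.
long count_with_spinlock(int threads, int iterations) {
    long counter = 0;
    SpinLock lock;

    auto worker = [&] {
        for (int i = 0; i < iterations; ++i) {
            lock.lock();
            ++counter;  // short critical section
            lock.unlock();
        }
    };

    std::vector<std::thread> pool;
    for (int t = 0; t < threads; ++t) pool.emplace_back(worker);
    for (auto& th : pool) th.join();
    return counter;
}
```

The critical section here is a single increment, which is the kind of very short operation where spinning is likely to cost less than putting a thread to sleep and waking it again.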

Limitations and Considerations of Spinlock

- High CPU Utilization: Spinlocks can lead to high CPU utilization, especially in high-contention scenarios, as threads continuously spin while waiting for the lock.

- Inefficiency Under Contention: In situations where contention for the lock is frequent, spinlocks may become inefficient compared to other synchronization mechanisms like Mutex, which can make threads wait rather than actively spin.

- Priority Inversion: Like Mutexes, spinlocks can suffer from priority inversion, where a higher-priority thread is delayed by a lower-priority thread holding the lock.

- Not Suitable for Long Operations: Spinlocks are more suitable for short critical sections. Using them for lengthy operations may lead to inefficient use of resources due to continuous spinning.

- Limited Scalability: The efficiency of spinlocks may diminish in systems with many threads contending for locks, impacting overall scalability.

- Potential for Deadlocks: Spinlocks can be prone to deadlocks if not used carefully, particularly in scenarios involving complex interactions between multiple locks or resources.

- Lack of Priority Awareness: Spinlocks do not inherently prioritize threads based on their importance, potentially leading to suboptimal thread scheduling.

- Resource Ownership: Determining the ownership of resources protected by spinlocks can be challenging, especially in larger systems with multiple components.

- No Blocking Mechanism: Unlike Mutexes, spinlocks lack a built-in blocking mechanism. Threads continuously spin, which might not be suitable for all scenarios, especially those involving power efficiency.

- Programming Discipline: Correct usage of spinlocks requires disciplined programming practices, and misuse can result in subtle bugs that are challenging to identify and fix.
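
Some of these costs can be reduced, though not eliminated. One common mitigation, which is an addition here rather than something described above, is to spin on plain reads and to yield the CPU after a bounded number of failed checks. The sketch below assumes an illustrative class name `YieldingSpinLock` and an arbitrary spin limit of 100 that would need tuning on a real system.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// Spinlock sketch that yields the CPU after a bounded number of spins.
// The class name and kSpinLimit value are assumptions for illustration.
class YieldingSpinLock {
    static constexpr int kSpinLimit = 100;  // assumed, untuned threshold
    std::atomic<bool> locked{false};
public:
    void lock() {
        int spins = 0;
        for (;;) {
            // exchange returns the previous value; false means we got the lock.
            if (!locked.exchange(true, std::memory_order_acquire)) return;
            // Lock is held: wait on read-only loads until it looks free.
            while (locked.load(std::memory_order_relaxed)) {
                if (++spins >= kSpinLimit) {
                    std::this_thread::yield();  // let another thread run
                    spins = 0;
                }
            }
        }
    }
    void unlock() { locked.store(false, std::memory_order_release); }
};

// Hypothetical helper: several threads increment one counter under the lock.
long count_with_yielding_spinlock(int threads, int iterations) {
    long counter = 0;
    YieldingSpinLock lock;

    auto worker = [&] {
        for (int i = 0; i < iterations; ++i) {
            lock.lock();
            ++counter;
            lock.unlock();
        }
    };

    std::vector<std::thread> pool;
    for (int t = 0; t < threads; ++t) pool.emplace_back(worker);
    for (auto& th : pool) th.join();
    return counter;
}
```

Yielding only hints to the scheduler that other threads may run. The waiting thread is still not blocked the way it would be on a Mutex, so long critical sections remain a poor fit.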

Key Differences Between Mutex and Spinlock

Now, let’s examine the key distinctions between Mutex and Spinlock:

| Feature | Mutex | Spinlock |
| --- | --- | --- |
| Waiting Strategy | Blocks waiting threads | Busy-waits for lock availability |
| Efficiency | Lower efficiency due to potential context switching | Higher efficiency in low-contention scenarios |
| CPU Utilization | Lower CPU utilization | Higher CPU utilization under contention |
| Suitability | Suitable for longer critical sections or when waiting is expected | Ideal for short critical sections or low-contention scenarios |
| Complexity | Generally more straightforward | May introduce complexity due to busy waiting and the potential for priority inversion |
| Resource Overhead | May have higher resource overhead due to blocking | Lower resource overhead, especially in low-contention scenarios |
| Priority Inversion | May suffer from priority inversion | May also suffer from priority inversion |
| Scalability | May exhibit better scalability in high-contention scenarios | May exhibit lower scalability in high-contention scenarios |
| Use Cases | Well-suited for scenarios with varying lengths of critical sections | Well-suited for scenarios with short and predictable critical sections |
| Waiting Mechanism | Threads enter a waiting state when the lock is not available | Threads actively spin while waiting for the lock |

Choosing Between Mutex and Spinlock

Choosing between Mutex and Spinlock depends on the specific requirements and characteristics of the concurrent programming scenario:

Choose Mutex When:

- Use it to protect longer critical sections or when waiting for the lock is expected.

- It is well-suited for scenarios with variable-length critical sections.

- It keeps CPU utilization lower under contention, because waiting threads block instead of spinning.

- It is more straightforward to use, although it can still suffer from priority inversion.

Choose Spinlock When:

- Use it for short critical sections or in low-contention scenarios.

- It provides higher efficiency and lower overhead in situations with minimal contention.

- It is suitable for scenarios where waiting for a lock might introduce more overhead than busy waiting.

- Be cautious about potentially higher CPU utilization, especially in high-contention scenarios.

When deciding between using Mutex and Spinlock for synchronization in concurrent programming, consider the length of critical sections, expected contention levels, and system characteristics to ensure effective synchronization.
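
Because the right choice depends on the workload, it can help to write the protected code so that the lock type is easy to swap and measure. The sketch below is one way to do that in C++, under the assumption that both lock types expose `lock()` and `unlock()`. The names `SpinLock`, `RunResult`, and `run_with` are made up for this example.

```cpp
#include <atomic>
#include <chrono>
#include <mutex>
#include <thread>
#include <vector>

// Minimal spinlock with the same lock()/unlock() interface as std::mutex.
struct SpinLock {
    std::atomic_flag flag = ATOMIC_FLAG_INIT;
    void lock() { while (flag.test_and_set(std::memory_order_acquire)) {} }
    void unlock() { flag.clear(std::memory_order_release); }
};

// Final counter value plus the wall-clock time the run took.
struct RunResult {
    long count;
    std::chrono::microseconds elapsed;
};

// Runs the same short critical section under whichever lock type is given.
template <typename Lock>
RunResult run_with(int threads, int iterations) {
    Lock lock;
    long counter = 0;
    auto start = std::chrono::steady_clock::now();

    auto worker = [&] {
        for (int i = 0; i < iterations; ++i) {
            std::lock_guard<Lock> guard(lock);  // works for both lock types
            ++counter;
        }
    };

    std::vector<std::thread> pool;
    for (int t = 0; t < threads; ++t) pool.emplace_back(worker);
    for (auto& th : pool) th.join();

    auto elapsed = std::chrono::duration_cast<std::chrono::microseconds>(
        std::chrono::steady_clock::now() - start);
    return {counter, elapsed};
}
```

Comparing `run_with<std::mutex>(4, 100000).elapsed` with `run_with<SpinLock>(4, 100000).elapsed` gives a rough, machine-specific indication only. The timings vary with core count, scheduler, and critical section length, so no particular outcome should be assumed.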

Conclusion

The choice between Mutex and Spinlock in concurrent programming hinges on the application’s specific requirements. Mutexes are simple, suitable for longer critical sections, and behave better under contention, albeit with potentially higher overhead. Spinlocks are efficient in low-contention scenarios with short critical sections but may lead to increased CPU utilization under heavy contention. Developers should carefully evaluate the characteristics of their application, considering critical section length, expected contention, and system characteristics, to make an informed decision between Mutex and Spinlock for optimal synchronization and performance.
