Introduction to CycleGAN

CycleGAN is an approach to image-to-image translation that converts images from one domain to another without the need for paired datasets. Introduced by Zhu et al. in 2017, it uses a cycle consistency loss so that an image translated to the other domain and back again stays close to the original. By removing the paired-data requirement of earlier methods, CycleGAN has opened up new possibilities for artistic style transfer and domain adaptation in various fields.

Table of Contents

- Introduction to Cycle GAN

- Understanding Generative Adversarial Network

- Explanation of Cycle GAN Architecture

- Training Process of Cycle GAN

- Applications of Cycle GAN

- Comparison with Other GAN Variants

- Challenges and Limitations of Cycle GAN

- Case Study: Translating Weather Conditions in Images

- Future Trends and Research Directions

What is Cycle GAN?

CycleGAN (Cycle-Consistent Generative Adversarial Network) is a type of GAN (Generative Adversarial Network) designed for image-to-image translation tasks. Unlike paired translation methods, which need a matching output image for every input image, CycleGAN can learn to translate images between domains without paired examples. It employs two generators and two discriminators, one pair for each translation direction. The cycle consistency loss encourages an image translated to the other domain and then back to the original domain to stay close to the original, preserving its essential characteristics.

Limitations of Traditional Image-to-Image Translation Methods

Traditional image-to-image translation methods, such as Pix2Pix, rely on paired datasets where each input image has a corresponding output image. This requirement for paired data makes it challenging to apply these methods to many real-world scenarios where such datasets are unavailable or difficult to obtain. Additionally, traditional methods often struggle with overfitting the training data, limiting their generalization ability to new images. They also tend to perform poorly when dealing with complex transformations, especially when there is no clear direct mapping between the source and target domains. CycleGAN addresses these limitations by learning from unpaired data and ensuring consistency through cycle consistency loss.
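To make the paired versus unpaired distinction concrete, here is a minimal Python sketch. The file names and the `sample_unpaired_batch` helper are hypothetical and chosen only for illustration. The sketch shows that an unpaired setup draws from each domain independently, so the two domains need not have matching items or even the same size.

```python
import random

# Hypothetical file lists. In a paired dataset, index i in both lists would
# show the same scene. In an unpaired dataset there is no such link.
domain_x = ["sunny_01.jpg", "sunny_02.jpg", "sunny_03.jpg"]
domain_y = ["rainy_01.jpg", "rainy_02.jpg", "rainy_03.jpg", "rainy_04.jpg"]


def sample_unpaired_batch(xs, ys, batch_size, rng):
    """Draw batch_size items from each domain independently of the other."""
    batch_x = [rng.choice(xs) for _ in range(batch_size)]
    batch_y = [rng.choice(ys) for _ in range(batch_size)]
    return batch_x, batch_y


rng = random.Random(0)
batch_x, batch_y = sample_unpaired_batch(domain_x, domain_y, 2, rng)
print(batch_x, batch_y)
```

A paired method would instead need `domain_x[i]` and `domain_y[i]` to depict the same content, which is exactly the requirement CycleGAN drops.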

Understanding Generative Adversarial Network

A Generative Adversarial Network (GAN) is a potent category of machine learning frameworks tailored for generative tasks, such as creating realistic images, text, or audio. Developed by Ian Goodfellow and colleagues in 2014, a GAN comprises two neural networks: a generator and a discriminator. These networks are trained simultaneously through adversarial training, competing against each other to improve their performance.

Generator

The generator’s primary role is to create data that resembles the real data distribution. It takes a random noise vector as input and generates a synthetic data sample, such as an image. The generator aims to produce data realistic enough to deceive the discriminator into believing it is genuine.

Discriminator

The discriminator’s role is to distinguish between real data samples and those generated by the generator. It takes an input (either a real data sample or a synthetic one from the generator) and outputs a probability indicating whether the input is real or fake. The discriminator aims to identify real versus fake samples accurately.

Adversarial Training

During training, the generator and discriminator engage in a two-player minimax game:

- The generator aims to minimize the probability that the discriminator correctly identifies its outputs as fake.

- The discriminator attempts to maximize the probability of accurately distinguishing between real and fake samples.
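The minimax game above can be illustrated with a small Python sketch. It assumes the standard GAN value function V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to increase and the generator tries to decrease. The probability values are made up for illustration.

```python
import math


def value_function(d_real, d_fake):
    """Estimate V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator probabilities assigned to real samples.
    d_fake: discriminator probabilities assigned to generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A confident, correct discriminator: high scores on real, low on fake.
strong_d = value_function(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
# A fooled discriminator: it outputs 0.5 for everything.
fooled_d = value_function(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

# The discriminator prefers the higher value; the generator prefers the lower.
print(strong_d > fooled_d)
```

When the generator improves, the discriminator's scores on fake samples drift toward those on real samples and the value falls, which is the direction the generator is pushing.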

Explanation of Cycle GAN Architecture

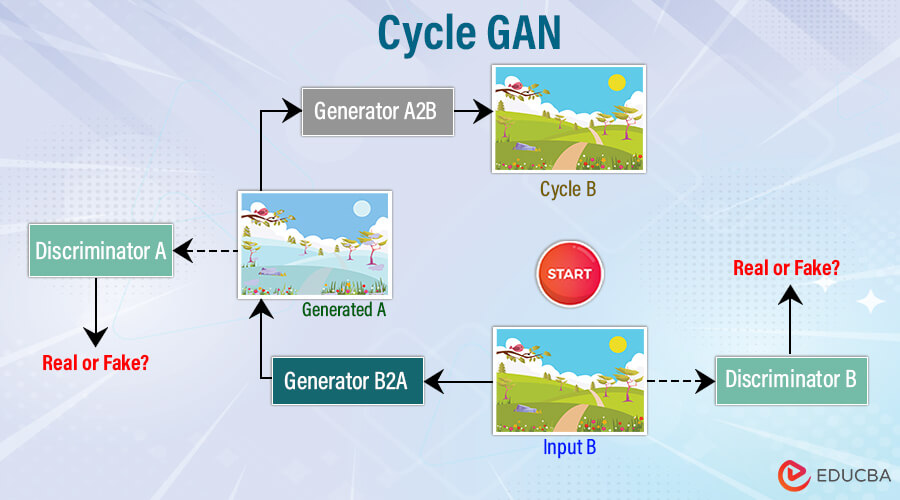

CycleGAN (Cycle-Consistent Generative Adversarial Network) extends the GAN architecture to unpaired image-to-image translation tasks. It consists of two generator-discriminator pairs that translate images between two domains while enforcing cycle consistency.

Generators

Cycle GAN employs two generators:

- Generator G: Translates images from domain X to domain Y.

- Generator F: Translates images from domain Y to domain X.

Discriminators

Cycle GAN includes two discriminators:

- Discriminator DX: Distinguishes between real images x from domain X and fake images F(y) generated by F.

- Discriminator DY: Distinguishes between real images y from domain Y and fake images G(x) generated by G.

Architecture Workflow

- Image Translation: The generator G takes an image x from domain X and generates an image G(x) in domain Y. Similarly, generator F takes an image y from domain Y and generates an image F(y) in domain X.

- Discrimination: Discriminators DX and DY evaluate the generated images to distinguish them from real images in domains X and Y.

- Cycle Consistency: Train the generators to ensure that translating an image to the other domain and then back results in the original image. For example, F(G(x)) should closely resemble x, and G(F(y)) should closely resemble y.
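The translation and cycle steps of this workflow can be sketched in Python. The two functions below are toy stand-ins for the generators, not neural networks: they assume, purely for illustration, that domain Y is a brighter version of domain X. The discriminators are left out to keep the sketch short.

```python
def G(x):
    """Toy stand-in for generator G (X -> Y): brighten every pixel."""
    return [p + 0.2 for p in x]


def F(y):
    """Toy stand-in for generator F (Y -> X): darken every pixel."""
    return [p - 0.2 for p in y]


x = [0.1, 0.4, 0.7]          # a tiny "image" from domain X
fake_y = G(x)                # image translation: X -> Y
reconstructed_x = F(fake_y)  # cycle back: Y -> X

# Cycle consistency: F(G(x)) should closely resemble x.
max_error = max(abs(a - b) for a, b in zip(x, reconstructed_x))
print(max_error < 1e-9)
```

The function names follow the article's G and F notation rather than Python naming conventions.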

Training Process of Cycle GAN

Training CycleGAN involves two primary objectives, adversarial training and cycle consistency training, usually complemented by an identity loss. These terms are then combined into a single total loss.

1. Adversarial Training:

- The generators G and F aim to produce images that can fool the discriminators DY and DX, respectively.

- The discriminators DX and DY aim to distinguish between real and generated images.

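The adversarial loss for generator G and discriminator DY is:

$$\mathcal{L}_{GAN}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{data}(y)}[\log D_Y(y)] + \mathbb{E}_{x \sim p_{data}(x)}[\log(1 - D_Y(G(x)))]$$

Similarly, the adversarial loss for generator F and discriminator DX is:

$$\mathcal{L}_{GAN}(F, D_X, Y, X) = \mathbb{E}_{x \sim p_{data}(x)}[\log D_X(x)] + \mathbb{E}_{y \sim p_{data}(y)}[\log(1 - D_X(F(y)))]$$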

2. Cycle Consistency Training:

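- The cycle consistency loss encourages an image that is translated to the other domain and then back again to come out close to the original.

- This loss is defined as:

$$\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim p_{data}(x)}[\lVert F(G(x)) - x \rVert_1] + \mathbb{E}_{y \sim p_{data}(y)}[\lVert G(F(y)) - y \rVert_1]$$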
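The cycle consistency loss described above can be sketched in Python as an L1 distance between each image and its round-trip reconstruction. The generators here are hypothetical toy functions on flat lists of pixel values, and the distance is averaged over pixels, which is a normalization choice made for this sketch.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, x, y):
    """Forward cycle |F(G(x)) - x| plus backward cycle |G(F(y)) - y|."""
    forward_cycle = l1_distance(F(G(x)), x)
    backward_cycle = l1_distance(G(F(y)), y)
    return forward_cycle + backward_cycle


def G(x):
    """Toy generator X -> Y: double every pixel."""
    return [2.0 * p for p in x]


def F_good(y):
    """Toy generator Y -> X: exact inverse of G."""
    return [0.5 * p for p in y]


def F_bad(y):
    """Toy generator Y -> X that discards all content."""
    return [0.0 for _ in y]


x, y = [0.25, 0.5], [0.5, 1.0]
print(cycle_consistency_loss(G, F_good, x, y))  # 0.0
print(cycle_consistency_loss(G, F_bad, x, y))   # 1.125
```

A generator pair that inverts each other pays no penalty, while a generator that throws away the input content is penalized in both cycle directions.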

3. Identity Loss:

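- Identity loss encourages each generator to leave an image unchanged when that image already belongs to the generator's output domain, which helps preserve color and structure.

- For example, an image x from domain X passed through generator F (which maps Y to X) should remain unchanged, and likewise G(y) should stay close to y:

$$\mathcal{L}_{identity}(G, F) = \mathbb{E}_{y \sim p_{data}(y)}[\lVert G(y) - y \rVert_1] + \mathbb{E}_{x \sim p_{data}(x)}[\lVert F(x) - x \rVert_1]$$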
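The identity loss described above can be sketched in Python in the same style. The two generators below are hypothetical toy functions: one shifts every pixel regardless of input, the other leaves its input alone. The L1 distance is averaged over pixels as a convenience for this sketch.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def identity_loss(G, F, x, y):
    """G maps X -> Y, so G(y) should stay y; F maps Y -> X, so F(x) should stay x."""
    return l1_distance(G(y), y) + l1_distance(F(x), x)


def tinting_generator(img):
    """Hypothetical generator that shifts every pixel, even when it should not."""
    return [p + 0.5 for p in img]


def preserving_generator(img):
    """Hypothetical generator that leaves its input untouched."""
    return list(img)


x, y = [0.25, 0.5], [0.5, 1.0]
print(identity_loss(tinting_generator, tinting_generator, x, y))        # 1.0
print(identity_loss(preserving_generator, preserving_generator, x, y))  # 0.0
```

The tinting generator is penalized because it changes images that are already in its output domain, which is the kind of color shift this loss term is meant to discourage.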

4. Total Loss Function:

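The total loss function for CycleGAN is a combination of adversarial loss, cycle consistency loss, and identity loss:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda_{cyc}\,\mathcal{L}_{cyc}(G, F) + \lambda_{id}\,\mathcal{L}_{identity}(G, F)$$

Here, $\lambda_{cyc}$ and $\lambda_{id}$ are the weights for the cycle consistency and identity losses, respectively.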
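As a rough illustration of how the terms are combined, the following Python sketch computes the weighted sum for the generators. The function name, the argument names, and the default weights of 10 and 5 are assumptions made for this example. They are in the range often reported for CycleGAN but are not taken from this article and would normally be tuned per task.

```python
def total_generator_loss(adv_g, adv_f, cycle, identity,
                         lambda_cyc=10.0, lambda_id=5.0):
    """Weighted sum of the loss terms: both adversarial terms, plus the
    cycle consistency and identity terms scaled by their weights."""
    return adv_g + adv_f + lambda_cyc * cycle + lambda_id * identity


# Made-up per-batch loss values, for illustration only.
loss = total_generator_loss(adv_g=0.75, adv_f=0.5, cycle=0.125, identity=0.0625)
print(loss)  # 2.8125
```

Raising the cycle weight pushes the generators toward faithful reconstructions, while lowering it gives the adversarial terms more influence over the output.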

Applications of Cycle GAN

CycleGAN has a wide range of applications due to its ability to perform unpaired image-to-image translation. Some of the notable applications include:

- Artistic Style Transfer:

CycleGAN can convert photographs into various artistic styles, such as transforming a photo into a painting in the style of different famous artists. This transfer allows for the creation of new artwork and the preservation of artistic styles.

- Image Super-Resolution:

CycleGAN can enhance the resolution of low-quality images by learning a mapping from low-resolution to high-resolution images. This is useful in medical imaging, satellite imagery, and other fields where high resolution is crucial.

- Image Denoising:

This application removes noise from images, making them clearer and more visually appealing. It is valuable in photography, surveillance, and any field requiring clean, high-quality images.

- Domain Adaptation:

Adapting images from one domain to another improves machine learning models’ performance. For example, CycleGAN can adapt synthetic images to real-world images, enhancing the training of models used in autonomous driving.

- Medical Image Synthesis:

It generates medical images from different modalities, such as converting MRI to CT scans. This application helps in multi-modal medical image analysis and improves diagnostic capabilities.

Comparison with Other GAN Variants

1. Pix2Pix:

- Paired Data Requirement: Pix2Pix requires paired training data, where each input image has a corresponding output image, which can be a limitation when such data is unavailable.

- Use Case: Suitable for tasks with paired datasets, such as translating maps to satellite images or sketches to photos.

- CycleGAN Advantage: CycleGAN does not require paired data, making it more versatile for unpaired image translation tasks.

2. DCGAN (Deep Convolutional GAN):

- Architecture: DCGAN uses convolutional neural networks (CNNs) for both the generator and discriminator, making it well-suited for generating realistic images.

- Use Case: Primarily used for generating new images from a random noise vector.

- CycleGAN Difference: While DCGAN focuses on generating new images, CycleGAN translates images between domains with the added complexity of cycle consistency.

3. StarGAN:

- Multi-Domain Translation: StarGAN can perform image-to-image translation across multiple domains using a single model, unlike CycleGAN, which typically focuses on two domains.

- Use Case: Suitable for tasks requiring translation between multiple styles or attributes, such as changing hair color or facial expressions in images.

- CycleGAN Limitation: CycleGAN is typically applied to tasks involving two domains, though it can be extended to multiple domains by training additional models.

4. WGAN (Wasserstein GAN):

- Stability and Convergence: WGAN improves the stability of GAN training by using the Wasserstein distance as a metric for the loss function, addressing issues of mode collapse and training instability.

- Use Case: Generating high-quality images with more stable and reliable training processes.

- CycleGAN Enhancement: Techniques from WGAN, such as improved loss functions, can be integrated into CycleGAN to enhance training stability and image quality.

5. StyleGAN:

- High-Resolution and Control: StyleGAN is known for generating high-resolution images with detailed control over the generated image’s style and attributes.

- Use Case: Generating high-quality, photorealistic images for applications like face generation.

- CycleGAN Focus: While StyleGAN excels in generating high-quality single-domain images, CycleGAN is tailored for translating images between different domains, focusing on maintaining consistency and identity.

Challenges and Limitations of Cycle GAN

1. Complex Image Transformations:

- Limitation: CycleGAN may struggle with highly complex or intricate transformations, especially when the content and style of the two domains are significantly different.

- Example: Translating between different artistic styles or converting highly detailed photographs to abstract art.

2. Mode Collapse:

- Limitation: Like other GANs, CycleGAN can experience mode collapse, where the generator produces only a limited variety of outputs, resulting in less diverse images.

- Consequence: This limits the model’s effectiveness, especially in applications requiring a wide range of outputs.

3. Training Instability:

- Limitation: Training GANs, including CycleGAN, can be unstable and sensitive to hyperparameter settings, making it challenging to achieve optimal results consistently.

- Challenge: Requires careful tuning and experimentation to find the right balance for training.

4. High Computational Cost:

- Limitation: Training CycleGAN models can be computationally expensive and time-consuming, especially for high-resolution images and large datasets.

- Requirement: Access to powerful GPUs and extensive computational resources.

5. Dependence on Large Datasets:

- Limitation: Although it does not need paired datasets, CycleGAN requires large amounts of data from each domain to learn effective mappings.

- Data Scarcity: This can be a significant limitation in domains where data is scarce or difficult to collect.

6. Identity Map Failure:

- Limitation: CycleGAN can sometimes fail at identity mapping, where an image that already belongs to a generator's output domain should remain unchanged when passed through that generator.

- Effect: This can result in undesirable color shifts or structural changes in the output images.

7. Overfitting to Training Data:

- Limitation: CycleGAN can overfit the training data, especially when the data is not diverse enough, leading to poor generalization on unseen data.

- Consequence: This limits the model’s applicability to real-world scenarios where new, unseen data is common.

8. Hyperparameter Sensitivity:

- Limitation: CycleGAN’s performance is highly sensitive to the choice of hyperparameters, such as the weights for the cycle consistency loss and identity loss.

- Experimentation: Experimentation is required to find the optimal settings for specific tasks.

Case Study: Translating Weather Conditions in Images

Background

In applications such as autonomous driving and remote sensing, it is essential to have training data that covers various weather conditions. Generating images that depict different weather conditions from the same scene can significantly enhance the robustness and performance of machine learning models.

Objective

To translate images of outdoor scenes between different weather conditions (e.g., sunny to rainy, rainy to snowy) using CycleGAN, facilitating the generation of diverse training data for autonomous driving systems.

Method

- Dataset Collection:

Collect unpaired images of outdoor scenes under different weather conditions, including sunny, rainy, and snowy.

- Training Process:

- Train the CycleGAN model with images from different weather conditions as separate domains. For example, domain X could represent sunny, and domain Y could represent rainy weather.

- Employ the cycle consistency loss to ensure that translating an image to a different weather condition and back to the original condition maintains the scene’s integrity.

- Evaluation:

- Evaluate the quality of the generated images by comparing them against real images of the same scenes under different weather conditions.

- Use quantitative metrics such as the Fréchet Inception Distance (FID) to assess how close the distribution of generated images is to that of real images; a lower FID indicates closer distributions.
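To give an intuition for the FID metric mentioned in the evaluation step, here is a deliberately simplified Python sketch. It computes the Fréchet distance between two one-dimensional Gaussians fitted to scalar features. This is an assumption made to keep the example small: the real FID uses high-dimensional features from an Inception network together with full covariance matrices. The feature values are made up.

```python
import statistics


def frechet_distance_1d(real_features, generated_features):
    """Fréchet distance between two 1-D Gaussians fitted to the inputs.

    For scalar features the formula reduces to
    (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2.
    """
    mu_r = statistics.fmean(real_features)
    mu_g = statistics.fmean(generated_features)
    sigma_r = statistics.pstdev(real_features)
    sigma_g = statistics.pstdev(generated_features)
    return (mu_r - mu_g) ** 2 + (sigma_r - sigma_g) ** 2


real = [1.0, 2.0, 3.0, 4.0]
close_match = [1.0, 2.0, 3.0, 4.0]  # same statistics as the real features
shifted = [3.0, 4.0, 5.0, 6.0]      # same spread, mean moved by 2

print(frechet_distance_1d(real, close_match))
print(frechet_distance_1d(real, shifted))
```

Identical feature statistics give a distance of zero, and the distance grows as the generated features drift away from the real ones, which is why lower scores are better.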

Results

- The trained CycleGAN model successfully translates images between sunny, rainy, and snowy conditions.

- The generated images realistically depict weather changes, such as adding rain effects to sunny scenes or snow to rainy scenes while preserving the original scene’s content and structure.

- The evaluation shows that the generated images are visually convincing and comparable to real images captured under different weather conditions.

Future Trends and Research Directions

1. Improved Training Stability and Efficiency

Challenge: Training GANs, including CycleGAN, is often unstable and computationally intensive.

Research Directions:

- Advanced Optimization Techniques: Developing more robust optimization algorithms to enhance training stability and reduce hyperparameter sensitivity.

- Efficient Architectures: Designing more efficient network architectures that require less computational power and training time, potentially through techniques like model pruning and quantization.

2. Enhanced Quality of Generated Images

Challenge: Preserving fine details and achieving high fidelity in the generated images remain challenging.

Research Directions:

- High-Resolution Image Generation: Improving CycleGAN’s capability to handle high-resolution images with finer details.

- Combining GANs with Other Techniques: Integrating GANs with other deep learning techniques, such as super-resolution networks or attention mechanisms, to enhance the quality and realism of the generated images.

3. Multi-Domain and Multi-Modal Translation

Challenge: CycleGAN typically handles translation between two domains, limiting its flexibility in multi-domain applications.

Research Directions:

- Multi-Domain GANs: Extending CycleGAN to support translations across multiple domains utilizing a single model, similar to StarGAN, but with cycle consistency mechanisms.

- Multi-Modal Translation: Enabling translation between visual domains and different modalities (e.g., images to text, images to audio).

4. Integration with Reinforcement Learning

Challenge: Leveraging the strengths of both GANs and reinforcement learning (RL) to address complex tasks.

Research Directions:

- RL-GAN Integration: Combining CycleGAN with RL techniques to enhance applications such as robotics, where image translation can be used for sim-to-real transfer, improving the performance of RL agents in real-world environments.

5. Self-supervised and Unsupervised Learning

Challenge: Reducing the reliance on large labeled datasets for training.

Research Directions:

- Self-Supervised Learning: Utilizing self-supervised learning techniques to enable CycleGAN to learn effective image translations with minimal labeled data.

- Unsupervised Domain Adaptation: Developing methods for unsupervised domain adaptation to enhance the model’s ability to generalize to new, unseen domains.

Conclusion

CycleGAN represents a significant advancement in image-to-image translation, enabling the transformation of images between unpaired domains with remarkable fidelity. Its architecture, incorporating generators, discriminators, and cycle consistency, allows for versatile applications ranging from artistic style transfer to medical imaging. Despite challenges such as training instability and computational demands, ongoing research focuses on enhancing its capabilities and addressing ethical concerns. As these models evolve, they promise to unlock new possibilities and applications. This research pushes the boundaries of what machine learning and computer vision can achieve.

Frequently Asked Questions (FAQs)

Q1. How does CycleGAN differ from traditional GANs?

Answer: A traditional GAN generates new samples from random noise, whereas CycleGAN translates an existing image from one domain to another. Unlike paired translation methods such as Pix2Pix, it can be trained on unpaired datasets. This is possible thanks to its cycle consistency loss, which encourages the original content to be preserved during translation.

Q2. Can CycleGAN be used for real-time applications?

Answer: While CycleGAN can produce high-quality image translations, its computational requirements might limit real-time applications. However, real-time implementations are becoming more feasible with advances in model optimization and hardware acceleration.

Q3. What are some practical applications of CycleGAN in industries other than art and photography?

Answer: Beyond art and photography, CycleGAN has applications in medical imaging (e.g., converting MRI scans to CT scans), environmental monitoring (e.g., translating satellite images across seasons), and enhancing training data diversity for machine learning models in autonomous driving and robotics.
