Updated June 15, 2023

Introduction to Hadoop vs Splunk

Hadoop, in simple terms, is a framework for processing ‘Big Data’. It uses a distributed file system and the MapReduce programming model to process large volumes of data.

Splunk is a monitoring tool that offers a platform for log analytics: it analyzes log data and creates visualizations from it. Splunk provides software for indexing, searching, monitoring, and analyzing machine data through a web-based interface.

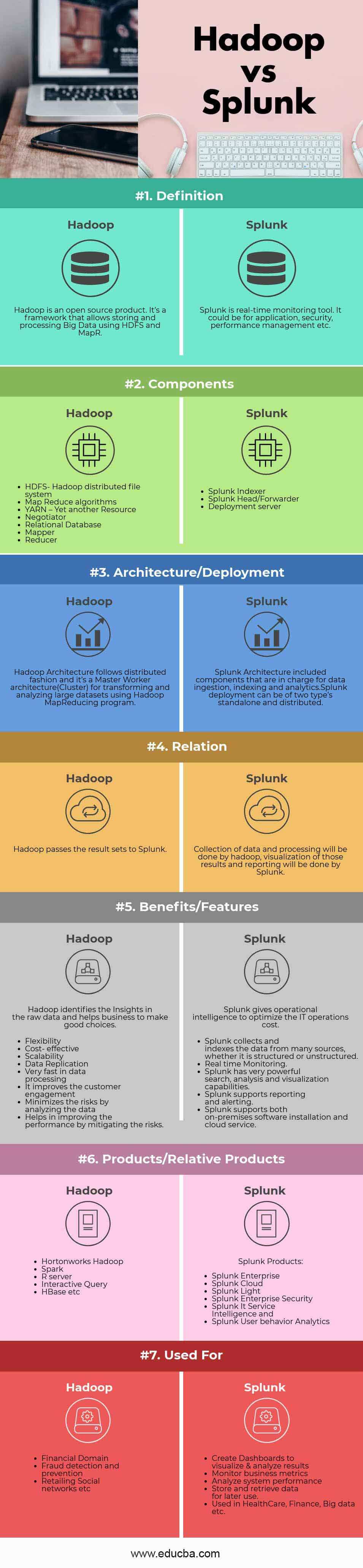

Head to Head Comparison Between Hadoop and Splunk (Infographics)

Below are the 7 comparisons between Hadoop and Splunk:

Key Differences Between Hadoop and Splunk

The key differences between Hadoop and Splunk are as follows:

- Hadoop surfaces insights and hidden patterns by processing and analyzing Big Data coming from various sources, such as web applications and telematics data.

- In a Hadoop cluster, the vital components are the Hadoop Distributed File System (HDFS), Hadoop MapReduce, and Yet Another Resource Negotiator (YARN). A Hadoop setup includes a NameNode on the master node and DataNodes on the worker nodes, which together form the backbone of the cluster.

- NameNode: The NameNode is a background process that runs on the Hadoop master (head) node. It stores the metadata for the data held on all the worker nodes in the cluster, such as file path, file name, block ID, and block location.

- DataNode: The DataNode is a background process that runs on the worker (slave) nodes of a Hadoop cluster. During processing, input files are broken into smaller chunks called blocks, and these blocks are stored on DataNodes. Because DataNodes store the actual data, they need more disk space. The DataNode is responsible for read and write operations on its disks.

- Splunk's work can be divided into three phases. Phase 1: gathering data from as many sources as necessary. Phase 2: transforming the data into solutions. Phase 3: representing the answer in visual form, such as reports, interactive charts, or graphs.

- Splunk starts with indexing, which means gathering data from all the sources and combining it into centralized indexes.

- Indexes help Splunk search the logs from all servers quickly. Splunk stores indexed and correlated real-time data in a searchable repository, from which it can generate graphs, reports, alerts, visualizations, and dashboards.

- MapReduce is a software framework for writing applications that process large amounts of data in parallel on very large clusters. MapReduce consists of two different tasks: the Map task and the Reduce task.

- Map Task: The Mapper converts the input data into intermediate data sets in which individual data elements are broken down into key-value pairs (tuples).

- Reduce Task: The Reducer takes the Mapper's output as input and combines those data tuples into a smaller set of tuples. The Reducer always runs after the Mapper.

- The other components of the classic (Hadoop 1.x) MapReduce framework are the Job Tracker and the Task Tracker. It consists of a single master Job Tracker and one slave Task Tracker per cluster node. The master is responsible for monitoring resources and for scheduling and tracking the jobs on the slaves. Each Task Tracker executes tasks as directed by the master and periodically reports task status back to it.

- In Splunk, by contrast, indexing is the major process for analyzing logs. Splunk can easily index data from many sources, such as files and directories, network traffic, and machine data. Splunk can handle time-series data as well.

- Splunk uses standard APIs to connect to applications and devices and fetch the source data. For databases, Splunk offers DB Connect, which connects to many relational databases. Users can use it to import structured data and then perform powerful indexing, analysis, dashboarding, and visualization.
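The NameNode and DataNode points above can be made concrete with a small sketch. It is only an illustration, not HDFS code: the 128 MB block size is an assumed value, the host names and the functions `split_into_blocks` and `place_blocks` are hypothetical, and placement is plain round-robin, whereas a real cluster also replicates each block.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes (128 MB)


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks a file of file_size bytes is cut into."""
    if file_size <= 0:
        return []
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block may be smaller
    return sizes


def place_blocks(path, file_size, data_nodes, block_size=BLOCK_SIZE):
    """Build NameNode-style metadata: which block lives on which DataNode.

    Placement is simple round-robin, a simplification for illustration.
    """
    metadata = []
    for block_id, size in enumerate(split_into_blocks(file_size, block_size)):
        metadata.append({
            "path": path,
            "block_id": block_id,
            "size": size,
            "location": data_nodes[block_id % len(data_nodes)],
        })
    return metadata


if __name__ == "__main__":
    nodes = ["datanode-1", "datanode-2", "datanode-3"]  # hypothetical hosts
    for entry in place_blocks("/logs/web.log", 300 * 1024 * 1024, nodes):
        print(entry)
```

The list returned by `place_blocks` plays the role of the NameNode's metadata (path, block ID, location), while the blocks themselves would sit on the DataNodes.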
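To illustrate the Map and Reduce tasks described above, here is a minimal single-process word count. It is a sketch of the programming model only and does not use Hadoop's own API; the function names `map_task`, `shuffle`, `reduce_task`, and `word_count` are chosen for this example.

```python
from collections import defaultdict


def map_task(line):
    """Mapper: turn one input line into (word, 1) key-value pairs."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group the mapper output by key before it reaches the reducers."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_task(key, values):
    """Reducer: combine all values for one key into a single tuple."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    mapped = [pair for line in lines for pair in map_task(line)]
    return dict(reduce_task(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["error disk full", "error network down"]))
```

On a real cluster the mappers and reducers would run in parallel on different nodes; here they run one after another so that the data flow is easy to follow.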
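The idea of gathering events from many sources into a central, searchable index can be sketched as a toy inverted index. This is not how Splunk stores its indexes internally; the class `ToyIndex` and the sample sources are hypothetical and only show why an index makes log search fast.

```python
from collections import defaultdict


class ToyIndex:
    """A tiny inverted index: each term maps to the ids of events containing it."""

    def __init__(self):
        self.events = []
        self.terms = defaultdict(set)

    def add(self, source, raw):
        """Store one raw log event and index every whitespace-separated term."""
        event_id = len(self.events)
        self.events.append({"source": source, "raw": raw})
        for term in raw.lower().split():
            self.terms[term].add(event_id)
        return event_id

    def search(self, *terms):
        """Return the events that contain every given term, in insertion order."""
        if not terms:
            return []
        ids = set.intersection(*(self.terms.get(t.lower(), set()) for t in terms))
        return [self.events[i] for i in sorted(ids)]


if __name__ == "__main__":
    index = ToyIndex()
    index.add("web01", "ERROR disk full")
    index.add("web02", "INFO disk ok")
    index.add("db01", "ERROR timeout")
    for event in index.search("error"):
        print(event["source"], event["raw"])
```

A search only touches the sets for the requested terms instead of scanning every log line, which is the benefit the indexing bullets describe.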

Hadoop and Splunk Comparison Table

Below is the comparison table between Hadoop and Splunk.

| Basis of Comparison | Hadoop | Splunk |
| --- | --- | --- |
| Definition | Hadoop is an open-source product. It is a framework for storing and processing Big Data using HDFS and MapReduce. | Splunk is a real-time monitoring tool. It can be used for application, security, and performance management, among other things. |
| Components | | |
| Architecture/Deployment | Hadoop follows a distributed, master-worker (cluster) architecture for transforming and analyzing large data sets with Hadoop MapReduce programs. | Splunk's architecture includes components responsible for data ingestion, indexing, and analytics. A Splunk deployment can be of two types: standalone or distributed. |
| Relation | Hadoop passes the result sets to Splunk. | Hadoop collects and processes the data; Splunk visualizes those results and reports on them. |
| Benefits/Features | Hadoop identifies insights in the raw data and helps businesses make good choices. | Splunk provides operational intelligence to optimize the cost of IT operations. |
| Products/Related Products | Splunk Products: | |
| Used For | | |

Conclusions

Hadoop and Splunk both help in extracting quick insights from Big Data. As discussed above, Hadoop passes its results to Splunk, and with that information Splunk creates visualizations and displays them through a web-based interface.
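One possible way to hand job results over, assuming they are exported as a file that a file-monitoring input could pick up, is to write them as one JSON object per line. The function `results_to_json_lines` and the field names `job`, `key`, and `value` are hypothetical choices for this sketch, not a format that either product prescribes.

```python
import json


def results_to_json_lines(results, job_name):
    """Serialize {key: value} job results as one JSON object per line."""
    lines = []
    for key in sorted(results):
        record = {"job": job_name, "key": key, "value": results[key]}
        lines.append(json.dumps(record))
    return "\n".join(lines)


if __name__ == "__main__":
    counts = {"error": 2, "disk": 1}  # e.g. the output of a word-count job
    print(results_to_json_lines(counts, "wordcount"))
```

Keeping each record on its own line means every result can be treated as a separate event when the file is read.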
