Introduction to Allocation of Frames in OS

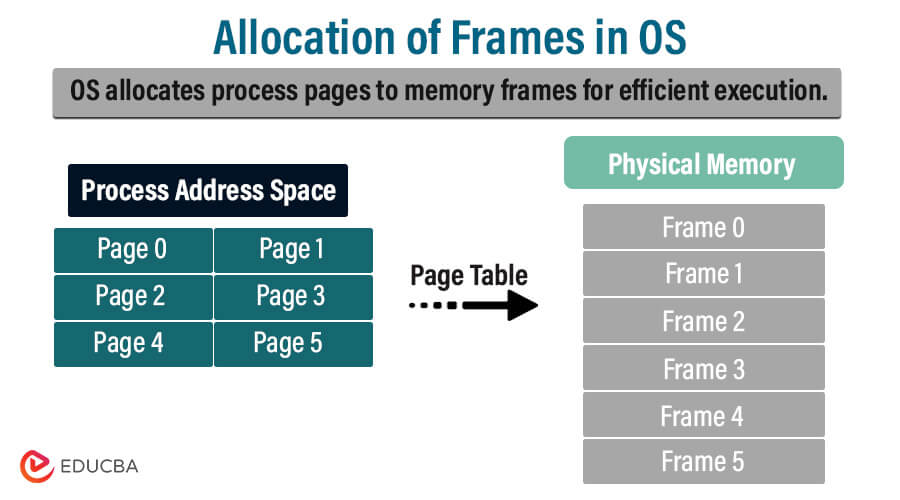

Frame allocation is an essential aspect of modern operating systems (OS), particularly in virtual memory systems implemented with demand paging. Demand paging requires two algorithms: a page-replacement algorithm and a frame-allocation algorithm. The operating system divides main memory into fixed-size blocks called frames, and the frame-allocation algorithm decides how many of these frames each process receives. Frames are assigned to processes as required, which lets multiple processes run simultaneously. The operating system implements various frame allocation algorithms to allocate frames efficiently among running processes.

Frame allocation is governed by two primary constraints:

- The total number of frames allocated to processes cannot exceed the number of frames available.

- Each process must be allocated at least a minimum number of frames. This limit is crucial for two reasons:

  - Allocating fewer frames increases the page-fault rate, which slows process execution.

  - There must be enough frames to hold all the pages that any single instruction can reference.

Table of Contents

- Introduction to Allocation of Frames in OS

- What Are Frames

- Importance of Frame Allocation

- Techniques for Frame Allocation

- Frame Allocation Algorithms in the OS

- Advanced Techniques and Optimizations

- Case Studies and Real-World Implementations

- Comparison of Memory Management Strategies in Different OS

- Real-world Examples

- Challenges and Future Trends

Key takeaways

- Frame allocation optimizes memory usage and improves process performance in the operating system (OS).

- Frames divide physical memory into fixed-size memory blocks.

- The operating system assigns frames to each corresponding process.

- Frame allocation supports memory swapping and page replacement to accommodate incoming processes.

What Are Frames?

In paging, a process is divided into equal-sized pages, which are brought in from secondary memory as needed. Each page is stored in a frame, an equal-sized storage block in main memory.

Frames are the fixed-size blocks of physical memory that hold the pages of running processes. Frame sizes typically range from kilobytes to megabytes, and 4 KB is a common size. Each frame is a contiguous block of physical memory addresses.

Frames store the data and program instructions that processes and applications need to execute. They play a pivotal role in organizing and managing system memory efficiently, providing a structured framework for allocating memory to each process. Operating systems employ different frame allocation methods to manage memory according to each process's requirements.

Importance of Frame Allocation

Physical memory (RAM) is a fundamental but limited resource on a computer. Frame allocation is the task of dividing RAM into fixed-size blocks (frames) and assigning these frames to different processes running on the system. The fundamental importance of allocating frames is as follows:

- Optimize memory usage: Frame allocation lets the operating system make efficient use of limited memory. By allocating frames according to each process's requirements, the operating system maximizes memory usage and minimizes waste, improving overall system performance.

- Fair resource allocation: Frame allocation lets the operating system distribute memory fairly among competing processes. Allocating frames according to factors such as size, priority, and resource demand helps prevent memory wastage and starvation, ensuring that each process receives the resources it needs to execute.

- Minimize page faults: When a process accesses a memory page that is not currently in physical memory, it incurs a page fault, and the system must fetch the page from secondary storage. Effective frame allocation strategies minimize page faults by keeping frequently accessed data in memory, improving system responsiveness and performance.

- Support multitasking environments: Effective frame allocation is necessary for running multiple processes and handling diverse workloads smoothly in multitasking and multi-user environments. By managing memory resources carefully, the operating system lets various tasks and user applications run smoothly without conflicts.
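The following minimal Python sketch makes the link between allocated frames and page faults concrete. It counts the page faults one process incurs under LRU replacement as its frame allocation grows. The reference string is a made-up example, and LRU is only one possible replacement policy. `count_lru_faults` is a hypothetical helper, not an OS API.

```python
from collections import OrderedDict

def count_lru_faults(references, num_frames):
    """Count page faults for a page reference string under LRU replacement."""
    frames = OrderedDict()  # resident pages, ordered least -> most recently used
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)  # hit: mark page as most recently used
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = None
    return faults

# Hypothetical sequence of pages referenced by one process
refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
for n in (1, 2, 3, 4):
    print(n, "frames ->", count_lru_faults(refs, n), "faults")
# 1 frames -> 20 faults
# 2 frames -> 17 faults
# 3 frames -> 12 faults
# 4 frames -> 8 faults
```

Under LRU, adding frames never increases the fault count. Some other policies, such as FIFO, can occasionally fault more with more frames (Belady's anomaly).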

Techniques for Frame Allocation

- Dynamic Allocation:

Dynamic allocation assigns frames according to the requirements of each executing process. Memory blocks are allocated to processes dynamically in response to system conditions and process requirements. This technique lets the operating system optimize memory usage by allocating and deallocating frames as each process needs them.

Benefits:

- Flexibility: Dynamic allocation adapts to varying workloads and manages resources efficiently.

- Efficient Resource Utilization: The operating system dynamically allocates frames to processes based on their individual demands. This minimizes memory wastage and improves the overall system performance.

Limitation:

- Overhead: Dynamically allocating and deallocating memory adds memory-management overhead, which can affect system performance.

- Complexity: Dynamic allocation requires complex algorithms and data structures to track memory availability and manage frame allocation effectively.

- Contiguous Allocation:

This technique allocates a contiguous block of physical memory to each process. Because each process occupies a single contiguous range of addresses, memory addressing and access are simple. In its fixed-partition form, memory is divided into equal-sized contiguous chunks and each process is allocated one chunk.

Benefits:

- Simplified memory management: Contiguous allocation reduces overhead because each process occupies one contiguous block of memory, which simplifies memory access and addressing.

- Efficient utilization: When each block is sized to the process's request, contiguous allocation leaves little unused space inside allocated blocks, which limits internal fragmentation.

Limitation:

- Limited scalability: Contiguous allocation scales poorly as a process's memory demand grows, because it needs a single free block large enough to satisfy the request.

- Fragmentation: Contiguous allocation can suffer external fragmentation. Free memory becomes split into small non-contiguous blocks, which makes large memory requests difficult to satisfy.
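A first-fit allocator over a list of free "holes" illustrates external fragmentation. This is a minimal sketch. The hole list, the memory units, and the first-fit policy are assumptions for illustration, and best-fit and worst-fit are common alternatives. `first_fit` is a hypothetical helper.

```python
def first_fit(holes, size):
    """Allocate `size` units from the first free hole that is large enough.

    holes: list of (start, length) free blocks, sorted by start address.
    Returns the start address, or None if no single hole is big enough.
    """
    for i, (start, length) in enumerate(holes):
        if length >= size:
            if length == size:
                holes.pop(i)  # hole is used up exactly
            else:
                holes[i] = (start + size, length - size)  # shrink the hole
            return start
    return None

# Hypothetical memory with three scattered free holes
holes = [(10, 20), (40, 15), (70, 25)]
print(sum(length for _, length in holes))  # 60 units free in total
print(first_fit(holes, 30))  # None: no single contiguous hole of 30 units
print(first_fit(holes, 18))  # 10: placed at the start of the first hole
print(holes)                 # [(28, 2), (40, 15), (70, 25)]
```

Even though 60 units are free, the 30-unit request fails because no single hole is large enough.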

- Non-contiguous Allocation:

Non-contiguous memory allocation gives a process memory in pieces that need not be adjacent to one another. In other words, a process does not depend on a single linear range of memory. Compared with contiguous allocation, this technique generally uses memory more efficiently because it can draw on any available free blocks.

Benefits:

- Flexibility: Non-contiguous allocation adapts to dynamic memory requests and reduces fragmentation by using available memory blocks anywhere in the address space.

- Scalability and better resource utilization: Because it can use free memory blocks wherever they are, this technique wastes little space and scales readily with diverse workloads and memory requirements.

Limitation:

- External fragmentation: Some non-contiguous schemes, such as segmentation, can still suffer external fragmentation, where free memory breaks into tiny, non-adjacent pieces over time. Paging avoids external fragmentation but incurs internal fragmentation in each process's last page.

- Memory management: Locating and tracking free blocks scattered across memory adds management overhead.
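Paging is the most common non-contiguous scheme. Under paging, a process's pages can be placed in whatever frames happen to be free. The sketch below assumes a plain Python list as the free-frame list and returns a dictionary page table that maps page numbers to frame numbers. Both representations, and the name `allocate_pages`, are illustrative.

```python
def allocate_pages(free_frames, num_pages):
    """Give a process `num_pages` frames taken from anywhere in the free list.

    Returns a page table (page number -> frame number). The frames need not
    be adjacent, which is the point of non-contiguous allocation.
    """
    if num_pages > len(free_frames):
        raise MemoryError("not enough free frames")
    return {page: free_frames.pop(0) for page in range(num_pages)}

# Hypothetical free-frame list after other processes have come and gone
free_frames = [3, 7, 8, 12, 20]
page_table = allocate_pages(free_frames, 4)
print(page_table)   # {0: 3, 1: 7, 2: 8, 3: 12}
print(free_frames)  # [20]
```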

- Demand Paging and Swapping Technique:

The demand paging and swapping technique loads data into memory only when it is required and removes data from memory when space becomes limited. Demand paging brings a page into memory only when it is accessed. Swapping temporarily moves all or part of a process to secondary storage.

Benefits:

- Optimized memory utilization: Data is brought into memory only when it is needed and removed when memory becomes full. This reduces memory wastage and increases overall system efficiency.

- Improved responsiveness: Because data is loaded only when required, initial process loading time is shorter and the system responds faster.

Limitations:

- Under heavy load, disk I/O operations and memory management negatively impact system performance.

- Swapping requires space on secondary storage (swap space) to hold swapped-out processes, and this space must be accounted for in the system configuration.
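A toy pager can sketch the demand-paging-and-swapping cycle. Pages are loaded only on first access. When frames run out, a victim is "swapped out" to a simulated swap space, and it is "swapped back in" if it is referenced again. The sketch makes several assumptions: FIFO victim selection, a Python set standing in for swap space, and every evicted page being recorded as swapped out (a real system can simply discard clean pages). `DemandPager` is a hypothetical name.

```python
from collections import deque

class DemandPager:
    """Toy demand pager: a page is loaded only when first touched, and a FIFO
    victim is moved to a simulated swap space when no frame is free."""

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.resident = deque()   # pages currently in RAM, oldest first
        self.swap_space = set()   # pages currently held in swap space
        self.faults = 0

    def access(self, page):
        if page in self.resident:
            return "hit"
        self.faults += 1                       # page not resident: page fault
        if len(self.resident) == self.num_frames:
            victim = self.resident.popleft()   # FIFO choice of victim
            self.swap_space.add(victim)        # record victim as swapped out
        self.swap_space.discard(page)          # swapping back in, if it was out
        self.resident.append(page)
        return "fault"

pager = DemandPager(num_frames=2)
for p in [0, 1, 0, 2, 0]:
    print(p, pager.access(p))
```

Pages that the process never references are never loaded at all, which is the saving that demand paging provides.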

- Adaptive Allocation policy:

An adaptive allocation policy adjusts memory allotments dynamically according to workload characteristics and current system conditions. It lets the operating system vary how much memory each process receives to improve system utilization and performance.

Benefits:

- Performance enhancement: Adjusting memory allocation as workload requirements change improves the operating system's overall performance.

- Resource management: Tailoring allocation strategies to changing requirements keeps memory usage efficient and removes performance bottlenecks.

Limitations

- Complexity: Because this method depends on monitoring changing system conditions and workload characteristics, an adaptive allocation policy requires complex algorithms and mechanisms.
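Page-fault-frequency (PFF) control is one well-known way to make allocation adaptive. The system watches each process's fault rate and grants a frame when the rate is too high or reclaims one when it is too low. The sketch below is an illustration of that named technique, not a description of any particular OS. The thresholds (0.02 and 0.10), the one-frame step, and the name `pff_adjust` are arbitrary assumptions.

```python
def pff_adjust(frames, fault_rate, free_frames, lower=0.02, upper=0.10):
    """Page-fault-frequency style adjustment for one process.

    frames:      frames currently allocated to the process
    fault_rate:  observed faults per memory reference in the last interval
    free_frames: frames currently in the system's free pool
    Returns (new_frames, new_free_frames).
    """
    if fault_rate > upper and free_frames > 0:
        return frames + 1, free_frames - 1   # faulting too often: grant a frame
    if fault_rate < lower and frames > 1:
        return frames - 1, free_frames + 1   # rarely faulting: reclaim a frame
    return frames, free_frames               # within bounds: leave unchanged

print(pff_adjust(10, 0.15, free_frames=5))  # (11, 4)
print(pff_adjust(10, 0.01, free_frames=5))  # (9, 6)
print(pff_adjust(10, 0.05, free_frames=5))  # (10, 5)
```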

Frame Allocation Algorithms in the OS

A frame allocation algorithm is a fundamental mechanism the operating system uses to manage physical memory across processes. By deciding how frames are allocated, these algorithms optimize memory utilization, enhance system performance, and ensure fair resource allocation among processes. Operating systems employ the following algorithms to manage frame allocation for multiple running processes:

- Equal Frame Allocation

In equal frame allocation, the algorithm divides frames equally among all processes in the operating system. Each process receives an equal share of available memory regardless of its size or memory requirement.

An operating system with X frames and Y processes assigns each process X / Y frames, rounded down. For example, if a system has 38 frames and five processes, each process is assigned seven frames. The remaining three frames stay unassigned and serve as a free-frame buffer pool for future use.
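The equal-allocation arithmetic in the example above can be sketched as follows. `equal_allocation` is a hypothetical helper, and whole frames are assumed, with the remainder kept as the free-frame pool.

```python
def equal_allocation(total_frames, num_processes):
    """Give each process total_frames // num_processes frames.

    Returns (frames_per_process, leftover_frames); the leftover frames
    can be kept as a free-frame buffer pool.
    """
    frames_per_process = total_frames // num_processes
    leftover = total_frames - frames_per_process * num_processes
    return frames_per_process, leftover

print(equal_allocation(38, 5))  # (7, 3): seven frames each, three left in the pool
```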

Advantages:

- Simplicity: Equal frame allocation is easy to understand and implement, since each process simply receives the same number of frames.

- Fairness: Ensures fairness in resource distribution by assigning the same number of frames to each process.

Disadvantages:

- Inefficiency: Giving every process the same number of frames ignores differences in need. Small processes may receive more frames than they use, which wastes memory, while large processes may run short.

- Lack of flexibility: Equal frame allocation ignores process size, priority, and resource requirements, which leads to inefficient resource utilization.

- Proportional Frame Allocation

Proportional frame allocation overcomes the drawback of equal allocation. It allocates frames according to each process's size relative to the total size of all processes and to the total number of frames in memory.

For each process $p_i$ of size $s_i$, the number of allocated frames is defined by $a_i = \frac{s_i}{S} \times m$, where

$S = \sum_i s_i$ is the total size of all processes.

$m$ is the number of frames in the system.

If a system has 72 frames and two processes of 20 KB and 92 KB, the 20 KB process is allocated (20/112) × 72 ≈ 12.86 frames, rounded down to 12. The 92 KB process is allocated (92/112) × 72 ≈ 59.14 frames, rounded down to 59. This leaves one frame unallocated.
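A sketch of the proportional formula $a_i = \lfloor s_i / S \times m \rfloor$ is shown below. Integer arithmetic is used so that rounding down is exact. `proportional_allocation` is a hypothetical helper, and real systems typically also enforce a per-process minimum.

```python
def proportional_allocation(sizes, total_frames):
    """Return a_i = floor(s_i / S * m) frames for each process size s_i."""
    S = sum(sizes)
    # Multiply before dividing so integer division rounds down exactly
    return [s * total_frames // S for s in sizes]

alloc = proportional_allocation([20, 92], 72)
print(alloc)            # [12, 59]
print(72 - sum(alloc))  # 1 frame left over after rounding down
```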

Advantages:

- Efficiency: Proportional allocation optimizes memory utilization by assigning frames to processes according to their resource demands.

- Flexibility: This algorithm adapts to various workload conditions by accounting for process size and allocating memory according to each process's requirements.

Disadvantages:

- Complexity: Proportional allocation requires additional logic to compute the number of frames for each process from its size.

- Overhead: Computing proportional allocations adds computational work, which increases overhead and can affect system performance.

- Priority Frame Allocation

In priority frame allocation, frames are allocated according to each process's priority level, ensuring that critical processes receive adequate memory. Higher-priority processes are allocated their required number of frames first, and lower-priority processes receive frames next.

This algorithm assigns a priority value to each process and sorts processes by priority level, so the OS serves higher-priority processes first. Processes with higher priority are assigned more frames.
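The sketch below serves processes in priority order, granting each its request until frames run out. The tuple layout, the convention that a larger number means higher priority, and the name `priority_allocation` are assumptions for illustration.

```python
def priority_allocation(processes, total_frames):
    """Grant frames in order of priority (larger number = higher priority).

    processes: list of (name, priority, frames_requested)
    Returns {name: frames_granted}; low-priority processes may get fewer
    frames than requested, or none, if frames run out.
    """
    remaining = total_frames
    granted = {}
    for name, _priority, requested in sorted(processes, key=lambda p: p[1], reverse=True):
        granted[name] = min(requested, remaining)
        remaining -= granted[name]
    return granted

procs = [("editor", 1, 20), ("db", 3, 40), ("backup", 0, 30)]
print(priority_allocation(procs, 64))  # {'db': 40, 'editor': 20, 'backup': 4}
```

The low-priority `backup` process receives only 4 of its 30 requested frames, which illustrates the starvation risk listed below.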

Advantages:

- Responsiveness: Priority frame allocation optimizes the system’s responsiveness and performance for important tasks by ensuring that critical processes receive adequate memory storage.

- Resource management: Prioritizing memory allocation improves resource management and ensures that high-priority processes do not experience memory shortages.

Disadvantages:

- Starvation: Low-priority processes may suffer memory starvation when high-priority processes dominate memory, leading to poor performance or indefinite delays for those processes.

- Complexity: Dynamically managing memory allocation based on the process’s priority introduces complexity for managing memory.

- Global Replacement Allocation:

A program executing in the operating system needs its pages to be in memory. The operating system keeps some pages in primary memory for quick access. If a required page isn't currently in primary memory, accessing it triggers a page fault, and the page is then loaded from secondary memory into primary memory.

Global replacement allocation governs how frames are reassigned when a page fault occurs. When a process needs a page that is not in memory, the system brings in the page and may take the frame for it from the set of all frames, even if that frame is currently allocated to another process. For example, this lets a higher-priority process take frames from lower-priority processes, which can reduce its page faults. The victim frame is chosen by a replacement algorithm such as LRU or FIFO.
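The following is a minimal sketch of global victim selection. The least recently used frame in the whole system is chosen, whichever process owns it. The dictionaries standing in for the frame table and the access timestamps are assumptions, and `global_victim` is a hypothetical helper.

```python
def global_victim(frames, last_used):
    """Pick a victim from ALL frames in the system (global replacement).

    frames:    {frame_number: owning_process}
    last_used: {frame_number: time of last access}
    Returns (frame_number, owner) of the least recently used frame.
    """
    victim = min(frames, key=lambda f: last_used[f])
    return victim, frames[victim]

frames = {0: "A", 1: "A", 2: "B", 3: "B"}
last_used = {0: 50, 1: 42, 2: 10, 3: 61}
# Process A faults; under global replacement it may take B's frame 2
print(global_victim(frames, last_used))  # (2, 'B')
```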

Advantages:

- System-wide optimization: Maximizes system performance by replacing the least recently used or least critical pages across the whole system.

- Efficient utilization of memory: Improves overall memory utilization by letting processes share the pool of frames and adjust dynamically to changing workloads.

Disadvantages:

- Interference: Because any process's frames can be replaced, one process's behavior can affect the page-fault rate and performance of unrelated processes.

- Fairness issues: Global replacement can favor certain processes over others, leading to fairness issues in multitasking and multi-user environments.

- Local Replacement Allocation

In local replacement allocation, a page fault occurs when a process needs a page that is not in memory. The new page is brought into one of the faulting process's own allocated frames, without affecting other processes.

In other words, the operating system chooses the victim frame only from the set of frames reserved for the faulting process.

Advantages:

- Isolation: Local replacement lets each process manage its own memory resources without interfering with other processes.

- Predictability: The algorithm provides predictable behavior and avoids unexpected impacts by limiting frame replacement to the faulting process.

Disadvantages:

- Underutilization: Restricting frame replacement to each process's own frames can leave frames lightly used in one process while another process faults heavily, reducing memory efficiency.

- Potential for deadlocks: Local replacement may make multiple processes wait for memory resources held by each other, creating deadlock situations.

Advanced Techniques and Optimizations

- Memory Swapping Techniques

When RAM cannot hold all actively running programs and their data, the operating system transfers some data to secondary storage, such as a hard disk. This technique is called memory swapping. When physical memory is insufficient, less frequently used pages are swapped out to disk to free space for critical tasks.

The following describes the memory-swapping procedure:

- Trigger swapping: When the operating system detects that available physical memory is insufficient, it initiates memory swapping to make room for running processes.

- Page replacement: A page replacement algorithm, such as First-In-First-Out (FIFO), Least Recently Used (LRU), or Clock, selects the least recently used or less critical pages to swap out to disk.

- Swapping process: The system writes the selected pages from RAM to a reserved area of the disk known as swap space, which serves as an extension of physical memory.

- Memory reclamation: Once swapping is complete, the freed physical memory is available for allocation to other processes.

- Swapping Back: If a process requires access to the swapped pages, the system retrieves those pages back into the physical memory from the swap space. To complete this process, the operating system reads pages from the disk and copies them to the RAM.
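The Clock (second-chance) algorithm, one of the replacement policies named in the procedure above, approximates LRU with one reference bit per frame. The sketch below assumes that the reference bit is set both when a page is loaded and whenever it is hit. Real kernels keep these bits in hardware page-table entries and differ in detail, and `clock_replace` is a hypothetical helper.

```python
def clock_replace(references, num_frames):
    """Second-chance (Clock) replacement; returns (fault_count, final_frames)."""
    frames = [None] * num_frames   # page held in each frame (None = empty)
    ref_bit = [0] * num_frames     # reference bit per frame
    hand = 0                       # clock hand position
    faults = 0
    for page in references:
        if page in frames:
            ref_bit[frames.index(page)] = 1  # hit: give the page a second chance
            continue
        faults += 1
        # Advance the hand, clearing reference bits, until a frame with bit 0 is found
        while ref_bit[hand] == 1:
            ref_bit[hand] = 0
            hand = (hand + 1) % num_frames
        frames[hand] = page   # replace the victim (or fill an empty frame)
        ref_bit[hand] = 1
        hand = (hand + 1) % num_frames
    return faults, frames

print(clock_replace([1, 2, 3, 4, 2, 5, 2], 3))  # (5, [4, 2, 5])
```

Page 2 survives the eviction for page 5 because its reference bit was set by the earlier hit. Plain FIFO would have evicted page 2 and faulted again on the final access.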

- Integration with Virtual Memory Management

In modern operating systems, physical frame allocation is integrated with virtual memory management. Virtual memory lets a process use more memory than is physically available by backing it with secondary (disk) storage. The operating system moves the least used data to disk, which frees frames in RAM that can then be allocated to processes.

Below are the steps to effectively manage memory resources by integrating virtual memory:

- Virtual Address Space Allocation: When the operating system creates a new process, it establishes a virtual address space with a range of memory addresses for the process to use.

- Page table creation: The operating system creates and maintains a page table for each process, which maps the process's virtual addresses to physical addresses.

- Demand paging: In Demand paging, the operating system loads the process pages that are actively used or accessed into physical memory as needed.

A page fault takes place when a process tries to access a page not in the physical memory. It triggers the Operating system to load a page into physical memory from the secondary storage.

- Page replacement: If the operating system needs to bring in a new page and physical memory is full, it selects a page to evict, typically using an algorithm such as LRU (Least Recently Used) or FIFO (First-In-First-Out).

If the evicted page was modified, the system writes it back to secondary storage before bringing in the new page from secondary storage.

- Memory protection: A virtual memory system prevents processes from accessing unauthorized locations. Each page carries read, write, and execute permissions, which the memory management unit (MMU) enforces.

- Memory mapping: The operating system manages the mapping between the virtual addresses used by a process and physical addresses in main memory, maintaining a page table to translate one to the other.

- Optimization and fault handling: Virtual memory management uses optimization techniques, and the Operating system handles page faults for smooth and efficient system performance.
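The memory-mapping step can be sketched as a page-table lookup. A virtual address is split into a page number and an offset, the page number is mapped to a frame, and a missing entry signals a page fault. The 4 KB page size, the dictionary page table, and the names `translate` and `PageFault` are assumptions for illustration. In real systems the MMU performs this translation in hardware.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages

class PageFault(Exception):
    """Raised when the referenced page has no resident frame."""

def translate(virtual_address, page_table):
    """Translate a virtual address to a physical address via a page table.

    page_table maps page number -> frame number; a missing entry means the
    page is not in physical memory.
    """
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        raise PageFault(f"page {page_number} not resident")
    return page_table[page_number] * PAGE_SIZE + offset

page_table = {0: 5, 1: 2}           # hypothetical: page 0 -> frame 5, page 1 -> frame 2
print(translate(4100, page_table))  # page 1, offset 4 -> 2 * 4096 + 4 = 8196
```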

Case Studies and Real-World Implementations

Windows OS

- Case study

Let’s analyze the frame allocation strategy in the Windows operating system by considering a real-world scenario where an organization operates a web server receiving multiple HTTP requests and displaying websites.

- Frame allocation strategy:

To handle high traffic on a web server, a Windows server utilizes the demand paging technique for memory management.

Pages are brought into RAM when a process needs them. When physical memory (RAM) becomes full, pages are removed according to the Most Recently Loaded Used (MLRU) algorithm.

- Implementation:

- The web server monitors memory usage while handling HTTP requests from users. Task Manager plays a crucial role in tracking metrics such as page faults and available memory.

- The MLRU technique keeps frequently requested web pages in memory so that a request for one of these pages can be served immediately. To do this, MLRU maintains a modified bit for each page, recording whether the page has been modified since it was loaded into memory. Less frequently requested pages that are clean (unmodified) are evicted first, since they can be discarded without being written back to disk.

- However, users sometimes request less frequently used web pages that have already been evicted. The web server must then retrieve them from disk, which can cause disk latency and reduce web server responsiveness.

- As workloads fluctuate, the web server dynamically adjusts its memory use and consumes additional memory during high-traffic periods.

- Administrators use monitoring tools to understand memory utilization patterns, and based on these patterns, they configure memory settings to set upper and lower memory limits for application pools.

- Result

In this case study, we examined how a web server uses memory management techniques such as demand paging and the MLRU algorithm to serve both frequently and less frequently requested web pages while maintaining system performance.

Mac OS

- Case study

Let us understand frame allocation in the Mac operating system through a real-world situation in which a user opens multiple applications at once, such as a browser, a terminal, and a media player. The operating system assigns memory to each application but loads only the components needed at any given time.

- Frame allocation strategy

The Mac operating system uses demand paging, which loads pages into memory only when they are needed instead of loading an entire application at once. Optimized memory utilization is key to good performance in the Mac operating system.

- Implementation

When the user opens multiple applications, the Mac operating system allocates and deallocates portions of each application as they are needed. For example, when the user switches from the terminal to the browser, the system may reclaim some of the terminal's pages and allocate that space to the browser's pages. Algorithms such as LRU (Least Recently Used) or Clock govern which memory is allocated and released. They aim to keep needed pages in memory and release pages that an application doesn't currently need.

- Result

In this scenario, we saw that the Mac operating system uses demand paging for memory management. It loads only the necessary pages of an application at launch, and when the user switches between applications, it keeps frequently used pages in memory. This approach helps maintain good performance.

Linux OS

- Case study

Let us look at memory management in the Linux operating system, where the buddy system allocates and deallocates physical memory for processes.

- Frame allocation strategy:

The buddy system is used extensively to manage physical memory in the Linux operating system. It manages memory in blocks whose sizes are power-of-two multiples of the page size, splitting and merging these blocks as it allocates and deallocates memory.

- Implementation:

Suppose a process requests 8 KB of memory. The buddy system keeps track of free memory in free lists, one for each block size (4 KB, 8 KB, 16 KB, and so on). The Linux kernel searches these lists for a suitable block for the request. Suppose the smallest free block available is 16 KB. The kernel splits it into two 8 KB blocks (buddies) and assigns one of them to the process. When the process frees its 8 KB block, the kernel checks whether the block's buddy is also free. If it is, the two are merged back into a 16 KB block, which returns to the free list.
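The following toy allocator sketches the split-and-merge behavior described above. It illustrates the buddy technique only and is not the Linux kernel's implementation, which tracks blocks by "order" (a power-of-two number of pages) within each memory zone. Sizes here are in KB. `BuddyAllocator`, `alloc`, and `release` are hypothetical names, and the total and minimum block sizes are assumed to be powers of two.

```python
class BuddyAllocator:
    """Toy buddy allocator over `total` KB; all block sizes are powers of two."""

    def __init__(self, total=16, min_block=4):
        self.total = total
        self.min_block = min_block
        self.free = {total: [0]}  # block size (KB) -> offsets of free blocks

    def _round_up(self, size):
        block = self.min_block
        while block < size:
            block *= 2
        return block

    def alloc(self, size):
        want = self._round_up(size)
        # Find the smallest free block that is large enough
        candidates = [s for s, offs in self.free.items() if s >= want and offs]
        if not candidates:
            return None
        block = min(candidates)
        offset = self.free[block].pop()
        # Split until the block matches the request; each upper half stays free
        while block > want:
            block //= 2
            self.free.setdefault(block, []).append(offset + block)
        return offset, block

    def release(self, offset, size):
        # Merge with the buddy for as long as the buddy is also free
        while size < self.total:
            buddy = offset ^ size  # a buddy's offset differs in exactly one bit
            if buddy not in self.free.get(size, []):
                break
            self.free[size].remove(buddy)
            offset = min(offset, buddy)
            size *= 2
        self.free.setdefault(size, []).append(offset)

heap = BuddyAllocator(total=16, min_block=4)
print(heap.alloc(8))  # (0, 8): the 16 KB block is split into two 8 KB buddies
print(heap.free)      # {16: [], 8: [8]}
heap.release(0, 8)
print(heap.free)      # {16: [0], 8: []}: the buddies merged back into 16 KB
```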

- Result

From this scenario, we see that the Linux kernel uses the buddy system to partition memory into blocks. It maintains lists of free blocks that can be assigned to processes and efficiently reclaimed after use.

Comparison of Memory Management Strategies in Different OS

| Aspect | Windows | macOS | Linux |
| --- | --- | --- | --- |
| Frame allocation strategy | Demand paging with the MLRU algorithm | Demand paging with the LRU or Clock algorithm | Buddy system for physical memory management |
| Memory management philosophy | Handle and monitor dynamic workloads efficiently | Enable dynamic resource management and responsive memory allocation | Prioritize efficient resource utilization and block management, and simplify memory allocation |
| User experience | Offers a user-friendly experience, with Task Manager to supervise memory usage | Provides a responsive user experience with enhanced memory allocation and easy application switching | Offers a stable user experience, reliable memory management, and minimal overhead |
| Performance | Optimizes memory utilization with dynamic adjustment and smart caching strategies | Optimizes resource management and memory usage by keeping frequently used pages in memory | Allocates memory efficiently, minimizes fragmentation, and maximizes system performance |
| Compatibility | Compatible with a wide range of software and hardware | Integrates with Apple hardware and software, providing consistent reliability and performance | Supports a wide range of computing environments and hardware architectures |
| Customization and flexibility | High level of customization and flexibility | Moderate customization | Extensive customization and flexibility |

Real-world Examples

Virtual memory

- Consider a scenario where a user is editing a high-resolution photo in software such as Adobe Photoshop.

- When the user opens the software, the operating system allocates some of the system's physical memory (RAM) to run it. However, when the user opens high-resolution photos and images to edit, this initial memory fills up, and the software needs more memory to function correctly.

- Using demand paging, the system dynamically provides additional virtual memory to the software to handle the images and editing tasks, such as storing raw pixel data, editing history, and adjustment settings.

- When a user works with high-resolution images and videos, the operating system manages frame allocation and the use of physical and virtual memory, supporting the user's workflow through proper allocation and deallocation.

Computer Memory Management

Let us take the example of a user browsing the internet in their favorite web browser.

When the user opens the web browser and visits a website, the operating system has to provide memory for three main components of the browser:

1) Executable code – The operating system provides memory for the browser's executable code, which implements the browser's core functionality, including the protocols and logic needed to run the browser.

2) User interface elements – These interactive elements (menus, buttons, dropdowns, etc.) allow users to interact with the browser. The browser must store the graphical data for these elements in memory.

3) Page data – As the user browses, the browser loads web pages. These pages may be cached in RAM to avoid loading delays and to return frequently accessed pages quickly.

The browser and operating system work together to manage memory efficiently. This includes prioritizing resources for active tabs and processes, allocating frames to the browser's code, UI, and page data, and freeing memory when it's no longer needed, such as when tabs are closed or the browser exits.

Database Management System

- Imagine an inventory management system that uses a database to record all inventory.

- When the user starts the database application, the system allocates memory to load essential software components. This includes the database engine, query optimizer, and other modules. Memory frames hold the executable code and structures of the database system for efficient operation. Additionally, memory frames store the actual inventory data and information. Application performance hinges on rapid data transfer from the database; therefore, each system component requires dedicated memory space.

- The database system maintains a buffer pool in memory to cache frequently accessed data pages from disk. When a query executes, the database engine first looks for the desired data pages in the buffer pool. If they are not there, the pages are read from disk into memory frames allocated to the buffer pool. Keeping frequently used pages cached this way reduces the need for costly disk I/O operations.

- The database system continuously tracks memory usage and manages memory accordingly, deallocating unused memory promptly. This keeps information management in the database efficient and data transactions smooth.

Graphics Processing Units (GPUs) in Gaming

- Consider a scenario where a gamer plays a graphics-intensive video game on a high-performance computer with a dedicated GPU.

- As the game renders complex 3D graphics, the GPU allocates memory frames to store textures, shaders, vertex data, and other graphical elements required for rendering.

- The GPU optimizes memory usage and ensures smooth gameplay with high frame rates by utilizing efficient frame allocation techniques, such as texture paging or buffer allocation.

- When rendering large game environments or intricate visual effects, the GPU dynamically allocates and deallocates memory frames to accommodate changing scene demands, providing a seamless gaming experience for the player.

Challenges and Future Trends

Challenges

- Increasing memory demand:

Complex applications require significant memory resources to run efficiently. These applications often involve large datasets and complex algorithms that demand considerable memory.

Every virtual machine requires memory allocation in a virtualized environment, leading to increased memory demands.

Large datasets must be processed and analyzed in big data technologies, demanding ample memory to store and manage data effectively.

- Fragmentation:

As processes are repeatedly loaded into and removed from memory, free memory becomes split into scattered, non-contiguous blocks, causing fragmentation.

External fragmentation occurs when free memory is divided into non-contiguous blocks, making it difficult for the OS to allocate a contiguous memory block to a process even when enough total memory is free, which results in inefficient memory usage. Internal fragmentation arises when an allocated memory block is larger than the process requires, wasting memory inside the allocated block.
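The arithmetic behind both kinds of fragmentation can be made concrete. The sketch below assumes a 4 KiB frame size and a made-up list of free holes; the function names are hypothetical. It computes the internal fragmentation left in a process's last frame under paging, and checks whether a contiguous request fails even though enough total memory is free.

```python
from typing import List

FRAME_SIZE = 4096  # assumed frame size in bytes (4 KiB)


def internal_fragmentation(process_size: int, frame_size: int = FRAME_SIZE) -> int:
    """Bytes left unused in the last frame when a process is split into fixed-size frames."""
    frames_needed = (process_size + frame_size - 1) // frame_size  # ceiling division
    return frames_needed * frame_size - process_size


def blocked_by_external_fragmentation(free_holes: List[int], request: int) -> bool:
    """True if total free memory covers a contiguous request but no single hole does."""
    return sum(free_holes) >= request and max(free_holes, default=0) < request


if __name__ == "__main__":
    # A 10,000-byte process needs 3 frames (12,288 bytes), leaving 2,288 bytes unused.
    print(internal_fragmentation(10_000))  # 2288
    # 3,000 + 2,000 + 1,500 = 6,500 bytes are free, but the largest hole is 3,000 bytes.
    print(blocked_by_external_fragmentation([3000, 2000, 1500], 5000))  # True
```

Paging avoids external fragmentation for a process's pages, since any free frame can hold any page, but it still leaves internal fragmentation in the process's last frame.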

- Dynamic workloads:

In modern computing, a process's memory demand can vary over time. For example, a web server may see peak traffic at certain times, which increases its memory requirements. The operating system must adapt to these changes by allocating and deallocating frames according to each process's demand to keep the system performing efficiently.
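One classic way to adapt a process's frame allocation to changing demand is the page-fault-frequency (PFF) scheme: a process that faults too often receives more frames, and one that rarely faults gives some back. The sketch below is a minimal illustration; the `adjust_frames` function, the thresholds (`upper` and `lower`, in faults per 1,000 references), and the step size are assumptions, not values from any particular kernel.

```python
def adjust_frames(current_frames: int, faults: int, references: int,
                  upper: float = 20.0, lower: float = 2.0,
                  step: int = 1, min_frames: int = 1) -> int:
    """Return a new frame count for a process using a page-fault-frequency rule.

    The fault rate is measured as page faults per 1,000 memory references.
    """
    if references <= 0:
        return current_frames  # no activity observed; leave the allocation alone
    rate = faults * 1000 / references
    if rate > upper:
        # Faulting too often: the process needs more frames.
        return current_frames + step
    if rate < lower:
        # Faulting rarely: reclaim a frame for other processes.
        return max(min_frames, current_frames - step)
    return current_frames


if __name__ == "__main__":
    # Peak traffic: 50 faults per 1,000 references, so grow from 8 to 9 frames.
    print(adjust_frames(8, faults=50, references=1000))  # 9
    # Quiet period: 1 fault per 1,000 references, so shrink from 8 to 7 frames.
    print(adjust_frames(8, faults=1, references=1000))  # 7
```

In the textbook form of this scheme, if a process needs more frames and none are free, the OS may suspend a process and redistribute its frames.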

- Page replacement overhead:

When physical memory is full, the operating system must move pages out to secondary storage, such as a disk, to make room for new pages. This involves writing modified victim pages from memory to disk and reading the requested pages from disk into memory, which incurs I/O overhead. Page replacement algorithms also add CPU overhead, which degrades overall system performance under high memory pressure.
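The I/O cost of replacement can be estimated by counting disk operations. The sketch below assumes FIFO replacement and a made-up reference string in which each access is a `(page, is_write)` pair; the `count_paging_io` function is hypothetical. Every page fault costs one disk read, and evicting a modified (dirty) page costs an extra disk write.

```python
from collections import deque
from typing import Deque, Iterable, Set, Tuple


def count_paging_io(refs: Iterable[Tuple[int, bool]], num_frames: int) -> Tuple[int, int]:
    """Simulate FIFO page replacement and return (disk_reads, disk_writes)."""
    resident: Deque[int] = deque()  # pages in memory, oldest first
    dirty: Set[int] = set()         # resident pages modified since they were loaded
    reads = writes = 0
    for page, is_write in refs:
        if page not in resident:
            reads += 1  # page fault: read the page in from disk
            if len(resident) == num_frames:
                victim = resident.popleft()
                if victim in dirty:
                    writes += 1  # a dirty victim must be written back first
                    dirty.discard(victim)
            resident.append(page)
        if is_write:
            dirty.add(page)
    return reads, writes


if __name__ == "__main__":
    refs = [(1, True), (2, False), (3, False), (1, False), (4, True), (2, False)]
    print(count_paging_io(refs, num_frames=3))  # (4, 1)
```

Clean pages can simply be discarded on eviction, which is why dirty pages make replacement under memory pressure more expensive.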

Future Trends

- Memory compression:

To address limited physical memory, the operating system might dynamically compress memory pages, allowing it to store more data in physical memory and reduce the need for frequent paging.
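How much compression helps depends on how compressible a page's contents are. The sketch below uses Python's standard `zlib` module to compress a page and keep it in RAM instead of writing it to disk. The `CompressedPageStore` class, the 4 KiB page size, and the `max_ratio` cut-off are assumptions for illustration; in-kernel features such as Linux zram and zswap use their own allocators and compressors.

```python
import os
import zlib
from typing import Dict

PAGE_SIZE = 4096  # assumed page size in bytes


class CompressedPageStore:
    """Keeps evicted pages compressed in RAM instead of swapping them to disk."""

    def __init__(self) -> None:
        self.store: Dict[int, bytes] = {}  # page_id -> compressed bytes

    def evict(self, page_id: int, data: bytes, max_ratio: float = 0.5) -> bool:
        """Store the page compressed if it shrinks enough; otherwise report failure."""
        compressed = zlib.compress(data)
        if len(compressed) > max_ratio * len(data):
            return False  # poorly compressible: the caller would swap it to disk instead
        self.store[page_id] = compressed
        return True

    def fault_in(self, page_id: int) -> bytes:
        # Decompressing in memory is typically much cheaper than a disk read.
        return zlib.decompress(self.store.pop(page_id))


if __name__ == "__main__":
    store = CompressedPageStore()
    zero_page = bytes(PAGE_SIZE)         # highly compressible
    random_page = os.urandom(PAGE_SIZE)  # essentially incompressible
    print(store.evict(1, zero_page), store.evict(2, random_page))  # True False
```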

- Intelligent page replacement algorithms:

Intelligent page replacement algorithms can use machine learning (ML) and artificial intelligence (AI) techniques to analyze process behavior, such as access patterns and memory usage. This analysis helps the OS decide which page to evict from memory, improving overall system performance.

- Hardware-Assisted Memory Management:

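Hardware-assisted memory management may include features for efficient page-table management, memory protection, and virtual-to-physical address translation; for example, hardware page-table walkers and translation lookaside buffers (TLBs) speed up address translation. These features improve system performance and reduce overhead.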

- Adaptive memory management:

The OS may adopt adaptive memory management policies to adjust memory allocation dynamically based on workload and system resources. These optimize memory usage, minimize fragmentation, and adapt to the workload on demand.

Conclusion

In an operating system, frame allocation is critical to managing memory efficiently. Because frames are fixed-size blocks, the operating system can allocate memory in a structured and controlled manner. Efficient allocation techniques minimize fragmentation, maximize resource utilization, and enable dynamic allocation strategies, improving overall system performance and security.

Frequently Asked Questions (FAQs)

Q1) How does frame allocation interact with containerization technologies?

Answer: Frame allocation determines how processes are stored in physical memory. When containerization technologies such as Docker are used, the host operating system's frame allocation strategies manage memory for both the host system and its containers. Because containers share the host kernel, Docker relies on the operating system's memory management rather than manipulating frame allocation directly, so the interaction happens at the operating system level. Docker uses OS features such as namespaces and control groups (cgroups) to isolate containers and to control the memory resources each one receives.

Q2) How can hardware accelerators influence frame allocation algorithms?

Answer: Hardware accelerators can affect an operating system's frame allocation algorithms, particularly in environments that offload specific tasks to them. Accelerators such as GPUs for graphics processing or dedicated hardware for AI inference often have their own memory requirements and optimization strategies, and frame allocation algorithms may need to account for these requirements to allocate memory efficiently. For example, the operating system may need to prioritize allocating contiguous memory blocks for DMA (Direct Memory Access) transfers to and from these accelerators. In general, the operating system must coordinate memory allocation to manage its interactions with hardware accelerators.
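Allocating physically contiguous frames for DMA can be sketched as a first-fit search over a frame bitmap. The `alloc_contiguous` function and the list-of-booleans bitmap (`True` means the frame is in use) are hypothetical; real kernels use dedicated mechanisms for this (on Linux, for example, the buddy allocator and the Contiguous Memory Allocator), and an IOMMU can relax the need for physical contiguity.

```python
from typing import List, Optional


def alloc_contiguous(frames_in_use: List[bool], count: int) -> Optional[int]:
    """First-fit search for `count` adjacent free frames.

    Marks the frames as used and returns the first frame number,
    or returns None if no run of free frames is long enough.
    """
    if count <= 0:
        raise ValueError("count must be positive")
    run_start, run_len = 0, 0
    for i, used in enumerate(frames_in_use):
        if used:
            run_len = 0  # a used frame breaks the current run
            continue
        if run_len == 0:
            run_start = i
        run_len += 1
        if run_len == count:
            for j in range(run_start, run_start + count):
                frames_in_use[j] = True
            return run_start
    return None


if __name__ == "__main__":
    # Frames 0-9, where frames 1, 4 and 5 are already in use.
    bitmap = [False, True, False, False, True, True, False, False, False, False]
    print(alloc_contiguous(bitmap, 3))  # 6
    print(alloc_contiguous(bitmap, 3))  # None
```

After the first call, frames 0, 2, 3, and 9 are still free, yet the second request for three contiguous frames fails: the same external fragmentation problem that makes DMA buffers hard to allocate on a long-running system.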

Q3) How does the operating system allocate frames in a heterogeneous memory architecture?

Answer: In a heterogeneous memory architecture, the operating system must manage memory resources carefully across different types of memory devices, such as NVM (Non-Volatile Memory), DRAM (Dynamic Random Access Memory), and HBM (High Bandwidth Memory). The operating system may maintain a unified memory pool divided into regions according to the underlying device. This pooling makes it easier to allocate memory from the region that best suits each application's needs.
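A tiered allocation policy over such a pool can be sketched as an ordered fallback list: try the preferred memory type first, then fall back to the next one when it is full. The `TieredMemory` class, tier names, capacities, and preference orders below are hypothetical; real systems express similar ideas through mechanisms such as NUMA memory policies.

```python
from typing import Dict, List, Optional


class TieredMemory:
    """Tracks free frames per memory tier and allocates with ordered fallback."""

    def __init__(self, free_frames: Dict[str, int]) -> None:
        self.free_frames = dict(free_frames)  # tier name -> free frame count

    def allocate(self, frames: int, preference: List[str]) -> Optional[str]:
        """Take all frames from the first tier in `preference` that can hold them."""
        for tier in preference:
            if self.free_frames.get(tier, 0) >= frames:
                self.free_frames[tier] -= frames
                return tier
        return None  # no single preferred tier has enough free frames


if __name__ == "__main__":
    mem = TieredMemory({"HBM": 4, "DRAM": 64, "NVM": 256})
    # Bandwidth-hungry data prefers HBM, then DRAM.
    print(mem.allocate(3, ["HBM", "DRAM"]))  # HBM
    print(mem.allocate(3, ["HBM", "DRAM"]))  # DRAM (only 1 HBM frame left)
    # Large, rarely accessed data prefers NVM.
    print(mem.allocate(100, ["NVM", "DRAM"]))  # NVM
```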

Recommended Articles

We hope that this EDUCBA information on “Allocation of Frames in OS” was beneficial to you. You can view EDUCBA’s recommended articles for more information.