Updated June 14, 2023

Difference Between Apache Hive and Apache Spark SQL

With the rapid growth of big data technologies, it is becoming very important to use the right tool for every process, whether that is data ingestion, data processing, data retrieval, or data storage. In this post, we will look at two such data retrieval tools, Apache Hive and Apache Spark SQL. Hive is known as the component of the big data ecosystem that relies on legacy mappers and reducers to process data from HDFS, whereas Spark SQL is the component of the Apache Spark API that has made processing in the big data ecosystem much easier and closer to real time.

A major misconception among professionals today is that Hive can only be used with legacy big data technologies and tools such as Pig, HDFS, Sqoop, and Oozie. This is not completely true, as Hive is compatible not only with the legacy tools but also with other Spark-based components, such as Spark Streaming. The idea behind using them is to reduce effort and deliver better output for the business. Let us study both Apache Hive and Apache Spark SQL in detail.

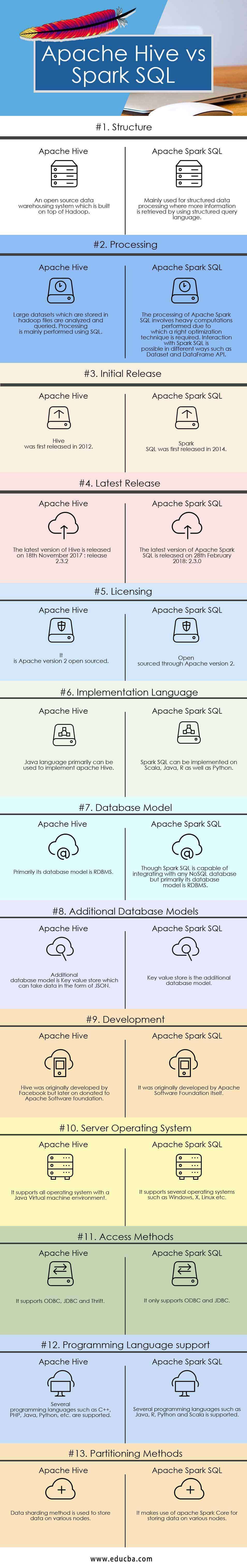

Head to Head Comparison Between Apache Hive and Apache Spark SQL (Infographics)

Below are the top 13 comparisons between Apache Hive and Apache Spark SQL:

Key Differences Between Apache Hive and Apache Spark SQL

- Hive uses HQL (Hive Query Language), whereas Spark SQL uses Structured Query Language (SQL) for processing and querying data.

- Hive provides schema flexibility as well as partitioning and bucketing of tables, whereas Spark SQL performs SQL querying and can also read data from an existing Hive installation.

- Hive provides access rights for users, roles, and groups, whereas Spark SQL provides no facility for granting access rights to a user.

- Data stored through Hive can use a selective replication factor for redundant storage (the replication itself is handled by the underlying HDFS), whereas Spark SQL does not provide any replication factor for storing data.

- As JDBC, ODBC, and Thrift drivers are available in Hive, we can use them to retrieve results, whereas in Apache Spark SQL we can retrieve results through the Dataset and DataFrame APIs when Spark SQL is used from another programming language.

- There are several limitations:

  - Apache Hive is not designed for real-time OLTP querying, and row-level updates are available only on transactional (ACID) tables. Spark SQL is likewise an analytical engine rather than an online transaction processing database.

  - Hive has relatively high latency for interactive data browsing, whereas Spark SQL keeps latency to a minimum to improve performance.

  - Hive supports the UNION type (the `UNIONTYPE` column data type, which is distinct from the `UNION` query operator), whereas Spark SQL does not support the UNION type.
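
To make the point about reading from an existing Hive installation more concrete, here is a minimal Python sketch. It assumes PySpark is installed and that Spark has been configured to reach your Hive metastore. The table `sales.orders`, the column `country`, and the helper names are hypothetical and used for illustration only.

```python
def build_top_n_query(table: str, column: str, n: int) -> str:
    """Return a SQL statement that counts rows per value of `column`."""
    if n <= 0:
        raise ValueError("n must be positive")
    return (
        f"SELECT {column}, COUNT(*) AS cnt "
        f"FROM {table} "
        f"GROUP BY {column} "
        f"ORDER BY cnt DESC "
        f"LIMIT {n}"
    )


def run_on_hive_tables(query: str):
    """Run `query` through Spark SQL against tables registered in Hive."""
    # Imported lazily so the query builder works without PySpark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .appName("hive-vs-spark-sql-sketch")  # hypothetical application name
        .enableHiveSupport()  # lets Spark SQL see tables in the Hive metastore
        .getOrCreate()
    )
    return spark.sql(query)  # the result comes back as a DataFrame


# Hypothetical usage (needs a running Spark setup with Hive access):
# df = run_on_hive_tables(build_top_n_query("sales.orders", "country", 5))
# df.show()
```

The same query text could also be submitted to Hive through its JDBC, ODBC, or Thrift drivers. The difference shown here is that Spark SQL hands the result back as a DataFrame that the program can keep transforming.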

Apache Hive and Apache Spark SQL Comparison Table

Below is the comparison table between Apache Hive and Apache Spark SQL.

| Basis of Comparison | Apache Hive | Apache Spark SQL |
| --- | --- | --- |
| Structure | An open-source data warehousing system built on top of Hadoop. | Mainly used for structured data processing, where information is retrieved using a structured query language. |
| Processing | Large datasets stored in Hadoop files are analyzed and queried. Processing is mainly performed using SQL. | Processing involves heavy computations, which require the right optimization technique. Interaction with Spark SQL is possible in different ways, such as the Dataset and DataFrame APIs. |
| Initial Release | Hive was first released in 2012. | Spark SQL was first released in 2014. |
| Latest Release | The latest version of Hive was released on 18th November 2017: release 2.3.2. | The latest version of Apache Spark SQL was released on 28th February 2018: release 2.3.0. |
| Licensing | Open source under the Apache License, version 2. | Open source under the Apache License, version 2. |
| Implementation Language | Hive is implemented primarily in Java. | Spark SQL is implemented primarily in Scala and can be used from Scala, Java, R, and Python. |
| Database Model | Its primary database model is RDBMS. | Although Spark SQL can integrate with any NoSQL database, its primary database model is RDBMS. |
| Additional Database Models | A key-value store that can take data in the form of JSON. | A key-value store. |
| Development | Originally developed by Facebook and later donated to the Apache Software Foundation. | Originally developed by the Apache Software Foundation itself. |
| Server Operating System | Supports all operating systems with a Java Virtual Machine environment. | Supports several operating systems, such as Windows, OS X, and Linux. |
| Access Methods | Supports ODBC, JDBC, and Thrift. | Supports only ODBC and JDBC. |
| Programming Language Support | Several programming languages, such as C++, PHP, Java, and Python, are supported. | Several programming languages, such as Java, R, Python, and Scala, are supported. |
| Partitioning Methods | The data sharding method is used to store data on various nodes. | Makes use of Apache Spark Core for storing data on various nodes. |
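
The partitioning and bucketing that Hive offers can be pictured with a small pure-Python sketch. It is a simplified model, not Hive's implementation: `zlib.crc32` stands in for Hive's own hash function, and all function names, paths, and column names are hypothetical. The idea it illustrates is that each partition value maps to its own directory, and within a partition a row's bucket is chosen by hashing the bucketing column modulo the number of buckets.

```python
import zlib
from collections import defaultdict


def partition_path(table_dir: str, partition_col: str, value: str) -> str:
    """Build a Hive-style partition directory such as <table_dir>/country=IN."""
    return f"{table_dir}/{partition_col}={value}"


def bucket_for(key: str, num_buckets: int) -> int:
    """Assign a key to a bucket with hash(key) mod num_buckets.

    zlib.crc32 is only a deterministic stand-in; Hive uses its own hash.
    """
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def layout(rows, table_dir, partition_col, bucket_col, num_buckets):
    """Group rows by (partition directory, bucket number)."""
    files = defaultdict(list)
    for row in rows:
        path = partition_path(table_dir, partition_col, row[partition_col])
        bucket = bucket_for(str(row[bucket_col]), num_buckets)
        files[(path, bucket)].append(row)
    return dict(files)


# Hypothetical rows: partitioned by country, bucketed by user_id into 4 buckets.
rows = [
    {"country": "IN", "user_id": 1},
    {"country": "IN", "user_id": 1},
    {"country": "US", "user_id": 2},
]
placement = layout(rows, "/warehouse/orders", "country", "user_id", 4)
```

Rows that share a partition value and a bucketing key always land in the same place, which is why a query that filters on the partition column can skip every other directory.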

Conclusion

We cannot say that Apache Spark SQL replaces Hive or vice versa. Hive has the special ability to switch between execution engines, which makes it an efficient tool for querying large data sets. Which one to use depends on your goals and requirements. Both Apache Hive and Apache Spark SQL are strong players in their own fields. After reading this post, I hope you have a fair idea of what your organization needs. Follow our blog for more posts like this one; we aim to provide information that helps your business.
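
Hive's ability to switch engines can be sketched in Python as follows. The sketch assumes a reachable HiveServer2 instance, the third-party PyHive package, and that the chosen engine is actually installed on the cluster. The helper names and the default host and port are hypothetical. The setting it relies on is Hive's `hive.execution.engine` property.

```python
# Engine names accepted by hive.execution.engine; availability depends on the
# Hive version and on what is installed on the cluster.
ALLOWED_ENGINES = ("mr", "tez", "spark")


def engine_statement(engine: str) -> str:
    """Build the Hive SET statement that selects an execution engine."""
    if engine not in ALLOWED_ENGINES:
        raise ValueError(f"unknown engine: {engine!r}")
    return f"SET hive.execution.engine={engine}"


def run_with_engine(engine: str, query: str,
                    host: str = "localhost", port: int = 10000):
    """Run `query` on HiveServer2 after switching the session's engine."""
    # Imported lazily; requires the third-party PyHive package.
    from pyhive import hive

    conn = hive.Connection(host=host, port=port)
    try:
        cursor = conn.cursor()
        cursor.execute(engine_statement(engine))  # applies to this session only
        cursor.execute(query)
        return cursor.fetchall()
    finally:
        conn.close()


# Hypothetical usage (needs a running HiveServer2):
# rows = run_with_engine("tez", "SELECT COUNT(*) FROM sales.orders")
```

Because the engine is a session-level setting, the same HiveQL query can be run on different engines without being rewritten.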
