Updated April 27, 2023

Difference Between Apache Kafka vs Flume

Apache Kafka is an open-source system for processing ingested data in real time. Kafka is a durable, scalable, and fault-tolerant publish-subscribe messaging system. It was initially developed at LinkedIn to overcome the limitations of batch processing of large data sets and to resolve issues of data loss. Kafka’s architecture decouples the information provider from the information consumer. Hence, the sending and receiving applications need not know anything about each other.

Apache Kafka processes incoming data streams irrespective of their source and destination. It is a distributed streaming platform with capabilities similar to an enterprise messaging system, and it also stores data streams in a fault-tolerant manner. With Kafka, users can publish and subscribe to events as they occur. Irrespective of the application or use case, Kafka efficiently feeds massive data streams into enterprise Apache Hadoop for analysis. Kafka can also deliver streaming data in combination with Apache HBase, Apache Storm, and Apache Spark and can be used in various application domains.

In simple terms, Kafka’s publish-subscribe system comprises publishers, Kafka clusters, and consumers/subscribers. Data published by a publisher is stored as logs. A subscriber subscribes to a topic, and Kafka makes that topic’s data available to the subscriber. Numerous publishers and subscribers can work with different topics on a Kafka cluster, and an application can act as both a publisher and a subscriber. A message published to a topic can have multiple interested subscribers; the system delivers the data to every interested subscriber. Some of the use cases where Kafka is widely used are:

- Track activities on a website

- Stream processing

- Collecting and monitoring metrics

- Log Aggregation
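
The publish-subscribe model described above can be illustrated with a small in-memory sketch. This is not the Kafka client API: `MiniBroker`, `publish`, `poll`, and the `page-views` topic are hypothetical names chosen for illustration, and the sketch assumes a single process with no persistence. It only shows the idea that messages are appended to a per-topic log and that each subscriber reads that log independently.

```python
from collections import defaultdict


class MiniBroker:
    """Toy in-memory stand-in for a Kafka cluster (not the Kafka API)."""

    def __init__(self):
        # Each topic is an append-only log of messages.
        self.logs = defaultdict(list)
        # Each (subscriber, topic) pair tracks its own read position.
        self.offsets = defaultdict(int)

    def publish(self, topic, message):
        """Append a message to the topic's log and return its offset."""
        self.logs[topic].append(message)
        return len(self.logs[topic]) - 1

    def poll(self, subscriber, topic):
        """Return messages the subscriber has not read yet, then advance its offset."""
        log = self.logs[topic]
        start = self.offsets[(subscriber, topic)]
        self.offsets[(subscriber, topic)] = len(log)
        return log[start:]


broker = MiniBroker()
broker.publish("page-views", "user=1 path=/home")
broker.publish("page-views", "user=2 path=/cart")

# Two independent subscribers each receive the full stream.
analytics_batch = broker.poll("analytics", "page-views")
audit_batch = broker.poll("audit", "page-views")

# A subscriber that has caught up only sees messages published afterwards.
broker.publish("page-views", "user=1 path=/checkout")
analytics_next = broker.poll("analytics", "page-views")
```

Because the publisher only writes to the log and each subscriber only tracks its own offset, neither side needs to know the other exists, which is the decoupling the article describes.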

Apache Flume is a tool that collects, aggregates, and transfers data streams from different sources to a centralized data store such as HDFS (Hadoop Distributed File System). Flume is a highly reliable, configurable, and manageable distributed data collection service designed to gather streaming data from different web servers to HDFS. It is also an open-source data collection service.

Apache Flume is based on streaming data flows and has a flexible architecture. Flume offers a highly fault-tolerant, robust, and reliable mechanism for fail-over and recovery, with the capability to collect data in both batch and streaming modes. Enterprises leverage Flume’s capabilities to manage high-volume data streams that land in HDFS. These data streams include, for instance, application logs, sensor and machine data, and social media. Once landed in Hadoop, the data can be analyzed by running interactive queries in Apache Hive or can serve as real-time data for business dashboards in Apache HBase.

Some of the features include:

- Gather data from multiple sources and efficiently ingest it into HDFS

- A variety of source and destination types are supported

- Flume is easily customized, reliable, scalable, and fault-tolerant

- Can store data in any centralized store (e.g., HDFS, HBase)
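
The way a Flume agent moves events from a source, through a buffering channel, to a sink that writes to the central store can be sketched roughly as follows. This is a toy model in plain Python, not Flume’s own configuration or API: `MiniAgent`, `receive`, `drain`, and `batch_size` are hypothetical names, and the `store` list is only a stand-in for a centralized store such as HDFS.

```python
from collections import deque


class MiniAgent:
    """Toy model of a Flume-style agent: source -> channel -> sink."""

    def __init__(self, sink, batch_size=2):
        self.channel = deque()        # buffers events between source and sink
        self.sink = sink              # callable that receives a list of events
        self.batch_size = batch_size  # maximum events handed to the sink at once

    def receive(self, event):
        """Source side: accept one event and stage it in the channel."""
        self.channel.append(event)

    def drain(self):
        """Sink side: deliver buffered events in batches; return the count delivered."""
        delivered = 0
        while self.channel:
            size = min(self.batch_size, len(self.channel))
            batch = [self.channel.popleft() for _ in range(size)]
            self.sink(batch)
            delivered += len(batch)
        return delivered


store = []  # hypothetical stand-in for a centralized store such as HDFS
agent = MiniAgent(sink=store.append, batch_size=2)

# Events arriving from several web servers are staged in the channel.
for line in ["web1 GET /", "web2 GET /login", "web1 POST /order"]:
    agent.receive(line)

delivered = agent.drain()
```

The channel is what lets the source keep accepting events while the sink writes at its own pace; in this sketch it is an in-memory queue, so anything still buffered would be lost if the process stopped.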

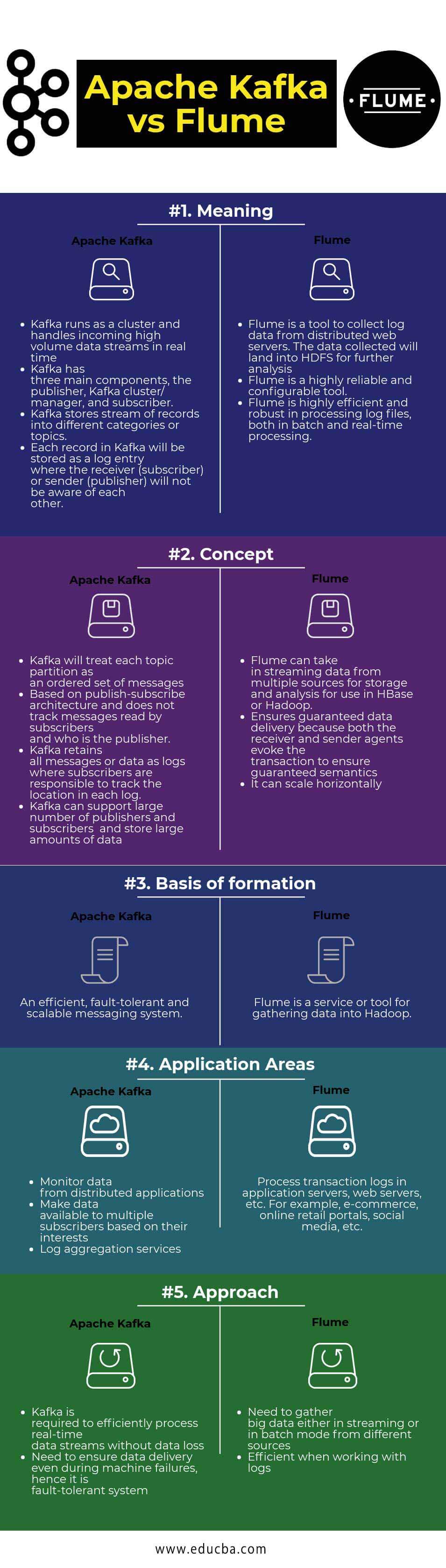

Head-to-Head Comparison Between Apache Kafka vs Flume (Infographics)

Below are the top 5 comparisons between Apache Kafka and Flume:

Key Differences Between Apache Kafka vs Flume

The differences between Apache Kafka vs Flume are explored here:

- Apache Kafka and Flume are both reliable, scalable, and high-performance systems for handling large volumes of data. However, Kafka is a more general-purpose system in which multiple publishers and subscribers can share multiple topics. In contrast, Flume is a special-purpose tool for sending data into HDFS.

- Kafka can support data streams for multiple applications, whereas Flume is specific to Hadoop and big data analysis.

- Kafka can process and monitor data in distributed systems, whereas Flume gathers data from distributed systems to land data on a centralized data store.

- When configured correctly, both Apache Kafka and Flume are highly reliable and can guarantee zero data loss. Kafka replicates data in the cluster, whereas Flume does not replicate events. Hence, when a Flume agent crashes, the events in its channel are inaccessible until the disk is recovered. Kafka, on the other hand, keeps data available even when a single node fails.

- Kafka supports large sets of publishers and subscribers and multiple applications. On the other hand, Flume supports a large set of source and destination types to land data on Hadoop.
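
The availability difference between replicated and unreplicated storage noted in the list above can be sketched with a toy simulation. It does not reproduce Kafka’s actual replication protocol or Flume’s channel implementation: `ReplicatedLog`, `fail`, and `read` are hypothetical names, and the model simply treats a failed node’s data as unavailable without modeling later disk recovery.

```python
class ReplicatedLog:
    """Toy model: every write is copied to all live replicas."""

    def __init__(self, replica_count):
        # One independent copy of the log per replica.
        self.replicas = {i: [] for i in range(replica_count)}

    def append(self, event):
        """Write the event to every live replica."""
        for log in self.replicas.values():
            log.append(event)

    def fail(self, replica_id):
        """Simulate losing one node and everything stored on it."""
        del self.replicas[replica_id]

    def read(self):
        """Read from any surviving replica; return an empty list if none survive."""
        for log in self.replicas.values():
            return list(log)
        return []


replicated = ReplicatedLog(replica_count=3)    # Kafka-like: three copies
unreplicated = ReplicatedLog(replica_count=1)  # Flume-channel-like: one copy

for event in ["e1", "e2"]:
    replicated.append(event)
    unreplicated.append(event)

# The same single-node failure hits both setups.
replicated.fail(0)
unreplicated.fail(0)

after_failure_replicated = replicated.read()
after_failure_unreplicated = unreplicated.read()
```

With three copies, the events remain readable from a surviving replica; with one copy, nothing can be read until the failed node’s storage is brought back.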

Apache Kafka vs Flume Comparison Table

The comparison table between Apache Kafka vs Flume is mentioned below:

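| Basis for Comparison | Apache Kafka | Flume |
| --- | --- | --- |
| Meaning | A durable, scalable, fault-tolerant publish-subscribe messaging system for processing data in real time. | A distributed service that collects, aggregates, and transfers data streams to a centralized data store such as HDFS. |
| Concept | General-purpose: multiple publishers and subscribers can share multiple topics. | Special-purpose: sends data into HDFS. |
| Basis of Formation | Developed at LinkedIn to overcome the limitations of batch processing and to resolve issues of data loss. | Designed to gather streaming data from different web servers into HDFS. |
| Application Areas | Website activity tracking, stream processing, collecting and monitoring metrics, log aggregation. | Landing application logs, sensor and machine data, and social media streams in Hadoop for analysis. |
| Approach | Replicates data in the cluster, so data stays available after a single-point failure. | Does not replicate events; events in a crashed agent’s channel are inaccessible until the disk is recovered. |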

Conclusion

Apache Kafka and Flume both offer reliable, distributed, and fault-tolerant systems for aggregating and collecting large volumes of data from multiple streams and big data applications. Both can be scaled and configured to suit different computing needs. Kafka’s architecture provides fault tolerance by design, whereas Flume can be tuned to ensure fail-safe operation. Users planning to implement these systems must first understand the use case and implement it appropriately to ensure high performance and realize the full benefits.
