Updated February 18, 2023

Introduction to Avro Schema Evolution

Avro schema evolution lets us update the schema used to write new data while keeping compatibility with the schema of our existing data, so that all of the data can be read together as if it shared a single schema. A precise set of rules, known as schema resolution, governs which changes are compatible. Because schemas change over the life of most applications, controlling schema evolution is a central part of data management when working with Avro.

What is Avro Schema Evolution?

If we have an Avro file and want to change its schema, we can rewrite the file with the new schema embedded in it. With terabytes of Avro files, however, rewriting all of the data every time the schema changes is impractical. Avro schema evolution addresses this: we update the schema and use it to write new data while keeping backward compatibility with the schema of our previous data, so that all of the data can then be read together under one schema. Reader and writer schemas have other uses as well. For example, a reader schema can act as a filter: if a record has hundreds of fields but we are interested in only a handful, we can define a schema containing just those fields and read only the data we need.
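The reader-as-filter idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how an Avro library works internally: it assumes records have already been decoded into Python dicts (a real Avro decoder skips unwanted fields while reading the binary data), and the function name `project` and the sample field names are hypothetical.

```python
# Minimal sketch of using a reader schema as a projection filter.
# Assumption: records are already decoded into dicts; real Avro readers
# skip the unwanted fields during binary decoding instead.

def project(record: dict, reader_schema: dict) -> dict:
    """Keep only the fields named in the reader schema, in reader order."""
    wanted = [field["name"] for field in reader_schema["fields"]]
    # A KeyError here mirrors Avro's rule that a reader field must exist
    # in the written data (or have a default, which this sketch ignores).
    return {name: record[name] for name in wanted}


# Hypothetical wide record written with a large writer schema.
full_record = {"id": 7, "name": "Asha", "city": "Pune", "age": 31, "plan": "gold"}

# Hypothetical reader schema that asks for just two of the fields.
reader_schema = {
    "type": "record",
    "name": "user_subset",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "plan", "type": "string"},
    ],
}

print(project(full_record, reader_schema))  # {'name': 'Asha', 'plan': 'gold'}
```

Only the two requested fields survive, which is the effect a narrow reader schema has on a wide data set.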

Schema evolution is therefore central to data management with Avro. Once an initial schema has been defined, the application will need to evolve it over time. When that happens, it is critical that downstream consumers can handle data encoded with both the old and the new schema; schema evolution makes it possible to change the schema used for writing new data without breaking those consumers.

How Does Avro Schema Evolution Work?

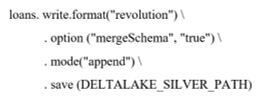

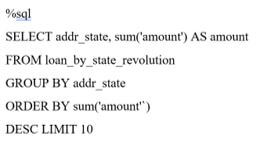

Let us see how schema evolution works in practice. Developers can use schema evolution to add new columns that would otherwise be rejected because of a schema mismatch. In Spark (for example, when writing to Delta Lake tables), schema evolution is enabled by adding ‘.option("mergeSchema", "true")’ to the ‘.write’ or ‘.writeStream’ command.

1. Add the ‘mergeSchema’ option to the write command.

2. To plot the result and confirm that the new column was written, run a Spark SQL query.

When we include ‘mergeSchema’ in our query, any columns that are present in the DataFrame but not in the target table are automatically appended to the end of the schema as part of the write transaction. Nested fields can also be added; they are appended to the end of their respective struct columns. Data scientists and data engineers can use this option to add new columns to their existing production tables without breaking workloads that rely on the old columns.
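The merge behavior described above (new top-level columns go to the end of the schema, new nested fields go to the end of their struct) can be modeled with a small Python sketch. This is not Spark's or Delta Lake's implementation; it assumes a hypothetical schema representation in which a schema is a list of `(name, type)` pairs and a struct type is itself such a list. The function name `merge_schema` and the sample columns are illustrative only.

```python
# Sketch of merge-schema semantics, NOT Spark's actual implementation.
# Assumption: a schema is a list of (name, type) pairs, where type is a
# type-name string or, for a struct column, a nested list of pairs.

def merge_schema(table, incoming):
    """Return the table schema with any new columns from `incoming` added."""
    merged = list(table)
    positions = {name: i for i, (name, _) in enumerate(merged)}
    for name, col_type in incoming:
        if name not in positions:
            # A brand-new column is appended to the end of the schema.
            positions[name] = len(merged)
            merged.append((name, col_type))
            continue
        existing_type = merged[positions[name]][1]
        if isinstance(existing_type, list) and isinstance(col_type, list):
            # New nested fields are appended to the end of their struct.
            merged[positions[name]] = (name, merge_schema(existing_type, col_type))
        elif existing_type != col_type:
            # Changing an existing column's type is not a merge; reject it.
            raise ValueError(f"type mismatch for column {name!r}")
    return merged


table = [("id", "long"), ("address", [("city", "string")])]
incoming = [
    ("id", "long"),
    ("sales", "double"),
    ("address", [("city", "string"), ("zip", "string")]),
]
print(merge_schema(table, incoming))
# [('id', 'long'), ('address', [('city', 'string'), ('zip', 'string')]), ('sales', 'double')]
```

Existing columns keep their positions, so readers that rely on the old columns are unaffected.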

To store data in a file or send it over the network, we first have to serialize it. In Java, for example, we could use the language's built-in serialization, but that locks us into a single programming language. To avoid this, we should use a widely supported, language-independent format such as JSON or XML.
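As a small illustration of language-independent serialization, the Python snippet below uses the standard `json` module to turn a record into text that any JSON-capable language could parse, then reads it back. The record contents are hypothetical.

```python
import json

# Hypothetical record to be stored in a file or sent over the network.
record = {"name": "Asha", "favorite_flower": "lotus"}

# Serialize to JSON text, which many programming languages can parse.
payload = json.dumps(record)
print(payload)  # {"name": "Asha", "favorite_flower": "lotus"}

# Deserialize; a reader written in another language could do the same.
restored = json.loads(payload)
print(restored == record)  # True
```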

Avro Schema Evolution Field Type

In Avro, type promotion means that data written with one type can be read as another type. The Avro primitive types and their promotion rules are listed below:

- null: No value. It cannot be promoted.

- boolean: A binary value (true or false). It cannot be promoted.

- int: A 32-bit signed integer. It can be promoted to long, float, or double.

- long: A 64-bit signed integer. It can be promoted to float or double.

- float: A single-precision (32-bit) IEEE 754 floating-point number. It can be promoted to double.

- double: A double-precision (64-bit) IEEE 754 floating-point number. It cannot be promoted.

- bytes: A sequence of 8-bit unsigned bytes. It can be promoted to string.

- string: A sequence of Unicode characters. It can be promoted to bytes.
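The promotion rules above can be encoded as a lookup table. The Python sketch below is a simplified model, not an Avro library: it covers primitive types only, assumes values are already decoded into Python objects, and uses UTF-8 for the bytes/string conversions. The names `PROMOTIONS`, `can_read_as`, and `promote` are hypothetical.

```python
# Writer type -> reader types it may be promoted to, per the list above.
PROMOTIONS = {
    "null": set(),
    "boolean": set(),
    "int": {"long", "float", "double"},
    "long": {"float", "double"},
    "float": {"double"},
    "double": set(),
    "bytes": {"string"},
    "string": {"bytes"},
}


def can_read_as(writer_type: str, reader_type: str) -> bool:
    """True if data written as writer_type can be read as reader_type."""
    if writer_type not in PROMOTIONS or reader_type not in PROMOTIONS:
        raise ValueError("only Avro primitive type names are supported")
    return writer_type == reader_type or reader_type in PROMOTIONS[writer_type]


def promote(value, writer_type: str, reader_type: str):
    """Convert an already-decoded value to the reader type (sketch only)."""
    if not can_read_as(writer_type, reader_type):
        raise TypeError(f"cannot read {writer_type} as {reader_type}")
    if reader_type in ("float", "double") and writer_type != reader_type:
        return float(value)
    if writer_type == "bytes" and reader_type == "string":
        return value.decode("utf-8")
    if writer_type == "string" and reader_type == "bytes":
        return value.encode("utf-8")
    return value  # same type, or int -> long (both are Python ints)


print(can_read_as("int", "double"))         # True
print(can_read_as("double", "float"))       # False
print(promote(3, "int", "double"))          # 3.0
print(promote(b"lotus", "bytes", "string")) # lotus
```

Note that the sketch relies on Python's own float, so it does not model the precision loss that can occur when a large long is read as a float.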

Example of Avro Schema Evolution

Given below is an example of Avro schema evolution:

The following example extends the earlier user schema with a new field, ‘favorite_flower’.

Code:

```json
{
  "namespace": "evolution.avro",
  "type": "record",
  "name": "user",
  "fields": [
    {"name": "name", "type": "string"},
    {"name": "favorite_fruit", "type": "int"},
    {"name": "favorite_flower", "type": "string", "default": "lotus"}
  ]
}
```

In the above example, the new field ‘favorite_flower’ has the default value ‘lotus’. Because this default is defined in the new schema, data encoded with the previous schema can be read with the new one: when deserializing old data, the reader uses the default value for the field that is absent. If the default value were omitted from the new field, the new schema would not be backward compatible with the old one, because the reader would have no way of knowing what value to assign to a field that is missing from the old data.
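The default-filling step can be sketched in Python. This is a simplified model of Avro schema resolution for flat records, not an Avro library: it assumes the old data has already been decoded into a dict, ignores type promotion and nested types, and uses the hypothetical function name `resolve_record`. As in Avro, fields present in the written data but absent from the reader schema are simply dropped.

```python
# Sketch of reading old data with a new reader schema (flat records only).
# Assumption: the old record is already decoded into a dict.

def resolve_record(old_record: dict, reader_schema: dict) -> dict:
    result = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in old_record:
            result[name] = old_record[name]       # value written by old schema
        elif "default" in field:
            result[name] = field["default"]       # absent: use the default
        else:
            raise ValueError(f"field {name!r} is missing and has no default")
    # Writer-only fields are never copied, so they are silently skipped.
    return result


# The evolved "user" schema from the example above.
new_schema = {
    "namespace": "evolution.avro",
    "type": "record",
    "name": "user",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "favorite_fruit", "type": "int"},
        {"name": "favorite_flower", "type": "string", "default": "lotus"},
    ],
}

# A hypothetical record written with the old schema (no favorite_flower).
old_record = {"name": "Ravi", "favorite_fruit": 3}
print(resolve_record(old_record, new_schema))
# {'name': 'Ravi', 'favorite_fruit': 3, 'favorite_flower': 'lotus'}

# The same schema without the default cannot read the old record.
strict_schema = {
    **new_schema,
    "fields": [{k: v for k, v in f.items() if k != "default"}
               for f in new_schema["fields"]],
}
try:
    resolve_record(old_record, strict_schema)
except ValueError as err:
    print(err)  # field 'favorite_flower' is missing and has no default
```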

Conclusion

This article shows that schema evolution is central to data management. When we use Avro, the schema is managed alongside the data, and schema resolution rules govern how it can evolve; this article should help readers understand the Avro schema evolution concept.
