What is Bayes’ Theorem?

Bayes’ theorem is a formula that describes how to update the probabilities of hypotheses when given evidence. It follows directly from the axioms of conditional probability, yet it can be used to reason powerfully about a wide range of problems, including belief updates.

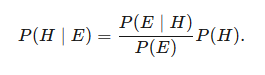

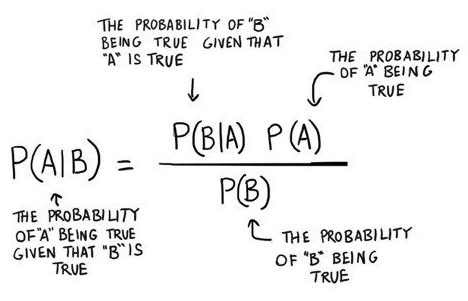

Given a hypothesis H and evidence E, Bayes’ theorem states that the relationship between the probability of the hypothesis before getting the evidence, P(H), and the probability of the hypothesis after getting the evidence, P(H∣E), is:

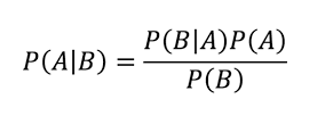
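For reference, the standard form of Bayes’ theorem in this notation can be written in LaTeX as follows. This is the usual textbook statement, and it assumes P(E) is nonzero:

```latex
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
```

Here P(E∣H) is the probability of seeing the evidence if the hypothesis is true, and P(E) is the overall probability of the evidence.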

It is a beautiful concept in probability, where we find one probability when we know certain other probabilities:

- It tells us how often A happens given that B happens, written P(A|B)

- When we know how often B happens given that A happens, written P(B|A)

- And how likely A is on its own, written P(A)

- And how likely B is on its own, written P(B)

Example of Bayes’ Theorem

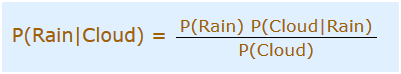

You are planning a picnic today, but the morning is cloudy. Oh no! 50% of all rainy days start off cloudy! But cloudy mornings are common (about 40% of days start cloudy). And this is usually a dry month (only 3 of 30 days tend to be rainy, or 10%). What is the probability of rain during the day? We will use Rain to mean rain during the day and Cloud to mean a cloudy morning. The chance of Rain given Cloud is written P(Rain|Cloud).

So let’s put that in the formula:

- P(Rain) is the probability of rain during the day = 10% (given)

- P(Cloud|Rain) is the probability of a cloudy morning given that it rains during the day = 50%

- P(Cloud) is the probability of a cloudy morning = 40%

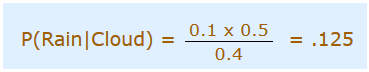

So we can say that the chance of rain, given a cloudy morning, is P(Rain|Cloud) = (0.1 × 0.5) / 0.4 = 0.125, or 12.5%.
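The picnic calculation can be checked with a few lines of Python. This is a minimal sketch: the function name `bayes_posterior` and the variable names are our own, and the three input values are the ones given in the example above.

```python
def bayes_posterior(prior, likelihood, evidence):
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    if evidence <= 0:
        raise ValueError("P(B) must be positive")
    return likelihood * prior / evidence


# Values taken from the picnic example.
p_rain = 0.10              # P(Rain): 3 of 30 days are rainy
p_cloud_given_rain = 0.50  # P(Cloud|Rain): half of rainy days start cloudy
p_cloud = 0.40             # P(Cloud): 40% of days start cloudy

p_rain_given_cloud = bayes_posterior(p_rain, p_cloud_given_rain, p_cloud)
print(f"P(Rain|Cloud) = {p_rain_given_cloud:.3f}")  # prints P(Rain|Cloud) = 0.125
```

A cloudy morning raises the chance of rain from the 10% baseline to 12.5%, which is still fairly low.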

That is Bayes’ theorem: you can use the probability of one thing to predict the probability of another. But Bayes’ theorem is not a static thing. It is a machine that you crank to make better and better predictions as new evidence surfaces. An interesting exercise is to twiddle the variables by assigning different hypothetical values to P(B) or P(A) and consider their logical impact on P(A|B). For example, if you increase the denominator P(B) on the right, then P(A|B) goes down. Concrete example: a runny nose is a symptom of measles, but runny noses are far more common than skin rashes with tiny white spots.

That is, if you choose P(B), where B is a runny nose, then the frequency of runny noses in the general population decreases the chance that a runny nose is a sign of measles. The probability of a measles diagnosis goes down for symptoms that become increasingly common; those symptoms are not strong indicators. Likewise, as measles becomes more common and P(A) goes up in the numerator on the right, P(A|B) necessarily goes up, because measles is simply more likely regardless of the symptom you consider.
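This exercise can be tried numerically. The following is a minimal sketch with made-up numbers (they are not real measles statistics), and the function name `posterior` is our own. As a simplification, P(B) is held fixed while P(A) varies, which a full model would not do, since P(B) itself depends on P(A).

```python
def posterior(p_a, p_b_given_a, p_b):
    """Bayes' theorem: P(A|B) = P(B|A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b


# Hypothetical value for illustration only: P(symptom | measles).
P_SYMPTOM_GIVEN_MEASLES = 0.9

# 1) Make the symptom more common: P(B) rises, so P(A|B) falls.
falling = [posterior(0.01, P_SYMPTOM_GIVEN_MEASLES, p_b)
           for p_b in (0.05, 0.2, 0.5)]

# 2) Make measles more common: P(A) rises, so P(A|B) rises.
rising = [posterior(p_a, P_SYMPTOM_GIVEN_MEASLES, 0.2)
          for p_a in (0.01, 0.05, 0.1)]

print("P(A|B) as P(B) grows:", [round(p, 3) for p in falling])
print("P(A|B) as P(A) grows:", [round(p, 3) for p in rising])
```

The first list shrinks and the second grows, matching the reasoning about common symptoms and common diseases.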

Use of Bayes’ Theorem in Machine Learning

The main use of Bayes’ theorem in machine learning is described below:

Naive Bayes Classifier:

Naive Bayes is a classification algorithm for binary (two-class) and multi-class classification problems. The technique is easiest to understand when described using binary or categorical input values. It is called naive Bayes or idiot Bayes because the calculation of the probabilities for each hypothesis is simplified to make it tractable. Rather than attempting to calculate the joint probability of the attribute values, P(d1, d2, d3|h), the attributes are assumed to be conditionally independent given the target value, and the probability is calculated as P(d1|h) * P(d2|h) * P(d3|h).

This is a very strong assumption that is most unlikely to hold in real data: it says that the attributes do not interact. Nevertheless, the approach performs surprisingly well on data where this assumption does not hold.
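Written out, the conditional-independence assumption and the resulting scoring rule look like this. The block is a LaTeX sketch for three attributes, using h for a class (hypothesis) and d_i for attribute values; the proportionality sign reflects that the shared denominator P(d_1, d_2, d_3) is dropped when comparing classes:

```latex
P(d_1, d_2, d_3 \mid h) = P(d_1 \mid h)\, P(d_2 \mid h)\, P(d_3 \mid h)

P(h \mid d_1, d_2, d_3) \;\propto\; P(h) \prod_{i=1}^{3} P(d_i \mid h)
```

The predicted class is the h with the largest value of the right-hand side.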

Representation Used By Naive Bayes Models:

The representation of a naive Bayes model is a set of probabilities. This set of probabilities is stored to file for a learned naive Bayes model.

This includes:

- Class Probabilities: The probability of each class in the training dataset.

- Conditional Probabilities: The conditional probability of each input value given each class value.

Learning a Naive Bayes Model From Data

Learning a naive Bayes model from training data is fast. Training is fast because only the probability of each class and the probability of each input (x) value given each class need to be calculated. No coefficients need to be fitted by optimization procedures.

Calculating Class Probabilities

A class probability is simply the frequency of instances that belong to each class divided by the total number of instances.

For example, in a binary classification problem, the probability of an instance belonging to class 1 is calculated as:

P(class=1) = count(class=1) / (count(class=0) + count(class=1))

In the simplest case, each class has a probability of 0.5, or 50%, for a binary classification problem with the same number of instances in each class.

Calculating Conditional Probabilities

The conditional probabilities are the frequency of each attribute value for a given class value divided by the frequency of instances with that class value.
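Both counting steps, and the prediction rule they feed, can be sketched in plain Python. The dataset, the function names `train_naive_bayes` and `predict`, and the attribute and class labels below are all hypothetical, chosen only to illustrate the idea. The sketch applies no smoothing, so an attribute value never seen with a class gets probability zero.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Estimate class and conditional probabilities by counting."""
    class_counts = Counter(labels)
    total = len(labels)
    # Class probability: instances in the class / all instances.
    class_probs = {c: n / total for c, n in class_counts.items()}

    # value_counts[(attribute_index, class)][value] = number of matching rows
    value_counts = defaultdict(Counter)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1

    # Conditional probability: count(value, class) / count(class).
    cond_probs = {
        (i, c): {v: n / class_counts[c] for v, n in counts.items()}
        for (i, c), counts in value_counts.items()
    }
    return class_probs, cond_probs


def predict(row, class_probs, cond_probs):
    """Score each class as P(class) * product of P(value|class)."""
    scores = {}
    for c, prior in class_probs.items():
        score = prior
        for i, value in enumerate(row):
            score *= cond_probs[(i, c)].get(value, 0.0)  # unseen value -> 0
        scores[c] = score
    return max(scores, key=scores.get), scores


# Hypothetical toy data: (weather, car) -> activity
rows = [
    ("sunny", "working"),
    ("sunny", "broken"),
    ("rainy", "working"),
    ("rainy", "broken"),
    ("sunny", "working"),
    ("rainy", "broken"),
]
labels = ["go-out", "go-out", "go-out", "stay-home", "go-out", "stay-home"]

class_probs, cond_probs = train_naive_bayes(rows, labels)
best, scores = predict(("sunny", "working"), class_probs, cond_probs)
print(class_probs)
print(best, scores)
```

On this toy data, a sunny day with a working car is classified as "go-out", because no "stay-home" instance has either of those attribute values.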

Applications of Bayes’ Theorem

There are plenty of applications of Bayes’ theorem in the real world. Don’t worry if you don’t understand all the mathematics involved right away. Just getting a sense of how it works is enough to start.

Bayesian decision theory is a statistical approach to the problem of pattern classification. Under this theory, it is assumed that the underlying probability distribution for the classes is known. Thus, we obtain an ideal Bayes classifier against which every other classifier is judged for performance.

The three main applications of Bayes’ theorem are:

- Naive Bayes Classifier

- Discriminant Functions and Decision Surfaces

- Bayesian Parameter Estimation

Conclusion

The beauty and power of Bayes’ theorem never cease to amaze me. A simple concept, given by a minister who died more than 250 years ago, is used in some of the most prominent machine learning techniques today.
