Introduction to Black Box Testing

Black Box Testing is a critical software testing methodology that focuses on evaluating the functionality of a software system without having detailed knowledge of its internal structure or code. Unlike White Box Testing, which examines the internal logic and structure of the software, Black Box Testing treats the software as a “black box,” testing only the inputs and outputs against expected behavior.


Definition of Black Box Testing

Black Box Testing involves testing a software application’s functionalities without knowing its internal code structure, algorithms, or implementation details. Testers interact with the software through its user interface, APIs, or other interfaces to assess its behavior and validate whether it meets specified requirements.

Comparison with White Box Testing

While Black Box Testing focuses on external behavior and functionality, White Box Testing examines the internal logic, code structure, and implementation details of the software. Black Box Testing is oriented towards validation from the user’s perspective, whereas White Box Testing is concerned with verification and code coverage. Each approach has its advantages and limitations, and they are often used together to achieve comprehensive test coverage.

Techniques of Black Box Testing

The test cases designed for a system largely determine how effective its testing is, so both how they are created and which scenarios they cover deserve careful consideration.

Testers can derive test cases from the requirement specifications by using the following techniques:

- Equivalence Partitioning

- Boundary Value Analysis

- Decision Table Testing

- State Transition Testing

- Error Guessing

- Graph-based Testing Methods

- Comparison Testing

- Use Case Technique

Each of these techniques is explained below.

1. Equivalence Partitioning

Equivalence Partitioning operates on the principle that inputs can be divided into groups or partitions that are expected to exhibit similar behavior. Test cases are then derived from each partition to represent the entire group. This technique assumes that if one test case within a partition detects an error, other test cases within the same partition are likely to encounter similar issues.

Process of Equivalence Partitioning

- Identify Input Classes: Analyze the input requirements and identify different classes or categories of inputs that are expected to produce similar results.

- Partition Inputs: Divide the input domain into partitions based on the identified classes. Inputs within each partition are considered equivalent and are expected to produce the same output behavior.

- Select Representative Values: Choose one or more representative values from each partition. Because all values in a partition are assumed to behave the same way, a single value can stand in for the whole partition.

- Design Test Cases: Develop test cases using the selected representative values so that every partition, both valid and invalid, is exercised by at least one test case.

- Execute Test Cases: Execute the designed test cases against the software system and observe the output behavior. Verify whether the system behaves as expected for each input partition.

Example

Consider a software system that requires users to input their age, which must be between 18 and 65 years old (inclusive).

Input Classes:

- Invalid Age, too low (less than 18)

- Valid Age (18 to 65)

- Invalid Age, too high (greater than 65)

Equivalence Partitions:

- Invalid Age, too low: {-5, 10, 16}

- Valid Age: {18, 30, 50, 65}

- Invalid Age, too high: {70}

Test Cases:

- Test Case 1: Input = 16 (Invalid Age)

- Test Case 2: Input = 30 (Valid Age)

- Test Case 3: Input = 70 (Invalid Age)
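The example can be turned into an automated check. This is a minimal sketch that assumes a hypothetical validator `is_valid_age` standing in for the system under test; it runs the three test cases above (one representative per partition) and then confirms that every listed member of each partition behaves like its representative.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical system under test: accepts ages from 18 to 65 inclusive."""
    return 18 <= age <= 65


# Partition name -> (members listed in the example, expected result for every member)
PARTITIONS = {
    "invalid (below 18)": ([-5, 10, 16], False),
    "valid (18 to 65)": ([18, 30, 50, 65], True),
    "invalid (above 65)": ([70], False),
}

# The three test cases from the example: one representative per partition.
TEST_CASES = [(16, False), (30, True), (70, False)]


def run_test_cases() -> None:
    for age, expected in TEST_CASES:
        assert is_valid_age(age) == expected, f"age {age}"


def check_partition_assumption() -> None:
    # Sanity check of the equivalence assumption: every member of a
    # partition should produce the same result as its representative.
    for name, (members, expected) in PARTITIONS.items():
        for age in members:
            assert is_valid_age(age) == expected, f"{name}: age {age}"


run_test_cases()
check_partition_assumption()
print("equivalence partition tests pass")
```

In practice only `run_test_cases` is needed; the partition check simply makes the underlying assumption visible.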

2. Boundary Value Analysis

Boundary Value Analysis (BVA) is a black box testing technique that tests the boundaries of input ranges rather than their interior values. It aims to identify errors at the boundaries or extremes of input domains, where the behavior of the software system is most likely to change.

Implementation Steps

- Identify Input Boundaries: Analyze the input requirements and identify the boundaries or limits of each input parameter.

- Determine Boundary Values: Determine the values immediately before and after each boundary for each input parameter. These values are crucial as they are more likely to expose errors in boundary conditions.

- Design Test Cases: Develop test cases that include boundary values for each input parameter. Test cases should cover both the lower and upper boundaries, as well as values just inside and just outside the boundaries.

- Include Normal Values: In addition to boundary values, include test cases with normal values within the valid input range to ensure comprehensive testing.

- Execute Test Cases: Execute the designed test cases against the software system and observe the behavior at the boundaries. Verify whether the system handles boundary values correctly and produces the expected output.

Example

Date Range: consider an input field that accepts dates from January 1, 2022, to December 31, 2022, inclusive.

Boundary Values:

- Lower Boundary: January 1, 2022

- Upper Boundary: December 31, 2022

- Just Before Lower Boundary: December 31, 2021

- Just After Upper Boundary: January 1, 2023

Test Cases:

- Test Case 1: Input = December 31, 2021 (Just Before Lower Boundary)

- Test Case 2: Input = January 1, 2022 (Lower Boundary)

- Test Case 3: Input = July 1, 2022 (Within Range)

- Test Case 4: Input = December 31, 2022 (Upper Boundary)

- Test Case 5: Input = January 1, 2023 (Just After Upper Boundary)
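The boundary values above can be generated rather than typed by hand. The sketch below is illustrative only: `is_in_range` is a hypothetical stand-in for the system under test, and the helper `boundary_test_cases` builds the five test cases (just outside, on, and inside each boundary) using one day as the smallest step.

```python
from datetime import date, timedelta
from typing import List, Tuple

LOWER = date(2022, 1, 1)
UPPER = date(2022, 12, 31)


def is_in_range(d: date) -> bool:
    """Hypothetical system under test: accepts dates in 2022, inclusive."""
    return LOWER <= d <= UPPER


def boundary_test_cases(lower: date, upper: date, normal: date) -> List[Tuple[date, bool]]:
    """Build (input, expected) pairs around both boundaries plus one normal value."""
    one_day = timedelta(days=1)
    return [
        (lower - one_day, False),  # just before lower boundary
        (lower, True),             # lower boundary
        (normal, True),            # normal value within range
        (upper, True),             # upper boundary
        (upper + one_day, False),  # just after upper boundary
    ]


cases = boundary_test_cases(LOWER, UPPER, date(2022, 7, 1))
for value, expected in cases:
    assert is_in_range(value) == expected, value
print("boundary value tests pass")
```

Using `timedelta` keeps the "just before" and "just after" values correct across month and year ends, which is exactly where hand-written boundary dates tend to go wrong.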

3. Decision Table Testing

Decision tables are a powerful tool used in software testing to represent complex logical expressions and business rules in a structured and organized format. They provide a systematic approach for defining and analyzing the behavior of a system under different combinations of conditions. Decision tables are tabular representations that map conditions to actions or outcomes. They allow testers and developers to document and visualize the relationships between input conditions and corresponding actions, making it easier to understand and test the behavior of a system.

Example

ATM Withdrawal Decision Table

Consider a scenario where a user is attempting to withdraw cash from an ATM. The decision table can be constructed as follows:

| Card Validity (Condition) | Account Balance (Condition) | Withdrawal Amount (Condition) | Action |
|---|---|---|---|
| Valid | Sufficient | Within Limit | Dispense Cash |
| Valid | Sufficient | Exceeds Limit | Display Error: Exceeds Limit |
| Valid | Insufficient | – | Display Error: Insufficient Funds |
| Invalid | – | – | Display Error: Invalid Card |
| – | – | – | Display Error: System Error |

Explanation:

- Conditions:

  - Card Validity: Valid or Invalid

  - Account Balance: Sufficient or Insufficient

  - Withdrawal Amount: Within Limit or Exceeds Limit

  - A dash (–) in a cell means the condition does not affect the outcome of that rule.

- Actions:

  - Dispense Cash: If all conditions are met, cash is dispensed.

  - Display Error Messages: An error message matching the failed condition is displayed.

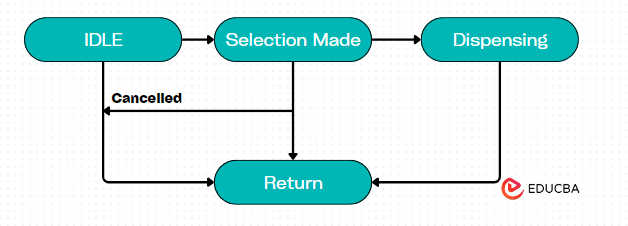
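A decision table translates almost directly into data plus a small rule matcher, and each row becomes one test case. In this sketch a hyphen `"-"` plays the role of the dash (don't care), rules are checked top to bottom, and the all-dash System Error row is treated as a fallback that only fires when no earlier rule matches; that reading of the last row, the function name `decide`, and the card status `"Unreadable"` are assumptions for illustration.

```python
# Each rule: (card_validity, account_balance, withdrawal_amount, action).
# "-" means "don't care": the condition does not affect that rule.
RULES = [
    ("Valid", "Sufficient", "Within Limit", "Dispense Cash"),
    ("Valid", "Sufficient", "Exceeds Limit", "Display Error: Exceeds Limit"),
    ("Valid", "Insufficient", "-", "Display Error: Insufficient Funds"),
    ("Invalid", "-", "-", "Display Error: Invalid Card"),
    # Assumption: the all-dash row is a fallback, checked last.
    ("-", "-", "-", "Display Error: System Error"),
]


def decide(card: str, balance: str, amount: str) -> str:
    """Return the action of the first rule whose conditions all match."""
    for *conditions, action in RULES:
        if all(rule == "-" or rule == value
               for rule, value in zip(conditions, (card, balance, amount))):
            return action
    raise LookupError("no rule matched")  # unreachable while the fallback row exists


# One test case per rule (row) of the decision table.
TEST_CASES = [
    (("Valid", "Sufficient", "Within Limit"), "Dispense Cash"),
    (("Valid", "Sufficient", "Exceeds Limit"), "Display Error: Exceeds Limit"),
    (("Valid", "Insufficient", "Within Limit"), "Display Error: Insufficient Funds"),
    (("Invalid", "Sufficient", "Within Limit"), "Display Error: Invalid Card"),
    # Hypothetical unexpected card status, used to reach the fallback row.
    (("Unreadable", "Sufficient", "Within Limit"), "Display Error: System Error"),
]

for inputs, expected in TEST_CASES:
    assert decide(*inputs) == expected, (inputs, expected)
print("all decision-table rules pass")
```

Keeping the rules as data means a new business rule is one new row in `RULES` and one new entry in `TEST_CASES`.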

4. State Transition Testing

State Transition Testing is a black box testing technique that focuses on testing the behavior of a system as it transitions between different states. It is particularly useful for systems with discrete states, such as finite state machines, where the behavior of the system is determined by its current state and the input it receives.

Example:

Traffic Light System

Consider a traffic light system with three states: Red, Yellow, and Green. The system transitions between these states based on a predefined sequence: Red -> Green -> Yellow -> Red.

Test Cases:

- Transition from Red to Green: Verify that the system transitions from the Red state to the Green state when the appropriate input (e.g., timer expiration) is received.

- Transition from Green to Yellow: Verify that the system transitions from the Green state to the Yellow state when the appropriate input is received.

- Transition from Yellow to Red: Verify that the system transitions from the Yellow state to the Red state when the appropriate input is received.

- Invalid Transition: Verify that the system does not transition from the Red state to any other state when an invalid input is received.
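These four test cases can be written against a transition table. The sketch assumes the "appropriate input" is an event named `timer_expired` and that an invalid input leaves the light in its current state; both the event names and the `next_state` function are hypothetical.

```python
# Transition table: (current state, event) -> next state.
# "timer_expired" is a hypothetical name for the "appropriate input".
TRANSITIONS = {
    ("Red", "timer_expired"): "Green",
    ("Green", "timer_expired"): "Yellow",
    ("Yellow", "timer_expired"): "Red",
}


def next_state(state: str, event: str) -> str:
    """Return the next state; an unknown (invalid) event leaves the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def test_valid_transitions() -> None:
    assert next_state("Red", "timer_expired") == "Green"
    assert next_state("Green", "timer_expired") == "Yellow"
    assert next_state("Yellow", "timer_expired") == "Red"


def test_invalid_transition() -> None:
    # Invalid input while Red: the light must stay Red.
    assert next_state("Red", "button_pressed") == "Red"


def test_full_cycle() -> None:
    # Follow the whole predefined sequence Red -> Green -> Yellow -> Red.
    state = "Red"
    visited = []
    for _ in range(3):
        state = next_state(state, "timer_expired")
        visited.append(state)
    assert visited == ["Green", "Yellow", "Red"]


test_valid_transitions()
test_invalid_transition()
test_full_cycle()
print("state transition tests pass")
```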

5. Error Guessing

Error Guessing is an informal and intuitive black box testing technique where testers use their experience, knowledge, and intuition to identify potential errors or defects in the software system. Unlike systematic testing techniques that rely on predefined test cases, Error Guessing relies on testers’ ability to anticipate and guess where errors might occur based on past experiences, common pitfalls, and understanding of the system’s functionality.

Definition

Error Guessing involves brainstorming potential errors or defects in the software system based on testers’ expertise and familiarity with the application under test. Testers use their intuition and past experiences to identify areas of the software that are more likely to contain defects or vulnerabilities. This technique does not follow a specific algorithm or methodology but rather relies on testers’ judgment and creativity.

When to Apply Error Guessing

- Exploratory Testing: Error Guessing is often used during exploratory testing sessions, where testers explore the software system dynamically and identify potential issues on-the-fly.

- Ad Hoc Testing: When time and resources are limited, testers may resort to Error Guessing as a quick and efficient way to uncover defects without following a predefined test plan.

- Regression Testing: During regression testing, testers may use Error Guessing to identify areas of the software that are more susceptible to regression defects due to recent changes or enhancements.

- Domain Expertise: Testers with domain expertise can leverage Error Guessing to anticipate potential errors or misuse scenarios based on their understanding of industry standards, user expectations, and common pitfalls.

- Risk-Based Testing: Error Guessing can be incorporated into risk-based testing approaches to prioritize testing efforts on high-risk areas of the software that are more likely to contain critical defects.

- Exploratory Testing Sessions: Teams can conduct dedicated exploratory testing sessions focused on Error Guessing, where testers collaborate to brainstorm potential errors and defects in the system based on their collective knowledge and experience.

- Iterative Development: In iterative development environments, Error Guessing can complement structured testing approaches by providing additional insights into areas of the software that may require further testing or validation.
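Error Guessing has no fixed algorithm, but the guesses themselves are often kept as a reusable checklist of suspicious inputs. This sketch assumes a hypothetical `parse_quantity` function that should accept whole numbers from 1 to 99; the checklist entries are typical guesses (empty input, values just outside the range, wrong types), not an exhaustive list.

```python
def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parses an order quantity from 1 to 99."""
    value = int(text.strip())  # raises ValueError for non-integer text
    if not 1 <= value <= 99:
        raise ValueError(f"quantity out of range: {value}")
    return value


# Inputs a tester might guess are likely to break the parser.
GUESSED_BAD_INPUTS = [
    "",                      # empty input
    "   ",                   # whitespace only
    "0",                     # just below the valid minimum
    "-1",                    # negative number
    "100",                   # just above the valid maximum
    "abc",                   # non-numeric text
    "3.5",                   # decimal instead of integer
    "99999999999999999999",  # very large number
]


def guess_errors(func, inputs):
    """Return the inputs that func accepted instead of rejecting with ValueError."""
    accepted = []
    for text in inputs:
        try:
            func(text)
        except ValueError:
            continue  # rejected as expected
        accepted.append(text)
    return accepted


problems = guess_errors(parse_quantity, GUESSED_BAD_INPUTS)
print("inputs wrongly accepted:", problems)
```

An empty result means every guessed input was rejected; any entry in it points to a likely defect worth a formal test case.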

6. Graph-based Testing

Graph-based testing is a systematic approach that utilizes graphs or models to represent the behavior, structure, or relationships within a software system. These graphs serve as a visual representation of the system, allowing testers to analyze and derive test scenarios from them. Graph-based testing techniques can be applied to various aspects of software testing, including functional, structural, and behavioral testing.

Implementation Steps:

- Model Creation: Develop graph models of the system using appropriate modeling techniques based on the testing objectives and system characteristics.

- Test Case Derivation: Derive test cases from the graph models by identifying paths, states, or data flows that need to be exercised to achieve desired coverage criteria.

- Test Execution: Execute the derived test cases against the system and observe its behavior to validate the correctness of the implementation.

- Analysis and Reporting: Analyze test results, identify defects, and report findings to stakeholders, providing insights into the quality and reliability of the system.

Example

Consider a vending machine that dispenses drinks based on user selections.

State Transition Diagram

The state transition diagram represents the different states of the vending machine and the transitions between them based on user inputs.

Test Cases:

- Test Case 1: Transition from Idle to Selection Made by selecting a drink.

  - Expected Path: Idle -> Selection Made

- Test Case 2: Transition from Selection Made to Dispensing by confirming the selection.

  - Expected Path: Selection Made -> Dispensing

- Test Case 3: Transition from Dispensing to Return by cancelling the transaction.

  - Expected Path: Dispensing -> Return
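The graph behind these test cases can be held as an adjacency map, which makes it easy to check that each expected path exists in the model and that the test cases together cover every edge. This is a sketch: only the three transitions named in the test cases are modelled, and any other edges (for example Return -> Idle) would be assumptions; `edges` and `is_valid_path` are hypothetical helper names.

```python
# Directed graph of the vending machine: state -> set of reachable states.
# Only the transitions from the test cases are modelled.
VENDING_GRAPH = {
    "Idle": {"Selection Made"},
    "Selection Made": {"Dispensing"},
    "Dispensing": {"Return"},
    "Return": set(),
}


def edges(graph):
    """List every edge; edge coverage needs at least one test per edge."""
    return [(src, dst) for src, targets in graph.items() for dst in sorted(targets)]


def is_valid_path(graph, path):
    """True if every consecutive pair of states in path is an edge of graph."""
    return all(dst in graph.get(src, set()) for src, dst in zip(path, path[1:]))


# Test cases 1 to 3 from the example, one per edge.
EXPECTED_PATHS = [
    ["Idle", "Selection Made"],
    ["Selection Made", "Dispensing"],
    ["Dispensing", "Return"],
]

for path in EXPECTED_PATHS:
    assert is_valid_path(VENDING_GRAPH, path), path

# The derived paths cover every edge in the model.
covered = {tuple(p) for p in EXPECTED_PATHS}
assert covered == set(edges(VENDING_GRAPH))

# A path that skips a state is not in the model.
assert not is_valid_path(VENDING_GRAPH, ["Idle", "Dispensing"])
print("graph-based tests pass")
```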

7. Comparison Testing

Comparison Testing is a technique used in software testing to compare the behavior, performance, or outputs of different versions of a software application or system. It involves testing two or more versions of the software to identify differences, inconsistencies, or improvements between them. Comparison Testing can be applied to various aspects of software development, including functionality, performance, usability, and security.

Example

Scenario:

A software company has developed a new version (Version 2.0) of its email client application and wants to compare it with the previous version (Version 1.0) to identify any differences or improvements.

Comparison Criteria:

- Functionality: Compare the core functionality of sending and receiving emails between Version 1.0 and Version 2.0.

- User Interface: Compare the user interface design and layout between the two versions.

- Performance: Compare the response time for common tasks such as opening emails and searching for messages.

- Compatibility: Ensure that both versions are compatible with popular email servers and operating systems.
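For the functionality criterion, a simple harness can run both versions on identical inputs and list every result that differs. The two search functions below are hypothetical stand-ins for one email-client feature in Version 1.0 and Version 2.0, invented only to give the harness something to compare.

```python
from typing import Callable, List, Tuple

SearchFn = Callable[[List[str], str], List[str]]


def search_v1(subjects: List[str], query: str) -> List[str]:
    """Version 1.0 (hypothetical): case-sensitive subject search."""
    return [s for s in subjects if query in s]


def search_v2(subjects: List[str], query: str) -> List[str]:
    """Version 2.0 (hypothetical): case-insensitive subject search."""
    return [s for s in subjects if query.lower() in s.lower()]


def compare_versions(old: SearchFn, new: SearchFn, inbox: List[str],
                     queries: List[str]) -> List[Tuple[str, List[str], List[str]]]:
    """Run both versions on the same inputs and collect every differing result."""
    differences = []
    for query in queries:
        old_result, new_result = old(inbox, query), new(inbox, query)
        if old_result != new_result:
            differences.append((query, old_result, new_result))
    return differences


INBOX = ["Meeting notes", "Invoice for March", "meeting reschedule"]
diffs = compare_versions(search_v1, search_v2, INBOX, ["Invoice", "meeting"])
for query, old_result, new_result in diffs:
    print(f"{query!r}: v1.0 -> {old_result}, v2.0 -> {new_result}")
```

A reported difference is not automatically a defect: here Version 2.0 finds "Meeting notes" for the query "meeting", which is probably an intended improvement. The tester still decides whether each difference is an improvement or a regression.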

8. Use Case Technique

The Use Case technique is a method widely used in software development and testing to specify, validate, and verify the functional requirements of a system from an end-user perspective. It involves defining interactions between users (actors) and the system to accomplish specific tasks or goals. Use cases are often depicted in a structured format called Use Case Diagrams and elaborated further in Use Case Descriptions.

Example: Perform Calculation

Actors:

- User: Initiates the calculation process by entering operands and selecting an operation.

Description:

- This use case describes the process of a user performing a calculation using the calculator application.

Preconditions:

- The calculator application is open and ready to receive input.

Postconditions:

- The calculator displays the calculation result on its screen.

Flow of Events:

- User enters operands: The user enters numerical values (operands) using the keypad.

- User selects operation: The user selects an arithmetic operation (addition, subtraction, multiplication, division) from the available options.

- System performs calculation: The system calculates the result based on the entered operands and selected operation.

- System displays result: The system displays the calculated result on the calculator screen.

Alternate Flows:

- Invalid Input: If the user enters invalid operands or selects an unsupported operation, the system displays an error message and prompts the user to retry.

- Division by Zero: If the user attempts to divide by zero, the system displays an error message and prompts the user to enter a valid divisor.
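Each flow of the use case maps naturally onto one test: the basic flow and each alternate flow. In this sketch, `calculate` is a hypothetical calculator core that returns the text shown on the screen, and its error messages are invented for illustration.

```python
import operator

# Hypothetical calculator core: the selectable operations.
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def calculate(left: str, op: str, right: str) -> str:
    """Return the text the calculator would display for one calculation."""
    try:
        a, b = float(left), float(right)
    except ValueError:
        return "Error: invalid input, please retry"
    if op not in OPERATIONS:
        return "Error: unsupported operation, please retry"
    if op == "/" and b == 0:
        return "Error: division by zero, enter a valid divisor"
    return str(OPERATIONS[op](a, b))


# One test per flow of the use case.
def test_main_flow() -> None:
    assert calculate("6", "*", "7") == "42.0"


def test_invalid_input() -> None:
    assert calculate("six", "*", "7").startswith("Error: invalid input")
    assert calculate("6", "%", "7").startswith("Error: unsupported operation")


def test_division_by_zero() -> None:
    assert calculate("1", "/", "0").startswith("Error: division by zero")


test_main_flow()
test_invalid_input()
test_division_by_zero()
print("use case flow tests pass")
```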

Conclusion

Black Box Testing evaluates software functionality without knowledge of its internal structure. Unlike White Box Testing, it focuses on inputs and outputs. Techniques include Equivalence Partitioning, Boundary Value Analysis, Decision Table Testing, State Transition Testing, Error Guessing, Graph-based Testing, Comparison Testing, and Use Case Technique. Equivalence Partitioning divides inputs into groups, while Boundary Value Analysis tests input boundaries. Decision Tables map conditions to actions, State Transition Testing examines transitions, and Error Guessing relies on tester intuition. Graph-based Testing uses models for test cases, Comparison Testing compares software versions, and Use Case Technique validates functional requirements from the user’s perspective.

Recommended Articles

We hope that this EDUCBA information on “Black Box Testing Techniques” was beneficial to you. You can view EDUCBA’s recommended articles for more information.