Updated April 11, 2023

What is Cache Memory?

Cache memory is a small, fast memory component that stores frequently used data. When the CPU needs a piece of information, it checks the cache first. RAM is fast too, but not as fast as cache memory. Computers are therefore designed so that commonly used data is retrieved from the cache first, and from RAM only when the cache does not hold it. This increases computational speed considerably.

Importance of Cache Memory

The description above gives a glimpse of how important cache memory is. Let us delve a bit deeper.

The development of memory has run parallel to the development of computers themselves. The faster the computers engineers wanted to build, the faster and more robust the memory had to become.

However, the price of being the fastest memory is limited size. Cache memory is faster than RAM but far smaller. Because of its sophistication, it is also more expensive per unit of storage.

Cache memory is also an example of volatile memory. So what is volatile memory?

Volatile memory is temporary by nature. It holds data only while the computer is powered on. The moment the power is switched off, the data is erased automatically. Non-volatile memory, such as ROM (Read Only Memory), keeps its contents even after the power has been switched off. ROM is also slower than cache memory.

This brings us to the types of cache memory.

Types of Cache Memory

There are two types:

- Primary

- Secondary

The primary cache is either integrated into the processor or sits closest to it. The secondary cache sits between the primary cache and the main memory; it is larger but slower than the primary cache. Besides being hardware-based, a cache can also be disk-based. The method remains the same: a portion of the disk is set aside to hold stored data for quick retrieval.

How Does the Cache Memory Work?

As noted above, there are two main types of cache memory. Modern computer systems, however, have three levels of cache: L1, L2, and L3. L1 is generally the smallest, typically ranging from 8 KB to 64 KB. Because it is closest to the CPU, it can be accessed much faster than L2 and L3, which have longer access times.
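The level-by-level search can be sketched in a few lines of Python. This is only an illustration: the cycle counts, the `LEVELS` table, and the `access_cost` helper are hypothetical values and names chosen for the example, not measurements of any real processor.

```python
# Hypothetical access costs in CPU cycles, chosen only for illustration.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
MAIN_MEMORY_COST = 200


def access_cost(address, contents):
    """Return (where_found, total_cycles) for a single access.

    `contents` maps each level name to the set of addresses it currently holds.
    Levels are searched in order, and each level searched adds its cost.
    """
    cycles = 0
    for name, cost in LEVELS:
        cycles += cost
        if address in contents[name]:
            return name, cycles
    # Not found in any cache level: fall back to main memory.
    return "RAM", cycles + MAIN_MEMORY_COST


contents = {"L1": {1}, "L2": {1, 2}, "L3": {1, 2, 3}}
for address in (1, 2, 3, 9):
    print(address, access_cost(address, contents))
```

Under these assumed costs, data found in L1 is by far the cheapest to reach, and every extra level that has to be searched adds to the total.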

Cache memory works in different configurations:

- Direct Mapping

- Fully Associative Mapping

- Set Associative Mapping

Direct Mapping: Each block of main memory maps to exactly one specific location in the cache.

Fully Associative Mapping: Unlike direct mapping, it imposes no fixed location. A block can be placed in any location in the cache.

Set Associative Mapping: It is a middle ground between the first two. The cache is divided into sets, each block maps to exactly one set, and the block can be placed in any location within that set.
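The three configurations can be viewed as one scheme with a different number of locations per set. The sketch below assumes a simple modulo placement rule and assumes that set number i occupies consecutive cache lines; the function name `candidate_lines` and its parameters are hypothetical and used only for illustration.

```python
def candidate_lines(block_number, num_lines, ways):
    """Return the cache lines in which a main-memory block may be placed.

    ways == 1          : direct mapping (exactly one candidate line)
    ways == num_lines  : fully associative mapping (every line is a candidate)
    anything between   : set associative mapping
    Assumes num_lines is a multiple of ways.
    """
    num_sets = num_lines // ways
    set_index = block_number % num_sets   # modulo placement (an assumption)
    first_line = set_index * ways         # sets laid out as consecutive lines
    return list(range(first_line, first_line + ways))


# Block 13 in a cache with 8 lines:
print(candidate_lines(13, 8, 1))  # direct mapping
print(candidate_lines(13, 8, 2))  # 2-way set associative
print(candidate_lines(13, 8, 8))  # fully associative
```

With direct mapping, block 13 has a single possible line. With two lines per set it has two, and with full associativity it can go anywhere in the cache.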

Architecture

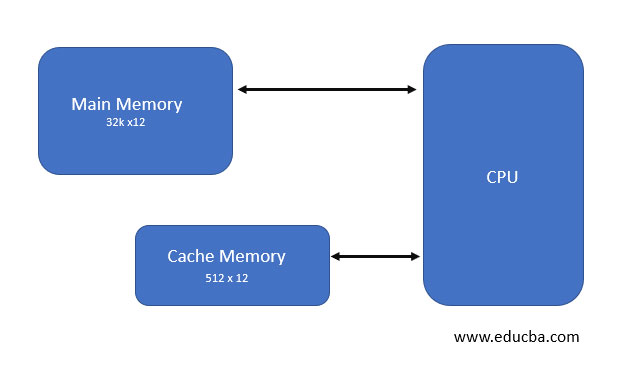

A cache memory is broken into three main parts: the directory store, the data section, and the status information.

The cache's role is to hold data that has been read from the main memory. That data is stored in the data section.

However, to retrieve that data, the cache needs to locate the cache line in which it is stored. Every cache line is identified by the main-memory address it came from, and that address is kept in the directory store. The directory entry for a line is known as the cache tag.

The size of the cache is defined as the amount of data it can store from the main memory. This size, however, does not include the memory needed to hold the cache tags or the status bits.
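To see how much extra storage the tags and status bits can take, here is a rough calculation. It assumes a direct-mapped cache, power-of-two sizes, 32-bit addresses, and two status bits per line (for example, valid and dirty). All of these figures, and the `cache_overhead` helper itself, are assumptions made for the example.

```python
def cache_overhead(cache_bytes, line_bytes, address_bits=32, status_bits=2):
    """Estimate tag and status storage for a direct-mapped cache.

    Assumes cache_bytes and line_bytes are powers of two.
    """
    num_lines = cache_bytes // line_bytes
    offset_bits = (line_bytes - 1).bit_length()  # bits selecting a byte in a line
    index_bits = (num_lines - 1).bit_length()    # bits selecting the cache line
    tag_bits = address_bits - index_bits - offset_bits
    overhead_bits = num_lines * (tag_bits + status_bits)
    return {
        "lines": num_lines,
        "tag_bits": tag_bits,
        "overhead_bytes": overhead_bits // 8,
    }


# A 32 KB cache with 64-byte lines:
print(cache_overhead(32 * 1024, 64))
```

For this assumed configuration the cache has 512 lines with a 17-bit tag each, so the tags and status bits need 1216 bytes on top of the 32 KB of data.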

This is a basic block diagram of how data is located in the cache.

To summarize the operation of a cache memory:

Step 1: Whenever the CPU needs to access data, it first looks for it in the cache. If the data is found there, it is read directly from the cache. This makes the entire operation quick.

Step 2: If the data is not found in the cache, the main memory has to be accessed.

Step 3: Once the data has been fetched from the main memory, it is copied into the cache for future reference.

Step 4: If the requested data is found in the cache, it is called a hit.

Step 5: If the requested data is not found in the cache and the main memory has to be accessed, it is called a miss.

Step 6: The performance of the cache is measured by the hit ratio, which is the number of hits divided by the total number of accesses (hits plus misses).
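The lookup steps above can be sketched as a small simulation. This is a minimal model, assuming a direct-mapped cache that holds one value per line; the class name `DirectMappedCache` and its methods are hypothetical and exist only for this example.

```python
class DirectMappedCache:
    """Toy direct-mapped cache holding one value per line (illustrative only)."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.tags = [None] * num_lines   # directory store: one tag per line
        self.data = [None] * num_lines   # data section
        self.hits = 0
        self.misses = 0

    def read(self, address, main_memory):
        index = address % self.num_lines   # which cache line to check
        tag = address // self.num_lines    # identifies the address in that line
        if self.tags[index] == tag:
            self.hits += 1                 # found in the cache: a hit
            return self.data[index]
        self.misses += 1                   # not found: a miss
        value = main_memory[address]       # access the main memory instead
        self.tags[index] = tag             # keep a copy for future reference
        self.data[index] = value
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


main_memory = list(range(100, 132))        # value at address a is 100 + a
cache = DirectMappedCache(num_lines=4)
for address in [0, 1, 0, 1, 4, 0]:
    cache.read(address, main_memory)
print(cache.hits, cache.misses, cache.hit_ratio())
```

With this access pattern on a four-line cache, there are two hits and four misses: address 4 shares line 0 with address 0 and evicts it, so the final read of address 0 misses again.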

Conclusion

Given the functions described above, it is clear that cache memory is one of the most important components in a computer. One of the reasons CPUs have become faster is the advancement of cache memory. Over the years a lot of research has gone into its efficiency, and the results show. Cache memory has become a mainstay of every computing system, and it will continue to improve as technology progresses.
