Updated March 23, 2023

Introduction to Cassandra Cluster

Apache Cassandra is designed to meet a scaling challenge that traditional database management systems aren’t capable of handling. These days there is a continuous and excessive amount of data to be managed. Large organizations cannot store these huge amounts of data on a single machine. Here is when databases like Cassandra with a distributed architecture become important. Large organizations store huge amounts of data on multiple nodes. These nodes communicate with each other which serves the purpose behind the Cassandra cluster being established.

The cluster is a collection of nodes that represents a single system. These clusters form the database in Cassandra to effectively achieve maintaining a high level of performance. As the size of your cluster grows, the number of clients increases, and more keyspaces and tables are added, the demands on your cluster will begin to pull in different directions. Taking frequent baselines to measure the performance of your cluster against its goals will become increasingly important.

Prerequisites for Cassandra Cluster

There are the following requirements for setting up a cluster.

- There should be multiple machines (Nodes)

- Nodes must be connected to each other on the Local Area Network (LAN)

- Linux must be installed on each node

- There should be a Cassandra Enterprise edition

- JDK must be installed on each machine

Rebuilding Nodes in a Cluster

Cassandra helps in automatically rebuilding a failed node using replicated data. When each node owns only a single token, that node’s entire data set is replicated to a number of nodes equal to the replication factor minus one. For example, with a replication factor of three, all the data on a given node will be replicated to two other nodes

While it might be straightforward to initially build your Cassandra cluster with machines that are all identical, at some point older machines will need to be replaced with newer ones. vnodes ease this effort by allowing you to specify the number of tokens, instead of having to determine specific ranges. It is much easier to choose a proportionally larger number for newer, more powerful nodes than it is to determine proper token ranges.

Adding to the Cassandra Cluster

Before creating a cluster with multiple nodes, we need to confirm that there is a working cluster with a single node. Then go ahead by incrementing the clusters or growing the clusters. Let’s start with the manual case, as the vnodes process is a subset of this.

The procedure to increment an additional node to a cluster without vnodes enabled may not be so direct most of the time. The primary step is to figure out the new total cluster size, then compute tokens for all nodes.

The primary difference while using vnodes is that there is no need to generate or set tokens as this happens automatically, and there is no reason to run nodetool move. Instead of setting the initial_token property, you should set the num_tokens property in accordance with the desired data distribution.

Over time, your cluster might naturally become heterogeneous in terms of node size and capacity. In the past, when using manually assigned tokens, this presented a challenge as it was difficult to determine the proper tokens that would result in a balanced cluster.

Cassandra achieves both availability and scalability using a data structure that allows any node in the system to easily determine the location of a particular key in the cluster.

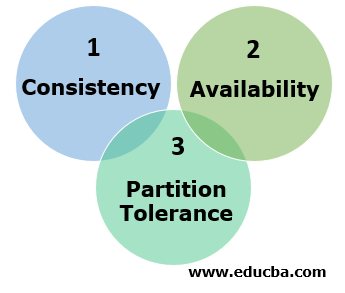

The CAP Theorem

The CAP acronym refers to three desirable properties in a replicated system:

- Consistency: This means that the data should appear identical across all nodes in the cluster

- Availability: This indicates that the system should always stay available to receive requests

- Partition Tolerance: This indicates that the system remains to function even in the event of a partial failure

Things to Keep in Mind

Since Cassandra is designed to be deployed in large-scale clusters on commodity hardware, an important consideration is whether to use fewer large nodes or a greater number of smaller nodes. Regardless of whether you use physical or virtual machines, there are a few key principles to keep in mind:

More RAM equals faster reads, so the more you have, the better they will perform. More processors equal faster writes.

Obviously, you will need more disk space if you intend to store more data. What might be less obvious is the dependence on your compaction strategy. In the worst case, SizeTieredCompactionStrategy can use up to 50 percent more disk space than the data itself. As an upper bound, try to limit the amount of data on each node to 1-2 TB. Cassandra is designed to use local storage hence it does not use shared storage.

Conclusion

One of the most primary aspects of a distributed data store is the manner in which it handles data and it’s replication across the cluster. If each partition is stored on a single node, the system would possess multiple points of failure, and a failure of any node could result in catastrophic data loss. These systems must be able to replicate data across different multiple nodes, making the failure of any nodes or data loss less likely.

Cassandra has a well-designed replication system, offering rack and data center awareness. Therefore, it t can be configured to place replicas to maintain availability even during otherwise catastrophic events such as switch failures, network partitions, or data center outages. Cassandra also includes a planned strategy that maintains the replication factor during node failures.

Recommended Articles

This is a guide to a Cassandra Cluster. Here we discuss the introduction and prerequisites for the Cassandra cluster with rebuilding nodes and CAP theorem. You may also look at the following articles to learn more –