Overview

Comprehensive Hadoop Course

What is Big Data

Big data is a collection of datasets that are too large or complex to be processed using traditional techniques. It relies on a range of tools and techniques to collect and process data, and it covers all types of data: structured, semi-structured and unstructured. Big data comes from many sources, such as

- Black box data

- Social media data

- Stock exchange data

- Power Grid Data

- Transport Data

- Search Engine Data

Benefits of Big Data

Big data has become one of the crucial technologies in today’s world. Its benefits are listed below

- Companies can use big data to measure the effectiveness of their marketing campaigns, promotions and other advertising media

- Big data helps companies to plan their production

- Using the information provided through big data, companies can deliver better and quicker service to their customers

- Big data supports better decision making, which increases operational efficiency and reduces business risk

- Big data tools can analyze huge volumes of data in near real time, which helps organizations to detect security and privacy threats early

Challenges faced by Big Data

The major challenges of big data are as follows

- Curation

- Storage

- Searching

- Transfer

- Analysis

- Presentation

What is Hadoop

Hadoop is an open source software framework for storing and processing data of any type. It runs applications on clusters of commodity hardware, which gives it huge processing power and the ability to handle a very large number of concurrent tasks. Open source here means that it is free to download and use, although commercial distributions of Hadoop are also available in the market. Hadoop has four basic components: Hadoop Common, Hadoop Distributed File System (HDFS), MapReduce and Yet Another Resource Negotiator (YARN).

Benefits of Hadoop

Hadoop is used by many organizations because of its ability to store and process huge amounts of any type of data. Its other benefits include

- Computing Power

- Flexibility

- Fault Tolerance

- Low Cost

- Scalability

Uses of Hadoop

Many organizations use Hadoop today for the following purposes

- Low cost storage and active data archive

- Staging area for a data warehouse and analytics store

- Data lake

- Sandbox for discovery and analysis

- Recommendation Systems

Disadvantages of Using Hadoop

The challenges faced by Hadoop are listed below

- MapReduce programming does not suit all problems. In particular, the multiple files created between MapReduce phases make it inefficient for complex analytical computing

- Hadoop does not have easy-to-use tools for data management, data cleansing and metadata

- Data security is another major challenge for Hadoop

Course Objectives

By the end of this course you will be able to

- Understand the concepts of Hadoop and Big Data

- Perform data analytics using Hadoop

- Master the concepts of the Hadoop framework

- Gain experience with different configurations of a Hadoop cluster

- Work on real-time projects using Hadoop

Prerequisites for taking this course

No special skills are needed to take this course. Basic knowledge of Java and SQL is an added advantage. Educba also offers a comprehensive Java course to enhance your Java skills.

Target Audience for this course

This course will be of great help to the following professionals

- Software developers and Architects

- Analytics Professionals

- Data Management Professionals

- Business Intelligence Professionals

- Testing Professionals

- Data Scientists

- Anyone who is interested in pursuing a career in Big data analytics

Course Description

Section 1: Getting Started with Big Data Hadoop

Introduction to Big Data Hadoop

Due to advances in technology and communication, the amount of data generated grows rapidly every year. The growth rate itself keeps increasing, so traditional computing techniques are being replaced by Big data analytics. Big data is a collection of large datasets that are difficult to process using traditional techniques.

Hadoop is an open source framework written in Java. It distributes the storage and processing of large data sets across clusters of computers using simple programming models. This chapter introduces Big data and Hadoop and briefly covers the features, advantages and disadvantages of Hadoop.

Scenario of Big Data Hadoop

Hadoop was created by Doug Cutting, who is also the creator of Apache Lucene. Hadoop was named after a toy elephant that belonged to Doug Cutting’s son. Hadoop was first released in the year 2006. This section covers the history of Hadoop and its inventors.

Write Anatomy

There are two types of nodes in a Hadoop cluster: the Namenode and the Datanode. The Namenode runs on commodity hardware, with the Linux operating system and the Namenode software, and manages the file system namespace. It handles operations such as renaming, opening and closing files and directories. The Datanodes perform read and write operations on the file system in response to client requests. They also carry out block creation, deletion and replication.
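The division of work between the two node types can be illustrated with a toy model in plain Python. This is only a sketch and does not use any Hadoop API: the class names, the tiny `BLOCK_SIZE`, the `REPLICATION` value and the round-robin placement are all assumptions made for the demo, and real HDFS uses far larger blocks and its own placement policy.

```python
# Toy model of the Namenode/Datanode split (not the HDFS API).
BLOCK_SIZE = 4      # bytes per block, tiny on purpose (assumption)
REPLICATION = 2     # copies kept of each block (assumption)


class DataNode:
    """Stores raw blocks and serves read/write requests for them."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}                      # block id -> bytes

    def write_block(self, block_id, data):
        self.blocks[block_id] = data

    def read_block(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Holds metadata only: which blocks form a file and where they live."""

    def __init__(self, datanode_names):
        self.datanode_names = list(datanode_names)
        self.namespace = {}                   # path -> [(block id, [node names])]
        self._next_block_id = 0

    def allocate_block(self, path):
        block_id = self._next_block_id
        self._next_block_id += 1
        count = len(self.datanode_names)
        # Round-robin placement, a stand-in for the real placement policy.
        targets = [self.datanode_names[(block_id + i) % count]
                   for i in range(REPLICATION)]
        self.namespace.setdefault(path, []).append((block_id, targets))
        return block_id, targets

    def block_locations(self, path):
        return self.namespace[path]

    def rename(self, old_path, new_path):
        # Pure metadata change: no block is moved or copied.
        self.namespace[new_path] = self.namespace.pop(old_path)


def write_file(namenode, datanodes, path, data):
    """Client side: ask the Namenode where to write, then send blocks to Datanodes."""
    for start in range(0, len(data), BLOCK_SIZE):
        block_id, targets = namenode.allocate_block(path)
        for name in targets:
            datanodes[name].write_block(block_id, data[start:start + BLOCK_SIZE])


def read_file(namenode, datanodes, path):
    """Client side: look up block locations, then read each block from one replica."""
    return b"".join(datanodes[targets[0]].read_block(block_id)
                    for block_id, targets in namenode.block_locations(path))
```

In this sketch the file contents never pass through the `NameNode` object, which mirrors the idea that the Namenode manages the namespace while the Datanodes handle the data itself.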

Continuation Write Anatomy

The write operation in HDFS is more complex than the read operation. Writing data in Hadoop involves seven steps, which are explained in detail in this chapter.

Read Anatomy

The read operation in HDFS is covered in this chapter with a step-by-step procedure and a pictorial representation.

Section 2: Hadoop Word Count

Word Count in Hadoop

Word count is a Hadoop application that counts the number of occurrences of each word in a given set of input. It works with a local standalone, pseudo-distributed or fully distributed Hadoop installation. A sample word count program along with its output in Hadoop is given in this chapter.
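To make the expected result concrete, here is a minimal sketch of what word count computes, written in plain Python rather than as a Hadoop job. The function name `word_count` and the sample lines are assumptions for illustration, and tokens are split on whitespace and counted case-sensitively.

```python
from collections import Counter


def word_count(lines):
    """Count whitespace-separated tokens across all lines, case-sensitively."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


sample = ["Hello Hadoop", "Goodbye Hadoop"]
# Print one "word<TAB>count" line per word, sorted by word.
for word, count in sorted(word_count(sample).items()):
    print(f"{word}\t{count}")
```

For the two sample lines this prints Goodbye 1, Hadoop 2 and Hello 1, one pair per line with a tab between word and count.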

Running Hadoop Application

Hadoop is a complex system with a lot of components. It is written in Java and runs applications on large clusters, so Java knowledge is very helpful when troubleshooting problems during installation. Hadoop is highly fault tolerant and can be installed on low-cost hardware. This tutorial will help you to learn more about installing and running Hadoop so that you can experiment with it easily. The topics covered in this chapter are

- Prerequisites – Supported platforms, Required software, Installing software

- Preparing to start the Hadoop Cluster

- Hadoop Cluster Set Up

- Running Hadoop on Ubuntu Linux (Single Node Cluster)

- Stand Alone Operation

- Pseudo Distributed Operation

- YARN on a single node

- Fully Distributed Operation

- Configuring Hadoop in Non Secure Mode

Working on Sample Program

Hadoop is used for processing large data sets on low-cost commodity machines. Hadoop is built on two main parts: the Hadoop Distributed File System (HDFS) and the MapReduce framework. The MapReduce framework has two tasks, Map and Reduce. The MapReduce algorithm, including the Map stage and the Reduce stage, is explained in detail in this chapter, together with the writing and running of a simple Hadoop MapReduce program.
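The Map and Reduce stages can be sketched in a few lines of plain Python. This is an in-memory illustration of the programming model, not the Hadoop API: `run_mapreduce`, the maximum-temperature mapper and reducer, and the sample records are all hypothetical names and data chosen for the demo.

```python
from collections import defaultdict


def run_mapreduce(records, mapper, reducer):
    """Run a map stage, group values by key, then run a reduce stage."""
    # Map stage: each input record yields zero or more (key, value) pairs.
    intermediate = []
    for record in records:
        intermediate.extend(mapper(record))

    # Grouping step between the stages: collect all values that share a key.
    groups = defaultdict(list)
    for key, value in intermediate:
        groups[key].append(value)

    # Reduce stage: each key and its values yield zero or more output pairs.
    output = []
    for key in sorted(groups):
        output.extend(reducer(key, groups[key]))
    return output


def max_temp_mapper(record):
    # Input record format "year,temperature" is an assumption for this demo.
    year, temperature = record.split(",")
    yield year, int(temperature)


def max_temp_reducer(year, temperatures):
    yield year, max(temperatures)


readings = ["1949,111", "1950,0", "1949,78", "1950,22"]
result = run_mapreduce(readings, max_temp_mapper, max_temp_reducer)
# result == [("1949", 111), ("1950", 22)]
```

In a real cluster the map and reduce calls run on different machines and the grouping happens over the network, but the contract of the two functions is the same.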

Creating Method Map

MapReduce is a processing technique and a programming model based on Java. The MapReduce framework makes it easy to scale data processing over multiple computing nodes. The Map component of this model takes a set of data and converts it into another set of data made up of key-value pairs. The data processing components in the MapReduce framework are called mappers and reducers. The mapper’s job is to process the input data, and its implementation is provided through the Map method. The mapper input flow and the tasks of the Input Format class are explained in this lesson.
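The mapper input flow can be pictured with a small plain-Python sketch. It imitates how a text input format hands each line to the mapper as a (byte offset, line) record; the function names `text_records` and `word_mapper` are assumptions for illustration and are not part of Hadoop.

```python
def text_records(data: bytes):
    """Yield (byte offset, line) records, one per line of the input."""
    offset = 0
    for raw in data.splitlines(keepends=True):
        # The key is the offset of the line start; the value is the line text.
        yield offset, raw.rstrip(b"\r\n").decode("utf-8")
        offset += len(raw)


def word_mapper(offset, line):
    """Map one record to (word, 1) pairs; the offset key is not used here."""
    for word in line.split():
        yield word, 1


data = b"to be\nor not\n"
pairs = [pair
         for offset, line in text_records(data)
         for pair in word_mapper(offset, line)]
# pairs == [("to", 1), ("be", 1), ("or", 1), ("not", 1)]
```

The mapper is called once per record, so it never needs to know how the input was split into lines.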

Iterable Values

The reducer of a Hadoop MapReduce program receives each key together with an iterable of all the values that share that key. Iterating over these values in the reduce phase is explained in detail in this chapter with a sample program.
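The shape of the reducer input can be sketched in plain Python with `itertools.groupby`. This is an illustration of the idea, not Hadoop code: `reduce_phase` and `sum_reducer` are hypothetical names, and the values are deliberately passed as a one-pass generator to show that the reducer only needs a single loop over them.

```python
from itertools import groupby
from operator import itemgetter


def sum_reducer(key, values):
    """Add up the values for one key, reading the iterable a single time."""
    total = 0
    for value in values:
        total += value
    return key, total


def reduce_phase(pairs, reducer):
    """Sort pairs by key, then call the reducer once per distinct key."""
    ordered = sorted(pairs, key=itemgetter(0))
    results = []
    for key, group in groupby(ordered, key=itemgetter(0)):
        # Hand the reducer a lazy, one-pass iterable of values for this key.
        values = (value for _, value in group)
        results.append(reducer(key, values))
    return results


mapped = [("be", 1), ("to", 1), ("be", 1)]
totals = reduce_phase(mapped, sum_reducer)
# totals == [("be", 2), ("to", 1)]
```

A reducer that needs the values twice has to copy them into a list first, because the iterable is consumed as it is read.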

Output Path

Hadoop provides an output format to match each of its input formats. This chapter gives an overview of the different output formats and their usage, and briefly explains the two requirements that an output format must meet in Hadoop. The built-in output formats covered in this lesson are

- File Output format

- Text Output format

- Sequence File Output format

- Sequence File As Binary Output format

- Map File Output format

- Multiple Outputs

The different output paths are also discussed here.
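As a rough picture of a text output format and its output path, the sketch below writes key-value pairs as tab-separated lines into a `part-r-00000` style file and refuses to reuse an existing output directory. It is plain Python, not the Hadoop API: the function name `write_text_output` and its parameters are assumptions for illustration.

```python
import os


def write_text_output(output_dir, pairs, partition=0, separator="\t"):
    """Write (key, value) pairs as 'key<sep>value' lines into a new directory."""
    # Fail early if the output path exists, so earlier results are not overwritten.
    if os.path.exists(output_dir):
        raise FileExistsError(f"output directory already exists: {output_dir}")
    os.makedirs(output_dir)

    # One file per reduce partition, numbered with five digits.
    path = os.path.join(output_dir, f"part-r-{partition:05d}")
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        for key, value in pairs:
            handle.write(f"{key}{separator}{value}\n")
    return path
```

Calling `write_text_output("out", [("be", 2), ("to", 1)])` creates `out/part-r-00000` with one line per pair. A second call with the same directory raises `FileExistsError`.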

FAQs: General Questions

- What is the need for Hadoop training?

There are many reasons to go for Hadoop training. A few of them are listed below

- Hadoop is a successful new technology, but there are only a few skilled professionals in this field

- A large number of people are looking for career enhancement and are willing to move their careers to Hadoop

- There is an increased demand for Hadoop professionals because of its advanced features

- There are increased opportunities for people who are skilled in Hadoop. Companies prefer to recruit Hadoop experts over other candidates.

- Why should I take this course?

Through this online course you will learn how to use Hadoop to solve problems. You will gain an in-depth understanding of the concepts of Hadoop Distributed File System (HDFS) and MapReduce. At the end of this course you will be able to write your own MapReduce programs and solve problems on your own.

- What will I get from this course?

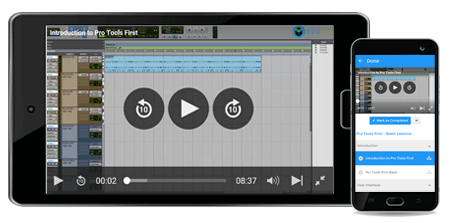

This online course has high-quality video content with animations and pictorial representations to make learning interesting and easy. The code for all the programs is attached to the course, and enrolled students can run the programs in the MapReduce framework. A few PDF notes are also attached, which you can refer to while working with Hadoop. Finally, there is a question session at the end of the course through which you can find out how much you have learned about Hadoop and whether you are ready to be certified.