Definition: Data Cleaning in Data Mining

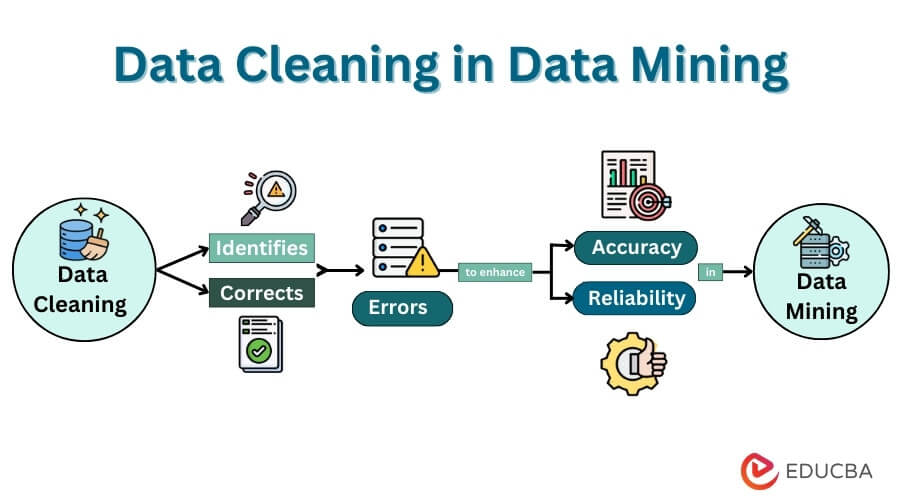

Data cleaning is an essential step in the data mining workflow: it refines raw datasets so that they can be analyzed reliably. This article explains why data cleaning matters and what problems unclean data causes, then covers the key concepts, methods, and tools used to improve data quality. It also looks at common challenges, best practices, and future trends to show how data cleaning improves the accuracy and reliability of insights mined from large datasets.

Table of Contents

- Definition

- What is Data Cleaning?

- Why is data cleaning important

- How to clean data in data mining

- Strategies for Handling Missing Data in Datasets

- Automated Data Cleaning Tools

- Data cleansing vs. data cleaning vs. data scrubbing

- Challenges of Data Cleaning in Data Mining

- Best Practices in Data Cleaning

- Future Trends

What is Data Cleaning?

Data cleaning is the process of identifying and fixing errors, inconsistencies, and inaccuracies in datasets. It is often referred to as data cleansing or data scrubbing. It involves handling missing or duplicate data, addressing outliers, resolving inconsistencies, and standardizing formats. Using advanced data cleansing software, organizations can streamline these tasks efficiently, improving the quality of the data to ensure it is accurate, reliable, and suitable for analysis. In the context of data mining, where large volumes of data are processed to extract meaningful patterns and insights, effective data cleaning is crucial to enhance the validity and reliability of the results obtained from the analysis.

Why Is Data Cleaning Important?

Data cleaning is critical in data science and analytics for various reasons, all of which add to the accuracy, dependability, and efficacy of data-driven decision-making. Here is an explanation of why data cleansing is essential:

- Improves Data Accuracy: Data cleaning helps identify and rectify errors, inconsistencies, and inaccuracies in datasets, ensuring that the data accurately reflects the real-world entities and events it represents.

- Enhances Data Quality: By addressing issues like missing values, duplicates, and outliers, data cleaning improves the overall quality of the dataset, providing a solid foundation for meaningful analysis and interpretation.

- Ensures Reliable Insights: Clean data leads to more reliable and trustworthy insights. Decision-makers can have confidence in the analysis results because the data is free from distortions and inaccuracies.

- Supports Effective Decision-Making: High-quality data is essential for making informed and effective decisions. Data cleaning contributes to the reliability of the information used in decision-making processes across various industries.

- Increases Credibility: Clean data enhances the credibility of analyses and reports. Stakeholders, clients, and other interested parties are more likely to trust the results and recommendations when based on accurate and well-maintained data.

- Facilitates Data Integration: In scenarios involving multiple data sources, data cleaning ensures compatibility and consistency across datasets, enabling seamless integration for more comprehensive analyses.

- Saves Time and Resources: Cleaning the data at an early stage prevents the need for rework and corrections later in the analysis process. This saves valuable time and resources by avoiding the repetition of analyses due to data issues.

- Supports Regulatory Compliance: In industries where regulatory compliance is essential (such as finance and healthcare), data cleaning is crucial to meet data quality standards and comply with legal and ethical requirements.

- Reduces Risk of Biased Insights: Inaccuracies or biases in the data can lead to flawed analyses and biased insights. Data cleaning minimizes these risks, helping to ensure that the insights derived are as unbiased and objective as possible.

- Facilitates Machine Learning Model Training: Clean, well-prepared data is a prerequisite for training accurate machine learning models. Data cleaning contributes to the success of predictive modeling by providing a reliable input dataset.

How to Clean Data in Data Mining?

Cleaning data in data mining involves identifying and rectifying errors, inconsistencies, and inaccuracies in a dataset. Here is a general guide on how to clean data in the context of data mining:

1. Identify and Handle Missing Data:

- Analyze how much of the dataset is missing.

- Select suitable approaches to deal with missing data, such as imputation or removal of affected records.

2. Handle Duplicate Data:

- Find and remove duplicate records to avoid double counting and maintain data integrity.

- Use unique identifiers or a combination of attributes to detect duplicates.

3. Address Outliers:

- Identify outliers using statistical methods or graphical tools such as box plots and scatter plots.

- Based on domain knowledge, decide whether to correct, remove, or analyze outliers separately.

4. Standardize Data Formats:

- Ensure consistency in data formats, such as date formats, units of measurement, and categorical variables.

- Standardize text data by converting it to a common case (lowercase or uppercase) and removing unnecessary spaces.

5. Resolve Inconsistencies:

- Identify and address inconsistencies in data, such as conflicting information within the dataset.

- Use domain knowledge or external sources to resolve conflicting data.

6. Perform Exploratory Data Analysis (EDA):

- Visualize the data using plots and charts to identify patterns, trends, or anomalies.

- Use statistical measures to gain insights into the distribution of data.

7. Impute Missing Values:

- Choose appropriate imputation techniques (mean, median, mode, or advanced methods like predictive modeling) for handling missing values.

- Be mindful of the impact of imputation on data integrity and analysis results.

8. Utilize Automated Data Cleaning Tools:

- Explore and use specialized tools that automate certain data cleaning aspects, helping streamline the process.

- Ensure compatibility and integration with your data mining platform.

9. Establish Data Cleaning Protocols:

- Develop and document standard procedures for data cleaning to ensure consistency and reproducibility.

- Create a log to track changes made during the data cleaning process.

10. Continuous Monitoring and Maintenance:

- Regularly monitor data quality and update cleaning processes as needed.

- Integrate data cleaning into routine maintenance to ensure ongoing data quality.
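Several of the steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a definitive implementation: the records, the field names (`id`, `name`, `joined`, `amount`), the two accepted date formats (including the day-first reading of `05/02/2023`), and the 1.5 × IQR outlier rule are all assumptions chosen for the example.

```python
from datetime import datetime
from statistics import median, quantiles

# Hypothetical raw records; every field name and value is invented for illustration.
raw = [
    {"id": 1, "name": "  Alice ", "joined": "2023-01-05", "amount": 120.0},
    {"id": 2, "name": "BOB", "joined": "05/02/2023", "amount": None},
    {"id": 2, "name": "bob", "joined": "2023-02-05", "amount": None},
    {"id": 3, "name": "Carol", "joined": "2023-03-10", "amount": 130.0},
    {"id": 4, "name": "dave", "joined": "2023-03-11", "amount": 125.0},
    {"id": 5, "name": "Eve", "joined": "2023-04-01", "amount": 5000.0},
    {"id": 6, "name": "Frank", "joined": "2023-04-02", "amount": 118.0},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats (day-first for slashes)


def parse_date(text):
    """Return an ISO 8601 date string, trying each assumed format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unparseable dates are left for manual review


def standardize(record):
    """Standardize formats: trim and lowercase text, normalize dates."""
    cleaned = dict(record)
    cleaned["name"] = record["name"].strip().lower()
    cleaned["joined"] = parse_date(record["joined"])
    return cleaned


def drop_duplicates(records, key="id"):
    """Handle duplicates: keep the first record seen for each identifier."""
    seen, unique = set(), []
    for rec in records:
        if rec[key] not in seen:
            seen.add(rec[key])
            unique.append(rec)
    return unique


def missing_share(records, field):
    """Measure missing data: fraction of records where the field is None."""
    return sum(r[field] is None for r in records) / len(records)


def impute_median(records, field):
    """Impute missing values with the median of the observed values."""
    fill = median(r[field] for r in records if r[field] is not None)
    return [{**r, field: fill if r[field] is None else r[field]} for r in records]


def flag_outliers(records, field, k=1.5):
    """Flag (rather than delete) values outside the k * IQR fences."""
    q1, _, q3 = quantiles([r[field] for r in records], n=4, method="inclusive")
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [{**r, "outlier": not (low <= r[field] <= high)} for r in records]


records = drop_duplicates([standardize(r) for r in raw])
share = missing_share(records, "amount")
records = flag_outliers(impute_median(records, "amount"), "amount")

print(f"missing share before imputation: {share:.2f}")
for rec in records:
    print(rec)
```

Outliers are flagged instead of removed so that the decision to correct, drop, or analyze them separately can still be made with domain knowledge.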

Strategies for Handling Missing Data in Datasets

- Ignoring Tuples: Discarding a tuple is generally reasonable only when it contains several attributes with missing values. Otherwise, this approach throws away valuable information and is not well suited to comprehensive data cleaning.

- Filling in Missing Values: Filling in missing values is common, but entering them manually is time-consuming and impractical for large datasets. Alternatives include using the attribute mean or the most likely value. No single fill-in method is effective in every case.

- Binning Method: The binning method sorts the data and divides it into equal-sized bins. It is relatively straightforward: each value is smoothed using the nearby values in its bin, for example by replacing it with the bin mean. After binning, missing values can be handled within each bin.

- Regression: Regression smooths data by fitting it to a function. Simple linear regression uses a single independent variable, while multiple regression uses several. Both can be used to predict and fill in missing values.

- Clustering: Clustering groups similar values together. Values that fall outside every cluster can be treated as outliers, and missing values can be estimated from the other members of the same cluster. The method also helps reveal patterns within the dataset.
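Three of the strategies above (filling with the attribute mean, smoothing by bin means, and filling by regression) can be sketched as follows. The function names and sample values are hypothetical, and the regression variant assumes a single independent variable with a roughly linear relationship.

```python
from statistics import mean


def fill_with_mean(values):
    """Replace None with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def smooth_by_bin_means(values, bin_size):
    """Sort the values, split them into bins of bin_size, and replace each value by its bin mean."""
    ordered = sorted(values)
    smoothed = []
    for start in range(0, len(ordered), bin_size):
        bin_values = ordered[start:start + bin_size]
        smoothed.extend([mean(bin_values)] * len(bin_values))
    return smoothed


def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (
        sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
        / sum((x - x_bar) ** 2 for x in xs)
    )
    return slope, y_bar - slope * x_bar


def fill_by_regression(xs, ys):
    """Fit a line on the complete pairs, then predict y wherever it is None."""
    pairs = [(x, y) for x, y in zip(xs, ys) if y is not None]
    slope, intercept = fit_line([p[0] for p in pairs], [p[1] for p in pairs])
    return [slope * x + intercept if y is None else y for x, y in zip(xs, ys)]


# Hypothetical sample data for illustration.
filled = fill_with_mean([10, None, 20, 30])
smoothed = smooth_by_bin_means([4, 8, 15, 21, 21, 24, 25, 28, 34], bin_size=3)
predicted = fill_by_regression([1, 2, 3, 4, 5], [2.0, 4.0, None, 8.0, 10.0])

print(filled)
print(smoothed)
print(predicted)
```

Mean filling is the simplest option but shrinks the variance of the attribute; regression keeps the relationship with another attribute at the cost of assuming that the relationship holds for the missing rows.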

Automated Data Cleaning Tools

Several automated data cleaning tools are available, each with features that streamline parts of the process. Some noteworthy tools are:

1. OpenRefine:

- Features: Faceted browsing, data transformation, clustering for duplicate detection.

- Use Case: Cleaning and transforming messy data quickly.

2. Trifacta Wrangler:

- Features: Intelligent suggestions, visual profiling, automated transformations.

- Use Case: Enhancing efficiency in data cleaning through a user-friendly interface.

3. DataRobot:

- Features: Automated machine learning, data preprocessing, outlier detection.

- Use Case: Integrating data cleaning with machine learning workflows.

4. Pandas Profiling (now ydata-profiling):

- Features: HTML-based profiling reports, data visualizations, and statistical insights.

- Use Case: Quick exploratory data analysis and identification of data issues.

5. Dedupe.io:

- Features: Record linkage, deduplication, fuzzy matching.

- Use Case: Efficiently handling and merging duplicate records.

6. IBM InfoSphere QualityStage:

- Features: Data standardization, address validation, survivorship rules.

- Use Case: Enterprise-level data quality management.

7. Talend Data Preparation:

- Features: Data wrangling, cleaning, and transformation.

- Use Case: Preparing data for analysis and reporting.

8. WinPure Clean & Match:

- Features: Deduplication, data cleansing, matching, and merging.

- Use Case: Enhancing data quality for improved decision-making.

9. RapidMiner:

- Features: Data cleaning operators, anomaly detection.

- Use Case: Integrating data cleaning into end-to-end data science workflows.

10. Microsoft Excel (Power Query):

- Features: Data transformation, cleaning, and shaping.

- Use Case: Quick and familiar tool for small to medium-sized datasets.
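To give a feel for what "clustering for duplicate detection" and "fuzzy matching" mean in tools like these, here is a small standard-library sketch. It is a stand-in for the general ideas only and does not use or reproduce the API of any tool listed above; the `fingerprint` normalization rules and the sample names are assumptions.

```python
import string
from collections import defaultdict
from difflib import SequenceMatcher


def fingerprint(value):
    """Normalize a string into a comparison key:
    trim, lowercase, drop ASCII punctuation, then sort the unique tokens."""
    text = value.strip().lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(sorted(set(text.split())))


def cluster_by_fingerprint(values):
    """Group values whose fingerprints collide; return only groups with more than one member."""
    groups = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return [group for group in groups.values() if len(group) > 1]


def similarity(a, b):
    """Fuzzy similarity in [0, 1] between two normalized strings."""
    return SequenceMatcher(None, fingerprint(a), fingerprint(b)).ratio()


# Hypothetical company names for illustration.
names = ["Acme Corp.", "acme corp", "Corp Acme", "Globex Inc", "Globex, Inc.", "Initech"]
clusters = cluster_by_fingerprint(names)
print(clusters)

# Near-duplicates that differ by a typo do not share a fingerprint,
# so a similarity score with a chosen threshold is used instead.
print(round(similarity("Jon Smith", "John Smith"), 3))
```

Key-based clustering is cheap and precise but misses typos; similarity scoring catches them but needs a threshold and usually a human review step before records are merged.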

Data cleansing vs. data cleaning vs. data scrubbing

| Aspect | Data Cleansing | Data Cleaning | Data Scrubbing |
| --- | --- | --- | --- |
| Definition | Process of correcting or handling errors in data. | A general term encompassing various activities to improve data quality. | Focuses on identifying and removing inaccuracies and inconsistencies in data. |
| Scope | A broader term that includes activities beyond error correction. | Encompasses a range of activities to ensure data accuracy and reliability. | Specifically targets data inaccuracies and errors. |
| Activities | Error correction, handling inconsistencies, and more. | Handling missing values, duplicates, outliers, etc. | Identifying and removing errors, ensuring data accuracy. |
| Focus | Correcting and improving data quality. | Ensuring overall data quality. | Identifying and removing inaccuracies. |
| Usage Context | Commonly used as a general term for improving data. | A general term used in data management and analytics. | A more specific term used when dealing with data errors. |

Challenges of Data Cleaning in Data Mining

Data cleaning in data mining is a crucial but challenging process due to various factors that can compromise the quality and reliability of datasets. These challenges include:

- Missing Data: Handling incomplete datasets and determining the most suitable method for imputing missing values without introducing bias.

- Duplicates: Identifying and efficiently handling duplicate records, especially in large datasets, to prevent analysis redundancy.

- Outliers: Detecting outliers and deciding whether to correct, remove, or analyze them separately, considering their potential impact on statistical models.

- Inconsistencies: Resolving conflicting information within the dataset, which may arise from errors or variations in data collection methods.

- Standardization: Ensuring uniformity in data formats (dates, units, etc.) to maintain consistency and facilitate meaningful comparisons.

- Scalability: Managing data cleaning processes for large datasets efficiently, as the computational demands and processing time can increase significantly.

- Dynamic Data Sources: Adapting to data from dynamic sources, whose structure and content may change over time and require continuous updates to the cleaning process.

- Time and Resource Constraints: Balancing the need for thorough data cleaning with the available time and computational resources, particularly in time-sensitive projects.

- Data Quality Assessment: Evaluating the overall quality of the data and establishing criteria for acceptable levels of accuracy, completeness, and consistency.

- Integration with Automated Tools: Ensuring seamless integration and compatibility between automated data cleaning tools and existing data mining workflows.
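The data quality assessment challenge becomes more tractable once "acceptable quality" is expressed as numbers. The sketch below computes per-field completeness and a duplicate rate, then compares them with minimum thresholds. The metric definitions, field names, and threshold values are illustrative assumptions, not a standard.

```python
def completeness(records, fields):
    """Share of non-missing values per field (None and empty strings count as missing)."""
    total = len(records)
    return {
        field: sum(r.get(field) not in (None, "") for r in records) / total
        for field in fields
    }


def duplicate_rate(records, key_fields):
    """Share of records that repeat an earlier combination of key fields."""
    keys = [tuple(r.get(f) for f in key_fields) for r in records]
    return 1 - len(set(keys)) / len(keys)


def failed_checks(metrics, thresholds):
    """Names of metrics that fall below their minimum acceptable value."""
    return [name for name, minimum in thresholds.items() if metrics[name] < minimum]


# Hypothetical records and thresholds for illustration.
records = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": None},
    {"id": 3, "email": "c@example.com", "age": 29},
    {"id": 3, "email": "c@example.com", "age": 29},
]

scores = completeness(records, ["id", "email", "age"])
dup_rate = duplicate_rate(records, ["id"])
problems = failed_checks(scores, {"email": 0.9, "age": 0.7})

print(scores)
print(dup_rate)
print(problems)
```

Running the same checks before and after cleaning gives a simple, repeatable way to show whether a cleaning step actually improved the dataset.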

Best Practices in Data Cleaning

Data cleaning is an essential stage in the data preparation process to guarantee the quality and dependability of datasets for efficient analysis. Best practices call for a systematic approach:

- Understand the Data: Before cleaning, gain a deep understanding of the dataset, its structure, and the context in which it was collected.

- Develop Data Cleaning Protocols: Establish standardized procedures and documentation for data cleaning to ensure consistency and reproducibility.

- Address Missing Values Strategically: Choose appropriate strategies for handling missing values, such as imputation or removal, based on the nature of the data and analysis goals.

- Detect and Handle Duplicates: Implement methods to identify and remove duplicate records, maintaining data integrity and avoiding redundancy.

- Manage Outliers with Domain Knowledge: Approach outliers with an understanding of the domain, deciding whether to correct, remove, or analyze them separately.

- Standardize Data Formats: Ensure uniformity in data formats, including date formats, units of measurement, and text case, for consistency.

- Resolve Inconsistencies: Use domain knowledge to address conflicting information within the dataset, ensuring accuracy and coherence.

- Leverage Exploratory Data Analysis (EDA): Employ visualizations and statistical analyses to explore data patterns, trends, and anomalies.

- Utilize Automated Data Cleaning Tools: Explore and integrate specialized tools that automate certain aspects of data cleaning, enhancing efficiency.

- Implement Continuous Monitoring: Regularly monitor data quality, update cleaning processes, and integrate data cleaning into routine maintenance for sustained accuracy.
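One lightweight way to make a cleaning protocol reproducible is to run every step through a small wrapper that records what it changed. The sketch below is one possible design under stated assumptions: the `CleaningLog` class, the step functions, and the sample records are all hypothetical.

```python
from datetime import datetime, timezone


class CleaningLog:
    """Applies cleaning steps one at a time and records what each one changed."""

    def __init__(self):
        self.entries = []

    def apply(self, name, step, records):
        """Run one step and log the row counts before and after it."""
        result = step(records)
        self.entries.append({
            "step": name,
            "rows_in": len(records),
            "rows_out": len(result),
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return result


def drop_missing_id(records):
    """Remove records that have no identifier."""
    return [r for r in records if r.get("id") is not None]


def drop_duplicate_ids(records):
    """Keep the first record for each identifier."""
    seen, unique = set(), []
    for r in records:
        if r["id"] not in seen:
            seen.add(r["id"])
            unique.append(r)
    return unique


# Hypothetical input for illustration.
raw = [
    {"id": 1, "city": "Pune"},
    {"id": None, "city": "Delhi"},
    {"id": 2, "city": "Goa"},
    {"id": 2, "city": "Goa"},
]

log = CleaningLog()
cleaned = log.apply("drop_missing_id", drop_missing_id, raw)
cleaned = log.apply("drop_duplicate_ids", drop_duplicate_ids, cleaned)

for entry in log.entries:
    print(entry["step"], entry["rows_in"], "->", entry["rows_out"])
```

Because each step is a named function and the log stores row counts with timestamps, the same sequence can be rerun on new data and the results compared over time, which supports both documentation and continuous monitoring.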

Future Trends

Future trends in data cleaning will be shaped by technological advances, new techniques, and changing patterns of data use. Here are some probable trends:

- Integration of Artificial Intelligence & Machine Learning: Increased use of AI and ML algorithms for automated identification and cleaning of data errors, leveraging predictive modeling and pattern recognition.

- Blockchain for Data Provenance: Integrating blockchain technology to establish and trace the provenance of data, enhancing transparency and trust in data sources.

- Advanced Data Quality Metrics: Development and adoption of more sophisticated data quality metrics to provide nuanced assessments beyond traditional completeness and accuracy measures.

- Automated Data Cleaning as a Service: Emergence of cloud-based platforms offering automated data cleaning, allowing organizations to leverage specialized tools without heavy infrastructure requirements.

- Privacy-Preserving Data Cleaning: Increased focus on data cleaning methods that preserve individual privacy, especially in light of evolving data protection regulations.

- Data Cleaning in Edge Computing: Integration of data cleaning processes into edge computing environments, allowing for real-time data cleaning at the source.

- Natural Language Processing (NLP) for Text Data Cleaning: Advancements in NLP techniques to improve the cleaning of unstructured text data, addressing challenges such as entity resolution and sentiment analysis.

- Explainable Data Cleaning Algorithms: Development of algorithms that provide transparent explanations for the decisions made during the data cleaning process, enhancing interpretability and trust.

- Crowdsourced Data Cleaning: Use of crowdsourcing platforms for large-scale data cleaning tasks, leveraging human intelligence to complement automated methods.

- Context-Aware Data Cleaning: Adoption of context-aware approaches that consider the specific characteristics and requirements of different domains, industries, or applications.

Conclusion

Data cleaning stands as an indispensable process in the realm of data science, ensuring the accuracy and reliability of datasets. Data cleaning lays the foundation for trustworthy analyses, credible insights, and informed decision-making by addressing errors, inconsistencies, and inaccuracies. Its significance extends across industries, fostering data integrity, enhancing the credibility of results, and supporting compliance with regulatory standards. As technology evolves, the continual emphasis on meticulous data cleaning remains vital, underscoring its pivotal role in unlocking the true potential of data for meaningful and impactful applications in various domains.

Frequently Asked Questions (FAQ)

Q1. How does data cleaning impact machine learning models?

Answer: Clean data is required for accurate machine learning model training. Data cleansing helps predictive modeling succeed by providing an accurate and representative input dataset.

Q2. How does data cleaning contribute to regulatory compliance?

Answer: In regulated industries, data cleaning supports compliance with data quality standards, ethical guidelines, and legal requirements, and it lays the foundation for reliable analysis.

Q3. Can data cleaning be applied to real-time data streams?

Answer: Yes, data cleaning can be adapted for real-time data streams, ensuring that data is cleaned and prepared for analysis as it arrives, enabling timely and accurate insights.

Q4. Is data cleaning a one-time process or an ongoing activity?

Answer: Data cleaning is ideally an ongoing activity. Regular monitoring and maintenance are essential to adapt to changes in the dataset, ensuring sustained data quality and reliability over time.
