What is Data Exploration

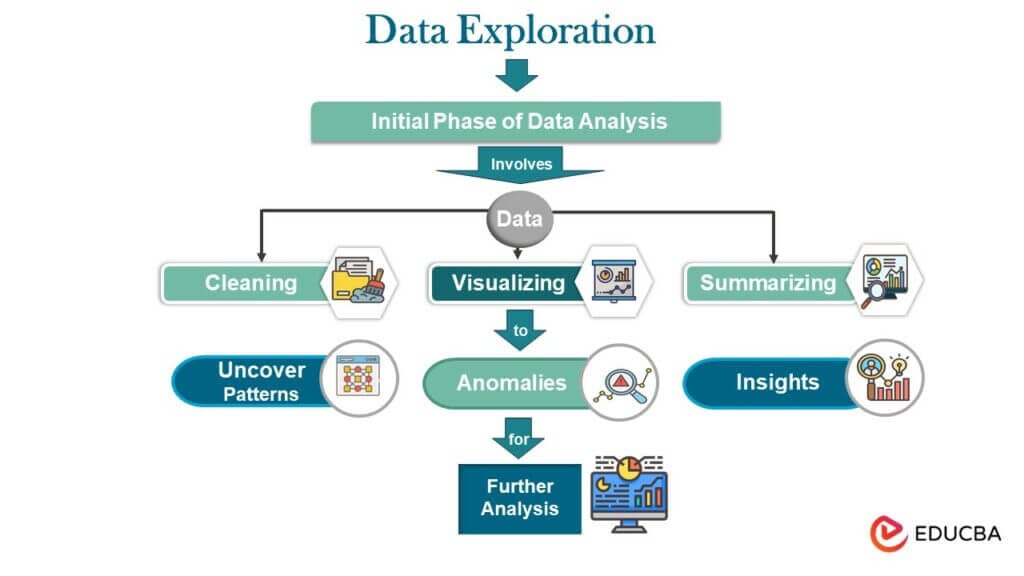

Data exploration is the essential first step in the data analysis process. It involves scrutinizing datasets to uncover hidden patterns, outliers, and insights. Whether in business, healthcare, or research, data exploration serves as the compass guiding decision-makers. This article sheds light on the intricacies of data exploration, from understanding datasets and cleaning data to advanced techniques and the power of data visualization. It provides readers with the tools to unlock their data’s hidden secrets.

Table of Contents

- What is Data Exploration

- Importance

- How Data Exploration Works

- Tools

- Data Exploration in Machine Learning

- GIS

- Interactive Data Exploration

- Best Language for Data Exploration

- Data exploration vs. data mining

- Which industries use it?

- Advanced Data Exploration Techniques

- Real-Life Applications

- Future Trends

Importance of Data Exploration

Data exploration is crucial for several reasons:

- Identifying Patterns and Trends: Data exploration enables businesses to make better choices based on historical data by revealing relevant patterns and trends within the data.

- Understanding Relationships: Exploratory data analysis allows businesses to understand relationships between variables. This insight is valuable for strategizing marketing campaigns, optimizing processes, and improving overall efficiency.

- Detecting Anomalies and Outliers: It aids in identifying outliers and anomalies in the data, which might indicate errors or unique occurrences. Detecting these irregularities is vital for maintaining data quality and accuracy.

- Informing Decision-Making: By exploring data, organizations gain insights into customer behavior, market trends, and operational efficiencies. This information is instrumental in making data-driven decisions that can enhance products, services, and overall business strategies.

- Enhancing Predictive Modeling: It provides a deep understanding of the data distribution, helping data scientists select appropriate variables for predictive modeling. Understanding the data thoroughly improves the accuracy and reliability of machine learning algorithms.

- Improving Data Quality: Data inconsistencies and missing values can be identified and corrected through exploration. Clean and reliable data is essential for meaningful analysis and reporting.

- Facilitating Communication: Data visualization, a significant component of data exploration, simplifies complex datasets into understandable visual representations. These visuals facilitate communication among stakeholders, making it easier to convey insights and trends.

- Innovation and Competitive Advantage: Businesses can gain a competitive edge by exploring data creatively. Innovative solutions often arise from a deep understanding of customer preferences and market dynamics, which can be explored through comprehensive data analysis.

How Data Exploration Works

Here’s a step-by-step guide on how data exploration works:

- Define Your Objective:

Start by understanding the problem or question you want to answer through data exploration. Having a clear goal will help focus your exploration.

- Gather the Data:

Collect the relevant data for your analysis. This could involve data acquisition from various sources, such as databases, APIs, spreadsheets, or files.

- Understand the Data:

Examine the data’s structure and format. Key steps include:

- Data Loading: Import the data into your analysis environment (e.g., Python, R, Excel).

- Data Description: Check the dataset’s size, shape, and basic statistics (e.g., mean, median, standard deviation).

- Data Types: Identify the types of variables (categorical, numerical, date, etc.).

- Column Names: Review the column names for clarity and consistency.
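The four checks above can be sketched in a few lines of pandas. This is a minimal illustration, not a prescribed workflow: the CSV content and column names (`order_id`, `order_date`, `region`, `units`, `unit_price`) are hypothetical and stand in for whatever source you actually load.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for a real file or database export.
CSV_TEXT = """order_id,order_date,region,units,unit_price
1,2023-01-05,North,3,19.99
2,2023-01-06,South,1,5.49
3,2023-01-06,North,,12.00
4,2023-01-08,East,7,3.25
5,2023-01-09,South,2,19.99
"""

# Data loading: read the CSV and parse the date column as datetimes.
df = pd.read_csv(io.StringIO(CSV_TEXT), parse_dates=["order_date"])

# Data description: number of rows and columns, then basic statistics.
print(df.shape)
print(df.describe())

# Data types: one dtype per column (numeric, datetime, object/text).
print(df.dtypes)

# Column names: review them for clarity and consistency.
print(list(df.columns))
```

With a real file, `pd.read_csv("path/to/file.csv")` would replace the in-memory string; the remaining calls stay the same.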

- Data Cleaning:

Before diving into exploration, address data quality issues:

- Missing Data: Handle missing values through imputation or removal.

- Outliers: Detect and address outliers that could skew results.

- Data Transformation: Normalize, standardize, or scale data when necessary.
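As a rough sketch of these three cleaning steps, the example below imputes missing values with the column median, drops outliers using the common 1.5 × IQR rule, and standardizes the result. The data and column names are made up, and median imputation and the IQR rule are only one reasonable choice among several.

```python
import numpy as np
import pandas as pd

# Hypothetical data with two missing values and one extreme income.
df = pd.DataFrame({
    "age": [25, 31, np.nan, 42, 38, 29],
    "income": [42_000, 48_000, 51_000, 1_000_000, 45_000, np.nan],
})

# Missing data: impute each column with its median.
clean = df.fillna(df.median(numeric_only=True))

# Outliers: flag incomes outside 1.5 * IQR of the quartiles, then drop them.
q1, q3 = clean["income"].quantile([0.25, 0.75])
iqr = q3 - q1
is_outlier = (clean["income"] < q1 - 1.5 * iqr) | (clean["income"] > q3 + 1.5 * iqr)
clean = clean[~is_outlier]

# Data transformation: standardize to zero mean and unit (sample) standard deviation.
standardized = (clean - clean.mean()) / clean.std()
print(standardized)
```

Whether to remove, cap, or keep an outlier depends on whether it is an error or a genuine observation, so the drop step here is an assumption made for illustration.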

- Data Visualization:

Create visual representations of the data to reveal patterns and relationships. Common visualization techniques include:

- Bar Charts and Histograms: Display frequency distributions of categorical and numerical data.

- Scatter Plots: Show relationships between two numerical variables.

- Box Plots: Visualize the spread and distribution of numerical data.

- Heat Maps: Display correlations between variables.

- Time Series Plots: Explore data over time.
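A few of these chart types can be produced with Matplotlib as follows. The data is synthetic, the column names `height_cm` and `weight_kg` are invented for the example, and the output file name is arbitrary.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Synthetic data: weight loosely depends on height, plus noise.
rng = np.random.default_rng(0)
df = pd.DataFrame({"height_cm": rng.normal(170, 10, 200)})
df["weight_kg"] = 0.9 * df["height_cm"] - 85 + rng.normal(0, 8, 200)

fig, axes = plt.subplots(1, 3, figsize=(12, 4))

# Histogram: frequency distribution of one numerical variable.
axes[0].hist(df["height_cm"], bins=20)
axes[0].set_title("Histogram of height")

# Scatter plot: relationship between two numerical variables.
axes[1].scatter(df["height_cm"], df["weight_kg"], s=10)
axes[1].set_title("Height vs. weight")

# Box plot: spread, quartiles, and potential outliers.
axes[2].boxplot(df["weight_kg"].to_numpy())
axes[2].set_title("Box plot of weight")

fig.tight_layout()
fig.savefig("exploration_plots.png")  # arbitrary output file name
```

In a Jupyter notebook the `matplotlib.use("Agg")` line is unnecessary, since figures render inline.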

- Summary Statistics:

Compute summary statistics to gain a deeper understanding of the data:

- Central Tendency: Calculate mean, median, and mode.

- Dispersion: Assess variance, standard deviation, and range.

- Skewness and Kurtosis: Understand the shape of the data distribution.

- Correlation: Evaluate relationships between variables.
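The statistics listed above map directly onto pandas methods. The small dataset below (`hours_studied`, `exam_score`) is hypothetical and only serves to show the calls.

```python
import pandas as pd

# Hypothetical dataset for illustration.
df = pd.DataFrame({
    "hours_studied": [1, 2, 2, 3, 4, 5, 6, 8],
    "exam_score": [52, 55, 60, 61, 70, 74, 80, 93],
})
hours = df["hours_studied"]

# Central tendency.
central = {
    "mean": hours.mean(),
    "median": hours.median(),
    "mode": hours.mode().tolist(),  # mode() can return several values
}

# Dispersion (pandas uses the sample formulas, ddof=1, by default).
dispersion = {
    "variance": hours.var(),
    "std": hours.std(),
    "range": hours.max() - hours.min(),
}

# Shape of the distribution.
shape = {"skewness": hours.skew(), "kurtosis": hours.kurt()}

# Correlation (Pearson by default) between the two variables.
correlation = df["hours_studied"].corr(df["exam_score"])

print(central, dispersion, shape, correlation, sep="\n")
```

For a quick overview of every numeric column at once, `df.describe()` and `df.corr()` cover much of the same ground.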

- Exploratory Data Analysis (EDA):

Perform in-depth analysis to uncover patterns, anomalies, and insights:

- Frequency Analysis: Examine the distribution of categorical data.

- Box Plots and Violin Plots: Visualize data distributions and outliers.

- Correlation Matrix: Identify relationships between numerical variables.

- Hypothesis Testing: Conduct statistical tests to assess whether the data provide enough evidence to reject a hypothesis.
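Two of these EDA activities, frequency analysis and hypothesis testing, might look like the sketch below. The data is invented, and Welch's t-test from SciPy is just one test that could apply here; the right test depends on the data and the question.

```python
import pandas as pd
from scipy import stats

# Hypothetical customer data.
df = pd.DataFrame({
    "plan": ["basic", "basic", "premium", "basic", "premium",
             "premium", "basic", "premium", "basic", "premium"],
    "monthly_spend": [20, 22, 35, 19, 40, 38, 21, 36, 23, 41],
})

# Frequency analysis: how often each category occurs.
plan_counts = df["plan"].value_counts()
print(plan_counts)

# Hypothesis test: do the two plans differ in mean spend?
# Welch's t-test does not assume equal variances between the groups.
basic = df.loc[df["plan"] == "basic", "monthly_spend"]
premium = df.loc[df["plan"] == "premium", "monthly_spend"]
t_stat, p_value = stats.ttest_ind(basic, premium, equal_var=False)
print(f"t = {t_stat:.2f}, p = {p_value:.4f}")
```

A small p-value suggests that the difference in means is unlikely to be due to chance alone; it does not by itself say how large or how important the difference is.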

- Dimensionality Reduction (Optional):

If dealing with high-dimensional data, consider techniques like Principal Component Analysis (PCA) or t-distributed Stochastic Neighbor Embedding (t-SNE) to decrease the number of variables.
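A minimal PCA sketch with scikit-learn is shown below. The data is synthetic: five observed features are generated from two hidden factors through a hand-picked mixing matrix, so two components should capture nearly all of the variance. Real data will rarely be this clean.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)

# Synthetic data: 5 features driven by 2 hidden factors plus a little noise.
latent = rng.normal(size=(200, 2))
mixing = np.array([
    [1.0, 0.5, -1.0, 0.8, 0.0],
    [0.0, 1.0, 0.5, -0.6, 1.2],
])
X = latent @ mixing + 0.05 * rng.normal(size=(200, 5))

# PCA is sensitive to scale, so standardize the features first.
X_scaled = StandardScaler().fit_transform(X)

# Project onto the two directions of greatest variance.
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

# Share of the total variance captured by each component.
print(pca.explained_variance_ratio_)
```

Checking `explained_variance_ratio_` is a quick way to judge how much information the reduced representation keeps.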

- Clustering (Optional):

Use clustering algorithms like K-Means or Hierarchical Clustering to group similar data points for further analysis.
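As an illustration, K-Means from scikit-learn can separate two synthetic, well-separated groups of points. The number of clusters is assumed to be known here; in practice it usually has to be chosen, for example by comparing several values.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)

# Two synthetic groups of 2-D points centered at (0, 0) and (5, 5).
group_a = rng.normal(loc=[0, 0], scale=0.5, size=(50, 2))
group_b = rng.normal(loc=[5, 5], scale=0.5, size=(50, 2))
X = np.vstack([group_a, group_b])

# Fit K-Means with k=2; n_init restarts reduce sensitivity to initialization.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = kmeans.fit_predict(X)

print(kmeans.cluster_centers_)
print(np.bincount(labels))  # number of points assigned to each cluster
```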

- Interpret Results:

Draw meaningful insights from your data exploration. What have you discovered about your data? How does it relate to your initial objective?

- Document Your Findings:

Record your data exploration process, visualizations, and key findings. This documentation will be valuable for reporting and sharing insights.

- Iterate and Refine:

Data exploration is often an iterative process. You may need to revisit and refine your analysis as you gather more insights or adjust your objectives.

Data Exploration Tools

Here is a list of several popular tools that you may find useful for your data analysis tasks:

1. Python:

- Pandas: A powerful Python library that allows efficient data manipulation and analysis, making it a crucial tool for data cleaning and preprocessing.

- Matplotlib: A widely used Python library for creating static, animated, and interactive visualizations.

- Seaborn: A data visualization library built on Matplotlib, designed for creating attractive and informative statistical graphics.

2. R:

- RStudio: A widely used integrated development environment (IDE) for R. It offers data analysis, visualization, and reporting features.

- ggplot2: A popular R package for creating elegant and customizable data visualizations based on the Grammar of Graphics.

3. Jupyter Notebook:

An open-source web application for creating and sharing documents with live code, equations, visualizations, and narrative text. It supports many programming languages, including Python and R.

4. Tableau:

A data visualization tool that simplifies creating interactive and shareable dashboards for all levels of technical expertise.

5. Power BI:

Microsoft’s business analytics solution for creating interactive reports and dashboards. It connects to numerous data sources, making it suitable for data exploration and business intelligence.

6. Excel:

Microsoft Excel is a well-known data exploration tool, particularly for smaller datasets. It can perform basic data cleaning, visualization, and analysis.

7. SQL and Relational Databases:

SQL (Structured Query Language) is essential for data retrieval and manipulation when working with relational databases. Tools like MySQL, PostgreSQL, and SQL Server are commonly used for data exploration in database-driven scenarios.

8. Google Data Studio (now Looker Studio):

A free tool for creating dynamic and customizable reports and dashboards from data sources such as Google Analytics and Google Sheets.

9. Apache Spark:

An open-source, big data processing framework that can be used for data exploration at scale. It supports distributed data analysis and machine learning.

10. KNIME:

An open-source data analytics, reporting, and integration platform with a visual workflow interface that provides a wide range of data exploration and analysis capabilities.

11. RapidMiner:

A data science platform that includes data preparation, machine learning, and predictive analytics. It has a visual design environment for data exploration and analysis.

12. Orange:

An open-source data visualization and analysis tool with a user-friendly interface, making it accessible for those without extensive coding skills.

13. QlikView/Qlik Sense:

Tools for data visualization, exploration, and business intelligence. They provide associative data modeling, making it easier to explore data relationships.

14. IBM SPSS:

A software package for statistical analysis, which includes data exploration and visualization capabilities.

15. SAS:

A comprehensive analytics platform that offers data exploration, data mining, and statistical analysis tools for enterprise-level data analysis.

Data Exploration in Machine Learning

Here are the key points of data exploration in the context of machine learning:

- Understanding the Dataset:

- Grasp the dataset’s structure, including features and target variables.

- Identify data types (numerical, categorical) and potential issues (missing values, outliers).

- Data Cleaning and Preprocessing:

- Handle missing data through imputation or removal techniques.

- Detect and deal with outliers to ensure data quality.

- Normalize or standardize numerical features for consistency.

- Exploratory Data Analysis (EDA):

- Generate descriptive statistics to understand the data’s central tendencies and spread.

- Visualize data using histograms, box plots, and scatter plots to identify patterns and correlations.

- Perform correlation analysis to understand feature relationships.

- Feature Engineering:

- Create new features based on domain knowledge to enhance model performance.

- Encode categorical variables using techniques like one-hot encoding or label encoding.

- Transform variables (logarithmic, polynomial) to capture complex relationships.

- Dimensionality Reduction:

- Apply techniques like Principal Component Analysis (PCA) to reduce the number of features.

- Preserve essential information while reducing computational complexity.

- Data Splitting:

- To accurately evaluate the model’s performance, separate the dataset into training and testing sets.

- Preserve the class proportions in both sets (for example, through stratified sampling) so that the evaluation is not skewed by class imbalance.

- Visualization and Interpretation:

- Use advanced visualization tools to create interactive charts and dashboards.

- Interpret model predictions and visualize decision boundaries for better understanding.

- Iterative Process:

- Data exploration is iterative; revisiting the exploration phase might be necessary after model evaluation.

- Continuously refine the process based on insights gained during model building and testing.

- Documentation:

- Document findings, transformations, and visualizations to share insights with stakeholders.

- Maintain a detailed record of data exploration steps for reproducibility and future reference.

- Validation and Cross-Validation:

- Validate model performance using techniques like cross-validation to ensure consistency.

- Fine-tune the model based on insights gained from validation results.
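Several of the points above (encoding, scaling, stratified splitting, and cross-validation) can be combined in one short scikit-learn sketch. Everything about the data is hypothetical: the columns `tenure_months`, `plan`, and `churned` are invented, and logistic regression is used only as a simple stand-in model.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical customer data with one numerical and one categorical feature.
rng = np.random.default_rng(1)
n = 200
df = pd.DataFrame({
    "tenure_months": rng.integers(1, 60, n),
    "plan": rng.choice(["basic", "premium"], n),
})
# Invented target: customers with short tenure churn more often.
df["churned"] = (df["tenure_months"] + rng.normal(0, 10, n) < 20).astype(int)

X, y = df[["tenure_months", "plan"]], df["churned"]

# Data splitting: stratify=y keeps the class proportions similar in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Preprocessing: standardize the numerical column, one-hot encode the categorical one.
preprocess = ColumnTransformer([
    ("num", StandardScaler(), ["tenure_months"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
])
model = make_pipeline(preprocess, LogisticRegression())

# Cross-validation on the training set, then a final check on the held-out test set.
cv_scores = cross_val_score(model, X_train, y_train, cv=5)
model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)

print(cv_scores.mean(), test_accuracy)
```

Putting the preprocessing inside the pipeline means it is refit on each cross-validation fold, which avoids leaking information from the validation data into the scaler or encoder.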

Data Exploration in GIS

Data exploration in GIS (Geographic Information Systems) involves the systematic analysis and visualization of geospatial data. It includes tasks like:

- Data Visualization: Creating maps, graphs, and spatial representations to analyze spatial patterns.

- Spatial Analysis: Examining relationships, proximity, and spatial statistics.

- Attribute Exploration: Investigating non-spatial data associated with geographic features.

- Geoprocessing: Performing operations like buffering, clipping, and overlay analysis.

Data exploration in GIS is crucial for informed decision-making, resource management, and solving spatial problems.
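A very small proximity query gives a flavor of spatial analysis. The sketch below uses only the Python standard library and the haversine formula for great-circle distance; the site list and the helper names `haversine_km` and `sites_within` are made up for the example. Real GIS work typically relies on dedicated libraries such as GeoPandas and on projected coordinate systems.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two (lat, lon) points in degrees."""
    radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


# Hypothetical sites with approximate city-center coordinates.
SITES = {
    "Paris": (48.8566, 2.3522),
    "London": (51.5074, -0.1278),
    "Berlin": (52.5200, 13.4050),
}


def sites_within(center_name, radius_km):
    """Names of all other sites within radius_km of the given site (a simple buffer query)."""
    lat, lon = SITES[center_name]
    return sorted(
        name
        for name, (s_lat, s_lon) in SITES.items()
        if name != center_name and haversine_km(lat, lon, s_lat, s_lon) <= radius_km
    )


paris_london = haversine_km(*SITES["Paris"], *SITES["London"])
print(round(paris_london), sites_within("Paris", 500))
```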

Interactive Data Exploration

Key aspects of interactive data exploration include:

- Visual Exploration: Users can manipulate data visualizations (e.g., charts, maps, dashboards) to drill down into specific data points, zoom in, filter, or highlight areas of interest.

- Dynamic Filtering: Interactive exploration allows for real-time adjustments of filters and parameters to view data subsets or specific dimensions instantly.

- Tool Customization: Users can choose from various tools and parameters to suit their analytical needs, making it easier to explore data effectively.

- Feedback Loop: Users can observe the immediate impact of their actions, which fosters a more iterative and exploratory data analysis process.

- User-Friendly Interfaces: Interactive exploration tools are often designed with intuitive interfaces, making it accessible to a wider audience, including non-technical users.

- Collaboration: Interactive exploration can support collaborative efforts, allowing multiple users to work together in real-time and share insights.

Best Language for Data Exploration

Some of the most popular languages for data exploration include:

- Python: One of the best languages for data exploration. It is a versatile choice for data analysis and visualization due to its rich ecosystem of libraries and tools, such as Pandas, NumPy, Matplotlib, Seaborn, and Jupyter Notebooks.

- R: R is another powerful language specifically designed for statistics and data analysis. It excels in data exploration with packages like ggplot2, dplyr, and shiny, which provide extensive data visualization and manipulation capabilities.

- SQL: Structured Query Language (SQL) is excellent for exploring and querying structured databases. It’s essential for data exploration when working with relational databases.

- Jupyter Notebooks: While not a language, Jupyter Notebooks provide an interactive environment for data exploration and analysis. You can use Jupyter with Python, R, Julia, and more, making it a versatile choice.

- Julia: Julia is gaining popularity in the data science community for its high-performance computing capabilities. It’s well-suited for exploring large datasets and conducting complex computations.

- Scala: Scala, often used with Apache Spark, is beneficial for big data exploration and analysis because Spark provides distributed computing capabilities.

Data exploration vs. data mining

| Aspect | Data Exploration | Data Mining |

| Purpose | To understand and describe data. | To discover hidden patterns and relationships in data. |

| Stage in Data Analysis | Early stage; precedes data mining. | Follows data exploration; more in-depth analysis. |

| Main Activities | Descriptive statistics, data visualization, data cleaning. | Predictive modeling, clustering, association rule mining. |

| Focus | Summary statistics, data visualization, and outlier detection. | Prediction, classification, clustering, and pattern recognition. |

| Goal | Gain insights, identify data issues, and prepare data for modeling. | Extract actionable knowledge, make predictions, and find associations. |

| Techniques | Basic statistics, visualization tools, and data cleaning. | Machine learning, algorithms, pattern recognition. |

| Tools and Software | Pandas, Matplotlib, R, Excel. | Scikit-learn, TensorFlow, Weka, RapidMiner. |

| Example Application | Identifying missing values and visualizing data distributions. | Predicting customer churn, market basket analysis. |

Data Discovery vs Data Exploration

| Aspect | Data Discovery | Data Exploration |

| Goal | Identifying new and valuable insights, often from big data and unstructured data sources. | Understanding the dataset, finding patterns, and gaining initial insights. |

| Stage in Data Analysis | Typically follows data exploration. | The initial step in the data analysis process; precedes data mining. |

| Data Sources | Emphasizes diverse and unstructured data sources, including big data, social media, and sensor data. | Focuses on structured and semi-structured data, often from databases and spreadsheets. |

| Techniques | Advanced analytics, machine learning, natural language processing, sentiment analysis. | Descriptive statistics, data visualization, basic statistical analysis. |

| Tools and Software | Apache Hadoop, Spark, Elasticsearch. | Pandas, R, Matplotlib, Tableau. |

| Use Case | Uncovering novel trends, patterns, and relationships from large and complex datasets. | Cleaning, transforming, and summarizing data for further analysis. |

| Focus | Big data analytics, predictive modeling, and identifying emerging trends. | Visualizing data distributions, detecting outliers, and understanding feature relationships. |

Data Examination vs Data Exploration

| Aspect | Data Examination | Data Exploration |

| Objective | Reviewing data for known aspects, confirming specific details or requirements. | Understanding the overall dataset, finding patterns, and uncovering hidden insights. |

| Timing | Typically conducted as a follow-up to data exploration. | Usually, the initial step in the data analysis process. |

| Focus | Confirming specific data details or characteristics. | General understanding of data, detecting anomalies, and preparing for further analysis. |

| Techniques | Data validation, verification, and fact-checking. | Descriptive statistics, data visualization, preliminary data cleaning. |

| Use Case | Ensuring data accuracy, compliance with predefined standards, or specific business requirements. | Initial data assessment to determine data quality and usability. |

| Tools and Software | May involve data validation tools, SQL queries, or specific validation scripts. | Pandas, R, Matplotlib, Excel, data profiling tools. |

| Example Scenario | Verifying that customer addresses are correctly formatted and meet postal standards. | Investigating data distributions, identifying missing values, and spotting outliers. |

Which industries use data exploration?

Some of the key industries that extensively use data exploration include:

- Finance and Banking: Banks and financial institutions use data exploration to analyze customer transactions, detect fraudulent activities, assess credit risks, and optimize investment strategies.

- Healthcare: Healthcare organizations explore patient data to identify trends, improve patient outcomes, manage resources efficiently, and enhance healthcare services.

- Retail and E-commerce: Retailers analyze customer behavior, sales patterns, and inventory data to optimize pricing, improve supply chain management, personalize marketing efforts, and forecast demand.

- Marketing and Advertising: Marketers explore customer demographics, preferences, and engagement metrics to design targeted marketing campaigns, measure campaign effectiveness, and optimize customer acquisition and retention strategies.

- Manufacturing: Manufacturers use data exploration to monitor production processes, identify defects, reduce downtime, optimize supply chains, and enhance operational efficiency.

- Telecommunications: Telecom companies explore network data to optimize network performance, predict equipment failures, analyze customer usage patterns, and improve service quality.

- Education: Educational institutions explore student performance data, learning patterns, and attendance records to identify at-risk students, improve teaching methods, and enhance overall educational outcomes.

- Transportation and Logistics: Transportation companies explore data related to routes, delivery times, fuel consumption, and vehicle maintenance to optimize logistics operations, reduce costs, and enhance customer satisfaction.

- Energy: Energy companies explore data to optimize energy production, monitor equipment performance, predict maintenance needs, and improve energy efficiency.

- Government and Public Sector: Government agencies use data exploration for various purposes, such as analyzing census data, tracking public health trends, optimizing urban planning, and improving public services.

Advanced Techniques

Here are some advanced data exploration techniques:

1. Exploratory Data Analysis (EDA):

- Multivariate Analysis: Examining relationships between multiple variables simultaneously to identify patterns not apparent in bivariate analysis.

- Interactive Data Visualization: Using tools like Plotly or Bokeh to create interactive visualizations, enabling users to explore data dynamically.

- Distribution Fitting: Fitting probability distributions to the data to understand the underlying patterns and assess the goodness of fit.

2. Feature Engineering:

- Derived Features: Creating new features from existing ones to capture complex relationships in the data, enhancing the predictive power of machine learning models.

- Temporal Aggregation: Aggregating data over time intervals to identify trends and patterns in time series data.

- Correlation and Causation Analysis:

- Causal Inference: Using causal inference frameworks (such as do-calculus) to reason about causation between variables, moving beyond mere correlation.

- Granger Causality: A statistical hypothesis test of whether past values of one time series help predict another, often used in time series analysis. It indicates predictive usefulness rather than true causation.
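Temporal aggregation, listed under feature engineering above, is straightforward with pandas. The sketch below assumes a hypothetical daily sales series and shows weekly totals plus a 7-day rolling mean as a derived feature.

```python
import numpy as np
import pandas as pd

# Hypothetical daily sales for 8 full weeks, starting on a Monday.
dates = pd.date_range("2023-01-02", periods=56, freq="D")
daily = pd.Series(np.arange(56, dtype=float), index=dates, name="sales")

# Temporal aggregation: total sales per calendar week (weeks end on Sunday).
weekly_total = daily.resample("W").sum()

# Derived feature: 7-day rolling mean, which smooths day-to-day fluctuations.
rolling_mean = daily.rolling(window=7).mean()

print(weekly_total.head())
print(rolling_mean.dropna().head())
```

The first six values of the rolling mean are missing because a full 7-day window is not yet available.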

3. Anomaly Detection:

- Isolation Forest: An unsupervised learning algorithm for detecting anomalies, well suited to high-dimensional datasets.

- One-Class SVM: A support vector machine algorithm for novelty detection, identifying observations that deviate significantly from the norm.
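An Isolation Forest can be tried out with scikit-learn as in the sketch below. The data is synthetic, with three obvious anomalies planted by hand, and the `contamination` value is an assumption about the expected share of anomalies rather than something known in advance.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)

# Synthetic data: 200 ordinary points near the origin plus 3 planted anomalies.
normal_points = rng.normal(0, 1, size=(200, 2))
anomalies = np.array([[8.0, 8.0], [-9.0, 7.5], [10.0, -8.0]])
X = np.vstack([normal_points, anomalies])

# contamination is the assumed fraction of anomalies in the data.
forest = IsolationForest(contamination=0.02, random_state=0)
labels = forest.fit_predict(X)  # 1 = inlier, -1 = anomaly

print(np.where(labels == -1)[0])  # row indices flagged as anomalous
```

`OneClassSVM` from `sklearn.svm` exposes the same `fit_predict` interface, so it can be swapped in for comparison.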

4. Text and Sentiment Analysis:

- Natural Language Processing (NLP): Analyzing textual data to extract meaningful insights, sentiment, and topics using tokenization, stemming, and word embeddings.

- Sentiment Analysis: Determining the sentiment (positive, negative, neutral) of text data to understand customer opinions, reviews, or social media posts.

5. Dimensionality Reduction:

- t-Distributed Stochastic Neighbor Embedding (t-SNE): A technique for visualizing high-dimensional data in two or three dimensions while preserving local relationships between data elements.

- Uniform Manifold Approximation and Projection (UMAP): Another dimensionality reduction technique similar to t-SNE. It is often faster and tends to preserve more of the global structure of the data alongside the local structure.
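A minimal t-SNE sketch with scikit-learn follows. The 10-dimensional data is synthetic, and the `perplexity` value is a guess suited to this small sample; it normally needs tuning and must be smaller than the number of samples. UMAP is not shown because it requires the separate `umap-learn` package.

```python
import numpy as np
from sklearn.manifold import TSNE

rng = np.random.default_rng(0)

# Synthetic data: two groups of 30 points in 10 dimensions.
cluster_a = rng.normal(0, 1, size=(30, 10))
cluster_b = rng.normal(6, 1, size=(30, 10))
X = np.vstack([cluster_a, cluster_b])

# Embed into 2 dimensions for plotting.
tsne = TSNE(n_components=2, perplexity=10, random_state=0)
embedding = tsne.fit_transform(X)

print(embedding.shape)
```

The resulting coordinates are meant for visualization; distances between far-apart groups in a t-SNE plot should not be read as meaningful.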

6. Clustering and Segmentation:

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): A clustering algorithm that groups closely packed data points and labels points in low-density regions as noise (outliers).

- Latent Dirichlet Allocation (LDA): A probabilistic model used for topic modeling, identifying topics within a collection of documents and the words associated with each topic.
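The way DBSCAN separates dense groups from noise can be seen on a small synthetic example. The `eps` and `min_samples` values below are assumptions chosen to fit this toy data; they usually need tuning on real datasets.

```python
import numpy as np
from sklearn.cluster import DBSCAN

rng = np.random.default_rng(0)

# Two dense synthetic groups plus two isolated points.
dense_a = rng.normal([0, 0], 0.2, size=(40, 2))
dense_b = rng.normal([4, 4], 0.2, size=(40, 2))
stray = np.array([[2.0, 10.0], [-6.0, 3.0]])
X = np.vstack([dense_a, dense_b, stray])

# eps: neighborhood radius; min_samples: neighbors needed to form a dense region.
labels = DBSCAN(eps=0.5, min_samples=5).fit_predict(X)

# DBSCAN marks noise points with the label -1.
n_clusters = len(set(labels.tolist()) - {-1})
print(n_clusters, np.where(labels == -1)[0])
```

Unlike K-Means, DBSCAN does not need the number of clusters up front, which makes it convenient when that number is itself part of what is being explored.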

7. Interactive Dashboards:

- Dashboard Tools: Creating interactive dashboards with tools like Tableau, Power BI, or Plotly Dash allows users to explore and analyze data in real-time, promoting data-driven decision-making.

Real-Life Applications

Here are some specific examples of how data exploration is applied in different domains:

1. Healthcare Industry:

- Predictive Analytics for Disease Outcomes: Data exploration is used to analyze historical patient data, identifying patterns and risk factors associated with diseases. Predictive models help healthcare professionals anticipate and prevent disease outbreaks or complications.

- Patient Segmentation for Personalized Medicine: By exploring patient data, healthcare providers can segment patients based on demographics, genetics, and lifestyle factors. This segmentation allows for personalized treatment plans, leading to improved patient outcomes.

2. Business and Marketing:

- Customer Segmentation: Data exploration helps businesses analyze customer data to identify segments based on purchasing behavior, demographics, or psychographics. This information is invaluable for targeted marketing campaigns and product customization.

- Market Basket Analysis: Retailers use data exploration techniques to analyze customer purchase patterns. By exploring transaction data, businesses can identify products frequently bought together, leading to optimized product placements and cross-selling strategies.
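The core of market basket analysis, counting which products appear together, can be sketched with the Python standard library alone. The transactions below are invented, and this simple pair count is only a starting point; dedicated association rule algorithms such as Apriori go further.

```python
from collections import Counter
from itertools import combinations

# Hypothetical transactions: each set holds the items bought together.
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "cereal"},
    {"bread", "butter", "jam"},
    {"milk", "bread"},
]

# Count how often each pair of items appears in the same basket.
pair_counts = Counter()
for basket in transactions:
    for pair in combinations(sorted(basket), 2):
        pair_counts[pair] += 1

# Support: share of all transactions that contain both items of a pair.
support = {pair: count / len(transactions) for pair, count in pair_counts.items()}

top_pair, top_count = pair_counts.most_common(1)[0]
print(top_pair, top_count, support[top_pair])  # ('bread', 'butter') 3 0.6
```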

3. Social Sciences:

- Sentiment Analysis in Social Media Data: Data exploration in social media data allows researchers to understand public sentiment and opinions on various topics. Analyzing trends in social media conversations provides insights into public perception, which can inform social and political strategies.

- Political Polling and Opinion Analysis: Polling data exploration helps political analysts understand voter preferences, sentiments, and trends. By exploring survey data, analysts can make predictions and inform election strategies.

4. Financial Services:

- Fraud Detection: Financial institutions use data exploration techniques to detect unusual patterns and anomalies in transaction data. By exploring transaction histories, they can identify potential fraud and take preventive measures to protect customers and the business.

- Credit Risk Assessment: Data exploration helps assess credit risk by analyzing financial data, customer behavior, and credit histories. Exploring this data allows financial institutions to make informed lending decisions and set appropriate interest rates.

5. Manufacturing and Supply Chain:

- Predictive Maintenance: By exploring sensor data from manufacturing equipment, businesses can predict when machines are likely to fail. Predictive maintenance ensures timely repairs, reduces downtime, and optimizes production processes.

- Inventory Optimization: In the supply chain, data exploration helps businesses optimize inventory levels. By analyzing demand patterns, seasonality, and supplier performance, organizations can maintain optimal stock levels, reducing carrying costs and stockouts.

6. Education:

- Student Performance Analysis: Schools and educational institutions explore student data to identify factors influencing academic performance. By analyzing attendance records, test scores, and other variables, educators can develop targeted interventions to support struggling students.

- Educational Resource Allocation: Data exploration aids in analyzing resource utilization, class attendance, and teacher effectiveness. Schools can optimize resource allocation, ensuring that funds are allocated efficiently for maximum educational impact.

Future Trends

Several future trends are likely to impact the landscape of data exploration in the coming years:

- Artificial Intelligence (AI) and Machine Learning Integration: Integrating AI and machine learning algorithms into data exploration tools will become more prevalent. Automated machine learning (AutoML) techniques will assist analysts in identifying patterns and trends, allowing for faster and more accurate insights extraction.

- Explainable AI: As AI-driven models become more sophisticated, the need for transparency and interpretability is growing. Data exploration methods will evolve to provide clearer explanations of how AI models make decisions, ensuring that insights are not only accurate but also understandable and trustworthy.

- Ethical and Responsible Data Exploration: With increased awareness of data privacy and ethics, there will be a focus on responsible data exploration practices. Companies and researchers will need to navigate the balance between extracting valuable insights and ensuring the ethical use of data, especially concerning issues like bias and fairness.

- Advanced Natural Language Processing (NLP): Advancements in natural language processing (NLP) will enable more accurate sentiment analysis, entity recognition, and language translation. This will provide deeper insights from unstructured data sources like social media, customer reviews, and support tickets.

- Augmented and Virtual Reality (AR/VR) Data Visualization: AR/VR technologies will enable immersive and interactive data visualization experiences. Analysts can explore multidimensional datasets in 3D space, leading to more intuitive insights discovery and improved collaboration among teams.

- Streaming Data Exploration: With the rise of IoT devices and real-time data sources, there will be an increased focus on exploring streaming data. Data exploration tools will need to adapt to handle continuous streams of data, enabling organizations to gain insights in real-time and make immediate decisions.

- Federated Learning: Federated learning enables the training of models across multiple decentralized edge devices without exchanging raw data. Data exploration techniques will need to adapt to this distributed learning paradigm, enabling insights extraction while preserving data privacy and security.

- Graph Data Exploration: With the proliferation of graph databases, exploring and analyzing complex relationships within interconnected data points will become more critical. Graph-based data exploration techniques will be essential for understanding networks, social interactions, fraud patterns, and supply chain relationships.

- Data Exploration in Augmented Analytics: Augmented analytics integrates AI and machine learning directly into the analytics workflow, automating insight discovery and natural language generation. Data exploration is an integral part of augmented analytics, enabling users to explore and validate automatically generated insights.

- Collaborative Data Exploration: Collaborative features within data exploration platforms will become more sophisticated. Real-time collaboration, version control, and shared exploration sessions will enhance teamwork among data analysts, data scientists, and domain experts, fostering a more collaborative and productive environment.

Conclusion

Data exploration is a crucial step in data analysis that enables organizations to discover valuable insights, make informed decisions, and adapt to changing trends. With the integration of AI, ethical considerations, and emerging technologies, the future of data exploration promises even greater accuracy, transparency, and accessibility. Exploring complex and voluminous data is key to success in the data-driven age.
