APACHE SPARK

Specialization | 3 Course Series

This Apache Spark Training includes 3 courses with 12+ hours of video tutorials and one year of access. You learn the fundamental mechanisms and basic internals of the framework, and understand in detail why Spark is needed, how to program with it, and how to use it for machine learning.

Enroll now and get a FREE Exam Voucher worth $285!

What you'll get

- 12+ Hours

- 3 Courses

- Course Completion Certificates

- One year access

- Self-paced Courses

- Technical Support

- Mobile App Access

- Case Studies

Synopsis

- Courses: You get access to all 3 courses and the projects bundle. You do not need to purchase each course separately.

- Hours: 12+ Video Hours

- Core Coverage: Fundamentals of Spark, machine learning using Spark, and Spark Streaming

- Course Validity: One year access

- Eligibility: Anyone who is serious about learning big data with Apache Spark and wants to make a career in this field

- Pre-Requisites: Basic knowledge of data analytics is preferable

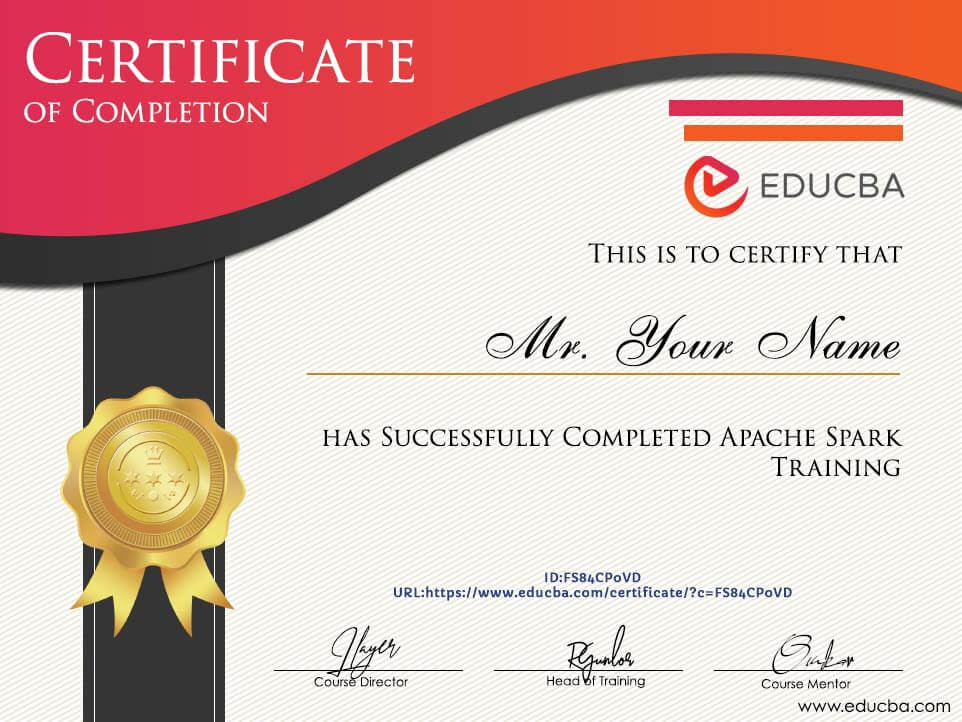

- What do you get? Certificate of Completion for each of the 3 courses and projects

- Certification Type: Course Completion Certificates

- Verifiable Certificates? Yes, you get a verifiable certificate for each course with a unique link. These links can be included in your resume or LinkedIn profile to showcase your skills

- Type of Training: Video Course – Self Paced Learning

Content

MODULE 1: Apache Spark Training Essentials

| Courses | No. of Hours | Certificates |
|---|---|---|
| Learning spark programming | 6h 4m | ✔ |
| Apache Spark - Beginners | 1h 38m | ✔ |

MODULE 2: Projects based Learning

| Courses | No. of Hours | Certificates |
|---|---|---|
| Apache Spark - Advanced | 5h 49m | ✔ |

Description

Spark is an open-source framework, first developed in 2009, that many organizations use to process large data sources. It serves as a friendly platform for machine learning tasks, interactive queries, and large-scale SQL. Apache Spark has become one of the primary big data frameworks and has largely overtaken the older MapReduce model. Spark helps data scientists with analysis, transformation, and querying. From its inception, Spark has run most tasks in memory, which makes it fast. It is also used in data integration to reduce cost and time. Many leading companies, such as IBM and Huawei, along with the major Hadoop vendors, already back Apache Spark.
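
The map-and-reduce style of processing mentioned above can be sketched in plain Python, with no Spark installation required. This is only an illustration under stated assumptions: `word_count` and the sample lines are hypothetical, and the comments name the Spark-style steps (`flatMap`, `map`, `reduceByKey`) that each stage loosely imitates. A real Spark job would distribute these stages across a cluster rather than run them in a single process.

```python
from collections import defaultdict
from functools import reduce
from operator import add


def word_count(lines):
    """Count words with a map -> group by key -> reduce pipeline, all in memory.

    Hypothetical helper for illustration; it is not part of any Spark API.
    """
    # Like "flatMap": split every line into lowercase words.
    words = (word for line in lines for word in line.lower().split())
    # Like "map": pair each word with a count of 1.
    pairs = ((word, 1) for word in words)
    # Like the shuffle step: group the counts by word.
    grouped = defaultdict(list)
    for word, one in pairs:
        grouped[word].append(one)
    # Like "reduceByKey": sum the counts for each word.
    return {word: reduce(add, counts) for word, counts in grouped.items()}


if __name__ == "__main__":
    # Assumed sample input, chosen only to exercise the pipeline.
    sample = ["Spark processes data in memory", "Spark runs on a cluster"]
    print(word_count(sample))
```

Keeping the intermediate pairs in memory, instead of writing them to disk between stages, is the basic idea behind the speed advantage described above.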

Spark is a leading analytics engine for large-scale data processing. To build an Apache Spark project, the most important component is Spark SQL, the SQL engine that processes structured data. It helps optimize the data processing workflow. To handle machine learning, Spark has its own library, MLlib. Spark uses cluster computing for its analytical workloads and reads from distributed storage. For cluster management, Spark can run on YARN. Apache Spark is known for processing data in memory. For some in-memory workloads, it is often cited as up to 100 times faster than Hadoop MapReduce. Before starting an application, you need to choose a language. Spark itself is written in Scala, and it offers APIs in Scala, Java, Python, and R.

Sample Certificate

Requirements

- Basic understanding of the Java programming language or Python. Some familiarity with SQL and knowledge of MapReduce are helpful. You should have exposure to the Linux operating system. Those with a Scala programming or data warehouse background who wish to get into the big data domain can take this Apache Spark Training Course. Hands-on computer experience on Windows or Linux and background knowledge of big data are also useful.

Target Audience

- Big data developers, data scientists, analysts, data engineers, and software engineers. Professionals interested in a career in the field of big data, and academic professors who focus their research on Apache Spark. It is also useful for ETL developers and analytics professionals, big data architects, and Business Intelligence professionals who want to develop their careers.

Course Ratings

Training 5 or more people?

Get your team access to 5,000+ top courses, learning paths, and mock tests, anytime, anywhere.

Drop an email at: info@educba.com

Good way to start learning the very basics of SPARK architecture and programming. People who don't have programming experience should first attend the SCALA tutorial before this tutorial. The diagrammatic explanation was very clear, making it easier to understand how the processes get implemented in SPARK.

Sukhasis Basu Roy