Updated July 6, 2023

Introduction to Dataset Normalization

Data normalization rescales one or more attributes to the range 0 to 1. After normalization, the largest value of each attribute is 1 and the smallest value is 0. We can normalize all attributes of a dataset by applying a normalization filter. In machine learning, normalization is needed because attributes are often measured on different scales. For example, one attribute may be recorded in kilograms and another in grams, so we normalize the dataset to bring its attributes onto a uniform scale.

What is Dataset Normalization?

Normalization is a technique often applied as part of data preparation for machine learning. The goal of normalization is to change the values of numeric columns in the dataset to a common scale, without distorting differences in the ranges of values. Not every dataset needs normalization for machine learning. It is required only when features have different ranges.

Normalization uses the following techniques:

1. Scaling

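Scaling means converting floating-point feature values from their natural range (for example, 100 to 900) into a standard range, usually 0 to 1 (or sometimes -1 to +1).

We can use the following formula for scaling, where $x_{\min}$ and $x_{\max}$ are the smallest and largest values of the feature:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$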

Scaling to a range is a good choice when both of the following conditions are met:

- You know the approximate upper and lower bounds on your data, with few or no outliers.

- Your data is approximately uniformly distributed across that range.

A good example is age. Most age values fall between 0 and 90, and every part of the range has a substantial number of people. In contrast, you would not use scaling on income, because only a few people have very high incomes. The upper bound of the linear scale for income would be very high, and most people would be squeezed into a small part of the scale.
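The scaling described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a library API: the helper name `min_max_scale`, its parameters, and the sample ages are assumptions made for this example.

```python
import numpy as np


def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values to [new_min, new_max] (hypothetical helper)."""
    values = np.asarray(values, dtype=float)
    lo, hi = values.min(), values.max()
    if hi == lo:
        # All values are identical, so map everything to the lower bound.
        return np.full_like(values, new_min)
    return new_min + (values - lo) * (new_max - new_min) / (hi - lo)


# Illustrative ages spread across the 0 to 90 range
ages = [0, 18, 45, 90]
scaled_ages = min_max_scale(ages)
print(scaled_ages)  # values 0, 0.2, 0.5 and 1
```

Passing `new_min=-1.0` gives the -1 to +1 variant of the same scaling.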

2. Clipping

If your dataset contains extreme outliers, you might try feature clipping, which caps all feature values above (or below) a certain value at a fixed value. For example, you could clip all temperature values above 60 to be exactly 60. You may apply feature clipping before or after other normalizations.
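As a small sketch of the temperature example, NumPy's `np.clip` can cap values at an upper bound. The temperature readings below are made up for illustration.

```python
import numpy as np

# Illustrative temperature readings, two of them above the cap of 60
temperatures = np.array([12.0, 35.5, 61.2, 75.0, 58.9])

# Cap values above 60 at exactly 60; None means no lower bound is applied
clipped = np.clip(temperatures, None, 60.0)
print(clipped)
```

Values at or below the cap are left unchanged, so only the two outliers are affected.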

3. Log Scaling

Log scaling is helpful when a handful of your values have many points, while most other values have few points. This data distribution is known as a power law distribution. Movie ratings are a good example.

For log scaling, we can use the following formula:

$$x' = \log(x)$$
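A minimal sketch of log scaling, taking the natural logarithm of each value. The counts below are invented to mimic a power law, where a few items have far more points than the rest.

```python
import numpy as np

# Illustrative rating counts per movie: most are small, one is huge
rating_counts = np.array([1, 10, 100, 1000, 100000], dtype=float)

# x' = log(x); the input must be strictly positive
log_scaled = np.log(rating_counts)
print(log_scaled)  # spans roughly 0 to 11.5 instead of 1 to 100000
```

If the data can contain zeros, `np.log1p`, which computes log(1 + x), is a common alternative.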

4. Z-Score

Z-score is a variation of scaling that represents the number of standard deviations away from the mean. You would use a z-score to ensure your feature distributions have mean = 0 and std = 1. It is useful when there are a few outliers, but not so extreme that you need clipping.

For a Z-score, we can use the following formula, where $\mu$ is the mean and $\sigma$ is the standard deviation of the feature:

$$x' = \frac{x - \mu}{\sigma}$$
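The z-score can be computed directly with NumPy, as in this short sketch. The sample values are illustrative, and the population standard deviation (NumPy's default) is assumed.

```python
import numpy as np

values = np.array([2, 3, 5, 6, 7, 4, 8, 7, 6], dtype=float)

# Subtract the mean, then divide by the standard deviation
z_scores = (values - values.mean()) / values.std()

print(z_scores.mean())  # approximately 0
print(z_scores.std())   # approximately 1
```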

Why Use Dataset Normalization?

Let’s see why we use dataset normalization in machine learning:

- Normalization is a good technique to use when you do not know the distribution of your data or when you know the distribution is not Gaussian (a bell curve).

- Normalization is useful when your data has varying scales and the algorithm you are using does not make assumptions about the distribution of your data, such as k-nearest neighbors and artificial neural networks.

Examples of Dataset Normalization

Given below are the examples mentioned:

Example #1

Code:

from sklearn import preprocessing

import numpy as npvalue

value = npvalue.array([2,3,5,6,7,4,8,7,6])

n_array = preprocessing.normalize([value])

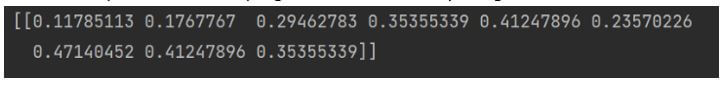

print(n_array)Explanation:

- By using the above code we try to implement the normalization in the dataset, here first we import the sklearn and preprocessing, here we also import the NumPy for the dataset as shown.

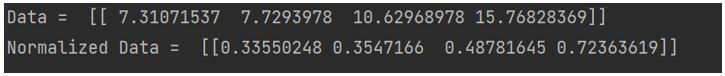

- The final output of the above program we illustrated by using screenshots as follows.

Output:

Example #2

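Code:

from sklearn import preprocessing

import numpy as np

# Generate one row of four random values in the range 0 to 20

value = np.random.random((1, 4))

value = value * 20

print("Data = ", value)

# Rescale the row to unit L2 norm (the default for normalize)

normalized = preprocessing.normalize(value)

print("Normalized Data = ", normalized)

Explanation:

- The code generates one row of four random values, prints it, normalizes it, and prints the normalized row.

Output:

The printed values differ on every run because the input is random, but the normalized row always has unit L2 norm.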
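Note that `preprocessing.normalize` rescales each row to unit length rather than to the 0 to 1 range. The following sketch contrasts it with `MinMaxScaler` on the data from Example #1; the variable names are chosen for this illustration only.

```python
import numpy as np
from sklearn import preprocessing

value = np.array([[2, 3, 5, 6, 7, 4, 8, 7, 6]], dtype=float)

# normalize() works per row and uses the L2 norm by default
unit_norm = preprocessing.normalize(value)
row_length = np.linalg.norm(unit_norm, axis=1)
print(row_length)  # each row now has length 1

# MinMaxScaler works per column, so treat the values as one feature column
min_max = preprocessing.MinMaxScaler().fit_transform(value.T).ravel()
print(min_max.min(), min_max.max())  # smallest value maps to 0, largest to 1
```

Which of the two is appropriate depends on whether samples (rows) or features (columns) need to be brought onto a common scale.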

Normalization vs Standardization

Given below are the basic differences between normalization and standardization:

| Normalization | Standardization |
| --- | --- |
| Uses the minimum and maximum values for scaling. | Uses the mean and standard deviation for scaling. |
| Used when the features of the dataset are on different scales. | Used when we need to ensure a zero mean and unit standard deviation. |
| Scales values to the range 0 to 1 or -1 to 1. | Is not bounded to a specific range. |
| Is strongly affected by outliers. | Is less affected by outliers. |
| Useful when we don’t know the actual distribution. | Useful when the distribution is approximately Gaussian. |
| Is also called scaling. | Is also called Z-score normalization. |

Features of Dataset Normalization

Given below are the different features of dataset normalization:

- Pipeline: Scaling can leak information from the test data into the training data if the scaler is fitted before the train-test split. To avoid this, fit the scaler on the training data only and apply the same fitted transformation to the test data. A pipeline does this automatically within each fold of cross-validation, and sklearn makes it easy to build one.

- Scaling: Rescaling feature values to a common range is the main feature of dataset normalization.

- Persistence: To apply the same normalization to a new dataset later, we can save the fitted scaler with pickle or joblib.
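The pipeline and persistence points above can be sketched together as follows. This is one possible setup, not the only one: the synthetic data, the choice of `StandardScaler` with a k-nearest neighbors classifier, and the file name `scaled_knn.joblib` are all assumptions made for illustration.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data (illustrative only): two features on very different scales
rng = np.random.default_rng(0)
X = np.column_stack([rng.normal(70, 15, 200), rng.normal(70000, 15000, 200)])
y = (X[:, 0] > 70).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# The scaler is refitted on the training part of every fold, so no
# statistics from the held-out part leak into training.
model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
scores = cross_val_score(model, X_train, y_train, cv=5)
print("Cross-validation accuracy per fold:", scores)

# Fit on the training data only, then persist scaler and model together
model.fit(X_train, y_train)
path = os.path.join(tempfile.mkdtemp(), "scaled_knn.joblib")  # hypothetical file name
joblib.dump(model, path)

# The restored pipeline applies the same fitted scaling to new data
restored = joblib.load(path)
same_predictions = np.array_equal(model.predict(X_test), restored.predict(X_test))
print("Restored pipeline matches:", same_predictions)
```

Saving the whole pipeline rather than the scaler alone keeps the scaling parameters and the model in sync.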

Conclusion

In this article, we have learned the essential idea of dataset normalization and seen how it is implemented in code. We also saw how and when to use dataset normalization.
