Updated April 12, 2023

Introduction to Docker Storage Drivers

A Docker storage driver, also known as a graph driver, provides the mechanism Docker uses to store and manage images and containers on the Docker host. Docker has a pluggable architecture that supports several storage drivers, and the storage driver is what allows our workloads to write to the container’s writable layer. Because the drivers behave differently, we need to choose the one best suited to our workloads, and to do that it is important to understand how Docker builds and stores images and how containers use those images. The default storage driver is overlay2, which is supported on Docker Engine – Community and on Docker EE 17.06.02-ee5 and later; however, we can change it as required.

Different Storage Drivers of Docker

Docker supports the following storage drivers:

- overlay2

- aufs

- devicemapper

- btrfs

- zfs

- vfs
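Several of these drivers depend on kernel support. As a quick first check, the shell sketch below reads /proc/filesystems and reports whether the filesystems behind overlay2, aufs, btrfs, and zfs are currently registered with the running kernel. The function name kernel_fs_support is our own, not part of Docker. A filesystem built as a module that has not been loaded yet will show as "not listed", and devicemapper and vfs are not kernel filesystems, so they are not checked.

```shell
# Hypothetical helper (not part of Docker): report which of the filesystems
# used by Docker's union/copy-on-write drivers the kernel has registered.
# The optional path argument exists only so the function can be tested.
kernel_fs_support() {
  local fs_list="${1:-/proc/filesystems}" fs
  for fs in overlay aufs btrfs zfs; do
    # Lines look like "nodev<TAB>overlay" or "<TAB>btrfs"; match whole words
    if grep -qw "$fs" "$fs_list"; then
      echo "$fs: available"
    else
      echo "$fs: not listed"
    fi
  done
}
```

Running kernel_fs_support with no arguments checks the running kernel.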

1. overlay2

- It is currently the default storage driver.

- It is supported on Docker Engine – Community, and on Docker EE 17.06.02-ee5 and newer.

- It is newer and more stable than the original ‘overlay’ driver.

- The supported backing filesystems for the overlay2 and overlay drivers are ext4 and xfs (xfs must be formatted with ftype=1).

- It requires Linux kernel version 4.0 or higher, or version 3.10.0-514 or higher on RHEL or CentOS. On older kernels, the only option is the legacy overlay driver, which is not recommended.

- Both overlay2 and overlay are built on OverlayFS, a modern union filesystem in the Linux kernel. OverlayFS is similar to aufs, but it is faster and has a simpler implementation.
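The kernel requirement above can be checked from a shell before switching drivers. The sketch below compares a kernel release string (by default the output of ‘uname -r’) against the two minimums. The function name overlay2_kernel_ok is hypothetical, and it assumes RHEL/CentOS 7 kernels follow the 3.10.0-<build>.el7 naming scheme.

```shell
# Hypothetical helper: succeed if a kernel release meets the overlay2
# minimums (4.0+, or RHEL/CentOS 3.10.0-514+). Defaults to `uname -r`.
# Assumption: RHEL/CentOS 7 kernels are named 3.10.0-<build>.el7...
overlay2_kernel_ok() {
  local release="${1:-$(uname -r)}" major build
  major="${release%%.*}"            # "5.15.0-91-generic" -> "5"
  if [ "$major" -ge 4 ]; then
    return 0
  fi
  case "$release" in
    3.10.0-*.el7*)
      build="${release#3.10.0-}"    # "1160.el7.x86_64"
      build="${build%%.*}"          # "1160"
      [ "$build" -ge 514 ] && return 0
      ;;
  esac
  return 1
}
```

For example, overlay2_kernel_ok && echo "kernel is new enough for overlay2" checks the running kernel. This only covers the kernel version; the backing filesystem requirement still applies.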

2. aufs

- AUFS is a union filesystem: it presents multiple directories, called branches in AUFS, as a single directory on a single Linux host. In Docker, these directories are known as layers.

- Before overlay2, it was the default storage driver for managing images and layers on Ubuntu, and on Debian versions before Stretch. It was the default storage driver on Ubuntu 14.04 and earlier.

- It is a good fit for Platform as a Service (PaaS) deployments, where container density matters, because AUFS shares images efficiently between multiple running containers.

- Container startup is fast and disk usage is low, because running containers share the same image layers.

- It uses memory efficiently but performs poorly for write-heavy workloads. Write latency is high because a file may live in any of several layers: the first time a file is modified, AUFS must locate it and copy it up to the container’s top writable layer before the write can proceed.

- For better performance, use solid-state drives, which read and write faster than spinning disks, and use volumes for write-heavy workloads.

- The supported backing filesystems for AUFS are xfs and ext4.
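Following the advice above to use volumes for write-heavy workloads, the sketch below creates a named volume and mounts it into a new container, so that writes under the mount point go to the volume instead of being copied up into the container’s writable layer. run_with_volume is a hypothetical helper, and the container, image, volume, and path names are examples only.

```shell
# Hypothetical helper: start a container whose write-heavy directory lives
# on a named volume, bypassing the storage driver's writable layer there.
# Arguments: container name, image, volume name, mount path in the container.
run_with_volume() {
  local name="$1" image="$2" volume="$3" target="$4"
  # Creating a volume that already exists is harmless
  docker volume create "$volume" >/dev/null
  docker run -d --name "$name" \
    --mount "type=volume,source=${volume},target=${target}" \
    "$image"
}
```

For example, run_with_volume cache redis redis-data /data starts a Redis container whose /data directory is stored on the redis-data volume.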

3. devicemapper

- It is a block storage driver, which means it stores data in blocks rather than as files.

- It is suited to write-heavy workloads because it works at the block level instead of the file level.

- Device Mapper is a kernel-based framework, and Docker’s devicemapper storage driver uses its capabilities, such as thin provisioning and snapshotting, to manage images and containers.

- It was the default storage driver for CentOS 7 and earlier.

- It supports two modes:

loop-lvm mode:

- loop-lvm uses the kernel’s ‘loopback’ mechanism to present files on the local disk as if they were an actual physical disk or block device. This is necessary because devicemapper works only with block devices.

- It is suitable for testing only, because its performance is poor.

- It is easy to set up because it does not require an additional device.

direct-lvm mode:

- It stores data on a separate block device, so additional devices must be attached to the host.

- It is production-ready and provides better performance.

- Enabling direct-lvm requires a more complex setup.

- It is configured in the daemon.json file, which offers multiple options that can be set as required.

- The lvm2 and device-mapper-persistent-data packages must be installed to use devicemapper.

- It uses direct-lvm as a backing filesystem.
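As a sketch of the daemon.json configuration for direct-lvm mode, the function below writes a file asking Docker to set up direct-lvm on a given block device itself. The dm.* option names follow Docker’s devicemapper documentation, but the device /dev/xvdf and the percentage values are illustrative assumptions, and write_direct_lvm_config is a hypothetical helper. Because the file is overwritten, it assumes daemon.json holds no other settings we need to keep.

```shell
# Hypothetical helper: write a daemon.json that lets Docker configure
# direct-lvm on DEVICE. Overwrites the target file (default shown below).
# The device and threshold values are examples, not recommendations.
write_direct_lvm_config() {
  local device="$1" target="${2:-/etc/docker/daemon.json}"
  cat > "$target" <<EOF
{
  "storage-driver": "devicemapper",
  "storage-opts": [
    "dm.directlvm_device=${device}",
    "dm.thinp_percent=95",
    "dm.thinp_metapercent=1",
    "dm.thinp_autoextend_threshold=80",
    "dm.thinp_autoextend_percent=20",
    "dm.directlvm_device_force=false"
  ]
}
EOF
}
```

On CentOS, the required packages can be installed with ‘sudo yum install -y device-mapper-persistent-data lvm2’. We would then stop Docker, run write_direct_lvm_config /dev/xvdf as root, and start Docker again. The device should be unused, since Docker builds its LVM thin pool on it.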

4. btrfs

- Btrfs is also part of the mainline Linux kernel.

- For Docker EE and CS Engine, the btrfs driver is supported only on SLES (SUSE Linux Enterprise Server).

- For Docker Engine – Community, it is recommended only on Ubuntu or Debian.

- The btrfs driver is backed by the Btrfs filesystem, a next-generation copy-on-write filesystem.

- Btrfs has many features, for example block-level operations, thin provisioning, and copy-on-write snapshots, and Docker’s btrfs storage driver uses these features to manage images and containers.

- It requires dedicated block storage formatted with the Btrfs filesystem. On SLES a separate block device is not required, because SLES is formatted with Btrfs by default; however, an additional block device is still recommended for better performance.

- Our kernel must support btrfs.
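Because the btrfs driver keeps its data under /var/lib/docker, that directory must itself sit on a Btrfs filesystem before we switch drivers. The sketch below checks this with GNU coreutils ‘stat -f’, which reports the type of the filesystem holding a path. Both function names are hypothetical.

```shell
# Hypothetical helpers: print the filesystem type holding a directory, and
# fail unless Docker's data directory is on Btrfs.
backing_fs_type() {
  # GNU coreutils: -f reports on the filesystem, %T prints its type name
  stat -f -c %T "${1:-/var/lib/docker}"
}

require_btrfs_root() {
  local dir="${1:-/var/lib/docker}" fstype
  fstype="$(backing_fs_type "$dir")" || return 1
  if [ "$fstype" = "btrfs" ]; then
    echo "$dir is on btrfs"
  else
    echo "$dir is on $fstype, not btrfs" >&2
    return 1
  fi
}
```

After formatting the dedicated device with mkfs.btrfs and mounting it at /var/lib/docker (mount -t btrfs), require_btrfs_root should succeed, and only then would we set "storage-driver": "btrfs" in daemon.json.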

5. zfs

- It is open source under the CDDL (Common Development and Distribution License) and was created by Sun Microsystems (now part of Oracle Corporation).

- It is also a next-generation filesystem, with features such as volume management, snapshots, checksumming, compression, deduplication, and replication.

- It is not part of the mainline Linux kernel because of licensing incompatibilities between the CDDL and GPL.

- It is not recommended to use this Docker storage driver for production workloads without substantial experience with ZFS.

- It is only supported on Docker CE with Ubuntu 14.04 or higher.

- It is not supported on Docker EE or CS-engine.
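The zfs driver expects /var/lib/docker to be a ZFS filesystem. Below is a minimal sketch of creating a pool mounted there. It assumes the ZFS utilities (the zfsutils-linux package on Ubuntu) are installed, that it runs as root, and that the devices passed in are spare disks whose contents may be destroyed. create_docker_zpool and the pool and device names are hypothetical.

```shell
# Hypothetical helper: create a ZFS pool mounted at /var/lib/docker.
# Arguments: pool name, then one or more spare block devices.
# WARNING: -f forces creation and destroys data on the given devices.
create_docker_zpool() {
  if [ "$#" -lt 2 ]; then
    echo "usage: create_docker_zpool POOL DEVICE..." >&2
    return 2
  fi
  local pool="$1"
  shift
  # -m sets the pool's mountpoint to Docker's data directory
  zpool create -f -m /var/lib/docker "$pool" "$@"
}
```

For example, create_docker_zpool zpool-docker /dev/xvdf, followed by ‘zfs list’ to confirm the pool. Docker should be stopped and the existing contents of /var/lib/docker moved aside first, since the pool is mounted over that directory; then we would set "storage-driver": "zfs" in daemon.json and start Docker.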

6. vfs

- It is only for testing purposes and not recommended for production use.

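- Its performance is poor because it does not use copy-on-write: each new layer is created as a complete copy of the layer beneath it.

- It works on any backing filesystem, so it can be used where no copy-on-write filesystem is available.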
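To see why vfs uses so much disk space, we can look at how much each layer occupies. The sketch below assumes the default data root /var/lib/docker and that vfs keeps one directory per layer under vfs/dir/ inside it; vfs_layer_usage is a hypothetical helper.

```shell
# Hypothetical helper: show the disk usage of each vfs layer directory.
# Because vfs copies every layer in full, these sizes grow quickly.
# Argument: Docker data root (default /var/lib/docker).
vfs_layer_usage() {
  local root="${1:-/var/lib/docker}"
  du -sh "$root"/vfs/dir/*
}
```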

Examples of Docker Storage Drivers

Let’s look at some commands for checking and configuring Docker’s storage driver.

Example #1

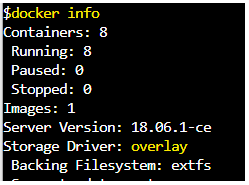

We use the ‘docker info’ command to check which storage driver Docker is using:

$ docker info

Explanation: In the above snapshot, we can see that Docker is using the ‘overlay’ storage driver.
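When we only need the driver name rather than the full ‘docker info’ report, the --format option with a Go template can extract it. The sketch below wraps that call; current_storage_driver is a hypothetical helper name, while .Driver is the field of the ‘docker info’ output that holds the storage driver.

```shell
# Hypothetical helper: print only the active storage driver.
# --format takes a Go template; .Driver is the storage driver field.
current_storage_driver() {
  docker info --format '{{.Driver}}'
}
```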

Example #2

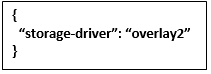

Now we want to configure Docker to use ‘overlay2’ as its default storage driver. We can do that by editing the ‘daemon.json’ file located at /etc/docker/daemon.json, as below:

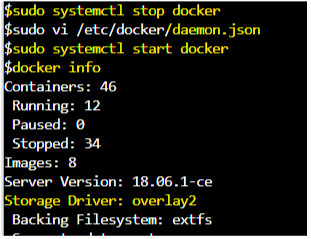

Step 1: First, stop the Docker service using the command below:

$ sudo systemctl stop docker

Step 2: Add the following configuration to the ‘daemon.json’ file, creating the file if it does not exist:

{ "storage-driver": "overlay2" }

Step 3: Start the Docker service again and confirm the change:

$ sudo systemctl start docker

$ docker info
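The three steps above can be combined into one shell function, shown here as a sketch. set_storage_driver is a hypothetical name; it must run as root, and it overwrites daemon.json, so it assumes the file holds no other settings we want to keep. After a driver change, images and containers created under the previous driver are no longer accessible until we switch back.

```shell
# Hypothetical helper: switch Docker's storage driver (run as root).
# Assumption: daemon.json holds no other settings, since it is overwritten.
set_storage_driver() {
  local driver="$1" config="${2:-/etc/docker/daemon.json}"
  # Step 1: stop the daemon before changing its storage configuration
  systemctl stop docker || return 1
  # Step 2: write a minimal daemon.json naming the new driver
  mkdir -p "$(dirname "$config")"
  printf '{\n  "storage-driver": "%s"\n}\n' "$driver" > "$config"
  # Step 3: start the daemon again and print the driver it is now using
  systemctl start docker || return 1
  docker info --format '{{.Driver}}'
}
```

For example, set_storage_driver overlay2 should print overlay2 once the daemon is back up.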

Example #3

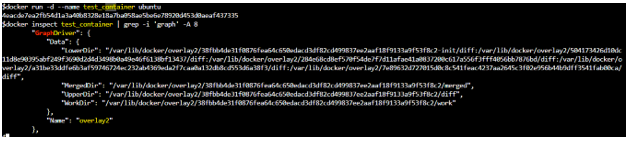

We can run a container and check which driver it uses:

$ docker run -d --name test_container ubuntu

$ docker inspect test_container | grep -i 'graph' -A 8

Explanation: In the above example, we can see that the newly created container is using the ‘overlay2’ graph driver (storage driver).
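Instead of searching the full ‘docker inspect’ output with grep, we can ask for the driver name directly. In the sketch below, container_storage_driver is a hypothetical helper, and .GraphDriver.Name is the field that the grep above locates. ‘docker inspect’ also works on stopped containers, which matters here because the ubuntu container above exits as soon as it starts.

```shell
# Hypothetical helper: print the storage (graph) driver recorded for a
# container; works for stopped containers as well as running ones.
container_storage_driver() {
  docker inspect --format '{{.GraphDriver.Name}}' "$1"
}
```

For example, container_storage_driver test_container.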

Conclusion

Docker supports several different storage drivers. We have to understand how each driver works and choose the one best suited to our workloads. When selecting a storage driver, we consider three high-level factors: overall performance, shared storage system, and stability.
