Updated March 21, 2023

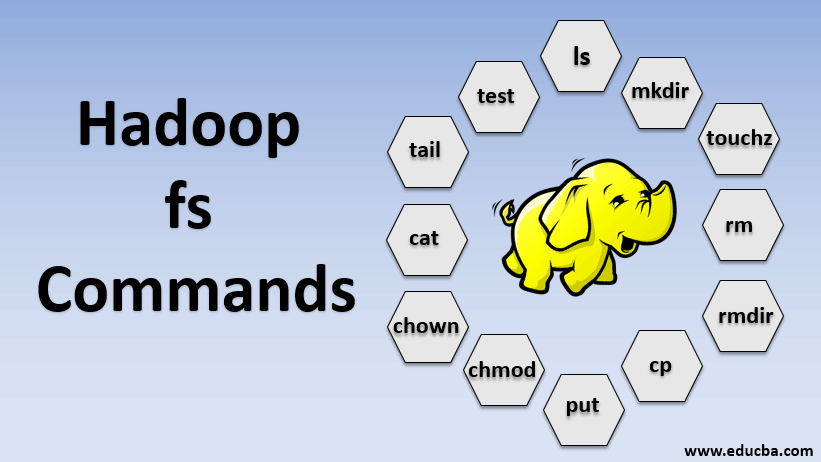

Introduction to Hadoop fs Commands

Hadoop fs commands are the command-line utilities for working with files in the Hadoop file system. They closely resemble Unix shell commands and are widely used to manage data and related files. Every command is prefixed with ‘hadoop fs’. Commonly used commands include listing a directory to view its files and subdirectories, creating directories in HDFS, creating empty files, removing files and directories from HDFS, copying files from an edge node to HDFS, and copying files from HDFS to an edge node.

Commands of Hadoop fs

Now, let’s learn how to use Hadoop fs commands.

We will start with the basics. Just type these commands in PuTTY or any console you are comfortable with.

1. hadoop fs -ls

For a directory, it returns the list of files and subdirectories it contains; for a file, it returns the file's details, such as permissions, owner, size, and modification time.

hadoop fs -lsr: this recursively lists the directories and files under a specific folder. It is deprecated in favor of hadoop fs -ls -R.

Example:

hadoop fs -ls / or hadoop fs -lsr /

- -d: This is used to list the directories as plain files.

- -h: This is used to print file sizes in a human-readable format rather than as a raw number of bytes.

- -R: This is used to recursively list the contents of directories.
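The flags above can be combined in one call. As a minimal sketch, the helper below (the name list_tree is hypothetical and not part of Hadoop) lists everything under a path recursively with human-readable sizes:

```shell
# Hypothetical helper (not part of Hadoop): recursive listing with readable sizes.
list_tree() {
  # -R walks into subdirectories, -h prints sizes in human-readable units
  hadoop fs -ls -R -h "$1"
}
```

Calling list_tree /user/datahub1 would run hadoop fs -ls -R -h /user/datahub1.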

2. hadoop fs -mkdir

This command takes a path as an argument and creates the directory in HDFS.

Example:

hadoop fs -mkdir /user/datahub1/data

3. hadoop fs -touchz

It creates an empty, zero-length file.

Example:

hadoop fs -touchz URI

4. hadoop fs -rm

It deletes the files specified as arguments. The -r option is required to delete a directory and its contents. If the -skipTrash option is specified, the trash is bypassed and the file is deleted immediately.

Example:

hadoop fs -rm -r /user/test1/abc.text
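The difference between a normal delete and one that bypasses the trash can be sketched as a small wrapper. The function name remove_path and its "now" argument are assumptions made for illustration only:

```shell
# Hypothetical helper: delete an HDFS path recursively.
# Pass "now" as the second argument to bypass the trash.
remove_path() {
  if [ "${2:-}" = "now" ]; then
    # -skipTrash deletes immediately instead of moving the path to the trash
    hadoop fs -rm -r -skipTrash "$1"
  else
    hadoop fs -rm -r "$1"
  fi
}
```

For example, remove_path /user/test1 would delete the directory through the trash (if trash is enabled on the cluster), while remove_path /user/test1 now would delete it immediately.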

5. hadoop fs -rmdir

It removes a directory, but only if the directory is empty. To delete a directory together with its contents, use hadoop fs -rm -r instead.

6. hadoop fs -cp

It copies a file from one location to another.

Example:

hadoop fs -cp /user/data/abc.csv /user/datahub

7. hadoop fs -copyFromLocal

It copies a file from the local file system of the edge node to HDFS.

8. hadoop fs -put

It copies a file from the edge node to HDFS. It is similar to the previous command, but put can also read input from standard input (stdin) and write it to HDFS.

Example:

hadoop fs -put abc.csv /user/data

Note:

- hadoop fs -put -p: This flag preserves the access and modification times, ownership, and mode.

- hadoop fs -put -f: This flag overwrites the destination if it already exists.
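Reading from stdin is done by passing a single dash as the source. The sketch below wraps that in a helper; the function name save_stdin_to_hdfs is hypothetical:

```shell
# Hypothetical helper: write whatever arrives on stdin to an HDFS file.
save_stdin_to_hdfs() {
  # The "-" source tells put to read from standard input
  hadoop fs -put - "$1"
}
```

A call such as echo "id,name" | save_stdin_to_hdfs /user/data/header.csv would create the file from the piped text; the file name here is only an illustration.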

9. hadoop fs -moveFromLocal

It is similar to -copyFromLocal, except that the source file is deleted from the local edge node after it is copied to HDFS.

Example:

hadoop fs -moveFromLocal abc.text /user/data/acb

10. hadoop fs -copyToLocal

It copies a file from HDFS to the local file system of the edge node.

Example:

hadoop fs -copyToLocal abc.text /localpath

11. hadoop fs -chmod

This command changes the permissions of a file or directory.

Example:

hadoop fs -chmod [-R] MODE PATH

12. hadoop fs -chown

This command changes the owner, and optionally the group, of a file or directory.

Example:

hadoop fs -chown [-R] [OWNER][:[GROUP]] PATH

13. hadoop fs -cat

It prints the content of an HDFS file on the terminal.

Example:

hadoop fs -cat /user/data/abc.csv

14. hadoop fs -tail

It displays the last kilobyte of the HDFS file on stdout.

Example:

hadoop fs -tail /in/xyzfile

15. hadoop fs -test

This command runs test operations on an HDFS path and returns 0 if the test is true.

- -e: checks to see if the path exists.

- -z: checks to see if the file is zero-length

- -d/-f: checks to see if the path is directory/file respectively

Example:

hadoop fs -test -[defz] /user/test/test1.text
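Because the result is reported through the exit code, -test is mostly useful inside scripts. As a minimal sketch (the function name ensure_dir is hypothetical), the helper below creates a directory only when it is missing:

```shell
# Hypothetical helper: create an HDFS directory only when it is missing.
ensure_dir() {
  # -test -d exits with 0 when the path exists and is a directory
  if hadoop fs -test -d "$1"; then
    echo "already present: $1"
  else
    # -p also creates any missing parent directories
    hadoop fs -mkdir -p "$1" && echo "created: $1"
  fi
}
```

The same pattern works with -e, -f, or -z in place of -d.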

16. hadoop fs -du

It displays the sizes of the files and directories contained in the given directory, or the length of the file if the path is a file.

17. hadoop fs -df

It displays the capacity, used space, and free space of the file system.

18. hadoop fs -checksum

It returns the checksum information of a file.

19. hadoop fs -getfacl

It displays the access control lists (ACLs) of the given file or directory.

20. hadoop fs -count

It counts the number of directories, files, and bytes under the path that matches the specified file pattern.

21. hadoop fs -setrep

It changes the replication factor of a file. If the path is a directory, the command recursively changes the replication factor of all the files under that directory.

Example:

hadoop fs -setrep -R <numReplicas> /user/datahub: the -R flag is accepted for backward compatibility and has no effect.

hadoop fs -setrep -w <numReplicas> /user/datahub: the -w flag waits for the replication to complete.
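The command also needs the desired number of replicas before the path. A minimal sketch, with a hypothetical helper name set_replication:

```shell
# Hypothetical helper: set the replication factor and wait until it is reached.
set_replication() {
  # $1 = desired number of replicas, $2 = HDFS file or directory
  hadoop fs -setrep -w "$1" "$2"
}
```

For example, set_replication 2 /user/datahub would request two replicas for every file under /user/datahub. With -w the call can take a long time on large directories, so it may be reasonable to drop that flag in interactive use.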

22. hadoop fs -getmerge

It concatenates the files in an HDFS source directory into a single destination file on the local file system.

Example:

hadoop fs -getmerge /user/datahub <localdst>
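getmerge takes the HDFS source first and the local destination file second. The sketch below assumes a hypothetical helper name merge_to_local and also uses the optional -nl flag:

```shell
# Hypothetical helper: merge all files of an HDFS directory into one local file.
merge_to_local() {
  # $1 = HDFS source directory, $2 = local destination file
  # -nl adds a newline character at the end of each merged file
  hadoop fs -getmerge -nl "$1" "$2"
}
```

A call like merge_to_local /user/datahub merged.txt would write the combined content to merged.txt in the current local directory; the file name is only an illustration.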

23. hadoop fs -appendToFile

Appends a single source or multiple sources from the local file system to the destination.

Example:

hadoop fs -appendToFile xyz.log data.csv /in/appendfile

24. hadoop fs -stat

It prints the statistics about the file or directory.

Example:

hadoop fs -stat [format] <path>
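The format string is built from placeholders such as %n (name), %b (size in bytes), %r (replication factor), and %y (modification time). As a minimal sketch, with a hypothetical helper name describe_path:

```shell
# Hypothetical helper: print name, size in bytes, replication factor,
# and modification time of an HDFS path on one line.
describe_path() {
  hadoop fs -stat "%n %b %r %y" "$1"
}
```

For example, describe_path /user/data/abc.csv would print those four fields for the file used in the earlier examples.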

Conclusion

We have now gone through most of the commands needed to handle files and view the data inside them. You can now manage your files and ingest data into the Hadoop platform.
