Updated March 22, 2023

Introduction to Hadoop Schedulers

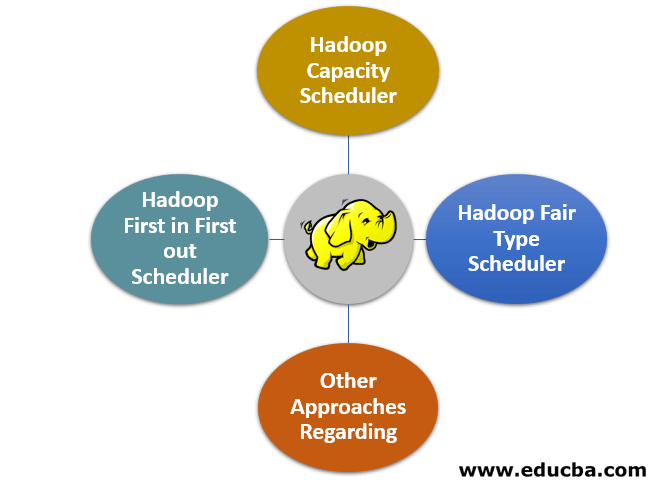

Hadoop is a general-purpose system for high-performance processing of data across a set of distributed nodes. Its schedulers decide which jobs run and when, which helps ensure optimum utilization of resources and gives jobs access to unused capacity. The schedulers available in Hadoop include the First In, First Out (FIFO) Scheduler, the Capacity Scheduler, and the Fair Scheduler.

Top 4 Hadoop Scheduler Types

There are several types of Hadoop schedulers which we often use:

1. Hadoop First in First out Scheduler

- As the name suggests, this is the oldest of the job schedulers and works on the first in, first out principle. The JobTracker pulls jobs from a work queue.

- The oldest job in that queue, i.e. the one submitted first, is the first to be executed.

- This approach is simpler than other scheduling techniques, but it gives no consideration to the priority or size of a job. Newer schedulers were developed to provide better scheduling capabilities that take both into account.
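The first in, first out behaviour described above can be sketched in a few lines of Python. This is only a toy model, not Hadoop's implementation: the `FifoScheduler` class and the job names are hypothetical and exist purely for illustration.

```python
from collections import deque


class FifoScheduler:
    """Toy FIFO work queue: the oldest submitted job always runs first."""

    def __init__(self):
        self._queue = deque()

    def submit(self, job_name):
        # New jobs join the back of the work queue.
        self._queue.append(job_name)

    def next_job(self):
        # The job at the front (the oldest one) is pulled first.
        return self._queue.popleft() if self._queue else None


scheduler = FifoScheduler()
for name in ["etl-nightly", "ad-hoc-query", "report"]:
    scheduler.submit(name)

order = [scheduler.next_job() for _ in range(3)]
print(order)
```

Note that the small `ad-hoc-query` job has to wait behind `etl-nightly` regardless of its size or urgency, which is the limitation the newer schedulers address.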

2. Hadoop Capacity Scheduler

- The Hadoop Capacity Scheduler resembles the FIFO approach, except that it also prioritizes jobs. It takes a slightly different approach to multi-user scheduling.

- It simulates a separate MapReduce cluster for every organization or user (in practice, a queue with a guaranteed share of capacity), and schedules the jobs within each one in FIFO order.
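As a rough illustration of the idea, the sketch below gives each queue a percentage of the cluster's slots and orders jobs inside a queue by priority, falling back to submission order for ties. The `CapacityQueue` class, the `guaranteed_slots` helper, and the queue names and percentages are all assumptions made for this example; the real scheduler is configured through Hadoop's own configuration files.

```python
import heapq
from itertools import count


class CapacityQueue:
    """Toy queue with a capacity share; FIFO within equal priorities."""

    def __init__(self, name, capacity_percent):
        self.name = name
        self.capacity_percent = capacity_percent
        self._heap = []
        self._arrival = count()  # tie-breaker that preserves FIFO order

    def submit(self, job_name, priority=0):
        # Higher priority pops first; equal priorities pop in arrival order.
        heapq.heappush(self._heap, (-priority, next(self._arrival), job_name))

    def next_job(self):
        return heapq.heappop(self._heap)[2] if self._heap else None


def guaranteed_slots(queues, total_slots):
    """Slots each queue is guaranteed, based on its capacity percentage."""
    return {q.name: total_slots * q.capacity_percent // 100 for q in queues}


analytics = CapacityQueue("analytics", 60)
research = CapacityQueue("research", 40)
slots = guaranteed_slots([analytics, research], total_slots=10)

analytics.submit("a1")
analytics.submit("a2", priority=5)
analytics.submit("a3")
order = [analytics.next_job() for _ in range(3)]
print(slots, order)
```

Each queue behaves like a small cluster of its own: `analytics` is guaranteed 6 of the 10 slots no matter how busy `research` is, and within the queue the high-priority job `a2` jumps ahead of the others.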

3. Hadoop Fair Scheduler

- When cluster capacity needs to be shared so that every user receives a reasonable share over time, we use the Hadoop Fair Scheduler. When only a single job is running, that job gets the entire cluster.

- As more jobs are submitted, the slots that free up are handed out so that each user gets a fair share of the cluster.

- If a pool has not received its fair share for a certain period of time, preemption comes into play: the scheduler kills tasks in pools running over capacity so that their slots can go to the pool running under capacity.

- The Fair Scheduler is shipped as a contrib module. To enable it, copy the fair scheduler JAR from Hadoop's `contrib/fairscheduler` directory into the `lib` directory, and set the task scheduler property `mapred.jobtracker.taskScheduler` to `org.apache.hadoop.mapred.FairScheduler`.
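To make the fair share and preemption ideas more concrete, here is a minimal Python sketch. It assumes a simple max-min split of slots among pools, capped at what each pool actually demands; the function names, pool names, and numbers are hypothetical, and the real Fair Scheduler also supports weights and minimum shares that are not modelled here.

```python
def fair_shares(demands, total_slots):
    """Max-min fair split of total_slots among pools, capped at each demand."""
    shares = {pool: 0 for pool in demands}
    active = {pool for pool, demand in demands.items() if demand > 0}
    remaining = total_slots
    while active and remaining > 0:
        per_pool = remaining // len(active)
        if per_pool == 0:
            break  # fewer slots left than pools still wanting more
        for pool in sorted(active):
            grant = min(per_pool, demands[pool] - shares[pool])
            shares[pool] += grant
            remaining -= grant
        # Pools whose demand is satisfied drop out; the rest split what is left.
        active = {pool for pool in active if shares[pool] < demands[pool]}
    return shares


def slots_to_preempt(running, shares):
    """Slots each pool holds beyond its fair share (preemption candidates)."""
    return {pool: running[pool] - shares[pool]
            for pool in running if running[pool] > shares[pool]}


# A single pool with enough demand receives the whole cluster.
solo = fair_shares({"alice": 20}, total_slots=12)

# With three pools, unused share from a small pool flows to the others.
shares = fair_shares({"alice": 2, "bob": 10, "carol": 10}, total_slots=12)

# bob grabbed 10 slots before the others arrived, so 5 are over his share.
over = slots_to_preempt({"alice": 0, "bob": 10, "carol": 2}, shares)
print(solo, shares, over)
```

In this toy model, the slots reported by `slots_to_preempt` are the ones a preempting scheduler would reclaim from the over-capacity pool and hand to the pools still below their share.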

4. Other Approaches Regarding Scheduler

- Hadoop can also provision virtual clusters, which reduces the need for dedicated physical clusters. This technique is known as HOD (Hadoop on Demand).

- HOD uses the Torque resource manager to allocate nodes according to the needs of the virtual cluster.

- Once the nodes are allocated, HOD automatically prepares the configuration files and then initializes the system on the nodes of the virtual cluster.

- After initialization, the HOD virtual cluster can be used in a relatively independent way. In a nutshell, HOD is a model for deploying Hadoop clusters within a cloud infrastructure. Because its nodes are shared less, it also provides greater security.

Importance of Using Hadoop Schedulers

- From the scheduler types above, it should be clear where the importance of Hadoop schedulers lies. If you run a large cluster with multiple clients and jobs of different types, priorities, and sizes, choosing the right scheduler becomes important.

- The right scheduler guarantees access to unused capacity and makes optimum use of resources by prioritizing jobs efficiently within the queues. The choice is comparatively easy: the fair scheduler is usually the right one when there is a lot of variation in the number and types of workloads running within a single organization.

- The fair scheduler can also distribute capacity non-uniformly across pools of jobs, in a simple and configurable way. It is useful when jobs are diverse as well, because it can provide faster response times for small jobs mixed in with larger ones, which supports more interactive use.

- The capacity scheduler is the better choice when you care more about queues than pools. It offers a configurable number of map and reduce slots, and each queue can be given a guaranteed share of the cluster's capacity.

Conclusion

In this post, we covered what Hadoop schedulers are, the main types, how they work, and why they matter. In the big data ecosystem, Hadoop schedulers are often not talked about, yet they are highly significant and should not be left as is without some thought. Hope you liked our article.
