Updated March 18, 2023

What is Hadoop Streaming?

Hadoop Streaming is a utility that comes with the Hadoop distribution. It lets users create and run MapReduce jobs with any executable or script as the mapper and/or the reducer, so big data analysis programs can be written in languages such as Java, Perl, Python, Scala, or Unix shell script. The word "streaming" refers to the way data is streamed through the scripts' standard input and standard output; the jobs themselves run as ordinary MapReduce jobs on the cluster.

Understanding

Hadoop Streaming is a Java utility provided with the Hadoop distribution and packaged as a JAR file. With it, we can create and run MapReduce jobs whose mapper and reducer functions are implemented as executable scripts. The scripts are passed to Hadoop Streaming as command-line options. The utility then creates a MapReduce job, submits it to the cluster, and monitors its progress until it completes.
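As an illustration, a mapper can be any executable that reads lines from standard input and writes lines to standard output. The following is a minimal sketch of a word-count mapper in Python; the word-count task, the file name you would save it under (for example a hypothetical `mapper.py`), and the `map_lines` helper are illustrative assumptions, not part of the original article.

```python
#!/usr/bin/env python3
"""Hypothetical word-count mapper for Hadoop Streaming (illustrative sketch)."""
import sys


def map_lines(lines):
    """Yield one 'word<TAB>1' line for every whitespace-separated word."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


if __name__ == "__main__":
    # Hadoop Streaming feeds input records to stdin, one per line,
    # and collects whatever the script prints to stdout.
    for out in map_lines(sys.stdin):
        print(out)
```

The tab between the word and the count matters: it is what Hadoop Streaming uses, by default, to separate the key from the value.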

How does it Work?

The scripts specified for the mapper and the reducer work as follows:

When an executable is specified for the mapper, each mapper task launches it as a separate process when the task is initialized. While the task runs, it converts its input into lines and feeds them to the standard input of the process. At the same time, the mapper collects the lines the process writes to its standard output and converts each line into a key-value pair; these pairs form the output of the mapper. By default, the key is the part of the line up to the first tab character, and the value is the rest of the line (excluding that tab). If a line contains no tab, the whole line is taken as the key and there is no value. The reducer works the same way: each reducer task runs its script as a separate process, feeds it key-value pairs as tab-separated lines on standard input, and converts the lines on its standard output back into key-value pairs. The separator and the number of key fields can be customized to fit business needs.
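The default key-value rule described above can be mirrored in a small Python function so it can be tried on sample lines. This is only a sketch: `split_key_value` is a hypothetical helper, not part of Hadoop, and `None` stands in for the missing value when a line has no tab.

```python
def split_key_value(line, separator="\t"):
    """Split one output line the way Hadoop Streaming does by default.

    The text before the first separator becomes the key and the rest
    (without that separator) becomes the value. A line with no separator
    becomes the key, with no value (represented here as None).
    """
    line = line.rstrip("\n")  # drop the trailing newline, if any
    key, found, value = line.partition(separator)
    if not found:
        return line, None
    return key, value


print(split_key_value("apple\t3"))     # ('apple', '3')
print(split_key_value("a\tb\tc"))      # ('a', 'b\tc')
print(split_key_value("no tab here"))  # ('no tab here', None)
```

Only the first tab splits the line, so any further tabs stay inside the value.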

Purpose

It is used to process data for applications such as stock portfolio monitoring, share market analysis, weather reporting, and traffic alerts.

Working of Hadoop Streaming

Below is a simple example of how it works:

```shell
$HADOOP_HOME/bin/hadoop jar $HADOOP_HOME/hadoop-streaming.jar \
    -input myInputDirs \
    -output myOutputDir \
    -mapper org.apache.hadoop.mapred.lib.IdentityMapper \
    -reducer /bin/wc
```

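The `-input` option specifies the input directory, and the `-output` option specifies the output directory. The `-mapper` and `-reducer` options specify the mapper and the reducer; each can be an executable or script, or a Java class name. In this example, the mapper is the Java class `IdentityMapper`, which passes each record through unchanged, and the reducer is the Unix `wc` command, which counts the lines, words, and bytes it receives.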

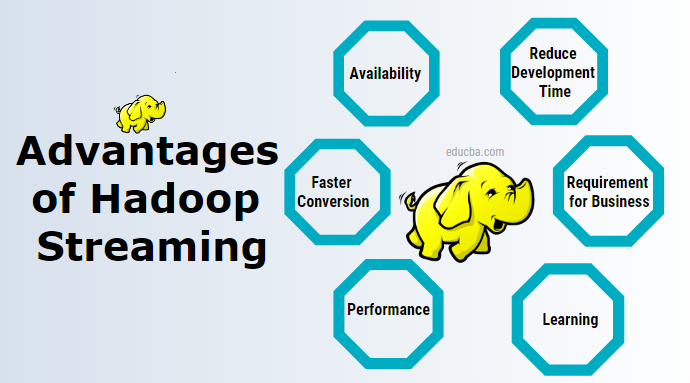
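The example uses `/bin/wc` as the reducer, but any executable that reads the sorted mapper output from standard input can take its place. Below is a minimal sketch of a word-count reducer in Python, assuming the mapper emits `word<TAB>count` lines; the word-count task and the `reduce_lines` helper are illustrative assumptions. Because the framework sorts mapper output by key before the reduce phase, lines with the same key arrive next to each other, so the reducer only has to group consecutive lines.

```python
#!/usr/bin/env python3
"""Hypothetical word-count reducer for Hadoop Streaming (illustrative sketch)."""
import sys
from itertools import groupby


def reduce_lines(lines):
    """Sum the counts of consecutive 'key<TAB>count' lines that share a key.

    Assumes the input is sorted by key, as the reduce phase guarantees,
    and that every non-blank line contains a tab followed by an integer.
    """
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines if line.strip())
    for key, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{key}\t{total}"


if __name__ == "__main__":
    # Read sorted key-value lines from stdin and print one total per key.
    for out in reduce_lines(sys.stdin):
        print(out)
```

Scripts like this are passed with `-mapper` and `-reducer` in place of the class and executable above, and are typically shipped to the cluster with the generic `-files` option; check the exact options against the streaming documentation for your Hadoop version.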

Advantages

Its main advantages are explained below:

1. Availability

It ships with Hadoop, so no separate software has to be installed and managed. Other tools, such as Pig and Hive, must be installed and managed separately.

2. Learning

It does not require learning a new technology; data analysis can be done with minimal Unix skills.

3. Reduce Development Time

A streaming application only needs mapper and reducer scripts, which can be run directly. The same job written as a Java MapReduce application is more complex: it must be compiled, tested, packaged, and exported as a JAR file before it can run.

4. Faster Conversion

Hadoop Streaming makes it quick to convert data from one format to another, for example from text files to sequence files and back again, among many other conversions. This is done with its input format and output format options (`-inputformat` and `-outputformat`).

5. Testing

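Mapper and reducer scripts can be tested quickly on a local machine, before the job is submitted to the cluster, by piping sample input through the mapper, the Unix sort command, and the reducer in a shell or a shell script.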
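The same map, sort, and reduce sequence can also be reproduced inside a single Python script, which is handy for unit-testing job logic on sample data. The sketch below is self-contained and assumes a word-count job; `mapper`, `reducer`, and `run_locally` are hypothetical names, and Python's `sorted` stands in for the framework's shuffle-and-sort step.

```python
from itertools import groupby


def mapper(lines):
    """Emit 'word<TAB>1' for each word (word-count example)."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def reducer(lines):
    """Sum counts per key; the input must already be sorted by key."""
    pairs = (line.split("\t", 1) for line in lines)
    for key, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{key}\t{sum(int(c) for _, c in group)}"


def run_locally(input_lines):
    """Mimic `cat input | mapper | sort | reducer` in memory."""
    mapped = list(mapper(input_lines))
    shuffled = sorted(mapped)  # stand-in for Hadoop's sort by key
    return list(reducer(shuffled))


for line in run_locally(["the cat sat", "the dog sat"]):
    print(line)
# prints cat 1, dog 1, sat 2, the 2 (tab-separated, one per line)
```

Sorting whole lines works here because the tab character sorts before any printable character, so lines with the same key always end up adjacent.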

6. Simple Business Requirements

For simple business requirements, such as filtering or basic aggregation, it can be used together with ordinary Unix tools.
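For instance, a simple filter can be written as a mapper alone and run as a map-only job by setting the number of reduce tasks to zero (for example with `-numReduceTasks 0`; check this option against your Hadoop version). The sketch below assumes hypothetical comma-separated records of the form `symbol,price` and keeps only those above a threshold; the record layout, `MIN_PRICE`, and `filter_lines` are illustrative assumptions.

```python
#!/usr/bin/env python3
"""Hypothetical map-only filter for Hadoop Streaming (illustrative sketch)."""
import sys

MIN_PRICE = 100.0  # assumed threshold, chosen only for illustration


def filter_lines(lines, min_price=MIN_PRICE):
    """Yield 'symbol<TAB>price' for records whose price exceeds min_price.

    Malformed records (wrong field count or non-numeric price) are skipped.
    """
    for line in lines:
        fields = line.strip().split(",")
        if len(fields) != 2:
            continue
        symbol, price_text = fields
        try:
            price = float(price_text)
        except ValueError:
            continue
        if price > min_price:
            yield f"{symbol}\t{price_text}"


if __name__ == "__main__":
    # With zero reducers, these lines are written directly to the output directory.
    for out in filter_lines(sys.stdin):
        print(out)
```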

7. Performance

It can deliver good performance on large data sets, because the mapper and reducer scripts run in parallel across the cluster. Hadoop Streaming also has several disadvantages, which are addressed by other tools in the Hadoop ecosystem, such as Kafka, Flume, and Spark.

Why do we need Hadoop Streaming?

It lets developers analyze large data sets with MapReduce programs running in parallel on a multi-node cluster, using languages they already know. For real-time processing, other technologies such as Spark and Kafka are used alongside it.

How will this technology help you in career growth?

Many major enterprises are moving to Hadoop for their data analysis, and many of them need to analyze real-time data. The demand for real-time data and its processing is growing day by day, and this technology creates a lot of scope for individual career growth.

Conclusion

Hadoop Streaming offers a wide range of advantages for processing large data sets with MapReduce, using scripts written in the language of your choice.
