Difference Between Hadoop vs Elasticsearch

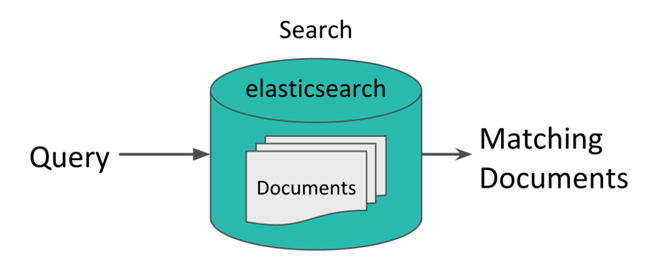

The following article provides an outline for Hadoop vs Elasticsearch. Hadoop is a framework that helps handle the voluminous data in a fraction of a second, which traditional ways fail to handle. It takes the support of multiple machines to run the process parallelly in a distributed manner. Elasticsearch works like a sandwich between Logstash and Kibana. Where Logstash is accountable for fetching data from any data source, elastic search analyzes the data, and kibana gives actionable insights. This solution makes applications more powerful for complex search requirements or demands.

Let us look forward to the topic in detail:

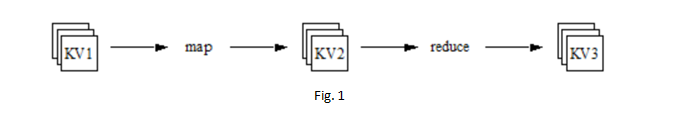

Its unique way of data management (specially designed for Big data) includes an end-to-end process of storing, processing and analyzing. This unique way is termed MapReduce. Developers write the programs in the MapReduce framework to run the extensive data in parallel across distributed processors.

The question then arises, after data gets distributed for processing into different machines, how does output accumulate similarly?

The answer is MapReduce generates a unique key that gets appended with distributed data in various machines. MapReduce keeps track of the processing of data. And once it is done, that unique key is used to assemble all processed data. This gives the feel of all work done on a single machine.

Scalability and reliability are ideally taken care of in MapReduce of Hadoop.

Below are some functionalities of MapReduce:

1. Map then reduce: To run a job, it gets broken into individual chunks called tasks. The mapper function will always run first for all the tasks, then only reduce function will come into the picture. The reduced function will complete its work for all distributed tasks to finish the entire process.

2. Fault Tolerant: Take a scenario when one node goes down while processing the task. The heartbeat of that node doesn’t reach the engine of MapReduce or, say Master node. Then, in that case, the Master node assigns that task to some different node to finish the task. The HDFS (Hadoop Distributed File System) stores the unprocessed and processed data and has a default replication factor of 3, serving as the storage layer of Hadoop. Two nodes are still alive with the same data if one node goes down.

3. Flexibility: You can store any type of data: structured, semi-structured, or unstructured.

4. Synchronization: Synchronization is an inbuilt characteristic of Hadoop. This ensures reduce will start only if all mapper function is done with its task. “Shuffle” and “Sort” is the mechanism that makes the job’s output smoother. Elasticsearch is a JSON-based simple yet powerful analytical tool for document indexing and powerful full-text search.

In ELK, all the components are open source. ELK taking significant momentum in the IT environment for log analysis, web analytics, business intelligence, compliance analysis, etc. ELK is apt for businesses where ad hoc requests come and data needs to be quickly analyzed and visualized.

ELK is a great tool for Tech startups who can’t afford to purchase a license for a log analysis product like Splunk. Moreover, open-source products have always been the focus of the IT industry.

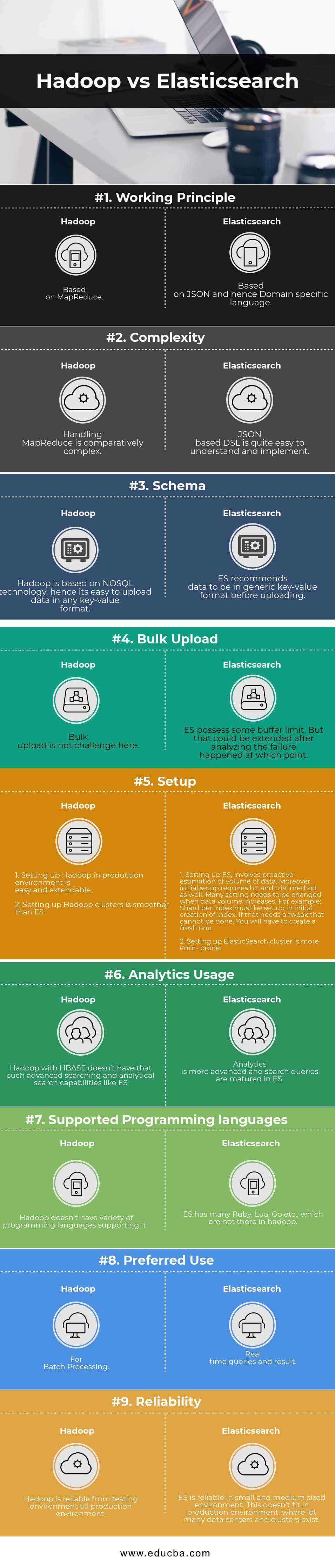

Head-to-Head Comparisons Between Hadoop and Elasticsearch (Infographics)

Below are the top 9 comparisons between Hadoop and Elasticsearch:

Key Difference Between Hadoop vs Elasticsearch

Below are the lists of points that describe the key differences between Hadoop vs Elasticsearch:

- Hadoop has distributed filesystem designed for parallel data processing, while ElasticSearch is the search engine.

- Hadoop provides far more flexibility with a variety of tools as compared to ES.

- Hadoop can store ample data, whereas ES can’t.

- Hadoop can handle extensive processing and complex logic, whereas ES can handle only limited processing and essential aggregation.

Hadoop vs Elasticsearch Comparison Table

Below are the points that describe the comparisons between Hadoop and Elasticsearch.

| Basis of Comparison | Hadoop | Elasticsearch |

| Working Principle | Based on MapReduce. | Based on JSON and hence Domain-specific language. |

| Complexity | Handling MapReduce is comparatively complex. | JSON-based DSL is quite easy to understand and implement. |

| Schema | Hadoop is based on NoSQL technology; hence, uploading data in any key-value format is easy. | ES recommends data be in the generic key-value format before uploading. |

| Bulk Upload | Bulk upload is not challenging here. | ES possesses some buffer limit. But that could be extended after analyzing the failure happened at which point. |

| Setup |

|

|

| Analytics Usage | Hadoop with HBase doesn’t have such advanced searching and analytical search capabilities as ES. | Analytics is more advanced, and search queries are matured in ES. |

| Supported Programming Languages | Hadoop doesn’t have a variety of programming languages supporting it. | ES has many Ruby, Lua, Go, etc., which are not in Hadoop. |

| Preferred Use | For batch processing. | Real-time queries and results. |

| Reliability | Hadoop is reliable from the testing environment to the production environment. | ES is reliable in small and medium-sized environments. This doesn’t fit a production environment with many data centers and clusters. |

Conclusion

Ultimately, it depends on the data type, volume, and use case one works on. If simple searching and web analytics are the focus, then Elasticsearch is better. Whereas if there is an extensive demand for scaling, a volume of data, and compatibility with third-party tools, the Hadoop instance is the answer. However, Hadoop integration with ES opens a new world for heavy and big applications. Leveraging full power from Hadoop vs Elasticsearch can give an excellent platform to enrich the maximum value out of big data.

Recommended Articles

This has been a guide to Hadoop vs Elasticsearch. Here we have discussed Hadoop vs Elasticsearch key differences with infographics and a comparison table. You may also look at the following articles to learn more –