Updated March 4, 2023

Introduction to HDFS ls

In the Hadoop stack, the HDFS service manages the storage layer of the cluster, and it offers many shell commands that can be combined in different ways. The Hadoop ls command lists the files and directories available at an HDFS path or location. ls accepts several options, which can be combined as the requirement demands. Because ls belongs to the Hadoop file system shell, it works not only with HDFS but also with the other file systems Hadoop supports, such as S3, WebHDFS, and the local file system.

Syntax of the HDFS ls

hadoop fs -ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [-e] [<path> ...]

1. hadoop: The Hadoop launcher command. It takes a subcommand followed by that subcommand's options and arguments; here it is used to list files at the HDFS level.

2. fs: ls is a file system shell command, so it is invoked through the fs subcommand (hadoop fs -ls).

3. OPTION: One or more of the flags supported by ls: -C, -d, -h, -q, -R, -t, -S, -r, -u, and -e.

4. Path: One or more paths whose contents should be listed. If no path is given, ls lists the current user's HDFS home directory.
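To make the parts of the syntax concrete, here is a minimal Python sketch that assembles the argument list for hadoop fs -ls from a set of flags and paths, rejecting any flag that is not in the syntax line. The helper name `build_ls_command` is hypothetical; it only builds the list (which could be passed to `subprocess.run`) and does not contact a cluster.

```python
from typing import List

# The flags listed in the ls syntax line.
LS_FLAGS = {"-C", "-d", "-h", "-q", "-R", "-t", "-S", "-r", "-u", "-e"}


def build_ls_command(paths: List[str], flags: List[str]) -> List[str]:
    """Return the argv for `hadoop fs -ls [flags] [paths]`.

    Hypothetical helper: unknown flags are rejected locally before
    anything would be sent to the cluster.
    """
    unknown = [f for f in flags if f not in LS_FLAGS]
    if unknown:
        raise ValueError(f"unsupported ls option(s): {', '.join(unknown)}")
    # Options come first, then zero or more paths (no path = home directory).
    return ["hadoop", "fs", "-ls", *flags, *paths]


if __name__ == "__main__":
    print(build_ls_command(["/warehouse/"], ["-h", "-t"]))
    # ['hadoop', 'fs', '-ls', '-h', '-t', '/warehouse/']
```

Keeping the command as a list rather than a single string avoids shell-quoting problems with paths that contain spaces.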

How Does HDFS ls Work?

As discussed, a Hadoop environment contains multiple components or services, and the ls command works with the HDFS service. It can be triggered in different ways: from the HDFS shell, the Hue UI, the Zeppelin UI, third-party application tools, and so on.

When ls is triggered from the HDFS shell or another tool, the client first validates the command and its options. If the command is invalid, it fails with an error message; otherwise the request is sent to the HDFS NameNode. The NameNode holds the metadata for the whole file system namespace, including files, directories, permissions, and block locations. It looks up the requested path: if the path exists, the listing is returned to the client; if it does not, ls prints an error message such as "No such file or directory".
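From a script's point of view, the flow above boils down to "run the command, then check whether it succeeded". The following is a minimal Python sketch of that, assuming the `hadoop` binary is on the PATH; the function name `hdfs_ls` and the `hadoop_bin` parameter are hypothetical. A validation failure or a missing path shows up as a non-zero exit status with the message on stderr.

```python
import subprocess
from typing import List


def hdfs_ls(path: str, hadoop_bin: str = "hadoop") -> List[str]:
    """Run `hadoop fs -ls <path>` and return its output lines.

    Raises FileNotFoundError if the hadoop binary itself is missing and
    RuntimeError if the command exits with a non-zero status.
    """
    result = subprocess.run(
        [hadoop_bin, "fs", "-ls", path],
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,
        check=False,          # inspect the exit status ourselves
    )
    if result.returncode != 0:
        # stderr carries the shell's message, e.g. for a path that does not exist
        raise RuntimeError(result.stderr.strip() or f"exit status {result.returncode}")
    return result.stdout.splitlines()
```

Raising instead of returning an empty list keeps "the directory is empty" distinguishable from "the path does not exist".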

The following options are supported by the Hadoop ls command.

-C: Display only the paths of files and directories.

-d: List directories as plain files, that is, show the directory entry itself rather than its contents.

-h: Format file sizes in a human-readable way.

Ex: A size printed as 67108864 is shown as 64 M with the -h option.

-q: Print ? instead of non-printable characters.

-R: Recursively list the subdirectories encountered.

-t: Sort the output by modification time, most recently modified first.

-S: Sort the output by file size, largest first.

-r: Reverse the sort order.

-u: Use the access time rather than the modification time for display and sorting.

-e: Display the erasure coding policy of files and directories only.
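To illustrate what -h, -t, -S, -r, and -u do to a listing, here is a small Python sketch that reproduces the behavior on in-memory records. It is an approximation written for illustration, not Hadoop's code: `human_readable`, `Entry`, and `order` are hypothetical names, sizes use 1024-based units, and the assumption that time sorting takes precedence over size sorting (with name order as the default) is ours.

```python
from dataclasses import dataclass
from typing import List

# Binary (1024-based) unit symbols used for human-readable sizes.
UNITS = ["K", "M", "G", "T", "P", "E"]


def human_readable(size: int) -> str:
    """Approximate the -h size format: whole numbers when exact, else one decimal."""
    if size < 1024:
        return str(size)
    # Find the largest unit that fits (power 1 = K, 2 = M, ...).
    power = 1
    while power < len(UNITS) and size >= 1024 ** (power + 1):
        power += 1
    divisor = 1024 ** power
    if size % divisor == 0:
        return f"{size // divisor} {UNITS[power - 1]}"
    text = f"{size / divisor:.1f}"
    if text.startswith("1024") and power < len(UNITS):
        # Rounding pushed the value up to the next unit (e.g. 1023.99 K -> 1.0 M).
        power += 1
        text = f"{size / 1024 ** power:.1f}"
    return f"{text} {UNITS[power - 1]}"


@dataclass
class Entry:
    path: str
    size: int   # bytes
    mtime: int  # modification time, epoch seconds
    atime: int  # access time, epoch seconds


def order(entries: List[Entry], by_time: bool = False, by_size: bool = False,
          reverse: bool = False, use_atime: bool = False) -> List[Entry]:
    """Mimic -t, -S, -r and -u. Time takes precedence over size; default is by path."""
    if by_time:
        # -t: most recent first; -u swaps modification time for access time.
        result = sorted(entries, key=lambda e: e.atime if use_atime else e.mtime, reverse=True)
    elif by_size:
        # -S: largest first.
        result = sorted(entries, key=lambda e: e.size, reverse=True)
    else:
        result = sorted(entries, key=lambda e: e.path)
    # -r: reverse whichever order was chosen.
    return result[::-1] if reverse else result
```

For instance, `human_readable(67108864)` returns "64 M". In this sketch `use_atime` only changes the ordering when `by_time` is also set.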

Examples of HDFS ls

Here are the following examples.

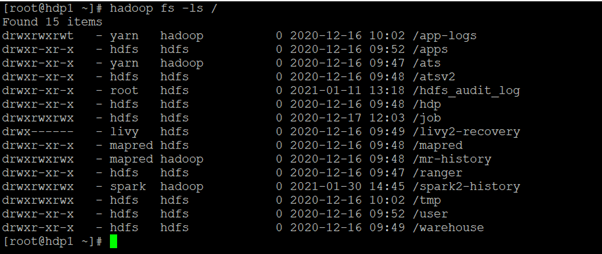

Hadoop ls Command

In a Hadoop environment, the HDFS ls command is one of the most widely used commands. It lists the files and directories at an HDFS path.

Syntax:

hadoop fs -ls /

Explanation:

The command above lists the contents of the root (“/”) HDFS directory. In this example, the root level contains only directories. Each entry is shown with its permissions, replication factor, owner, group, size, and modification date and time.
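When ls output is consumed by a script, each line can be split into the columns described above. The following is a minimal Python sketch, assuming the default layout without -h (with -h a size such as "64 M" contains a space and would shift the columns). `LsEntry` and `parse_ls_line` are hypothetical names, and the sample lines in the test are made up for illustration.

```python
from typing import NamedTuple, Optional


class LsEntry(NamedTuple):
    permissions: str
    replication: str  # "-" for directories
    owner: str
    group: str
    size: int         # bytes
    modified: str     # "YYYY-MM-DD HH:MM"
    path: str

    @property
    def is_dir(self) -> bool:
        return self.permissions.startswith("d")


def parse_ls_line(line: str) -> Optional[LsEntry]:
    """Split one `hadoop fs -ls` line into columns; None for header or blank lines."""
    if not line.strip() or line.startswith("Found "):
        return None  # the "Found N items" header carries no entry
    # maxsplit=7 keeps a path that contains spaces in one piece
    perms, repl, owner, group, size, date, time, path = line.split(None, 7)
    return LsEntry(perms, repl, owner, group, int(size), f"{date} {time}", path)
```

Splitting on runs of whitespace matters because ls pads the size column to line entries up.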

Output:

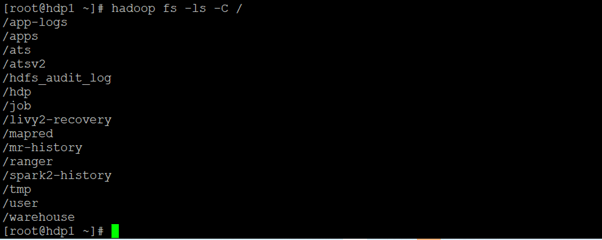

HDFS ls: List Paths Only

In Hadoop, ls can print only the names (paths) of the entries. It does not print the permissions, owner, and group information.

Syntax:

hadoop fs -ls -C /

Explanation:

The command above uses the -C option, which prints only the paths of the files and directories under “/”. Because the root level contains only directories in this example, only directory paths appear. Permissions, ownership, size, and timestamps are omitted.
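If a script already has the full listing, the same path-only view can be derived from it. Below is a minimal Python sketch, assuming the default column layout (no -h). `paths_only` is a hypothetical helper, and its `dirs_only` switch is an extra filter of our own, not an ls flag: it keeps entries whose permission string starts with "d".

```python
from typing import Iterable, List


def paths_only(ls_output: Iterable[str], dirs_only: bool = False) -> List[str]:
    """Reduce full `hadoop fs -ls` lines to bare paths, similar to -C."""
    paths = []
    for line in ls_output:
        if not line.strip() or line.startswith("Found "):
            continue  # skip the "Found N items" header and blank lines
        fields = line.split(None, 7)  # the 8th field is the path
        if dirs_only and not fields[0].startswith("d"):
            continue  # hypothetical filter: keep directories only
        paths.append(fields[7])
    return paths
```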

Output:

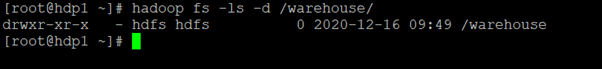

HDFS ls: Get a Specific Directory

The Hadoop ls command can print the entry for a specific directory itself instead of listing its contents. No other entries are printed.

Syntax:

hadoop fs -ls -d /warehouse/

Explanation:

The command above uses the -d option with the Hadoop ls command, so only the entry for the /warehouse directory itself is printed, not its contents. This is useful in shell scripts or application jobs that need a directory's details (permissions, owner, modification time) without listing everything inside it.
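The difference between a plain listing and -d can be shown with a local-filesystem analogy (ls also works against the local file system). This minimal Python sketch is only an analogy, not HDFS code: the hypothetical `list_entries` function returns the directory's children by default and the directory itself when `directory_itself` is set, mirroring -d.

```python
import os
from typing import List


def list_entries(path: str, directory_itself: bool = False) -> List[str]:
    """Local analogy of `ls <dir>` versus `ls -d <dir>`."""
    if directory_itself or not os.path.isdir(path):
        # -d (or a plain file): report the path as a single entry
        return [path]
    # default: report the directory's children, sorted by name
    return sorted(os.path.join(path, name) for name in os.listdir(path))
```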

Output:

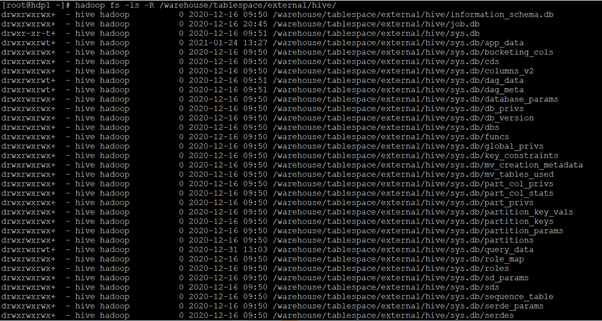

HDFS ls: List Files Recursively

By default, the Hadoop ls command lists only the immediate contents of the given path (or of the user's HDFS home directory when no path is given). To list the files in all subdirectories recursively, use the “-R” option.

Syntax:

hadoop fs -ls -R /warehouse/tablespace/external/hive/

Explanation:

The command above lists the files under the “/warehouse/tablespace/external/hive/” location. The “-R” option makes ls descend into every subdirectory, so all the files are listed in a single pass.
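Since the recursive listing can be long, a script often only needs totals. Here is a minimal Python sketch that tallies files and directories in -R output by the first character of the permission string ("d" for directories). `count_recursive` is a hypothetical name, and the sample lines in the test are made up for illustration.

```python
from collections import Counter
from typing import Iterable


def count_recursive(ls_r_output: Iterable[str]) -> Counter:
    """Tally files vs directories in `hadoop fs -ls -R` output."""
    counts = Counter(files=0, directories=0)
    for line in ls_r_output:
        if not line.strip() or line.startswith("Found "):
            continue  # ignore blank lines and any header line
        kind = "directories" if line[0] == "d" else "files"
        counts[kind] += 1
    return counts
```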

Output:

Conclusion

We have covered the HDFS ls command with examples, explanations, and output. ls is one of the most frequently used commands in a Hadoop environment. Depending on the requirement, its options can list files recursively, sort them by size or modification time, show sizes in a human-readable format, and more.
