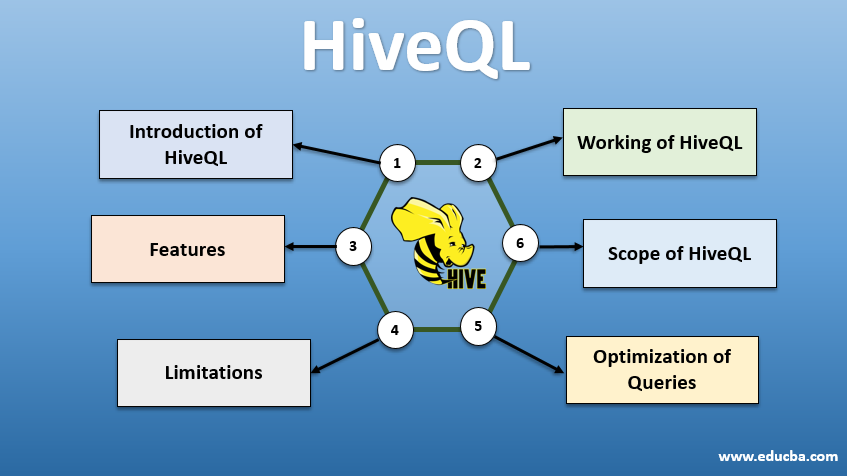

Introduction to HiveQL

Hive provides a command-line interface (CLI) for writing queries in the Hive Query Language (HiveQL, or HQL). HiveQL's syntax is generally similar to the SQL that most data analysts already know. It shields the user from the complexity of MapReduce programming and reuses familiar relational-database concepts such as tables, rows, columns, and schemas, which makes it easier to learn. Hive supports several storage formats, including TEXTFILE, SEQUENCEFILE, ORC, and RCFILE (Record Columnar File).
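As a minimal sketch of the SQL-like syntax and the storage formats described above, the following defines a table and queries it. The table name page_views and its columns are hypothetical, chosen only for illustration.

```sql
-- Hypothetical table used for illustration.
-- The STORED AS clause selects the file format
-- (alternatives include TEXTFILE, SEQUENCEFILE and RCFILE).
CREATE TABLE IF NOT EXISTS page_views (
  user_id   BIGINT,
  url       STRING,
  view_time TIMESTAMP
)
STORED AS ORC;

-- A query that reads like ordinary SQL.
SELECT url, view_time
FROM page_views
WHERE user_id = 42
LIMIT 10;
```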

Working of HiveQL

Hive provides a SQL-like dialect for data manipulation, so we do not have to write low-level MapReduce jobs to fetch data (the Mapper's role) and aggregate the final results (the Reducer's role).
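For instance, the aggregation below is the kind of work that would otherwise need a hand-written mapper (emitting each URL) and reducer (counting rows per URL). This is only a sketch; page_views(user_id, url, view_time) is a hypothetical table, and how Hive actually splits the work depends on the execution engine and version.

```sql
-- Assumes a hypothetical table page_views(user_id, url, view_time).
-- Roughly: the map side reads rows and groups them by url,
-- and the reduce side produces the final count per url.
SELECT url, COUNT(*) AS views
FROM page_views
GROUP BY url;
```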

Executing Hive Query

Steps involved in executing a Hive query are:

- The Hive interface (the CLI or Web UI, or a client connecting over JDBC, ODBC or the Thrift server) sends the query to the Driver to compile, optimize and execute. The Driver passes the query to the compiler, which checks the syntax and builds an execution plan.

- The compiler sends a metadata request to the Metastore and receives the metadata in response.

- The compiler communicates the execution plan back to the driver, which further sends it to the Execution engine.

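- The execution engine submits the job to the JobTracker (in classic MapReduce; on Hadoop 2, YARN's ResourceManager plays this role), while the NameNode supplies the location of the data in HDFS.

- In parallel, the execution engine also carries out metadata operations against the Metastore.

- The JobTracker assigns the work to TaskTrackers, which run on the DataNodes.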

- The results are returned to the execution engine and then displayed in the interface through the driver.
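The plan that the compiler produces in the steps above can be inspected without running the query by prefixing it with EXPLAIN. The sketch below assumes a hypothetical table page_views(user_id, url, view_time); the exact layout of the output varies between Hive versions and execution engines.

```sql
-- Prints the stage dependencies and the operators of each stage
-- instead of executing the query.
EXPLAIN
SELECT url, COUNT(*) AS views
FROM page_views
GROUP BY url;
```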

Optimization of Queries

HiveQL can be tuned for better query performance. Using the SET commands below, we can override the default configuration and speed up query execution.

1. SET hive.execution.engine = tez

By default, the execution engine is MapReduce, but we can explicitly set it to Tez (on Hadoop 2 only) or Spark (from Hive 1.1.0 onward).
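A short sketch of checking and switching the engine for the current session. It assumes Tez is installed on the cluster; if it is not, queries issued after the switch are likely to fail.

```sql
-- SET with only a property name prints its current value.
SET hive.execution.engine;

-- Switch the engine for this session only.
SET hive.execution.engine = tez;

-- Switch back to the classic engine if needed.
SET hive.execution.engine = mr;
```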

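2. SET hive.exec.dynamic.partition.mode = nonstrict

This is for dynamic partitioning, which helps load large data sets. The default is “strict” mode, which requires at least one partition column to be specified statically; “nonstrict” allows every partition column to be dynamic. (The similarly named hive.mapred.mode is a different setting that restricts risky queries; its non-strict value is also spelled “nonstrict”, not “unstrict”.)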
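A minimal sketch of a dynamic-partition load. It uses the properties hive.exec.dynamic.partition and hive.exec.dynamic.partition.mode, which are the settings that govern dynamic partitioning in Hive. The tables sales and staging_sales are hypothetical.

```sql
-- Hypothetical source table staging_sales(id, amount, country)
-- and a target table partitioned by country.
CREATE TABLE IF NOT EXISTS sales (id BIGINT, amount DOUBLE)
PARTITIONED BY (country STRING);

SET hive.exec.dynamic.partition = true;
SET hive.exec.dynamic.partition.mode = nonstrict;

-- The partition column comes last in the SELECT list;
-- Hive creates one partition per distinct country value.
INSERT OVERWRITE TABLE sales PARTITION (country)
SELECT id, amount, country
FROM staging_sales;
```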

3. SET hive.vectorized.execution.enabled = true

Vectorized query execution allows operations such as aggregates, filters or joins to process batches of 1024 rows instead of a single row at a time.
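A sketch of a query that can benefit from vectorization. It assumes a hypothetical table page_views(user_id, url, view_time) stored as ORC, since vectorized execution has traditionally required a columnar format such as ORC; whether a given query is actually vectorized depends on the Hive version and the operators involved.

```sql
SET hive.vectorized.execution.enabled = true;

-- A filter plus an aggregate, both of which can be processed in row batches.
SELECT url, COUNT(*) AS views
FROM page_views
WHERE view_time >= CAST('2024-01-01 00:00:00' AS TIMESTAMP)
GROUP BY url;
```

Running EXPLAIN on the query may show which stages are vectorized.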

4. SET hive.auto.convert.join = true

When joining a large data set with a small one, map joins are more efficient. The above setting lets Hive convert such joins to map joins automatically.
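A sketch of a join that is a candidate for automatic map-join conversion. The tables visits (large) and countries (small) are hypothetical. The threshold property hive.mapjoin.smalltable.filesize is shown with its commonly documented default; treat the value as an assumption and check your own cluster.

```sql
SET hive.auto.convert.join = true;

-- Size in bytes below which a table is treated as "small"
-- (25000000 is the commonly documented default).
SET hive.mapjoin.smalltable.filesize = 25000000;

-- Hypothetical tables: visits(user_id, country_code) is large,
-- countries(code, name) is small enough to be loaded into memory.
SELECT c.name, COUNT(*) AS visit_count
FROM visits v
JOIN countries c ON v.country_code = c.code
GROUP BY c.name;
```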

5. SET hive.exec.parallel = true

A Hive query is compiled into one or more stages (for example, MapReduce jobs), which by default run one after another. When some stages do not depend on one another, this setting lets them run in parallel, leading to better cluster utilization and a shorter overall run time.
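A sketch of a query with independent stages. The two branches of the UNION ALL read different hypothetical tables (page_views and sales) and do not depend on each other, so they are candidates for parallel execution. Whether they actually run concurrently depends on the engine and on free cluster capacity.

```sql
SET hive.exec.parallel = true;

-- Upper bound on the number of stages run at the same time.
SET hive.exec.parallel.thread.number = 8;

-- Each branch is an independent aggregation over a different table.
SELECT t.metric, t.n
FROM (
  SELECT 'page_views' AS metric, COUNT(*) AS n FROM page_views
  UNION ALL
  SELECT 'sales' AS metric, COUNT(*) AS n FROM sales
) t;
```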

6. SET hive.exec.compress.output=true

This enables the final output to be stored in HDFS in a compressed format.
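A sketch of writing compressed output. The codec property is a standard Hadoop setting; Gzip is chosen here only because it ships with Hadoop. The output path and the table page_views are hypothetical.

```sql
SET hive.exec.compress.output = true;

-- Hadoop property selecting the codec used for the final output files.
SET mapreduce.output.fileoutputformat.compress.codec = org.apache.hadoop.io.compress.GzipCodec;

-- Hypothetical export directory in HDFS; the files written there are compressed.
INSERT OVERWRITE DIRECTORY '/tmp/page_views_export'
SELECT * FROM page_views;
```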


Features of HiveQL

- Because HiveQL is a high-level language, Hive queries are implicitly converted to MapReduce jobs or more complex DAGs (directed acyclic graphs). Placing the EXPLAIN keyword before a query returns its query plan.

- Table metadata is stored in an RDBMS (the Metastore), which speeds up query planning, while the data itself is replicated by HDFS, making recovery easy in case of loss.

- Bitmap indexing can be used to accelerate queries (in Hive versions before 3.0, which removed index support).

- Partitioning of data improves performance by letting queries read only the relevant partitions.

- Hive can process different types of compressed files, thus saving disk space.

- To manipulate strings, integers or dates, HiveQL provides built-in functions and can be extended with user-defined functions (UDFs) to solve problems that the built-in functions do not cover.

- It provides a range of additional APIs, to build a customized query engine.

- Different file formats are supported, such as TextFile, SequenceFile, ORC (Optimized Row Columnar), RCFile, Avro and Parquet. The ORC format is often the most suitable for improving query performance, as its compressed columnar layout lets queries read only the columns they need.

- It is an efficient data analytics and ETL tool for large datasets.

- Queries are easy to write because the language is similar to SQL.

- DDL (data definition language) commands in Hive are used to specify and change the structure of databases and tables. These commands are DROP, CREATE, TRUNCATE, ALTER, SHOW and DESCRIBE.
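The DDL commands listed above can be sketched as follows. The database demo_db and the table employees are hypothetical names used only for illustration.

```sql
-- CREATE: define a database and a table.
CREATE DATABASE IF NOT EXISTS demo_db;
USE demo_db;
CREATE TABLE IF NOT EXISTS employees (id INT, name STRING);

-- SHOW and DESCRIBE: inspect what exists.
SHOW TABLES;
DESCRIBE employees;

-- ALTER: change the table structure.
ALTER TABLE employees ADD COLUMNS (dept STRING);

-- TRUNCATE: remove all rows but keep the table definition.
TRUNCATE TABLE employees;

-- DROP: remove the table and the database.
DROP TABLE IF EXISTS employees;
DROP DATABASE IF EXISTS demo_db;
```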

Limitations

- Hive queries have higher latency as Hadoop is a batch-oriented system.

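- Support for nested queries (subqueries) is limited: they are allowed in the FROM clause and, from Hive 0.13 onward, in certain WHERE-clause forms such as IN and EXISTS.

- Record-level update, delete or insert operations cannot be performed on ordinary tables; they are available only on transactional (ACID) tables, from Hive 0.14 onward.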

- Real-time data processing or querying is not offered through Hive.
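To illustrate the record-level limitation: in Hive 0.14 and later, UPDATE and DELETE work only on tables declared as transactional. The sketch below assumes such a version, a hypothetical table customers_acid, and a server already configured for transactions (further server-side settings, such as the compactor, may be needed). On an ordinary table the UPDATE and DELETE statements are rejected.

```sql
-- Session settings commonly required for ACID operations.
SET hive.support.concurrency = true;
SET hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

-- Hypothetical transactional table; ORC storage is required,
-- and bucketing is required on Hive versions before 3.0.
CREATE TABLE IF NOT EXISTS customers_acid (id INT, name STRING)
CLUSTERED BY (id) INTO 4 BUCKETS
STORED AS ORC
TBLPROPERTIES ('transactional' = 'true');

INSERT INTO TABLE customers_acid VALUES (1, 'Alice'), (2, 'Bob');

-- Record-level changes, allowed only because the table is transactional.
UPDATE customers_acid SET name = 'Alicia' WHERE id = 1;
DELETE FROM customers_acid WHERE id = 2;
```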

With petabytes of data, ranging from billions to trillions of records, HiveQL has a large scope for big data professionals.

Scope of HiveQL

The following capabilities widen the scope of HiveQL and help it analyse the huge volumes of data that users generate every day.

Security: Along with processing large data, Hive provides data security. This task is complex in a distributed system, as multiple components need to communicate with each other. Kerberos support provides authentication between client and server.

Locking: Traditionally, Hive lacks locking on rows, columns or queries. Hive can leverage Apache Zookeeper for locking support.
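A sketch of explicit table locking. It assumes that hive.support.concurrency is enabled and that a ZooKeeper-based lock manager is configured on the server (for example through hive.zookeeper.quorum); explicit LOCK statements are generally not accepted when the transactional lock manager is in use. The table page_views is hypothetical.

```sql
-- Must be enabled for Hive to take locks at all.
SET hive.support.concurrency = true;

-- Take a shared (read) lock on a hypothetical table.
LOCK TABLE page_views SHARED;

-- List the locks currently held on the table.
SHOW LOCKS page_views;

-- Release the lock.
UNLOCK TABLE page_views;
```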

Workflow Management: Apache Oozie is a workflow scheduler that automates HiveQL queries so that they run sequentially or in parallel.

Visualization: Apache Zeppelin is a web-based notebook that enables interactive data analytics. It supports Hive and Spark for data visualization and collaboration.

Conclusion

HiveQL is widely used across organizations to solve complex use cases. With its features and limitations in mind, the Hive query language is used in telecommunications, healthcare, retail, banking and financial services, and even in the climate evaluation system of NASA's Jet Propulsion Laboratory. The ease of writing SQL-like queries and commands accounts for its wide acceptance. The growing job opportunities in this field attract freshers and professionals from different sectors to gain hands-on experience and knowledge of it.
