Updated March 14, 2023

Introduction to Kafka message

Kafka was originally developed at LinkedIn. It is a framework for building stream-processing applications. A Kafka message is a small or medium-sized piece of data. To Kafka itself, a message is nothing more than a simple array of bytes. Messages carry information or data coming from many different sources. These sources are not tied to any specific platform or software, so Kafka can be integrated with many different technologies and platforms. Messages flow through Kafka in raw byte form. Kafka also supports parallel processing, because a topic can be split into partitions that are read in parallel. A message is not a standalone concept in Kafka. To understand Kafka messages, we first need to understand the different components of Kafka.
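Because Kafka treats a message as an opaque array of bytes, the client applications are responsible for turning data into bytes and back. The following minimal sketch in plain Python assumes JSON as the data format. The `serialize` and `deserialize` helpers are hypothetical stand-ins for the serializers a real Kafka client library provides, and no Kafka library is used.

```python
import json
from typing import Optional, Tuple


def serialize(key: Optional[str], value: dict) -> Tuple[Optional[bytes], bytes]:
    """Hypothetical helper: turn an optional string key and a dict value into bytes.

    Kafka never interprets these bytes; only the producing and consuming
    applications agree on what they mean (here, UTF-8 text and compact JSON).
    """
    key_bytes = key.encode("utf-8") if key is not None else None
    value_bytes = json.dumps(value, separators=(",", ":")).encode("utf-8")
    return key_bytes, value_bytes


def deserialize(key_bytes: Optional[bytes], value_bytes: bytes) -> Tuple[Optional[str], dict]:
    """Hypothetical helper: reverse serialize() on the consuming side."""
    key = key_bytes.decode("utf-8") if key_bytes is not None else None
    return key, json.loads(value_bytes.decode("utf-8"))


if __name__ == "__main__":
    key_bytes, value_bytes = serialize("user-42", {"user_id": 42, "action": "login"})
    print(type(value_bytes).__name__, len(value_bytes))  # the value is plain bytes
    print(deserialize(key_bytes, value_bytes))
```

Any format works the same way, such as plain text, JSON, or a binary encoding, as long as the producer and the consumer agree on it.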

Syntax of Kafka message

There is no specific syntax for Kafka messages. To work with them, we need to understand what data goes in and what comes out, as well as how the data flows between the services involved. Depending on the requirements, we install a Kafka cluster and configure it accordingly. We often use the Kafka CLI scripts to work with messages. When managing a Kafka cluster, however, it is recommended to manage the configuration through a Kafka UI tool, which makes administration easier. For troubleshooting, we also need to examine the Kafka logs.

How do Kafka messages work?

As discussed, a Kafka message is simply an array of bytes, that is, data. The maximum message size depends on the configuration. By default, a Kafka broker accepts messages of up to about 1 MB, a limit controlled by the broker setting message.max.bytes. If an application needs larger messages, we can raise this limit by increasing the relevant values in the Kafka configuration. The right value depends on the system or application requirements.
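Besides the broker's `message.max.bytes`, the producer enforces its own `max.request.size` limit, so raising only one of them is usually not enough. The following Python sketch compares a payload's length against both limits. It assumes the default values documented for recent Kafka releases. `limits_exceeded` is a hypothetical helper, and the check is deliberately simplified, because real Kafka measures the whole record batch rather than the raw value.

```python
from typing import List

# Defaults as documented for recent Kafka releases (assumption: verify them
# against the documentation for the Kafka version you run).
BROKER_MESSAGE_MAX_BYTES = 1_048_588   # broker setting: message.max.bytes
PRODUCER_MAX_REQUEST_SIZE = 1_048_576  # producer setting: max.request.size


def limits_exceeded(payload: bytes,
                    broker_limit: int = BROKER_MESSAGE_MAX_BYTES,
                    producer_limit: int = PRODUCER_MAX_REQUEST_SIZE) -> List[str]:
    """Return the names of the size settings a payload of this length exceeds.

    Simplification: Kafka checks the whole record batch (key, headers and
    batch overhead, after compression), so a payload just under a limit
    can still be rejected.
    """
    exceeded = []
    if len(payload) > producer_limit:
        exceeded.append("max.request.size")
    if len(payload) > broker_limit:
        exceeded.append("message.max.bytes")
    return exceeded


if __name__ == "__main__":
    print(limits_exceeded(b"x" * 1_000))          # small message: no limit exceeded
    print(limits_exceeded(b"x" * 2 * 1_048_576))  # 2 MB: both defaults exceeded
    # Raising both settings (hypothetically to 5 MB) lets the 2 MB payload through.
    print(limits_exceeded(b"x" * 2 * 1_048_576, 5 * 1_048_576, 5 * 1_048_576))
```

Depending on the setup, the topic-level max.message.bytes setting and the consumer fetch settings may also need to be reviewed when message sizes grow.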

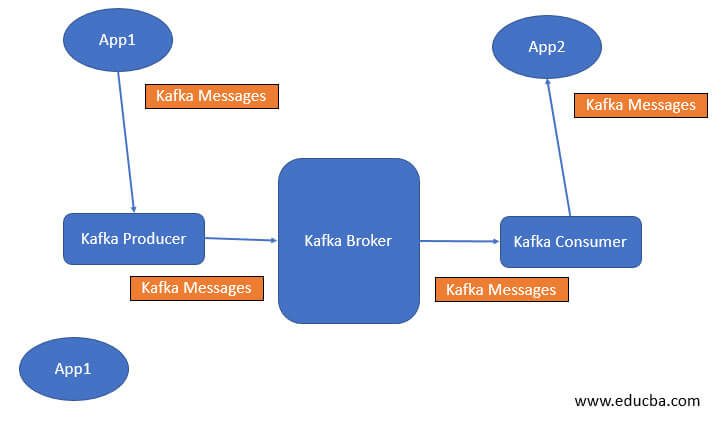

To understand Kafka messages, we first need to understand the basic Kafka components. Kafka has several components, each with a different role. They are listed below.

1) Kafka producer: The producer plays a key role for Kafka messages, because it is responsible for producing (writing) messages to Kafka topics. A Kafka environment can have many producers.

2) Kafka consumer: The consumer is responsible for reading (consuming) the messages that producers have written to a specific Kafka topic.

3) Kafka broker: Producers and consumers do not communicate with each other directly. Instead, the Kafka broker acts as the bridge between them: producers send messages to the broker, which stores them, and consumers fetch the messages from the broker.
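A toy in-memory model can make this division of roles concrete. The following sketch assumes a single broker with no partitions, replication, or networking. `ToyBroker`, `ToyProducer`, and `ToyConsumer` are hypothetical names and are not part of any Kafka client library.

```python
from collections import defaultdict
from typing import Dict, List


class ToyBroker:
    """In-memory stand-in for a Kafka broker (hypothetical, for illustration).

    Each topic is an append-only list of byte messages.
    """

    def __init__(self) -> None:
        self.topics: Dict[str, List[bytes]] = defaultdict(list)

    def append(self, topic: str, message: bytes) -> int:
        """Store a message and return its offset (its position in the log)."""
        self.topics[topic].append(message)
        return len(self.topics[topic]) - 1

    def read(self, topic: str, offset: int) -> List[bytes]:
        """Return every stored message in the topic from the given offset on."""
        return self.topics[topic][offset:]


class ToyProducer:
    def __init__(self, broker: ToyBroker) -> None:
        self.broker = broker

    def send(self, topic: str, message: bytes) -> int:
        # The producer talks only to the broker, never to a consumer.
        return self.broker.append(topic, message)


class ToyConsumer:
    def __init__(self, broker: ToyBroker) -> None:
        self.broker = broker

    def fetch(self, topic: str, offset: int = 0) -> List[bytes]:
        # The consumer also talks only to the broker.
        return self.broker.read(topic, offset)


if __name__ == "__main__":
    broker = ToyBroker()
    producer, consumer = ToyProducer(broker), ToyConsumer(broker)
    producer.send("test_topic", b"hello")
    producer.send("test_topic", b"world")
    print(consumer.fetch("test_topic"))
```

The producer and consumer objects never hold a reference to each other. The broker is the only shared piece, which mirrors the decoupling described above.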

In the flow diagram above, there are two applications, App1 and App2. App1 sends messages through a Kafka producer, which writes them to a specific topic on the Kafka broker. The broker handles the communication between the Kafka producer and the Kafka consumer, and App2 reads the messages through the consumer. The first time a consumer reads a topic, it can start from the beginning of the topic, for example with the --from-beginning option. On later reads, it consumes only the new messages. This is where offsets come into the picture. Every message in a topic partition has a sequential offset, and the consumer keeps track of (commits) the offset it has reached. On the next read, it resumes from that offset.
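The offset behaviour described above can be sketched with a consumer that remembers the next offset to read. This is a simplified model. `OffsetTrackingConsumer` is a hypothetical class, and it keeps committed offsets in a local dictionary, whereas real Kafka consumers commit offsets per partition and consumer group, and the offsets are stored on the Kafka side. As in the text, the first read starts at offset 0.

```python
from typing import Dict, List, Tuple


class OffsetTrackingConsumer:
    """Hypothetical consumer that remembers the next offset to read per topic."""

    def __init__(self) -> None:
        self.committed: Dict[str, int] = {}

    def poll(self, topic: str, log: List[bytes]) -> List[Tuple[int, bytes]]:
        start = self.committed.get(topic, 0)  # first read: start at offset 0
        batch = [(offset, log[offset]) for offset in range(start, len(log))]
        # Commit the offset of the *next* message to read, as Kafka does.
        self.committed[topic] = len(log)
        return batch


if __name__ == "__main__":
    log = [b"m0", b"m1"]
    consumer = OffsetTrackingConsumer()
    print(consumer.poll("test_topic", log))  # [(0, b'm0'), (1, b'm1')]
    log.append(b"m2")                        # a producer writes a new message
    print(consumer.poll("test_topic", log))  # [(2, b'm2')]
```

The second poll returns only the message written after the first poll, because the consumer resumes from its committed offset.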

Examples to understand Kafka messages

Given below are examples of working with Kafka messages:

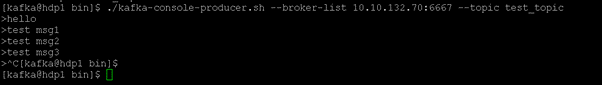

Kafka message: Push the Kafka Messages

In the Kafka environment, we push messages to a Kafka topic. The topic holds the messages for the configured retention period, which is seven days by default.
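Time-based retention can be pictured as discarding messages whose age exceeds the retention period. The following Python sketch assumes Kafka's default of 168 hours (log.retention.hours). `StoredMessage` and `apply_retention` are hypothetical names. The model is simplified, because real Kafka deletes whole log segments rather than individual messages.

```python
from dataclasses import dataclass
from typing import List

# 168 hours, matching Kafka's default log.retention.hours.
DEFAULT_RETENTION_MS = 7 * 24 * 60 * 60 * 1000


@dataclass
class StoredMessage:
    offset: int
    timestamp_ms: int
    value: bytes


def apply_retention(log: List[StoredMessage], now_ms: int,
                    retention_ms: int = DEFAULT_RETENTION_MS) -> List[StoredMessage]:
    """Keep only messages no older than the retention period (simplified).

    Real Kafka removes whole log segments once they are past retention, so
    some expired messages can remain readable for a while longer.
    Surviving messages keep their original offsets.
    """
    return [m for m in log if now_ms - m.timestamp_ms <= retention_ms]


if __name__ == "__main__":
    DAY_MS = 24 * 60 * 60 * 1000
    log = [StoredMessage(0, 0, b"old"), StoredMessage(1, 6 * DAY_MS, b"recent")]
    kept = apply_retention(log, now_ms=8 * DAY_MS)
    print([m.offset for m in kept])  # the 8-day-old message is gone
```

Offsets are not renumbered after old messages expire. This is why a consumer reading "from the beginning" may start at an offset greater than zero.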

Command:

./kafka-console-producer.sh --broker-list 10.10.132.70:6667 --topic test_topic

Explanation:

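In the above command, we run the “kafka-console-producer.sh” script. The --broker-list option takes the broker’s IP address and port, and the --topic option specifies the topic to which we push the messages. Each line typed at the producer prompt is sent as a separate message. In newer Kafka releases, the --bootstrap-server option replaces the deprecated --broker-list option.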

Output:

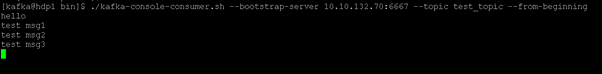
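Because the console producer sends each input line as its own message, we can model that mapping in a few lines of Python. This is a hedged sketch, not the console producer's actual code. `lines_to_messages` is a hypothetical helper. The optional key handling imitates the console producer's parse.key and key.separator properties, and the tab default for the separator is an assumption worth checking against the documentation for your Kafka version.

```python
from typing import Iterable, List, Optional, Tuple


def lines_to_messages(lines: Iterable[str], parse_key: bool = False,
                      key_separator: str = "\t") -> List[Tuple[Optional[bytes], bytes]]:
    """Sketch of how console input maps to (key, value) messages.

    Every input line becomes exactly one message. With parse_key=True, the
    text before the first separator is treated as the key and the rest as
    the value; a line without the separator is rejected.
    """
    messages = []
    for line in lines:
        text = line.rstrip("\n")
        if parse_key:
            if key_separator not in text:
                raise ValueError(f"no key separator found in line: {text!r}")
            key, value = text.split(key_separator, 1)
            messages.append((key.encode("utf-8"), value.encode("utf-8")))
        else:
            messages.append((None, text.encode("utf-8")))
    return messages


if __name__ == "__main__":
    print(lines_to_messages(["hello\n", "world\n"]))
    print(lines_to_messages(["user1:login\n"], parse_key=True, key_separator=":"))
```

On the command line, keyed input would correspond to adding --property parse.key=true --property key.separator=: to the producer command above.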

Kafka message: Get the Kafka Messages

To read messages from a topic, we use a Kafka consumer.

Command:

./kafka-console-consumer.sh --bootstrap-server 10.10.132.70:6667 --topic test_topic --from-beginning

Explanation:

In the above command, we consume the Kafka messages by running the “kafka-console-consumer.sh” script. The --bootstrap-server option takes the broker’s IP address and port, and the --topic option specifies the topic from which we read the messages. The --from-beginning option makes the consumer read all messages still retained in the topic instead of only new ones.

Output:

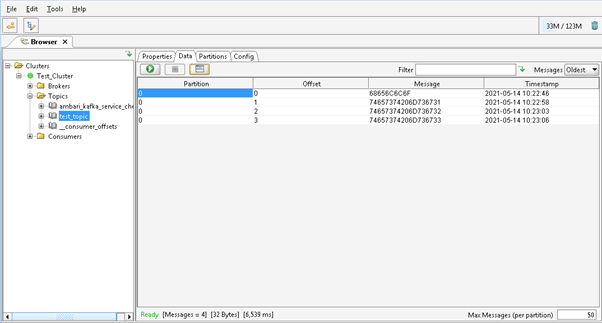
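Where a consumer starts reading can be summarized as a small decision rule. The following Python sketch follows the description in the console consumer's help text: --from-beginning applies only when the consumer has no established (committed) offset yet. `starting_offset` is a hypothetical helper. The sketch assumes that log_start is the earliest offset still retained, which can be greater than zero after retention has removed old messages.

```python
from typing import Optional


def starting_offset(committed: Optional[int], log_start: int, log_end: int,
                    from_beginning: bool) -> int:
    """Choose where a consumer starts reading a partition (simplified).

    A committed offset always wins. Without one, --from-beginning selects
    the earliest retained message; otherwise reading starts at the end,
    so only messages produced from now on are seen.
    """
    if committed is not None:
        return committed   # resume where the consumer group left off
    if from_beginning:
        return log_start   # earliest message still retained
    return log_end         # only new messages


if __name__ == "__main__":
    print(starting_offset(None, 0, 10, from_beginning=True))
    print(starting_offset(None, 0, 10, from_beginning=False))
    print(starting_offset(4, 0, 10, from_beginning=True))
```

By default, the console consumer joins a new, randomly named consumer group on each run, so it has no committed offset. That is why --from-beginning re-reads the whole topic every time in the example above.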

Kafka message: Check the message status on the Kafka tool

We normally work with Kafka from the command line, but we can also check the status of Kafka messages in a UI.

Command:

There is no syntax or command for this step. Instead, we configure the Kafka tool: we provide the ZooKeeper connection details and then connect to the Kafka cluster.

Explanation:

In the Kafka ecosystem, we can also view Kafka messages in a UI. As shown in the screenshot below, we have connected the Kafka cluster to the Kafka tool and can check the status of the Kafka messages.

Output:

Conclusion

We have covered the Kafka message concept with examples, explanations, and commands along with their outputs. Kafka messages are small or medium-sized pieces of data, stored and transmitted as simple arrays of bytes. Depending on the application requirements, Kafka can be integrated with many different applications.
