Updated March 15, 2023

Introduction to Kafka Rebalance

Kafka rebalance is the process of mapping each partition to exactly one consumer within a consumer group. A consumer group is a set of consumers that jointly consume messages from one or more Kafka topics. When a new consumer joins the group or an existing consumer leaves it, a rebalance takes place. During the rebalance, consumers may stop processing messages for some time, so the processing of events from a topic can be delayed.

What is Kafka rebalance?

A Kafka cluster contains one or more ‘brokers’. A ‘producer’ publishes data to the Kafka brokers, and a ‘consumer’ is an application that reads messages from a broker. The data is stored in topics. Each topic can have several ‘partitions’, and within a consumer group each partition is read by only one consumer at a time.

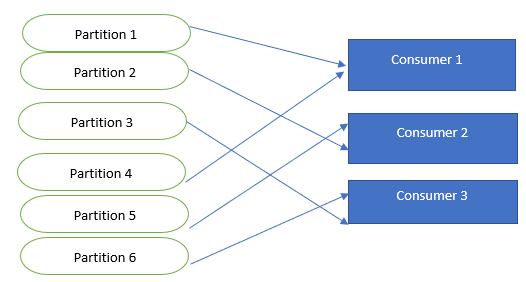

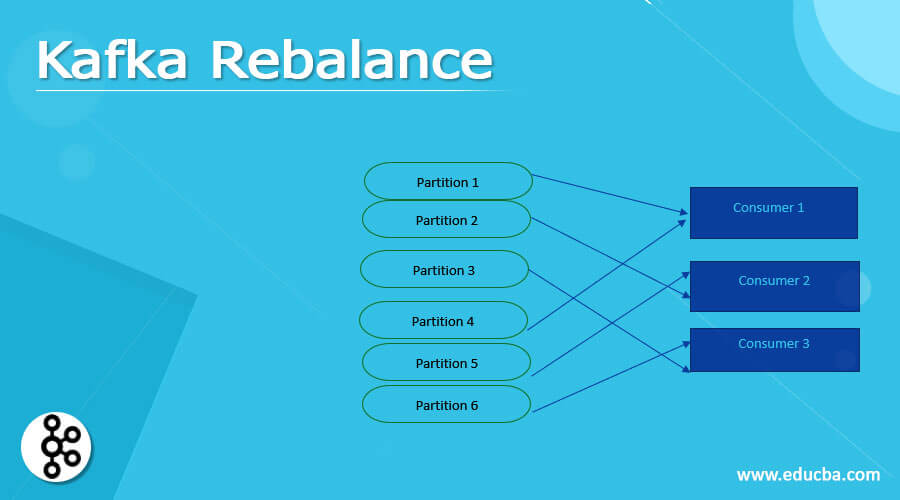

Kafka rebalancing is the process of mapping partitions to consumers in a specific manner. While a rebalance is in progress, partitions can be handed over to consumers incrementally.

The figures above show the assignment of the partitions.
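To make the mapping concrete, here is a minimal sketch of a round-robin assignment that is recomputed when a consumer joins the group. It only illustrates the idea: the function name `assign_partitions` and the consumer names are hypothetical, and Kafka's own assignors (range, round-robin, sticky, cooperative sticky) are more involved than this.

```python
from collections import defaultdict


def assign_partitions(partitions, consumers):
    """Spread partitions over consumers round-robin (illustrative only)."""
    members = sorted(consumers)
    if not members:
        return {}
    assignment = defaultdict(list)
    for index, partition in enumerate(sorted(partitions)):
        # Each partition goes to exactly one consumer in the group.
        assignment[members[index % len(members)]].append(partition)
    return dict(assignment)


partitions = [0, 1, 2, 3]

# Two consumers share four partitions.
before = assign_partitions(partitions, ["consumer-a", "consumer-b"])

# A third consumer joins the group, which triggers a rebalance.
after = assign_partitions(partitions, ["consumer-a", "consumer-b", "consumer-c"])

print(before)
print(after)
```

In both assignments every partition appears exactly once, which is the property a rebalance has to preserve.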

- Kafka rebalance terms:

Apache Kafka is a popular distributed event streaming platform used for data pipelines, streaming analytics, and data integration. As a cluster grows, we may need to identify problems, add capacity, and rebalance.

The key terms related to Kafka rebalance are given below:

- Topics: A Kafka cluster manages feeds of messages in categories known as topics.

- Brokers: A cluster consists of one or more servers, which are called brokers.

- Partitioned log: For every topic, Kafka maintains a partitioned log.

- A topic can contain several partitions, which act as the unit of parallelism.

- Replicas: The list of brokers that replicate the log for each partition.

Kafka Rebalance Process

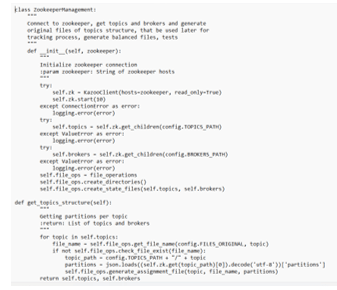

Step 1: Create the original file:

This step has two parts:

- Through ZooKeeper, obtain the current brokers, the topics, and the partitions of every topic.

- Generate the original assignment for every topic.

The original file is kept for two reasons:

- To roll back to the previous state of the cluster if anything goes wrong, so that we do not need to start over.

- To have the original assignment available for testing later.
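As a rough sketch of what such an original file might contain, the snippet below flattens a hard-coded cluster snapshot into one entry per topic partition. The snapshot, the topic names, and the function `build_assignment` are hypothetical. The layout (`version`, `partitions`, `topic`, `partition`, `replicas`) is modelled on the JSON accepted by Kafka's partition reassignment tool, and fetching the live state from ZooKeeper is left out.

```python
import json

# Hypothetical snapshot: topic -> partition -> list of replica broker ids.
cluster_state = {
    "orders": {0: [1, 2], 1: [2, 3]},
    "payments": {0: [3, 1]},
}


def build_assignment(state):
    """Flatten the snapshot into one entry per topic partition."""
    entries = [
        {"topic": topic, "partition": partition, "replicas": list(replicas)}
        for topic, parts in sorted(state.items())
        for partition, replicas in sorted(parts.items())
    ]
    return {"version": 1, "partitions": entries}


original = build_assignment(cluster_state)

# Serialised form; writing this string to a file gives the copy that can be
# used for a rollback or for comparison in later tests.
original_json = json.dumps(original, indent=2)
print(original_json)
```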

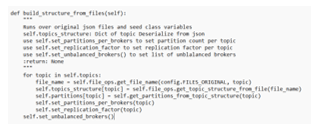

Step 2: Collect the data for calculating the rebalance

In this step, we collect:

- The topics considered in the files.

- The partitions for each topic.

- The partitions for each broker.

- The replication factor for each topic.

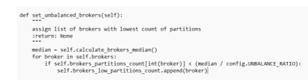

After that, we can set the unbalance threshold for the brokers to half of the median partition count. The unbalance ratio should be set lower when we want to tighten the rebalance.
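The data collection in this step can be sketched as follows. The assignment data and the function `collect_stats` are hypothetical, and the threshold rule is one possible reading of "half of the median partition count": a broker is flagged when its count deviates from the median by more than half the median.

```python
from collections import Counter
from statistics import median

# Hypothetical assignment: (topic, partition) -> replica broker ids.
assignment = {
    ("orders", 0): [1, 2],
    ("orders", 1): [1, 2],
    ("orders", 2): [1, 3],
    ("payments", 0): [1, 2],
}


def collect_stats(assignment):
    """Count partitions per topic and per broker, plus replication factors."""
    partitions_per_topic = Counter(topic for topic, _ in assignment)
    partitions_per_broker = Counter(
        broker for replicas in assignment.values() for broker in replicas
    )
    # Assumes every partition of a topic has the same number of replicas.
    replication_factor = {
        topic: len(replicas) for (topic, _), replicas in assignment.items()
    }
    return partitions_per_topic, partitions_per_broker, replication_factor


per_topic, per_broker, replication = collect_stats(assignment)
median_count = median(per_broker.values())

# Assumed reading of the rule: tolerate a deviation of half the median.
threshold = median_count / 2
unbalanced = sorted(
    broker
    for broker, count in per_broker.items()
    if abs(count - median_count) > threshold
)
print(per_broker, median_count, unbalanced)
```

Lowering `threshold` flags more brokers, which corresponds to a stricter rebalance.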

Step 3: Managing the topics and partitions and generating the migration files:

In this step, we loop over the topics and calculate the following for each one:

- The number of partitions to be changed for each topic.

- The brokers from which partitions should be removed and to which they should be added to improve the balance of the topic.

- The overloaded and underloaded brokers.

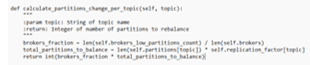

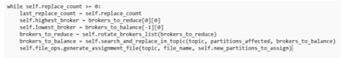

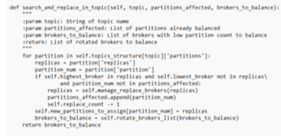

- Create a new balanced assignment file with minimal changes to the brokers. The partitions for each topic are calculated as follows:

- We process one topic at a time, applying only the changes given by the replace count, and then proceed to the next topic.

- The search-and-replace process looks for partitions on the brokers with the highest partition count.
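One way to picture the search-and-replace idea for a single topic is the greedy sketch below. It is an assumption about how such a step could work, not the method of any particular tool: it repeatedly moves one replica from the most loaded broker to the least loaded one until the counts differ by at most one. The function `rebalance_topic` and the sample data are hypothetical.

```python
from collections import Counter


def rebalance_topic(partitions, brokers):
    """Return (new_assignment, moves) for one topic.

    partitions: partition id -> list of replica broker ids.
    brokers: every broker id that may host replicas.
    """
    new = {p: list(replicas) for p, replicas in partitions.items()}
    moves = []
    while True:
        load = Counter({b: 0 for b in brokers})
        load.update(b for replicas in new.values() for b in replicas)
        over = max(brokers, key=lambda b: load[b])
        under = min(brokers, key=lambda b: load[b])
        if load[over] - load[under] <= 1:
            return new, moves
        # Pick a partition on the overloaded broker that is not yet on the
        # underloaded one, so no partition ends up twice on the same broker.
        candidates = [
            p for p, r in sorted(new.items()) if over in r and under not in r
        ]
        if not candidates:
            return new, moves
        partition = candidates[0]
        new[partition][new[partition].index(over)] = under
        moves.append((partition, over, under))


topic = {0: [1, 2], 1: [1, 2], 2: [1, 2], 3: [1, 3]}
proposed, moves = rebalance_topic(topic, [1, 2, 3])
print(proposed)
print(moves)
```

Each move is recorded as `(partition, from_broker, to_broker)`, so the length of `moves` is the number of changes the new assignment file introduces.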

Step 4: Streaming tests

In this step, we run the following tests to confirm the result:

- Test_changes_in_assignment: Checks that no partitions are changed beyond those required by the original topic balance.

- Test_if_topics_are_balanced: Checks whether any broker differs by more than 15% from the median number of partitions.

- Check_brokers_to_reduce: Checks that the brokers to reduce are a subset of the complete list of brokers.
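The three checks could look roughly like the sketch below. The function names, their signatures, and the sample assignments are hypothetical, and the exact meaning of each test is inferred from its one-line description above.

```python
from collections import Counter
from statistics import median


def changed_partitions(original, proposed):
    """Partitions whose replica list differs between the two assignments."""
    return sorted(p for p in original if original[p] != proposed.get(p))


def is_balanced(assignment, brokers, tolerance=0.15):
    """True if every broker is within `tolerance` of the median count."""
    load = Counter({b: 0 for b in brokers})
    load.update(b for replicas in assignment.values() for b in replicas)
    mid = median(load.values())
    return all(abs(count - mid) <= tolerance * mid for count in load.values())


def brokers_to_reduce_valid(brokers_to_reduce, all_brokers):
    """True if the brokers to reduce are a subset of all brokers."""
    return set(brokers_to_reduce) <= set(all_brokers)


# Hypothetical assignments: partition id -> replica broker ids.
original = {0: [1, 2], 1: [1, 2], 2: [1, 2], 3: [1, 3]}
proposed = {0: [3, 2], 1: [1, 2], 2: [1, 2], 3: [1, 3]}
even = {0: [1, 2], 1: [2, 3], 2: [3, 1]}

print(changed_partitions(original, proposed))
print(is_balanced(even, [1, 2, 3]), is_balanced(original, [1, 2, 3]))
print(brokers_to_reduce_valid([1], [1, 2, 3]))
```

With very small partition counts a 15% tolerance is strict, since a difference of a single partition can already exceed it.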

Kafka rebalance consumer

A consumer is a process that reads from a topic and processes the messages. A topic may have several partitions, which are hosted by brokers. A consumer group may contain several consumers, and consumers in the same group do not consume the same message. If the same message has to be consumed by multiple consumers, those consumers must belong to different consumer groups.

Ideally there is a one-to-one relationship between the number of consumers and the number of partitions. If there are fewer consumers than partitions, some consumers have to read from several partitions, which may affect throughput. Every consumer knows only its own assignment. The group leader, which is also a consumer, keeps track of the other consumers in the group and their assignments, so a new consumer can be given its share of the work. Every partition also has an offset that stores the index of the consumed messages.
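The role of the offset, and the difference between consumers in the same group and in different groups, can be shown with a small in-memory model. Everything here is hypothetical (the log contents, the group names, and the `poll` helper), and committing inside `poll` is a simplification: in Kafka, offset commits are a separate operation.

```python
# One partition of a topic, modelled as an append-only list (hypothetical data).
partition_log = ["m0", "m1", "m2", "m3"]

# Committed offset per (group, partition): index of the next message to read.
committed = {}


def poll(group, partition_id, log, max_records):
    """Read up to max_records from the group's committed offset, then commit."""
    start = committed.get((group, partition_id), 0)
    records = log[start:start + max_records]
    committed[(group, partition_id)] = start + len(records)
    return records


# A consumer in group "billing" reads two messages, then leaves the group.
first = poll("billing", 0, partition_log, 2)

# After the rebalance, another member of "billing" resumes from offset 2.
second = poll("billing", 0, partition_log, 2)

# A different group keeps its own offset, so it sees every message again.
other = poll("audit", 0, partition_log, 4)

print(first, second, other)
```

Because the offset is stored per group and partition rather than per consumer, the partition can move to another member of the group during a rebalance without messages being read twice within that group.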

Conclusion

In this article, we have seen that Kafka rebalancing is the process of reassigning partitions to the consumers of a group, during which message processing may pause for some time. We have also discussed the Kafka rebalance terms, the Kafka rebalance process, and the role of consumers in a rebalance, so this article should help in understanding the concept of the Kafka rebalance.
