Updated March 15, 2023

Introduction to Kafka Security

Apache Kafka is a central messaging layer that lets back-end systems share real-time data with each other through Kafka topics. Producers write data to topics, and consumers read that data. Because many consumers may be able to read the same data, and some data should be shared only with particular users, the data needs to be secured against all other consumers.

Overview of Kafka security

Kafka can support both an end-to-end data security model and a cell-based security model. The cell-based model builds on access control lists (ACLs), the Simple Authentication and Security Layer (SASL), and topic-level security. Providing this kind of security requires structured data, access control lists, broker-side authentication, and message-level security. A realistic use case is a bank: different consumers and users need different levels of access, so that sensitive information such as card numbers and other personal IDs stays restricted. To support this, security metadata is attached to each message: the data is structured as valid JSON, the message header carries the security data, and messages can be filtered by that security data. The approach remains backward compatible with existing producers, consumers, and brokers.
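The idea of attaching security metadata to a message header and filtering on it can be sketched in a few lines of Java. This is a toy model under stated assumptions, not Kafka's API: the class `SecuredMessage`, the header key `security-label`, and the label values are hypothetical names chosen only for illustration.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration only: none of these names are Kafka APIs.
final class SecuredMessage {
    // Assumed header key that carries the security label of the message.
    static final String LABEL_HEADER = "security-label";

    final Map<String, String> headers = new HashMap<>();
    final String jsonPayload;

    SecuredMessage(String securityLabel, String jsonPayload) {
        this.headers.put(LABEL_HEADER, securityLabel);
        this.jsonPayload = jsonPayload;
    }

    // A consumer may read the payload only if it holds the message's label.
    boolean readableBy(String... consumerLabels) {
        return Arrays.asList(consumerLabels).contains(headers.get(LABEL_HEADER));
    }
}
```

A consumer holding the labels `public` and `card-data` could read a message labelled `card-data`, while a consumer holding only `public` could not. Keeping the label in the header rather than in the JSON body means it can be checked without parsing the payload.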

Kafka security problems and solutions

Kafka security has three elements:

1. Encryption of data in-flight using SSL/TLS:

This encrypts data between producers and Kafka as well as between consumers and Kafka. It is the same widely used mechanism that protects traffic on the web.

2. Authentication using SSL or SASL:

This lets producers and consumers authenticate to the Kafka cluster using SSL or SASL, which verifies their identity. It is a secure way for a client to establish an identity, and that identity is then used for authorization.

3. Authorization using ACLs:

Once a client is authenticated, the Kafka brokers check it against access control lists (ACLs) to decide whether that specific client is authorized to write to or read from a given topic.
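As a rough sketch of how the first two elements look on the client side, the helper below builds settings that combine TLS encryption with SASL/SCRAM authentication. The configuration keys are standard Kafka client settings; the class name `SecureClientConfig`, the host, the truststore path, and the credentials are placeholders assumed for illustration.

```java
import java.util.Properties;

// Hypothetical helper; only the configuration keys and values are Kafka's.
final class SecureClientConfig {
    static Properties saslSslScram(String username, String password) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "kafka.example.com:9093"); // placeholder host
        // Element 1 (encryption) and element 2 (authentication) in one protocol.
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "SCRAM-SHA-512");
        // JAAS line that tells the client which login module and credentials to use.
        props.put("sasl.jaas.config",
            "org.apache.kafka.common.security.scram.ScramLoginModule required "
                + "username=\"" + username + "\" password=\"" + password + "\";");
        // Truststore used to verify the broker certificate (placeholder path and password).
        props.put("ssl.truststore.location", "/etc/security/tls/kafka.client.truststore.jks");
        props.put("ssl.truststore.password", "test1234");
        return props;
    }
}
```

The third element, authorization, does not appear in the client configuration: ACLs are defined on the cluster side for the principal that the client authenticates as.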

Kafka security models

1. PLAINTEXT:

With PLAINTEXT, data is sent unencrypted, and no authentication or authorization is required, so the data is not secure. PLAINTEXT is suitable only for a proof of concept and should not be used in environments that require strong data security.

2. SSL:

SSL stands for Secure Sockets Layer and can be used for both encryption and authentication. An application that uses SSL must first be configured for it. With one-way authentication, the client authenticates the certificate provided by the server; with two-way authentication, the broker also authenticates the client certificate. Enabling SSL can affect performance because of the encryption overhead.

3. SASL:

SASL stands for Simple Authentication and Security Layer. It is a framework for data security and user authentication over a network connection. Kafka can authenticate clients via SASL. Several SASL mechanisms can be enabled on the broker at the same time, but each client has to select only one of them.

SASL supports several mechanisms:

- GSSAPI (Kerberos): if Kerberos is already in use, there is no need to install a new server just for Kafka.
- PLAIN: authenticates with a username and password; Kafka ships with a default implementation.
- SCRAM: a family of SASL mechanisms that addresses the security concerns of traditional username and password authentication.
- OAUTHBEARER: based on the OAuth 2 authorization framework, which allows a third-party application to obtain limited access to an HTTP service.
- Delegation tokens: complement the existing SASL mechanisms; they are shared secrets between the Kafka brokers and the clients.
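In practice, SASL is combined with either an unencrypted or a TLS-encrypted connection, so Kafka's `security.protocol` setting accepts four values: PLAINTEXT, SSL, SASL_PLAINTEXT, and SASL_SSL. The enum below is a small sketch that summarizes what each value provides. The constant names mirror Kafka's values, but the enum `ProtocolSummary` and its `choose` method are hypothetical and not part of Kafka.

```java
// Hypothetical helper: constant names mirror the values of Kafka's
// security.protocol setting; the enum itself is not part of Kafka.
enum ProtocolSummary {
    PLAINTEXT(false, false),      // no encryption, no authentication
    SSL(true, false),             // TLS encryption, optional certificate-based client authentication
    SASL_PLAINTEXT(false, true),  // SASL authentication over an unencrypted connection
    SASL_SSL(true, true);         // SASL authentication over a TLS-encrypted connection

    final boolean encrypted;
    final boolean saslAuthentication;

    ProtocolSummary(boolean encrypted, boolean saslAuthentication) {
        this.encrypted = encrypted;
        this.saslAuthentication = saslAuthentication;
    }

    // Picks the protocol that matches the two requirements.
    static ProtocolSummary choose(boolean needEncryption, boolean needSasl) {
        for (ProtocolSummary p : values()) {
            if (p.encrypted == needEncryption && p.saslAuthentication == needSasl) {
                return p;
            }
        }
        throw new AssertionError("unreachable: all four combinations are covered");
    }
}
```

For example, a client that sends a username and password with the PLAIN mechanism would normally want `ProtocolSummary.choose(true, true)`, which yields SASL_SSL, so that the credentials do not cross the network unencrypted.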

Example

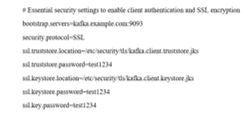

Let us see an example in which the Kafka brokers in the cluster already have their security set up and the necessary SSL certificates are available to the application.

- For example, if we are using Docker, the SSL certificates have to be added at the proper location in the image. The configuration below enables client authentication and SSL encryption for data transferred between the Kafka Streams application and the Kafka cluster it reads from and writes to:

bootstrap.servers=kafka.example.com:9093

security.protocol=SSL

ssl.truststore.location=/etc/security/tls/kafka.client.truststore.jks

ssl.truststore.password=test1234

ssl.keystore.location=/etc/security/tls/kafka.client.keystore.jks

ssl.keystore.password=test1234

ssl.key.password=test1234

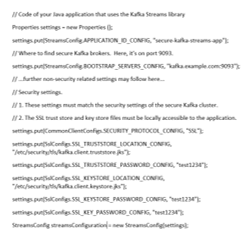
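Settings like the ones above are often kept in a properties file rather than in source code. The following is a minimal sketch, using only the Java standard library, of loading such a file and failing early when a key is missing. The class name `ClientSettingsLoader` and the choice of which keys to treat as required are assumptions made for this example.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical loader; the list of required keys is an assumption for this sketch.
final class ClientSettingsLoader {
    private static final String[] REQUIRED = {
        "bootstrap.servers",
        "security.protocol",
        "ssl.truststore.location",
        "ssl.truststore.password"
    };

    // Reads key=value settings and rejects input that lacks a required key.
    static Properties load(Reader source) {
        Properties props = new Properties();
        try {
            props.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        for (String key : REQUIRED) {
            if (props.getProperty(key) == null) {
                throw new IllegalArgumentException("Missing required setting: " + key);
            }
        }
        return props;
    }
}
```

In an application, the `Reader` would typically come from a file such as a `client.properties` on disk (a hypothetical file name), and the returned `Properties` object would be passed to the Kafka client or to the Streams configuration.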

- The same settings can be configured in the application through a ‘StreamsConfig’ instance. They encrypt any data read from or written to Kafka, and the application authenticates itself to the Kafka brokers it interacts with. Note that this example does not cover client authorization:

import java.util.Properties;

import org.apache.kafka.clients.CommonClientConfigs;

import org.apache.kafka.common.config.SslConfigs;

import org.apache.kafka.streams.StreamsConfig;

Properties settings = new Properties();

settings.put(StreamsConfig.APPLICATION_ID_CONFIG, "secure-kafka-streams-app");

settings.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "kafka.example.com:9093");

// Encrypt traffic and authenticate with certificates

settings.put(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG, "SSL");

settings.put(SslConfigs.SSL_TRUSTSTORE_LOCATION_CONFIG, "/etc/security/tls/kafka.client.truststore.jks");

settings.put(SslConfigs.SSL_TRUSTSTORE_PASSWORD_CONFIG, "test1234");

settings.put(SslConfigs.SSL_KEYSTORE_LOCATION_CONFIG, "/etc/security/tls/kafka.client.keystore.jks");

settings.put(SslConfigs.SSL_KEYSTORE_PASSWORD_CONFIG, "test1234");

settings.put(SslConfigs.SSL_KEY_PASSWORD_CONFIG, "test1234");

StreamsConfig streamsConfiguration = new StreamsConfig(settings);

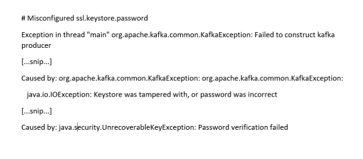

- If the application is configured incorrectly, it will typically fail at runtime shortly after it starts. For example, if we enter the wrong password for the ‘ssl.keystore.password’ setting, an error message is logged and the application terminates:

Exception in thread "main" org.apache.kafka.common.KafkaException: Failed to construct kafka producer

[…snip…]

Caused by: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: java.io.IOException: Keystore was tampered with, or password was incorrect

[…snip…]

Caused by: java.security.UnrecoverableKeyException: Password verification failed
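The last two causes in this trace come from the JDK's own keystore loading, so the password can be checked before any Kafka client is constructed. The sketch below uses only `java.security.KeyStore`; the class name `KeystorePasswordCheck` and both method names are hypothetical, and the in-memory keystore exists only to make the example self-contained.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.security.UnrecoverableKeyException;

// Hypothetical pre-flight check; not part of Kafka.
final class KeystorePasswordCheck {
    // Returns true if the store opens with the password,
    // false if the JDK reports that the password is wrong.
    static boolean opens(byte[] storeBytes, String storeType, char[] password) {
        try (InputStream in = new ByteArrayInputStream(storeBytes)) {
            KeyStore.getInstance(storeType).load(in, password);
            return true;
        } catch (IOException e) {
            // A wrong password surfaces as an IOException caused by UnrecoverableKeyException.
            if (e.getCause() instanceof UnrecoverableKeyException) {
                return false;
            }
            throw new IllegalStateException("Keystore could not be read", e);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Keystore could not be loaded", e);
        }
    }

    // Builds an empty in-memory keystore, used here only to demonstrate the check.
    static byte[] emptyStore(String storeType, char[] password) {
        try {
            KeyStore ks = KeyStore.getInstance(storeType);
            ks.load(null, password);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            ks.store(out, password);
            return out.toByteArray();
        } catch (IOException | GeneralSecurityException e) {
            throw new IllegalStateException("Keystore could not be created", e);
        }
    }
}
```

With a real deployment, the bytes would be read from the file named in ‘ssl.keystore.location’, and a `false` result could be reported with a clearer message than the nested exception chain above.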

Conclusion

Kafka security matters whenever data is shared, because the data needs to be protected from consumers that should not read it. This article covered the main Kafka security problems and their solutions, the security models, and an example configuration, which together should help in understanding the concept of Kafka security.
