Updated March 16, 2023

Introduction to Keras Custom Loss Function

A Keras custom loss function is a user-defined loss function for a neural network. In Keras, the loss function measures the prediction error of the network, that is, how far its predictions are from the true values. A custom loss function lets us define exactly how that error is calculated. The loss is used to calculate the gradients of the network, and the gradients are used to update its weights.

Key Takeaways

- A loss function is used to evaluate how well an ML model fits the observations in a dataset. The lower the loss, the better the performance.

- The loss is calculated for each single observation, and the cost function averages these losses over the whole dataset.
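To make the per-observation versus averaged distinction concrete, here is a minimal NumPy sketch. The squared-error loss and the sample values are assumptions chosen for illustration; any per-sample loss would be averaged the same way.

```python
import numpy as np

def per_sample_loss(y_true, y_pred):
    # Squared error for each single observation (illustrative choice of loss)
    return (y_true - y_pred) ** 2

y_true = np.array([1.0, 2.0, 3.0])  # hypothetical targets
y_pred = np.array([1.5, 2.0, 2.0])  # hypothetical predictions

losses = per_sample_loss(y_true, y_pred)  # one loss value per observation
cost = losses.mean()                      # cost: average loss over the dataset
print(losses)
print(cost)
```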

What is a Custom Loss Function?

In deep learning, the gradients of the loss are computed with respect to the model's weights. The custom loss is calculated and the network is updated after each iteration, with the goal of improving the desired evaluation metric, for example the F1 score or the AUC on the validation set of an ML project.

The loss function, also known as the cost function, is a core part of machine learning. It is the quantity we minimize so that the model's output gets closer to the expected output. In deep learning, the gradients of the loss with respect to the model's weights are computed by backpropagation and used to update those weights.
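The loss, gradient, and weight-update cycle described above can be sketched with tf.GradientTape. This is a minimal sketch assuming TensorFlow 2.x; the one-layer model, the random data, and the learning rate are hypothetical, and custom_mse is an illustrative custom loss, not a Keras built-in.

```python
import tensorflow as tf
from tensorflow import keras

def custom_mse(y_true, y_pred):
    # Illustrative custom loss: per-sample mean squared error
    return tf.reduce_mean(tf.square(y_true - y_pred), axis=-1)

model = keras.Sequential([keras.Input(shape=(3,)), keras.layers.Dense(1)])
optimizer = keras.optimizers.SGD(learning_rate=0.01)

x = tf.random.uniform((8, 3))  # hypothetical inputs
y = tf.random.uniform((8, 1))  # hypothetical targets

with tf.GradientTape() as tape:
    y_pred = model(x, training=True)
    loss = tf.reduce_mean(custom_mse(y, y_pred))  # average over the batch

# Backpropagation: gradients of the loss with respect to every trainable weight
grads = tape.gradient(loss, model.trainable_variables)
# One update step: the optimizer moves each weight against its gradient
optimizer.apply_gradients(zip(grads, model.trainable_variables))
print(float(loss))
```

In a real training loop these steps repeat for every batch; model.fit() runs an equivalent loop internally.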

Why Use a Custom Loss Function?

Sometimes a model whose predictions are more accurate is not better for the business, because the data science metric is misaligned with the business metric. In that case a custom loss function is crucial for achieving the business objective, since it lets training penalize the errors that matter most to the business. In essence, the loss function evaluates how well the machine learning model performs on the observations in the dataset.

Training a machine learning model means using an optimization algorithm such as gradient descent to vary the model's parameters so that the loss function is minimized. Put simply, the loss function works as a compass that guides the optimizer.
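As one illustration of aligning the loss with a business objective, suppose (hypothetically) that under-predicting demand costs three times as much as over-predicting it. Below is a minimal sketch of such an asymmetric squared-error loss, assuming TensorFlow 2.x; the weights 3.0 and 1.0 are made-up values and asymmetric_mse is a hypothetical name.

```python
import numpy as np
import tensorflow as tf

UNDER_WEIGHT = 3.0  # hypothetical cost multiplier for under-prediction
OVER_WEIGHT = 1.0   # hypothetical cost multiplier for over-prediction

def asymmetric_mse(y_true, y_pred):
    error = y_true - y_pred  # positive when the model under-predicts
    weights = tf.where(error > 0, UNDER_WEIGHT, OVER_WEIGHT)
    # Weighted squared error, averaged per sample like Keras built-in losses
    return tf.reduce_mean(weights * tf.square(error), axis=-1)

y_true = np.array([[10.0, 10.0]], dtype="float32")
under = asymmetric_mse(y_true, np.array([[8.0, 8.0]], dtype="float32"))
over = asymmetric_mse(y_true, np.array([[12.0, 12.0]], dtype="float32"))
print(under.numpy(), over.numpy())
```

Both predictions are off by the same amount, but the under-prediction is penalized three times as heavily. Like any loss with the (y_true, y_pred) signature, it could be passed to model.compile(loss=asymmetric_mse, ...).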
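To show the "compass" idea in isolation, here is a minimal gradient descent sketch in plain NumPy for a one-parameter model y ≈ w·x. The data, learning rate, and number of steps are hypothetical, and the gradient of the mean squared error is derived by hand rather than computed by a framework.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])
y = 2.0 * x  # hypothetical data generated with a true slope of 2

def loss(w):
    # Mean squared error of the model y_hat = w * x
    return np.mean((y - w * x) ** 2)

def grad(w):
    # d(loss)/dw = mean(-2 * x * (y - w * x))
    return np.mean(-2.0 * x * (y - w * x))

w = 0.0              # initial parameter
learning_rate = 0.05
history = []
for step in range(100):
    history.append(loss(w))
    w -= learning_rate * grad(w)  # move w in the direction that lowers the loss

print(round(w, 4))
```

The gradient tells the optimizer which way the loss increases, so stepping against it steadily drives w toward the value that minimizes the loss.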

To use a loss function in Keras, follow the steps below:

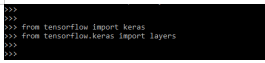

1. First, import the keras and layers modules using the import keyword.

Code:

```python
from tensorflow import keras
from tensorflow.keras import layers
```

Output:

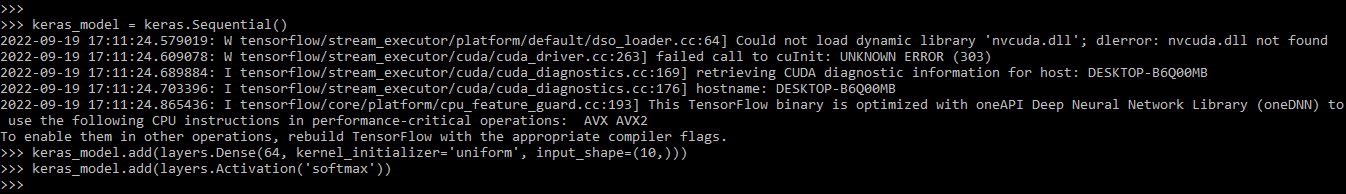

2. Next, create a Sequential model and add layers to it with the add method.

Code:

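```python
keras_model = keras.Sequential()
# add() needs a layer; declare the input shape, then add a Dense layer
keras_model.add(keras.Input(shape=(10,)))
keras_model.add(layers.Dense(64, kernel_initializer='uniform'))
keras_model.add(layers.Activation('softmax'))
```

Output: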

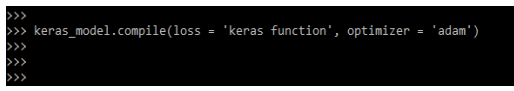

3. Then compile the model, passing the loss and the optimizer. Here the optimizer is 'adam' with its default parameters, and the loss is given as the name of a built-in Keras loss.

Code:

```python
keras_model.compile(loss='sparse_categorical_crossentropy', optimizer='adam')
```

Output:

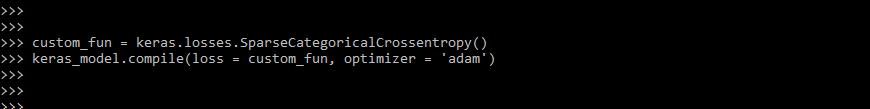

4. Instead of a name, we can create a loss function object and pass it to compile.

Code:

```python
custom_fun = keras.losses.SparseCategoricalCrossentropy()
keras_model.compile(loss=custom_fun, optimizer='adam')
```

Output:

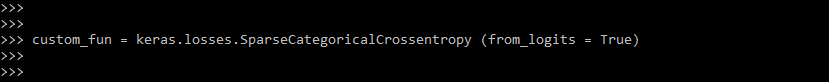

5. Loss classes also accept configuration arguments in their constructor, such as from_logits:

Code:

```python
custom_fun = keras.losses.SparseCategoricalCrossentropy(from_logits=True)
```

Output:
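Putting steps 1 to 5 together, the sketch below compiles and trains a small model with a configured SparseCategoricalCrossentropy. It assumes TensorFlow 2.x; the input size of 10, the three classes, and the random training data are hypothetical. Because from_logits=True, the last layer outputs raw scores and has no softmax activation.

```python
import numpy as np
from tensorflow import keras

num_classes = 3  # hypothetical number of classes

keras_model = keras.Sequential([
    keras.Input(shape=(10,)),                 # hypothetical input size
    keras.layers.Dense(64, activation="relu"),
    keras.layers.Dense(num_classes),          # raw logits: no softmax here
])

# from_logits=True: the loss applies softmax to the logits internally
custom_fun = keras.losses.SparseCategoricalCrossentropy(from_logits=True)
keras_model.compile(loss=custom_fun, optimizer="adam")

x = np.random.rand(32, 10).astype("float32")       # random features
y = np.random.randint(0, num_classes, size=(32,))  # integer class labels
history = keras_model.fit(x, y, epochs=2, verbose=0)
print(history.history["loss"])
```

Letting the loss work on logits is generally more numerically stable than applying softmax in the model and then taking the logarithm inside the loss.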

How to Create a Keras Custom Loss Function?

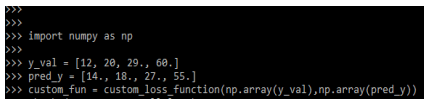

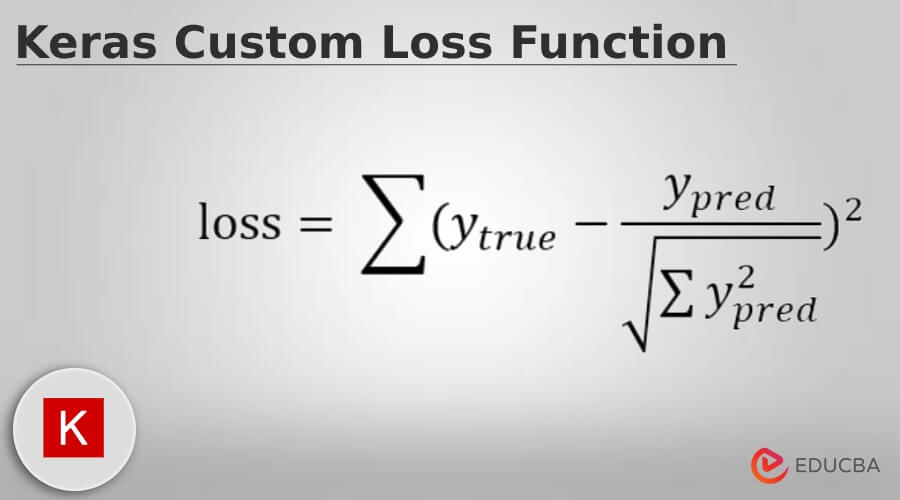

A custom loss function is created by defining a function that takes the true values and the predicted values as its required parameters, in the order (y_true, y_pred), and returns the computed losses. The function is then passed to the model at the compile stage. The example below applies a custom loss function to an array of predicted values.

Code:

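```python
import numpy as np
import tensorflow as tf

# Assumed definition: mean squared error between true and predicted values
def custom_loss_function(y_true, y_pred):
    return tf.reduce_mean(tf.square(y_true - y_pred), axis=-1)

y_val = [12, 20, 29., 60.]
pred_y = [14., 18., 27., 55.]
custom_fun = custom_loss_function(np.array(y_val), np.array(pred_y))
custom_fun.numpy()  # 9.25
```

Output: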

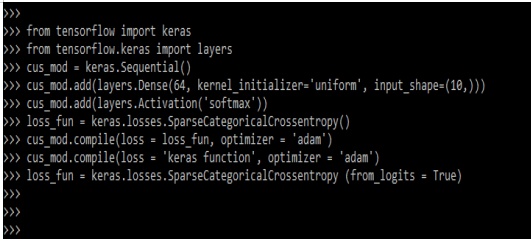
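The example above only evaluates the function on arrays. To train with it, the function itself (not its result) is passed to compile(). A minimal sketch, assuming TensorFlow 2.x; custom_loss_function is assumed to be a mean squared error, and the model and random data are hypothetical.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

def custom_loss_function(y_true, y_pred):
    # Assumed definition: per-sample mean squared error
    return tf.reduce_mean(tf.square(y_true - y_pred), axis=-1)

model = keras.Sequential([keras.Input(shape=(4,)), keras.layers.Dense(1)])
# Pass the function object; Keras calls it as loss(y_true, y_pred) on each batch
model.compile(optimizer="adam", loss=custom_loss_function)

x = np.random.rand(32, 4).astype("float32")  # hypothetical features
y = np.random.rand(32, 1).astype("float32")  # hypothetical targets
history = model.fit(x, y, epochs=3, verbose=0)
print(history.history["loss"])
```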

In the example below, we combine the previous steps to build and compile a model with a Keras loss function. First we import the keras and layers modules.

Code:

```python
from tensorflow import keras
from tensorflow.keras import layers

cus_mod = keras.Sequential()
cus_mod.add(keras.Input(shape=(10,)))
cus_mod.add(layers.Dense(64, kernel_initializer='uniform'))
cus_mod.add(layers.Activation('softmax'))

# Pass a loss object...
loss_fun = keras.losses.SparseCategoricalCrossentropy()
cus_mod.compile(loss=loss_fun, optimizer='adam')

# ...or the name of a built-in loss
cus_mod.compile(loss='sparse_categorical_crossentropy', optimizer='adam')

# Loss objects accept configuration arguments
loss_fun = keras.losses.SparseCategoricalCrossentropy(from_logits=True)
```

Output:

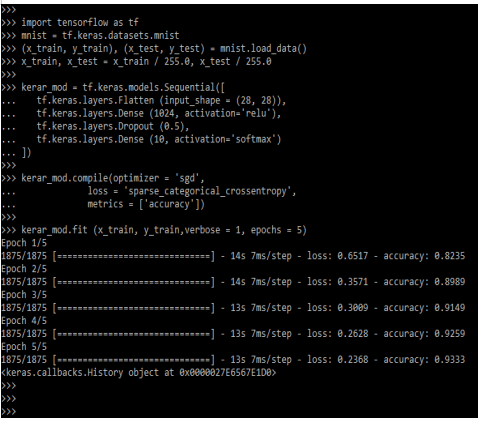

The example below shows how to monitor the Keras loss through the console logs that fit() prints when verbose=1.

Code:

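```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist

# ….

keras_mod = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(1024, activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(10, activation='softmax')
])

# compile() needs a loss; MNIST labels are integers, so use sparse categorical cross entropy
keras_mod.compile(optimizer='adam', loss='sparse_categorical_crossentropy')

# verbose=1 prints the loss for each epoch to the console
keras_mod.fit(x_train, y_train, verbose=1, epochs=5)
```

Output: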
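Besides the console log, the loss values can be read programmatically: fit() returns a History object whose history["loss"] lists the loss for each epoch, and keras.callbacks.LambdaCallback can run code at the end of each epoch. A minimal sketch with a hypothetical model and random data, assuming TensorFlow 2.x; record_loss and epoch_losses are illustrative names.

```python
import numpy as np
from tensorflow import keras

epoch_losses = []
# Callback (illustrative name) that records the loss reported after each epoch
record_loss = keras.callbacks.LambdaCallback(
    on_epoch_end=lambda epoch, logs: epoch_losses.append(logs["loss"])
)

model = keras.Sequential([keras.Input(shape=(5,)), keras.layers.Dense(1)])
model.compile(optimizer="adam", loss="mse")

x = np.random.rand(64, 5).astype("float32")  # hypothetical features
y = np.random.rand(64, 1).astype("float32")  # hypothetical targets
history = model.fit(x, y, epochs=3, verbose=0, callbacks=[record_loss])

# History.history["loss"] holds the same per-epoch values
print(history.history["loss"])
print(epoch_losses)
```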

Keras Custom Loss Function Classification

Classification problems are problems in which we predict labels: the output is always one of a fixed set of specified labels.

Two common classification losses are:

1. Cross Entropy – This is the most widely used classification loss. It measures the degree of difference between the true label distribution and the predicted probability distribution. Keras provides three variants: binary, categorical, and sparse categorical cross entropy.

2. Binary Cross Entropy – This is the cross entropy computed between two classes. Below is an example of binary cross entropy.

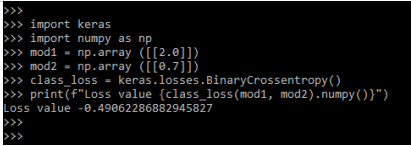

Code:

```python
import keras
import numpy as np

y_true = np.array([[1.0]])  # binary labels must be 0 or 1
y_pred = np.array([[0.7]])  # predicted probability of class 1

class_loss = keras.losses.BinaryCrossentropy()
print(f"Loss value {class_loss(y_true, y_pred).numpy()}")
```

Output:
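For a predicted probability p of class 1 and a true label y in {0, 1}, binary cross entropy is -(y·log(p) + (1 - y)·log(1 - p)), averaged over all elements. The NumPy sketch below reproduces the Keras value for the example above, assuming clipping with 1e-7 (the Keras default epsilon) to avoid log(0); binary_cross_entropy is a hypothetical helper name.

```python
import numpy as np
from tensorflow import keras

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    # Clip predictions away from 0 and 1 so the logarithms stay finite
    y_pred = np.clip(y_pred, eps, 1 - eps)
    per_element = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return per_element.mean()

y_true = np.array([[1.0]])
y_pred = np.array([[0.7]])
manual = binary_cross_entropy(y_true, y_pred)
builtin = keras.losses.BinaryCrossentropy()(y_true, y_pred).numpy()
print(manual, builtin)
```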

We can also create a CategoricalCrossentropy object from keras.losses and call it on the true and predicted values.

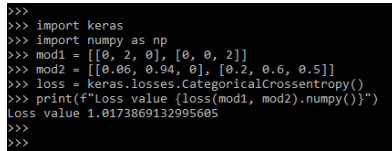

Code:

```python
import keras
import numpy as np

y_true = [[0, 1, 0], [0, 0, 1]]                # one-hot labels
y_pred = [[0.06, 0.94, 0], [0.2, 0.3, 0.5]]    # predicted class probabilities

loss = keras.losses.CategoricalCrossentropy()
print(f"Loss value {loss(y_true, y_pred).numpy()}")
```

Output:

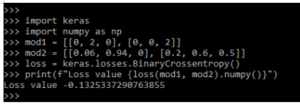
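Categorical cross entropy sums -y·log(p) over the classes of each sample and then averages over the samples. The NumPy sketch below is intended to mirror what keras.losses.CategoricalCrossentropy does for probability inputs: it rescales each row of predictions to sum to 1 and clips it before taking the logarithm. The data and the helper name categorical_cross_entropy are hypothetical.

```python
import numpy as np
from tensorflow import keras

def categorical_cross_entropy(y_true, y_pred, eps=1e-7):
    # Rescale each row to sum to 1, then clip to avoid log(0)
    y_pred = y_pred / y_pred.sum(axis=-1, keepdims=True)
    y_pred = np.clip(y_pred, eps, 1 - eps)
    per_sample = -(y_true * np.log(y_pred)).sum(axis=-1)
    return per_sample.mean()

y_true = np.array([[0, 1, 0], [0, 0, 1]], dtype="float32")
y_pred = np.array([[0.05, 0.95, 0.0], [0.1, 0.8, 0.1]], dtype="float32")
manual = categorical_cross_entropy(y_true, y_pred)
builtin = keras.losses.CategoricalCrossentropy()(y_true, y_pred).numpy()
print(manual, builtin)
```

Only the log-probability of the true class contributes, so the second sample, which assigns just 0.1 to its true class, dominates the loss.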

Binary cross entropy is used when the problem has two classes. When it is applied to several columns at once, as below, each column is treated as an independent binary label and the losses are averaged.

Code:

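```python
import keras
import numpy as np

y_true = [[0, 1, 0], [0, 0, 1]]                # each column is a separate 0/1 label
y_pred = [[0.06, 0.94, 0], [0.2, 0.3, 0.5]]    # predicted probability for each column

loss = keras.losses.BinaryCrossentropy()
print(f"Loss value {loss(y_true, y_pred).numpy()}")
```

Output: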

Keras Custom Loss Function for Multiple Classes

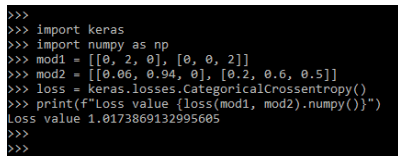

Problems with more than two classes use other loss functions. Categorical cross entropy computes the cross entropy loss between the true classes, given as one-hot vectors, and the predicted class probabilities. Below is an example of categorical cross entropy.

Code:

```python
import keras
import numpy as np

y_true = [[0, 1, 0], [0, 0, 1]]                # one-hot labels
y_pred = [[0.06, 0.94, 0], [0.2, 0.3, 0.5]]    # predicted class probabilities

loss = keras.losses.CategoricalCrossentropy()
print(f"Loss value {loss(y_true, y_pred).numpy()}")
```

Output:

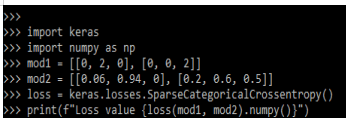

If we have two or more classes and the labels are provided as integers rather than one-hot vectors, we can use sparse categorical cross entropy instead:

Code:

```python
import keras
import numpy as np

y_true = [1, 2]                                # integer class indices, not one-hot vectors
y_pred = [[0.06, 0.94, 0], [0.2, 0.3, 0.5]]    # predicted class probabilities

loss = keras.losses.SparseCategoricalCrossentropy()
print(f"Loss value {loss(y_true, y_pred).numpy()}")
```

Output:
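Sparse categorical cross entropy is the same loss as categorical cross entropy; only the label format differs. The sketch below converts hypothetical integer labels to one-hot vectors with np.eye and checks that both Keras losses give the same value (assuming TensorFlow 2.x).

```python
import numpy as np
from tensorflow import keras

y_pred = np.array([[0.05, 0.95, 0.0], [0.1, 0.8, 0.1]], dtype="float32")
int_labels = np.array([1, 2])                      # hypothetical integer labels
one_hot = np.eye(3, dtype="float32")[int_labels]   # same labels as one-hot rows

sparse = keras.losses.SparseCategoricalCrossentropy()(int_labels, y_pred).numpy()
dense = keras.losses.CategoricalCrossentropy()(one_hot, y_pred).numpy()
print(sparse, dense)
```

Integer labels avoid building one-hot arrays, which saves memory when there are many classes.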

FAQ

Given below are some frequently asked questions:

Q1. What is the use of a custom loss function in Keras?

Answer: The main purpose of a Keras loss function, custom or built-in, is to compute the quantity that the model should seek to minimize during training.

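Q2. What is the use of the add_loss() API in Keras?

Answer: When writing the call method of a custom layer or a subclassed model, we may want to compute extra scalar quantities to minimize during training, such as regularization losses. The add_loss() method keeps track of these loss terms so that they are added to the main loss.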
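A minimal sketch of add_loss(), assuming TensorFlow 2.x. ActivationPenalty is a hypothetical layer name and the penalty rate of 0.01 is an arbitrary choice; the layer passes its inputs through unchanged and registers a penalty on their squared magnitude, which Keras adds to the compiled loss during fit().

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

class ActivationPenalty(keras.layers.Layer):
    """Hypothetical layer that penalizes large activations via add_loss()."""

    def __init__(self, rate=1e-2, **kwargs):
        super().__init__(**kwargs)
        self.rate = rate

    def call(self, inputs):
        # Register an extra scalar loss term for this call
        self.add_loss(self.rate * tf.reduce_sum(tf.square(inputs)))
        return inputs  # the activations themselves are unchanged

model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    ActivationPenalty(rate=1e-2),
    keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

x = np.random.rand(32, 4).astype("float32")  # hypothetical features
y = np.random.rand(32, 1).astype("float32")  # hypothetical targets
history = model.fit(x, y, epochs=2, verbose=0)
print(history.history["loss"])
```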

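Q3. Which loss functions are available in Keras?

Answer: Keras provides built-in loss functions for binary and multiclass classification, such as BinaryCrossentropy, CategoricalCrossentropy, and SparseCategoricalCrossentropy, and these can be used alongside custom loss functions.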

Conclusion

The loss function, also known as the cost function, is a core part of machine learning. In Keras, the loss function measures the prediction error of a neural network, and a custom loss function lets us define exactly how that error is calculated.
