Updated March 15, 2023

Introduction to Keras Dropout

Keras dropout is a mechanism that reduces the odds of overfitting by randomly dropping (skipping) neurons of the neural network during training. When training uses minibatches, a new random set of neurons is dropped for every individual minibatch.
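To make the per-minibatch behaviour concrete, here is a minimal pure-Python sketch. It is not Keras code, and the helpers `bernoulli_mask` and `apply_mask` are hypothetical. Each unit is kept with probability p = 1 - rate (one Bernoulli draw per unit), and a fresh mask is drawn for every minibatch, so a different set of neurons is skipped each time. The sketch leaves out the rescaling of kept units that Keras also performs during training.

```python
import random


def bernoulli_mask(n_units, rate, rng):
    """Hypothetical helper: return a 0/1 mask, 1 = keep the unit, 0 = drop it.

    Each unit is kept independently with probability p = 1 - rate.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep_prob = 1.0 - rate
    return [1 if rng.random() < keep_prob else 0 for _ in range(n_units)]


def apply_mask(activations, mask):
    """Zero the activations of dropped units; kept units pass through unchanged."""
    return [a * m for a, m in zip(activations, mask)]


rng = random.Random(0)      # fixed seed only so the demo is repeatable
activations = [0.5] * 8     # toy hidden layer with 8 units
for batch in range(3):
    mask = bernoulli_mask(len(activations), rate=0.5, rng=rng)  # fresh mask per minibatch
    print(f"minibatch {batch}: mask={mask} -> {apply_mask(activations, mask)}")
```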

What is Keras dropout?

In theory, Keras dropout is a mechanism for reducing the odds of overfitting by skipping randomly chosen neurons of the neural network at each training step.

A Bernoulli random variable is attached to each neuron to decide whether its output is dropped. Each variable takes the value 1 (keep) with probability p and 0 (drop) with probability 1 - p. Because any given unit may be absent on a given training step, no unit can rely on the presence of particular other units. This prevents the network from forming complex co-adaptations that fit the training data but do not generalize to unseen data, which reduces overfitting. At a high level, building a Keras model with dropout involves the following steps –

- Take your input dataset. For example, the CIFAR-10 dataset contains 60,000 small (32 × 32) color images of objects such as ships, frogs, trucks, automobiles, horses, and cats, each labeled with one of ten classes. CIFAR-10 is a common choice in examples that build neural networks.

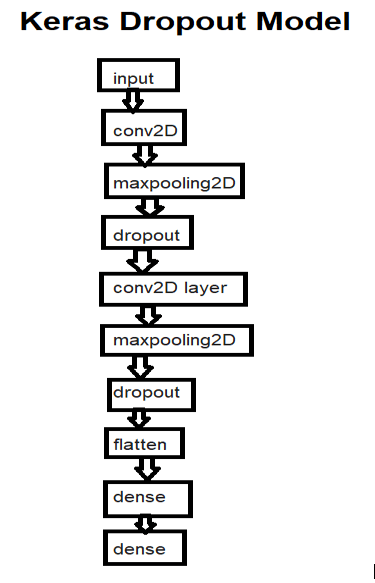

- Define the model architecture – the architecture defines the layers of the Keras model. In this example, the layers are: a Conv2D layer that receives the input, MaxPooling2D, Dropout, a second Conv2D layer, MaxPooling2D, Dropout, Flatten, a hidden Dense layer, and a Dense output layer.

- Run the model. This requires a Keras backend such as TensorFlow, in a Python environment.
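The layer stack described above can be checked with a little arithmetic. The sketch below is plain Python, not a Keras API, and the helper names are hypothetical. It assumes the settings used in this article's code (64 filters with 3 × 3 kernels, Keras' default "valid" padding and stride 1, 2 × 2 max pooling, a 32 × 32 × 3 input) and traces the output shape of each layer. Dropout appears in the trace only to show that it leaves the shape unchanged.

```python
def conv2d_valid(h, w, kernel=3):
    """Output height/width of a stride-1 convolution with 'valid' padding."""
    return h - kernel + 1, w - kernel + 1


def maxpool(h, w, pool=2):
    """Output height/width of max pooling whose stride equals the pool size."""
    return (h - pool) // pool + 1, (w - pool) // pool + 1


def trace_shapes(h=32, w=32, channels=3, filters=64, hidden=256, classes=10):
    """Return (layer name, output shape) pairs for the article's architecture."""
    shapes = [("input", (h, w, channels))]
    for _ in range(2):                      # two Conv2D -> MaxPooling2D -> Dropout blocks
        h, w = conv2d_valid(h, w)
        shapes.append(("conv2d", (h, w, filters)))
        h, w = maxpool(h, w)
        shapes.append(("maxpooling2d", (h, w, filters)))
        shapes.append(("dropout", (h, w, filters)))  # dropout does not change the shape
    shapes.append(("flatten", (h * w * filters,)))
    shapes.append(("dense", (hidden,)))
    shapes.append(("dense_output", (classes,)))
    return shapes


for name, shape in trace_shapes():
    print(f"{name:<14}{shape}")
```

With these assumptions the Flatten layer feeds 6 × 6 × 64 = 2304 values into the hidden Dense layer.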

How to use Keras dropout?

To get a general idea of how to use Keras dropout, consider a convnet (a convolutional neural network classifier) that uses dropout. The steps are listed below –

- Import the required libraries, layers, and classes.

- Set the model configuration: batch size, image height and width, verbosity, number of classes, number of epochs, max-norm value, validation split, and so on.

- Load the data and prepare it for training, for example, the CIFAR-10 dataset.

- Define the architecture by creating a model, for example of the Sequential type, and adding the required layers to it with model.add(). We can add the same layers discussed earlier.

- Compile and train the model – first compile it with the compile() method, passing the loss function, the optimizer, and the metrics to track. Then train it with the fit() method, passing the training inputs and targets, the number of epochs, the validation split, the verbosity, and the batch size.

- Evaluate the model – call the model's evaluate() method and pass the test inputs, the test targets, and the verbosity.
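The data-preparation step above (scaling pixel values and one-hot encoding the labels) can be illustrated without any framework. This is a minimal sketch on toy Python lists rather than the NumPy arrays that `cifar10.load_data()` returns; `scale_pixels` and `one_hot` are hypothetical helpers, with `one_hot` mimicking what `keras.utils.to_categorical` produces for integer labels.

```python
def scale_pixels(pixels):
    """Map 8-bit pixel values (0-255) to floats in [0, 1], like dividing by 255."""
    return [p / 255 for p in pixels]


def one_hot(labels, num_classes):
    """Pure-Python stand-in for keras.utils.to_categorical on integer labels."""
    encoded = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} outside 0..{num_classes - 1}")
        row = [0.0] * num_classes
        row[label] = 1.0            # a single 1.0 marks the class
        encoded.append(row)
    return encoded


print(scale_pixels([0, 51, 255]))   # [0.0, 0.2, 1.0]
print(one_hot([3, 0], num_classes=10))
```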

Keras dropout model

A Keras dropout model is a Keras model that contains one or more Dropout layers. During training, each Dropout layer randomly skips neurons of the network, which reduces the overall odds of overfitting.

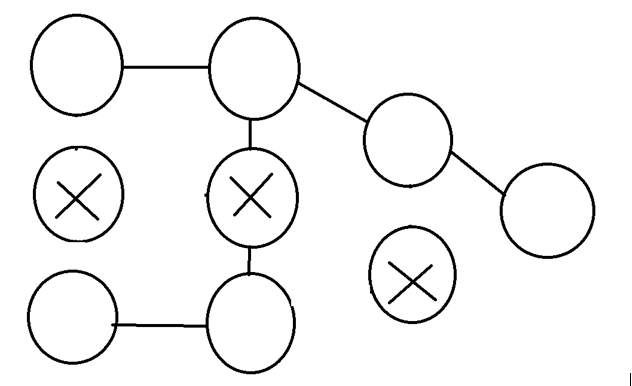

In the neural network shown in the diagram above, the model consists of 9 neurons. When dropout is applied, three of them are dropped (skipped) on that training step. Which neurons are skipped is decided by random Bernoulli draws governed by p, so a different subset can be dropped on the next step. This randomness is what helps reduce overfitting in the Keras model.
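The diagram does not state p, so the sketch below assumes a drop rate of 1/3 (p = 2/3), one value consistent with three of nine neurons being dropped on average. It is plain Python with a hypothetical helper `count_dropped`, and it shows that the expected number of dropped neurons is rate × 9 = 3 while the actual count varies from step to step.

```python
import random


def count_dropped(n_units, rate, rng):
    """Number of units zeroed in one training step (each dropped with probability rate)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    return sum(1 for _ in range(n_units) if rng.random() < rate)


n_units, rate = 9, 1 / 3          # assumed rate; the diagram only shows 3 of 9 dropped
print("expected dropped per step:", n_units * rate)

rng = random.Random(42)
counts = [count_dropped(n_units, rate, rng) for _ in range(10)]
print("dropped in 10 sampled steps:", counts)   # varies from step to step
```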

To build the dropout Keras model used in this article, the following layers are added in order: a Conv2D layer that receives the input, MaxPooling2D, Dropout, a second Conv2D layer, MaxPooling2D, Dropout, Flatten, a hidden Dense layer, and a Dense output layer.

Keras dropout API

Keras contains a core layer for dropout, with the following signature –

keras.layers.Dropout(rate, noise_shape=None, seed=None)

We can add this layer to a Keras model with the model.add() method. The Dropout layer itself takes the following parameters –

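- noise_shape – the shape of the binary dropout mask that is multiplied with the input. Use it to share the same mask across particular axes (timesteps, batches, or features). For example, if the inputs have shape (batch_size, timesteps, features) and the mask should be the same for all timesteps, set noise_shape=(batch_size, 1, features).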

- rate – the fraction of input units to drop, a float between 0 and 1; its complement p = 1 - rate is the probability of keeping a neuron. Use a validation set to find the value that works best. Keras has no default for rate, but if you have not tuned it, common starting points are a rate of 0.5 for hidden layers and 0.1 for input layers (that is, p = 0.9). Note that Keras's logic is upside down relative to p: rate gives the odds of dropping a neuron, not of keeping it.

- seed – an integer that fixes the pseudo-random generator which draws the Bernoulli values (0 or 1), so the dropout masks are reproducible between runs.
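The noise_shape and seed parameters can be illustrated with a small pure-Python sketch. It is not the Keras implementation: `dropout_mask` and `apply_broadcast` are hypothetical helpers, and only the timestep axis is broadcast here. A mask of shape (batch, 1, features) is drawn once per sample and reused for every timestep, and passing the same integer seed reproduces the same mask.

```python
import random


def dropout_mask(noise_shape, rate, seed=None):
    """Hypothetical helper: draw a 0/1 mask of shape (batch, timesteps, features)."""
    rng = random.Random(seed)     # same integer seed -> same mask
    b, t, f = noise_shape
    return [[[1 if rng.random() >= rate else 0 for _ in range(f)]
             for _ in range(t)] for _ in range(b)]


def apply_broadcast(inputs, mask):
    """Multiply inputs by mask; a mask timestep axis of size 1 is reused for every timestep."""
    out = []
    for i, sample in enumerate(inputs):
        rows = []
        for j, step in enumerate(sample):
            m = mask[i][j if len(mask[i]) > 1 else 0]
            rows.append([x * k for x, k in zip(step, m)])
        out.append(rows)
    return out


batch, timesteps, features = 2, 4, 3
inputs = [[[1.0] * features for _ in range(timesteps)] for _ in range(batch)]
mask = dropout_mask((batch, 1, features), rate=0.5, seed=7)  # shared across timesteps
for sample in apply_broadcast(inputs, mask):
    print(sample)   # every timestep row within a sample is identical
```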

Note that the specified rate gives the odds of dropping a neuron from the neural network rather than keeping it, so rate = 1 - p. For example, to keep 80% of the neurons in the model, p must be 0.80, and the rate will be 0.20.
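The relationship between p and rate, and what Keras does with the kept units, can be written out in a few lines. This pure-Python sketch is not the Keras implementation; `keras_style_dropout` is a hypothetical function that mirrors the behaviour described in the Keras documentation for Dropout: during training, dropped values become 0 and kept values are scaled by 1 / (1 - rate) so the expected sum is unchanged, while at inference (training=False) the input passes through untouched.

```python
import random


def keras_style_dropout(inputs, rate, training, rng):
    """Sketch of documented Keras Dropout behaviour on a flat list of values.

    training=True : each value is zeroed with probability `rate`; survivors are
                    scaled by 1 / (1 - rate).
    training=False: inputs pass through unchanged (inference).
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(inputs)
    scale = 1.0 / (1.0 - rate)
    return [x * scale if rng.random() >= rate else 0.0 for x in inputs]


keep_prob = 0.80                  # p: fraction of neurons kept
rate = 1.0 - keep_prob            # Keras rate = 1 - p
print(f"p = {keep_prob}, rate = {rate:.2f}, scale = {1 / (1 - rate):.2f}")

x = [1.0] * 10
print(keras_style_dropout(x, rate, training=True, rng=random.Random(0)))
print(keras_style_dropout(x, rate, training=False, rng=random.Random(0)))
```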

Keras dropout model code

Let us consider one example –

import keras
from keras.datasets import cifar10
from keras.models import Sequential
from keras.layers import Dense, Dropout, Flatten
from keras.layers import Conv2D, MaxPooling2D
from keras import backend as K
from keras.constraints import MaxNorm

# Model configuration
widthOfImage, heightOfImage = 32, 32
sizeOfBatch = 250
noEpochs = 55
noClasses = 10
validationSplit = 0.2
specifiedVerboseValue = 1
maximumNormalizationValue = 2.0

# Load CIFAR-10: 50,000 training and 10,000 test images (32 x 32 RGB, 10 classes)
(inputForTraining, targetForTraining), (inputForTesting, targetForTesting) = cifar10.load_data()

# Set the input shape to match the backend's channel ordering
if K.image_data_format() == 'channels_first':
    inputForTraining = inputForTraining.reshape(inputForTraining.shape[0], 3, widthOfImage, heightOfImage)
    inputForTesting = inputForTesting.reshape(inputForTesting.shape[0], 3, widthOfImage, heightOfImage)
    shapeOfInput = (3, widthOfImage, heightOfImage)
else:
    inputForTraining = inputForTraining.reshape(inputForTraining.shape[0], widthOfImage, heightOfImage, 3)
    inputForTesting = inputForTesting.reshape(inputForTesting.shape[0], widthOfImage, heightOfImage, 3)
    shapeOfInput = (widthOfImage, heightOfImage, 3)

# Convert to float and scale pixel values from 0-255 to 0-1
inputForTraining = inputForTraining.astype('float32') / 255
inputForTesting = inputForTesting.astype('float32') / 255

# One-hot encode the integer class labels
targetForTraining = keras.utils.to_categorical(targetForTraining, noClasses)
targetForTesting = keras.utils.to_categorical(targetForTesting, noClasses)

# Two Conv2D + MaxPooling2D blocks, each followed by Dropout(0.5), then a dense classifier.
# MaxNorm constrains the norm of each unit's incoming weights to at most maximumNormalizationValue.
sampleEducbaModel = Sequential()
sampleEducbaModel.add(Conv2D(64, kernel_size=(3, 3), kernel_constraint=MaxNorm(maximumNormalizationValue),
                             activation='relu', input_shape=shapeOfInput, kernel_initializer='he_uniform'))
sampleEducbaModel.add(MaxPooling2D(pool_size=(2, 2)))
sampleEducbaModel.add(Dropout(0.50))  # drop 50% of this block's outputs at each training step
sampleEducbaModel.add(Conv2D(64, kernel_size=(3, 3), kernel_constraint=MaxNorm(maximumNormalizationValue),
                             activation='relu', kernel_initializer='he_uniform'))
sampleEducbaModel.add(MaxPooling2D(pool_size=(2, 2)))
sampleEducbaModel.add(Dropout(0.50))
sampleEducbaModel.add(Flatten())
sampleEducbaModel.add(Dense(256, activation='relu', kernel_constraint=MaxNorm(maximumNormalizationValue),
                            kernel_initializer='he_uniform'))
sampleEducbaModel.add(Dense(noClasses, activation='softmax'))

# Categorical cross-entropy matches the one-hot targets
sampleEducbaModel.compile(loss=keras.losses.categorical_crossentropy,
                          optimizer=keras.optimizers.Adam(),
                          metrics=['accuracy'])

# Train; fit() runs the Dropout layers in training mode automatically
sampleEducbaModel.fit(inputForTraining, targetForTraining,
                      batch_size=sizeOfBatch,
                      epochs=noEpochs,
                      verbose=specifiedVerboseValue,
                      validation_split=validationSplit)

# Evaluate on the test set; no units are dropped during evaluation
generatedScoreResult = sampleEducbaModel.evaluate(inputForTesting, targetForTesting, verbose=0)
print(f'Loss in testing: {generatedScoreResult[0]} / Accuracy for testing: {generatedScoreResult[1]}')

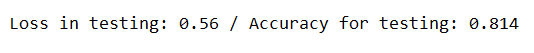

The execution of the above code gives the following output for the first attempt –

For the second attempt, it gives –

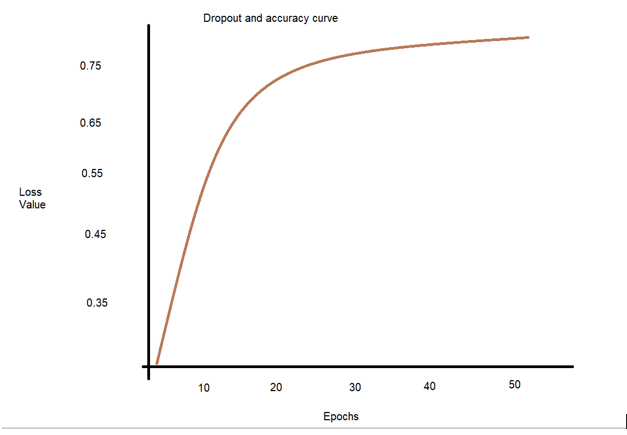

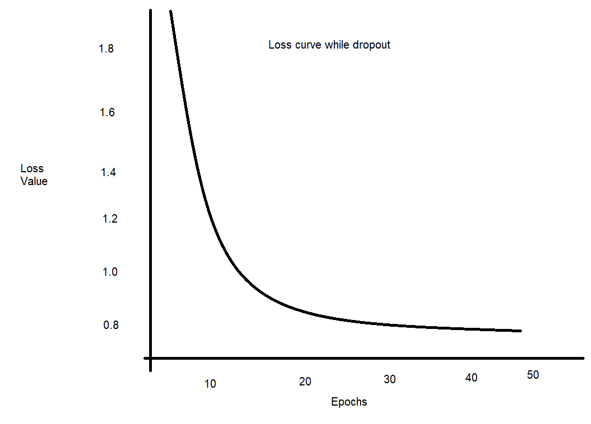

Graphically accuracy and loss can be displayed as –

We can observe that there has been a substantial decrease in the overfitting of the model.

Conclusion

Keras dropout reduces the odds of overfitting by randomly skipping neurons of the model at each training step.
