Updated March 14, 2023

Definition of Keras Early Stopping

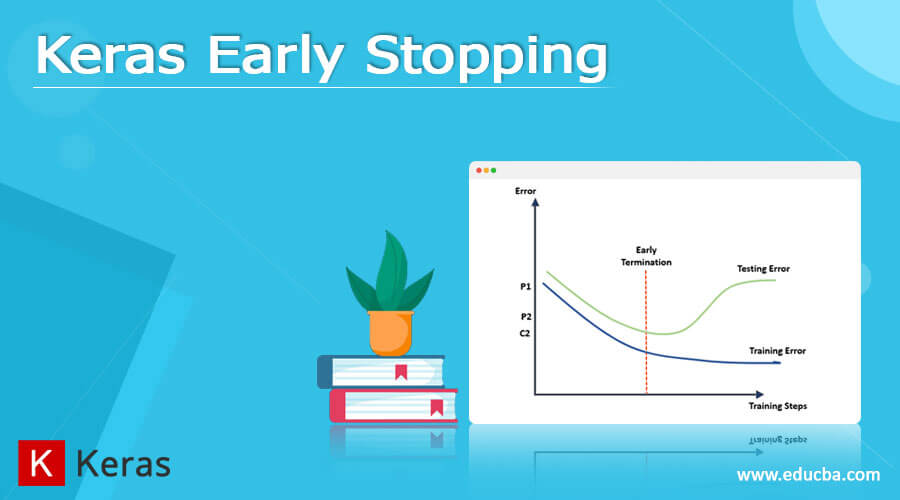

Keras early stopping halts the training of a neural network once further training no longer helps. Training for too many epochs tends to overfit the training dataset, so it is hard to pick the right number of epochs in advance. A common approach is to set a large, arbitrary number of training epochs and evaluate the model on a validation dataset as training proceeds, measuring performance with a chosen metric. Keras provides an EarlyStopping class whose parameters control when training stops, which helps minimize the loss without spending time on epochs that bring no improvement.

Overview of Keras early stopping

- The Keras EarlyStopping class provides parameters that stop training as soon as the monitored metric stops improving.

- The main goal of early stopping in Keras is to balance training time against minimizing the loss.

- A typical metric to monitor is “loss”, which should be minimized, so the mode is ‘min’.

- The model.fit() training loop checks the monitored value at the end of every epoch.

- Whether training continues depends on whether the loss is still decreasing (or, for a metric such as accuracy, still increasing), according to the arguments passed to the callback.

- If the monitored metric stops improving, the callback stops the training process, which saves computation and keeps the network from overfitting.

- Early stopping is implemented as a callback that monitors a performance measure during training.

- Depending on the metric, the callback looks for either a minimum (for example, loss) or a maximum (for example, accuracy) of the monitored value.

- It is a good fit for reducing overfitting, for example of an MLP on a simple binary classification problem.

- By default, even a marginal change in the desired direction is counted as an improvement.

- A threshold value (min_delta) can be set to decide whether a change counts as an improvement or not.
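The improvement rule described in the list above can be sketched without Keras at all. The following is a minimal, framework-free illustration, not the library's implementation: the function name `epochs_until_stop` and the sample loss values are made up for this sketch, and it only mirrors the documented meaning of the `mode`, `min_delta`, and `patience` arguments.

```python
def epochs_until_stop(values, patience=2, min_delta=0.0, mode="min"):
    """Return how many epochs run before an early-stopping rule would halt.

    Hypothetical helper for illustration only; it is not part of Keras.
    """
    if mode not in ("min", "max"):
        raise ValueError("mode must be 'min' or 'max' in this sketch")

    best = None  # best value of the monitored quantity seen so far
    wait = 0     # consecutive epochs without an improvement

    for epoch, value in enumerate(values, start=1):
        if best is None:
            improved = True
        elif mode == "min":
            # Lower is better; the drop must exceed min_delta to count.
            improved = value < best - min_delta
        else:
            # Higher is better; the rise must exceed min_delta to count.
            improved = value > best + min_delta

        if improved:
            best = value
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch  # training would stop after this epoch

    return len(values)  # the rule never triggered


# Made-up per-epoch losses for the demonstration.
losses = [0.90, 0.70, 0.69, 0.695, 0.68, 0.68, 0.68]
print(epochs_until_stop(losses, patience=2, min_delta=0.0))   # 7
print(epochs_until_stop(losses, patience=2, min_delta=0.05))  # 4
```

With `min_delta=0.0` every small drop resets the counter, so all seven epochs run. With `min_delta=0.05` the small drops after the second epoch no longer count, and the rule stops after epoch 4.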

Keras early stopping class

- EarlyStopping is a built-in callback class of the Keras library. Its parameters, including a threshold for what counts as an improvement, control when training stops.

- Passing the callback to training lets these parameters control how long the neural network is fitted to the dataset.

- As mentioned, the monitored metric should keep improving. The usual choice is “loss”, which Keras records in the logs for each epoch, so the improvement (or lack of it) is visible to the end user.

- The model.fit() training loop checks at the end of each epoch whether the monitored quantity is still improving. The quantity is looked up in the logs dict, which contains the loss and the metrics passed to model.compile().

- When the callback sets model.stop_training to True, the training loop terminates.

- The monitored quantity must be available in the logs dict. To make it available, pass the loss or metrics to the model at compile time.
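The interaction between `model.fit()`, the `logs` dict, and `model.stop_training` can be illustrated with a small custom callback. This is a sketch under assumptions: the class name `StopBelowLoss`, the threshold of 0.05, and the synthetic data are invented for the example. Only `tf.keras.callbacks.Callback`, its `on_epoch_end` hook, and the `stop_training` attribute come from Keras.

```python
import numpy as np
import tensorflow as tf


class StopBelowLoss(tf.keras.callbacks.Callback):
    """Hypothetical callback: stop once the training loss drops below a threshold."""

    def __init__(self, threshold):
        super().__init__()
        self.threshold = threshold

    def on_epoch_end(self, epoch, logs=None):
        # Keras passes the values computed for this epoch in `logs`.
        logs = logs or {}
        loss = logs.get("loss")
        if loss is not None and loss < self.threshold:
            # fit() checks this flag and ends the training loop.
            self.model.stop_training = True


# Synthetic regression data (an assumption made for this sketch).
rng = np.random.default_rng(0)
X = rng.normal(size=(64, 4)).astype("float32")
y = X @ np.array([[1.0], [-2.0], [0.5], [3.0]], dtype="float32")

model = tf.keras.Sequential([tf.keras.Input(shape=(4,)), tf.keras.layers.Dense(1)])
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1), loss="mse")

history = model.fit(X, y, epochs=50, batch_size=16, verbose=0,
                    callbacks=[StopBelowLoss(threshold=0.05)])
print("epochs run:", len(history.history["loss"]))
```

The built-in EarlyStopping class uses the same mechanism, but instead of a fixed threshold it tracks whether the monitored value is still improving.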

The EarlyStopping class accepts the following parameters:

– mode: One of “auto”, “min”, or “max”. In min mode, training stops when the monitored quantity has stopped decreasing; in max mode, it stops when the quantity has stopped increasing; in auto mode, the direction is inferred automatically from the name of the monitored quantity.

– monitor: The quantity to be monitored, for example “loss” or “val_loss”.

– baseline: A baseline (threshold) value for the monitored quantity. Training stops if the model does not show improvement over the baseline.

– verbose: The verbosity mode. When enabled, a message is displayed when the callback stops training.

– patience: The number of epochs with no improvement after which training is stopped.

– restore_best_weights: A Boolean that controls whether to restore the model weights from the epoch with the best value of the monitored quantity. If it is False, which is the default, the weights from the last step of training are used. If no epoch improves on the baseline, training runs for patience epochs and the weights from the best epoch in that set are restored.

– min_delta: The minimum change in the monitored quantity that qualifies as an improvement. In a nutshell, a change smaller than min_delta is not considered an improvement.
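Putting the arguments above together, a configured callback might look like the following. The specific values (`patience=5`, `min_delta=0.001`, and so on) are illustrative choices for this sketch, not recommendations from the library; the argument names are those of `tf.keras.callbacks.EarlyStopping`.

```python
import tensorflow as tf

early_stopping = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss",         # quantity to watch; needs validation data in fit()
    mode="min",                 # stop when the quantity stops decreasing
    min_delta=0.001,            # smaller changes do not count as improvement
    patience=5,                 # epochs without improvement before stopping
    baseline=None,              # optional value the model must improve on
    restore_best_weights=True,  # roll back to the best epoch's weights
    verbose=1,                  # print a message when training is stopped
)

# The callback is then passed to fit(), for example (x, y, and model are placeholders):
# model.fit(x, y, validation_split=0.2, epochs=100, callbacks=[early_stopping])
```

Monitoring `val_loss` rather than `loss` is a common choice when the aim is to limit overfitting, since the validation loss is computed on data the model is not trained on.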


Keras early stopping examples

Example #1

This code snippet creates an EarlyStopping callback that stops training when the training loss shows no improvement for a given number of consecutive epochs, set here by patience with value 6.

    import numpy as np
    import tensorflow as tf

    # Stop once the training loss has gone 6 consecutive epochs without improving.
    callback = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=6)

    # A single dense layer is enough to demonstrate the callback.
    model_val = tf.keras.models.Sequential([tf.keras.layers.Dense(12)])
    model_val.compile(tf.keras.optimizers.SGD(), loss='mse')

    # 10 samples with 20 features each; the targets are all zeros.
    history_vl = model_val.fit(np.arange(200).reshape(10, 20), np.zeros((10, 12)),
                               epochs=20, batch_size=2, callbacks=[callback],
                               verbose=0)

    # Number of epochs that actually ran (at most 20).
    print(len(history_vl.history['loss']))

Example #2

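This example builds a deeper classifier, compiles it with model.compile(), and trains it with model.fit(), passing an EarlyStopping callback so that training ends once the validation loss stops improving. The random training data and the patience value of 10 are placeholders.

    import numpy as np
    from tensorflow.keras.callbacks import EarlyStopping
    from tensorflow.keras.layers import Dense, Input
    from tensorflow.keras.models import Sequential

    def create_model():
        # Fully connected classifier: 6 input features, 2 output classes.
        model = Sequential([
            Input(shape=(6,)),
            Dense(54, activation='relu'),
            Dense(120, activation='relu'),
            Dense(130, activation='relu'),
            Dense(112, activation='relu'),
            Dense(54, activation='relu'),
            Dense(60, activation='relu'),
            Dense(84, activation='relu'),
            Dense(2, activation='softmax'),
        ])
        return model

    model = create_model()
    model.compile(
        optimizer='adam',
        loss='categorical_crossentropy',
        metrics=['accuracy'],
    )

    # Placeholder data so the snippet runs on its own; substitute a real dataset.
    X_0_train = np.random.rand(200, 6)
    y_0_train = np.eye(2)[np.random.randint(0, 2, size=200)]  # one-hot labels

    # Illustrative setting: stop after 10 epochs without a better validation loss.
    early_stopping = EarlyStopping(monitor='val_loss', patience=10)

    history = model.fit(
        X_0_train,
        y_0_train,
        epochs=180,
        validation_split=0.22,
        batch_size=40,
        callbacks=[early_stopping],
        verbose=2,
    )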

Conclusion

Keras early stopping fits into NLP and other neural network models built with Keras, because the EarlyStopping class is simply passed to training as a callback. It avoids unnecessary epochs of computation, which would otherwise be overhead for the model. Adjusting its built-in parameters helps improve the training of any neural network.
