Updated March 16, 2023

Introduction to Keras GPU

Keras GPU refers to running Keras models on a graphics processing unit (GPU) instead of only on the CPU. Keras does not talk to the hardware itself; it delegates the computation to a backend. The original multi-backend Keras supported three backend implementations: Microsoft Cognitive Toolkit (CNTK), Theano, and TensorFlow. GPU support depended on the backend and on how it was installed, and a mismatch could cause trouble when Keras was used as part of a larger machine learning solution. As the Keras interfaces have evolved, this has changed: in later versions Keras runs on TensorFlow, which decides whether each operation runs on the CPU or the GPU so that models work smoothly on the available hardware.

What is Keras GPU?

Keras is a neural network library written in Python. A Keras deep learning model can use the GPU for its computations, and in practice the GPU is mostly used to speed up training and inference. To use Keras with a GPU, the system must have proper support, that is, a supported GPU (for example, an NVIDIA card) with its drivers installed.

How to run Keras GPU?

To run Keras on a GPU on any system, a few checks are needed:

- The system should have an NVIDIA GPU. The standard TensorFlow builds rely on NVIDIA's CUDA, so AMD GPUs are generally not supported out of the box.

- TensorFlow must be installed in a GPU-enabled build, because Keras runs on top of TensorFlow, and TensorFlow is what actually executes the computations on the GPU. With Anaconda, this has typically meant installing the tensorflow-gpu package.

- Once all the checks pass, the Anaconda environment with GPU-enabled TensorFlow can be used for training, inference, or any other execution of the neural network on the GPU.
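A quick way to confirm these checks is to ask TensorFlow what it was built with and which GPUs it can see. The sketch below assumes TensorFlow 2.x; gpu_setup_report is a hypothetical helper name, not part of TensorFlow. An empty GPU list on a machine that does have an NVIDIA card usually points to a driver or CUDA setup problem.

```python
import tensorflow as tf


def gpu_setup_report():
    """Hypothetical helper: summarize whether this TensorFlow install can use a GPU."""
    gpus = tf.config.list_physical_devices("GPU")
    return {
        "tensorflow_version": tf.__version__,
        # True only for TensorFlow builds compiled against NVIDIA CUDA.
        "built_with_cuda": tf.test.is_built_with_cuda(),
        # Physical device names look like "/physical_device:GPU:0".
        "gpus": [gpu.name for gpu in gpus],
    }


report = gpu_setup_report()
print(report)
if report["built_with_cuda"] and not report["gpus"]:
    print("CUDA build found, but no GPU is visible: check the NVIDIA driver and CUDA setup.")
```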

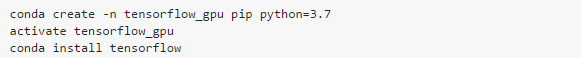

The screenshot above shows the installation of tensorflow-gpu, which lets Python code run its computations on the GPU.

- TensorFlow can run computations on several kinds of devices, including CPUs and GPUs. Each device is identified by a string.

- For example, the string identifier “/device:CPU:0” refers to the CPU of your machine, and “/GPU:0” (short for “/device:GPU:0”) refers to the first GPU that TensorFlow can see.

- If a TensorFlow operation has both a CPU and a GPU implementation, the GPU device is given priority by default, unless the code explicitly requests a different device.

- Other aspects to take care of when running Keras on a GPU are execution time and optimization. The memory usage of the entire model should also be managed.
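The device strings, the default GPU priority, and memory management described above can be seen together in a short sketch, assuming TensorFlow 2.x. Enabling memory growth (so TensorFlow allocates GPU memory as needed instead of reserving most of it up front) is shown as one common memory-management setting; the two constant matrices are arbitrary example data.

```python
import tensorflow as tf

# Memory growth must be configured before any GPU is initialized, so do it first.
# On a CPU-only machine this list is empty and the loop does nothing.
for gpu in tf.config.list_physical_devices("GPU"):
    tf.config.experimental.set_memory_growth(gpu, True)

# Log the device each operation is assigned to.
tf.debugging.set_log_device_placement(True)

# Device strings as TensorFlow reports them, e.g. "/device:CPU:0", "/device:GPU:0".
logical_names = [device.name for device in tf.config.list_logical_devices()]
print(logical_names)

a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
b = tf.constant([[1.0, 1.0], [0.0, 1.0]])

# Default placement: MatMul has CPU and GPU kernels, so a GPU is preferred if present.
c_default = tf.matmul(a, b)

# An explicit request overrides the default and pins the op to the CPU.
with tf.device("/CPU:0"):
    c_cpu = tf.matmul(a, b)

print("default:", c_default.device)
print("explicit:", c_cpu.device)
```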

Keras GPU model parallelism

- Model parallelism comes into play when two or more GPUs are available: the model is split across them to keep the process available and scalable.

- Keras GPU model parallelism relies on specific strategies for splitting complex neural networks across devices.

- Adopting model parallelism is difficult but not impossible. A neural network is built from layers stacked one after another, and each layer depends on the output of the previous one, which makes splitting the model, and the API used to do it, harder to work with.

- GPU memory is also affected, because a single GPU may not be able to hold very large, complex layers. Model parallelism is sometimes presented as the way to solve this, but in practice it is not always a workable approach.

- Model parallelism is undoubtedly a useful approach. Still, it is not suitable for small batch jobs, first because of the time it consumes and second because it scales poorly. It is therefore recommended only for large models; for implementation, consider something like Mesh TensorFlow or a distributed approach.

- In short, model parallelism runs different parts of the same model on different devices while processing a single batch of data. It fits best with models that have a parallel architecture, such as a model with two branches.

- A distributed approach across multiple devices or systems is often the preferred strategy.
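The idea of running different parts of the same model on different devices can be sketched by placing layers manually with tf.device. This is a minimal sketch, assuming TensorFlow 2.x with tf.keras; TwoDeviceModel and pick_devices are hypothetical names, and the layer sizes and random data are placeholders. With fewer than two GPUs, both halves fall back to the CPU so the sketch still runs.

```python
import tensorflow as tf


def pick_devices():
    """Hypothetical helper: two GPU names if available, otherwise the CPU twice."""
    gpus = tf.config.list_logical_devices("GPU")
    if len(gpus) >= 2:
        return gpus[0].name, gpus[1].name
    return "/CPU:0", "/CPU:0"


class TwoDeviceModel(tf.keras.Model):
    """Hypothetical model whose two halves are placed on two different devices."""

    def __init__(self, first_device, second_device):
        super().__init__()
        self.first_device = first_device
        self.second_device = second_device
        self.part_one = tf.keras.layers.Dense(64, activation="relu")
        self.part_two = tf.keras.layers.Dense(10)

    def call(self, inputs):
        # The first half of the network runs on the first device...
        with tf.device(self.first_device):
            hidden = self.part_one(inputs)
        # ...and its activations are passed to the second device for the rest.
        with tf.device(self.second_device):
            return self.part_two(hidden)


first, second = pick_devices()
model = TwoDeviceModel(first, second)
model.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
)

# Placeholder data: 32 samples, 20 features, 10 classes.
x = tf.random.normal((32, 20))
y = tf.random.uniform((32,), maxval=10, dtype=tf.int32)
model.fit(x, y, epochs=1, verbose=0)
print("devices:", first, second, "output shape:", model(x).shape)
```

Because the second half waits for the first, the two devices run one after the other rather than concurrently. This spreads the model's memory across GPUs but does not by itself speed up a single batch, which is in line with the points above about small batch jobs and scaling.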

Keras GPU using multiple GPUs

There are several ways to work with multiple GPUs, but let us take two scenarios:

- In the first scenario, a single GPU is used on a multi-GPU system.

- In the second scenario, multiple GPUs are used at the same time.

# First Scenario

- When a single GPU needs to work on a multi-GPU system, TensorFlow selects the GPU with the lowest ID by default at execution time. To run on a different GPU, you need to specify that preference explicitly.
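Specifying the preference explicitly can be done by wrapping the computation in tf.device. The sketch below assumes TensorFlow 2.x and that the GPU you want has ID 1; PREFERRED_DEVICE and run_on are hypothetical names. On machines with fewer than two GPUs, it keeps TensorFlow's default placement.

```python
import tensorflow as tf

# Hypothetical preference: run on the GPU with ID 1 instead of the default lowest ID.
PREFERRED_DEVICE = "/GPU:1"


def run_on(device_name):
    """Run a small matrix multiplication on device_name and return the result."""
    with tf.device(device_name):
        a = tf.random.normal((256, 256))
        return tf.matmul(a, a)


if len(tf.config.list_logical_devices("GPU")) > 1:
    # Explicit request: overrides the lowest-ID default.
    result = run_on(PREFERRED_DEVICE)
else:
    # Fewer than two GPUs: keep the default placement (GPU:0 if present, else the CPU).
    a = tf.random.normal((256, 256))
    result = tf.matmul(a, a)

print("Ran on:", result.device)
```

To hide the other GPUs from the whole process rather than from a single block, tf.config.set_visible_devices can be called before any GPU is used.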

# Second Scenario

- Developing for multiple GPUs allows a model to scale by adding more resources.

- If only a single GPU exists, as in the first scenario, a multi-GPU approach can still be adopted by splitting that GPU into several virtual (logical) GPUs that simulate a multi-GPU system.

- Creating virtual GPUs makes it easy to test a multi-GPU environment without requiring additional hardware.

- The drawback of virtual GPUs is that their configuration cannot be modified once the TensorFlow runtime has been initialized, so it has to be set up before the GPU is first used.

- The best and recommended approach whenever multiple GPUs are used is a distribution strategy, through the tf.distribute.Strategy API.
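Virtual GPUs and tf.distribute.Strategy can be combined in one sketch, assuming TensorFlow 2.x. When exactly one physical GPU is present, it is split into two logical GPUs; tf.distribute.MirroredStrategy, one concrete tf.distribute.Strategy, then replicates the model on every visible device and falls back to the CPU when there is no GPU. The 1024 MB memory limit, the small model, and the random data are arbitrary placeholders.

```python
import tensorflow as tf

# Hypothetical memory budget (in MB) for each virtual GPU carved out of one physical GPU.
VIRTUAL_GPU_MEMORY_MB = 1024

gpus = tf.config.list_physical_devices("GPU")
if len(gpus) == 1:
    # Split the single physical GPU into two logical GPUs. This must happen before
    # the GPU is initialized; changing it afterwards raises a RuntimeError.
    tf.config.set_logical_device_configuration(
        gpus[0],
        [
            tf.config.LogicalDeviceConfiguration(memory_limit=VIRTUAL_GPU_MEMORY_MB),
            tf.config.LogicalDeviceConfiguration(memory_limit=VIRTUAL_GPU_MEMORY_MB),
        ],
    )

# Replicates the model on every visible GPU (real or virtual), or uses the CPU if none.
strategy = tf.distribute.MirroredStrategy()
print("Replicas in sync:", strategy.num_replicas_in_sync)

# Variables must be created inside the strategy scope so that they are mirrored.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(20,)),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

# Placeholder regression data; each global batch of 64 is split across the replicas.
x = tf.random.normal((256, 20))
y = tf.random.normal((256, 1))
history = model.fit(x, y, batch_size=64, epochs=2, verbose=0)
print("Final loss:", history.history["loss"][-1])
```

Because the virtual device configuration cannot be modified after initialization, the set_logical_device_configuration call has to stay at the top of the script, before any tensors are created.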

Conclusion

Keras GPU is useful whenever a deep learning model built with Keras or TensorFlow needs to be trained or analyzed efficiently. It gives end users an edge when building and experimenting with custom artificial intelligence algorithms. Keras GPU relies on several other packages and libraries, such as TensorFlow and NVIDIA's CUDA libraries, for efficient development.
