Updated March 16, 2023

Introduction to Keras Regularization

Keras regularization allows us to apply penalties on layer parameters or layer activity during optimization. These penalties are summed into the loss function that the network optimizes. Regularization is applied on a per-layer basis. The exact API depends on the layer, but many layers share a unified API. These layers expose three keyword arguments.

Key Takeaways

- If a regularizer has to be configured through multiple arguments, implement it as a subclass of the Keras Regularizer class.

- The subclass can also implement get_config and the from_config class method to support serialization. The regularization parameters are then passed to its constructor.
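As a minimal sketch of these two takeaways, the class below subclasses `tf.keras.regularizers.Regularizer` and is configured by two arguments. The class name `ScaledL1L2` and its parameters are made up for illustration; only `Regularizer`, `__call__`, `get_config`, and `from_config` come from Keras.

```python
import tensorflow as tf


class ScaledL1L2(tf.keras.regularizers.Regularizer):
    """Hypothetical regularizer configured by two arguments."""

    def __init__(self, l1=0.0, l2=0.0):
        self.l1 = l1
        self.l2 = l2

    def __call__(self, x):
        # Penalty = l1 * sum(|x|) + l2 * sum(x^2)
        l1_term = self.l1 * tf.reduce_sum(tf.abs(x))
        l2_term = self.l2 * tf.reduce_sum(tf.square(x))
        return l1_term + l2_term

    def get_config(self):
        # Returning the constructor arguments makes the object serializable.
        return {"l1": self.l1, "l2": self.l2}


reg = ScaledL1L2(l1=0.01, l2=0.001)
weights = tf.constant([[1.0, -2.0], [3.0, 0.0]])  # made-up weights
penalty = float(reg(weights))  # 0.01 * 6 + 0.001 * 14

# from_config is inherited from Regularizer and rebuilds the object
# from the dictionary returned by get_config().
restored = ScaledL1L2.from_config(reg.get_config())
print(penalty, restored.get_config())
```

An instance such as `reg` can then be passed to a layer through `kernel_regularizer`, in the same way as the built-in regularizers.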

What is Keras Regularization?

Keras regularization prevents overfitting by penalizing the model for having large weights. There are two popular penalties, L1 and L2. L1 is known as Lasso, and L2 is known as Ridge. Both names come from linear regression. In Keras, the penalty is attached to a layer and added to the loss that the optimizer minimizes. We add regularization to our code by passing the kernel_regularizer argument to a layer. For L2 regularization, we pass the keras.regularizers.l2() function.

This function takes one parameter, the strength of the regularization. To use L1 regularization, we replace the l2 function with the l1 function. Using L1 and L2 regularization together is called the elastic net. Weight regularization provides an approach to reducing the overfitting of neural network models for deep learning. Activity regularization encourages the neural network to learn sparse features, or internal representations, of the raw observations. Sparse learned representations are commonly sought in autoencoders, which are then called sparse autoencoders.
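The arithmetic behind these penalties can be sketched in plain Python, without Keras. The weights, the unregularized loss, and the strengths below are made-up values, and the function names are illustrative rather than part of any library.

```python
def l1_penalty(weights, strength):
    """Lasso-style penalty: strength * sum of absolute weights."""
    return strength * sum(abs(w) for w in weights)


def l2_penalty(weights, strength):
    """Ridge-style penalty: strength * sum of squared weights."""
    return strength * sum(w * w for w in weights)


def elastic_net_penalty(weights, l1, l2):
    """Elastic net: the L1 and L2 penalties added together."""
    return l1_penalty(weights, l1) + l2_penalty(weights, l2)


weights = [0.5, -1.5, 2.0]  # made-up weights
data_loss = 0.30            # made-up loss before regularization

# The penalty is added to the loss that the optimizer minimizes.
total_loss = data_loss + elastic_net_penalty(weights, l1=0.01, l2=0.01)
print(round(total_loss, 4))
```

Larger weights raise the total loss, so the optimizer is pushed toward smaller weights. The L1 term grows linearly with each weight, while the L2 term grows with its square.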

How to Add Keras Regularization?

Regularization generally reduces overfitting and helps the model generalize. It does so by penalizing the model, and as noted above it comes in two common forms, L1 and L2. Below we use the l1 regularizer to add Keras regularization.

The steps below show how to add Keras regularization:

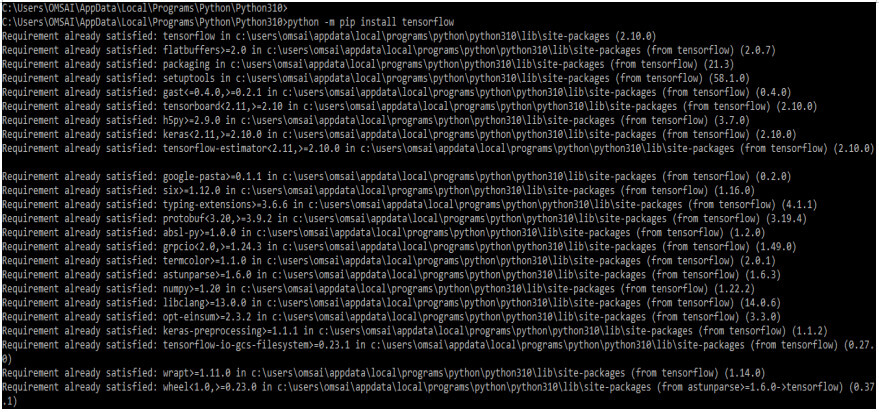

1. In the first step, we install the keras and tensorflow modules on our system. We install them with pip as follows.

Code:

python -m pip install tensorflow

python -m pip install keras

Output:

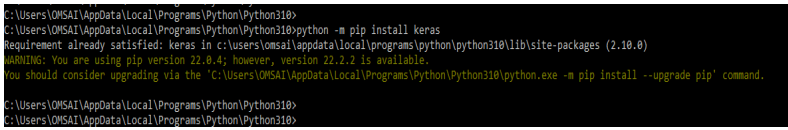

2. After installing keras and tensorflow, we check the installation by importing both modules as follows.

Code:

import tensorflow as tf

from keras.layers import Dense

Output:

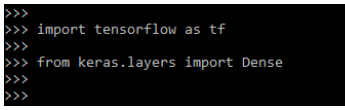

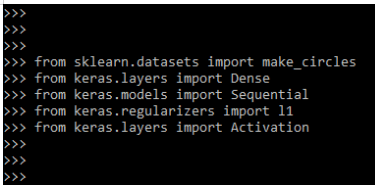

3. After checking the installation, we import the classes used in the example: Dense and Activation from keras.layers, Sequential from keras.models, and l1 from keras.regularizers. The make_circles function from sklearn.datasets supplies the dataset.

Code:

from sklearn.datasets import make_circles

…..

from keras.layers import Activation

Output:

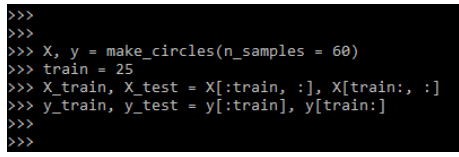

4. After the imports, we prepare the dataset. make_circles returns the X and y values, which we then split into X_train, y_train, X_test, and y_test as follows.

Code:

X, y = make_circles()

train = 25

X_train, X_test = X[]

y_train, y_test = y[]

Output:

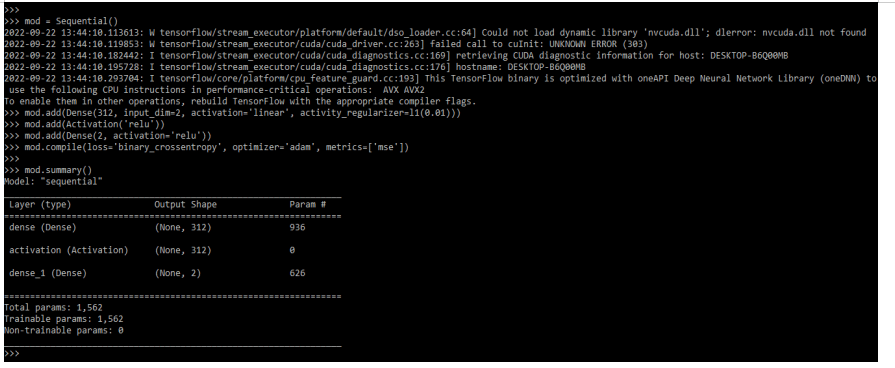

5. With the dataset ready, we create the neural network model and add the regularizer to its first layer. We create a Sequential model and add the Dense layers to it as follows.

Code:

mod = Sequential()

mod.add()

mod.add(Activation('relu'))

mod.add(Dense(2, activation = 'relu'))

mod.compile()

mod.summary()
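The listings in the steps above omit most of their arguments, so they cannot be run as shown. The sketch below is one way the steps could fit together. Every argument value in it (sample count, noise, layer sizes, the 0.001 strength, the loss, and the optimizer) is an assumption rather than the original author's choice, as are the explicit Input layer and the final sigmoid layer.

```python
from sklearn.datasets import make_circles
from keras.models import Sequential
from keras.layers import Dense, Activation, Input
from keras.regularizers import l1

# Assumed arguments: the source listing does not show them.
X, y = make_circles(n_samples=100, noise=0.1, random_state=1)

train = 25  # number of training rows, as in step 4
X_train, X_test = X[:train, :], X[train:, :]
y_train, y_test = y[:train], y[train:]

mod = Sequential()
mod.add(Input(shape=(2,)))  # assumed: two input features per sample
# Assumed first layer: 100 units with an L1 penalty of 0.001 on the kernel.
mod.add(Dense(100, kernel_regularizer=l1(0.001)))
mod.add(Activation('relu'))
mod.add(Dense(2, activation='relu'))
# Assumed output layer: one sigmoid unit for the two-class labels.
mod.add(Dense(1, activation='sigmoid'))

# Assumed loss and optimizer for binary classification.
mod.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
mod.summary()
```

Only the first Dense layer carries a regularizer here, so only its kernel contributes a penalty to the loss.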

Keras Regularization Layer

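Weight regularization in Keras applies penalties to the parameters of a layer. Layers that support it expose three keyword arguments:

- Kernel Regularizer (kernel_regularizer): applies a penalty on the layer's kernel

- Bias Regularizer (bias_regularizer): applies a penalty on the layer's bias

- Activity Regularizer (activity_regularizer): applies a penalty on the layer's output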

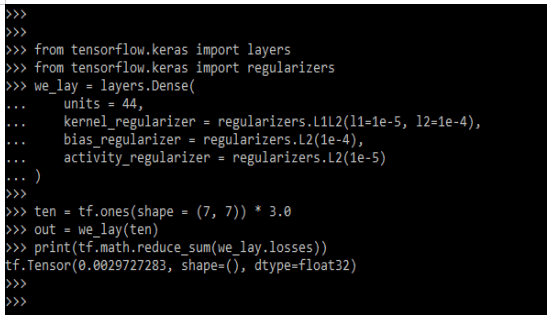

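The example below shows weight regularization on a Dense layer. The value returned by the activity regularizer is divided by the input batch size, so the relative weighting between the weight and activity penalties does not change with the batch size.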

Code:

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import regularizers

we_lay = layers.Dense(

units = 44,

kernel_regularizer = regularizers.L1L2(),

…

activity_regularizer = regularizers.L2(1e-5)

)

ten = tf.ones(shape = (7, 7)) * 3.0

out = we_lay(ten)

print(tf.math.reduce_sum(we_lay.losses))

Output:

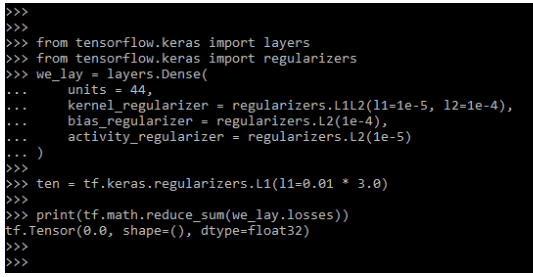
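The division by the batch size, as described in the Keras documentation, can be checked by hand. The sketch below is plain Python, not Keras. It assumes a hypothetical dense layer whose kernel is all ones, with no bias and no activation, fed the same 7 x 7 input of 3.0 as the listing above. A real Dense layer starts from random weights, so the value it prints will differ.

```python
batch_size, features, units = 7, 7, 44
strength = 1e-5  # same value as the activity_regularizer above

# Input: 7 rows of 7 features, every entry 3.0.
inputs = [[3.0] * features for _ in range(batch_size)]

# Assumed all-ones kernel and no bias: each output equals the row sum.
outputs = [[sum(row)] * units for row in inputs]

# L2 activity penalty over the whole batch: strength * sum of squared outputs.
raw_penalty = strength * sum(v * v for row in outputs for v in row)

# Per the Keras documentation, the activity penalty is divided by the
# batch size before it is added to the layer's losses.
activity_loss = raw_penalty / batch_size
print(raw_penalty, activity_loss)
```

Doubling the batch size doubles the raw penalty but leaves the per-sample value unchanged, which is the reason for the division.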

The L1 and L2 regularizers are available as classes in the regularizers module. The example below uses the L1 class.

Code:

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import regularizers

we_lay = layers.Dense(

units = 44,

kernel_regularizer = regularizers.L1L2(),

…

activity_regularizer = regularizers.L2(1e-5)

)

ten = tf.keras.regularizers.L1(l1 = 0.01 * 3.0)

print(tf.math.reduce_sum(we_lay.losses))

Output:

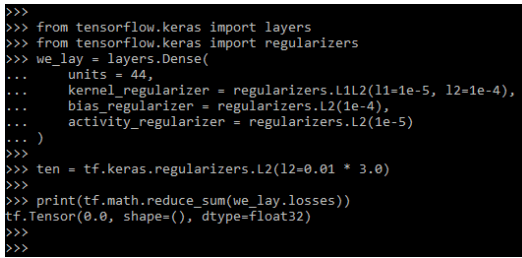
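A regularizer object can also be called directly on a tensor, which makes it easy to see what each class computes. The following is a small sketch with made-up weights and an assumed strength of 0.01 for both classes.

```python
import tensorflow as tf
from tensorflow.keras import regularizers

weights = tf.constant([[1.0, -2.0], [3.0, 0.0]])  # made-up weights

l1_reg = regularizers.L1(l1=0.01)
l2_reg = regularizers.L2(l2=0.01)

l1_value = float(l1_reg(weights))  # 0.01 * (1 + 2 + 3 + 0)
l2_value = float(l2_reg(weights))  # 0.01 * (1 + 4 + 9 + 0)
print(l1_value, l2_value)
```

The L1 class sums absolute values and the L2 class sums squares, each scaled by its strength argument.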

The example below uses the L2 class from the regularizers module. We import the layers and regularizers modules.

Code:

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import regularizers

we_lay = layers.Dense(

units = 44,

kernel_regularizer = regularizers.L1L2(),

…

activity_regularizer = regularizers.L2(1e-5)

)

ten = tf.keras.regularizers.L2(l2 = 0.01 * 3.0)

print(tf.math.reduce_sum(we_lay.losses))

Examples of Keras Regularization

The examples below apply the L2 and L1 regularizers in turn:

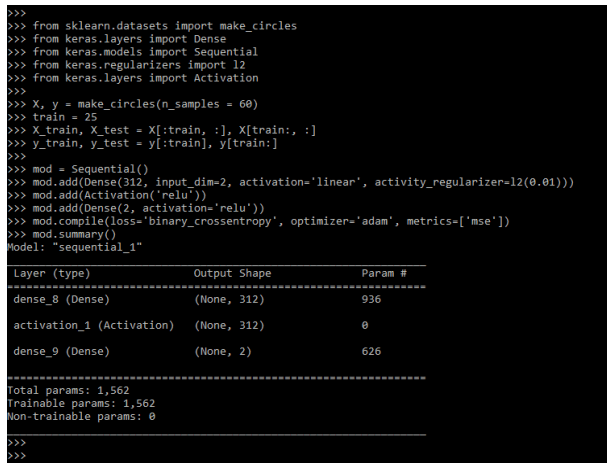

Example #1

The example below uses the L2 regularizer.

Code:

from sklearn.datasets import make_circles

…..

from keras.layers import Activation

X, y = make_circles()

train = 25

X_train, X_test = X[]

y_train, y_test = y[]

mod = Sequential()

mod.add()

mod.add(Activation('relu'))

mod.add(Dense(2, activation = 'relu'))

mod.compile()

mod.summary()

Output:

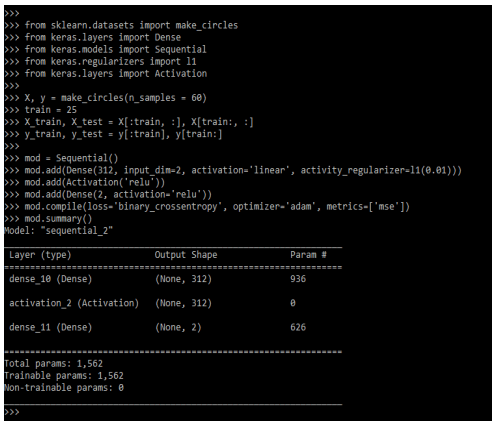

Example #2

The example below uses the L1 regularizer.

Code:

from sklearn.datasets import make_circles

…..

from keras.layers import Activation

X, y = make_circles()

train = 35

X_train, X_test = X[]

y_train, y_test = y[]

mod = Sequential()

mod.add()

mod.add(Activation('relu'))

mod.add(Dense(2, activation = 'relu'))

mod.compile()

mod.summary()

FAQ

Given below are the FAQs mentioned:

Q1. What is the use of keras regularization?

Answer: It is a technique for preventing the model from learning large weights, which reduces overfitting. Regularization is applied on a per-layer basis.

Q2. How many types of weight regularization are in keras?

Answer: There are several types of weight regularization, based on vector norms such as L1 and L2. Each one requires a hyperparameter, the regularization strength, to be configured.

Q3. Which modules do we need to import at the time of using keras regularization?

Answer: We need to import the keras and tensorflow modules. We also need to import the Dense layer.

Conclusion

There are two popular Keras regularizers, L1 and L2. L1 is known as Lasso and L2 is known as Ridge. Regularization allows us to apply penalties on layer parameters or layer activity at the time of optimization.
