Updated March 16, 2023

Introduction to Keras VGG16

Keras VGG16 is a deep learning model that is available with pre-trained weights. It can be used for feature extraction, fine-tuning, and prediction. The weights are downloaded automatically when the model is instantiated, and they are stored in the ~/.keras/models/ directory. On instantiation, the model is built according to the image data format set in the Keras configuration file.

Key Takeaways

- Transfer learning refers to the process in which a model such as Keras VGG16, already trained on one problem, is reused for a different, specific problem.

- In deep transfer learning, a neural network is first trained on a problem similar to the one being solved, and its trained layers are then reused in the new model.

What is Keras VGG16?

Keras VGG16 is an implementation of VGG16, the convolutional neural network architecture that was used in the ILSVRC (ImageNet Large Scale Visual Recognition Challenge) 2014 competition. It is considered one of the classic vision model architectures. The most distinctive thing about VGG16 is that, instead of a large number of hyperparameters, it focuses on convolution layers with small 3x3 filters. It follows the same arrangement of convolution and max pooling layers consistently throughout the architecture. The 16 in the name refers to the 16 layers that contain weights. The network is very large, with about 138 million parameters. In Python, it is available through Keras.
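As a rough check on the "16 layers" and "millions of parameters" claims, the sketch below counts the weight layers and parameters of the standard VGG16 layout in plain Python. It assumes the usual configuration (3x3 convolutions, a 224x224x3 input, two 4096-unit dense layers, and a 1000-class output). The names VGG16_CFG, DENSE_UNITS, and count_vgg16 are illustrative only and are not part of Keras.

```python
# Standard VGG16 layout: numbers are conv output channels, "M" is a max pool.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]
DENSE_UNITS = [4096, 4096, 1000]  # assumed ImageNet classifier head


def count_vgg16(input_size=224, in_channels=3):
    """Return (weight_layers, total_parameters) for the layout above."""
    weight_layers = 0
    params = 0
    size, channels = input_size, in_channels
    for item in VGG16_CFG:
        if item == "M":
            size //= 2  # 2x2 max pooling halves width and height, no weights
        else:
            params += 3 * 3 * channels * item + item  # 3x3 kernels plus biases
            channels = item
            weight_layers += 1
    features = size * size * channels  # flattened input to the first dense layer
    for units in DENSE_UNITS:
        params += features * units + units  # weights plus biases
        features = units
        weight_layers += 1
    return weight_layers, params


layers, total = count_vgg16()
print(layers, total)  # 16 138357544
```

Most of the parameters sit in the first dense layer, which is one reason the convolutional part alone is often reused for transfer learning.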

How to Learn Keras VGG16 Model?

Transfer learning is an approach in which a model trained on one machine learning task is reused for another. In deep learning, this approach is widely used for image classification.

1. CNN review

A CNN is built from several kinds of layers. Its building blocks are as follows:

- Convolutional layer: This layer computes the output of nodes that are connected to local regions of the input matrix.

- Activation layer: This layer determines whether a node fires for the given input data. It leaves the dimensions of the volume unchanged.

- Pooling layer: This layer applies a down-sampling strategy that reduces the width and height of the volume.

- Fully connected layer: The output volume is passed to a layer in which every node is connected to every input. The class probabilities are computed and output as a 3D array with dimensions 1 x 1 x K, where K is the number of classes.
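The four building blocks above can be sketched in plain Python on a toy input. This is only an illustration of what each layer does to the data, not how Keras implements them; the function names, the 5x5 input, the 2x2 filter, and the dense weights are all made up for the example.

```python
import math


def conv2d(image, kernel):
    """Slide a 2D kernel over a 2D list (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def relu(fmap):
    """Activation: same shape in, same shape out."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Down-sample by taking the max of each size x size window."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def dense_softmax(features, weights):
    """Fully connected layer followed by softmax; one weight row per class."""
    scores = [sum(f * w for f, w in zip(features, row)) for row in weights]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    return [e / sum(exps) for e in exps]


image = [[float((i * 5 + j) % 7) for j in range(5)] for i in range(5)]  # toy 5x5 input
kernel = [[1.0, -1.0], [-1.0, 1.0]]                                    # toy 2x2 filter

conv_out = conv2d(image, kernel)   # 4x4 feature map
act_out = relu(conv_out)           # still 4x4: dimensions unchanged
pool_out = max_pool(act_out)       # 2x2: width and height halved
flat = [v for row in pool_out for v in row]
weights = [[0.1, 0.2, 0.3, 0.4],
           [0.4, 0.3, 0.2, 0.1],
           [0.0, 0.0, 0.0, 0.0]]   # K = 3 classes, made-up weights
probs = dense_softmax(flat, weights)
print(len(conv_out), len(pool_out), len(probs))
```

The final list holds one probability per class, and the probabilities sum to 1.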

2. Keras CNN predicting the food labels

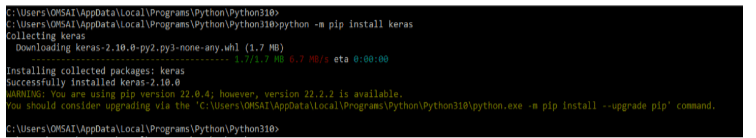

In the example below, we load a model to generate predictions and calculate accuracy, which is then used to compare performance. Keras must be installed on the system first. The following pip command installs it.

Code:

python -m pip install keras

Output:

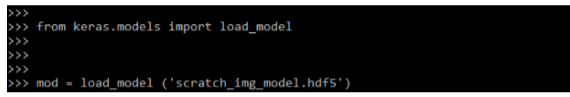

In the example below, we load the saved model into the variable mod and print its summary.

Code:

from keras.models import load_model

mod = load_model('scratch_img_model.hdf5')

mod.summary()

3. How does transfer learning work?

Transfer learning addresses a limitation of the isolated learning paradigm, in which each model is trained from scratch for a single task. It makes it possible to share learned features across tasks.

4. Domain and task

Transfer learning is described in terms of a domain and a task. Here the domain is images, and the task is to classify them. A CNN that has already been trained and optimized for a domain and task can be reused, so we can utilize a model that was pretrained.

5. How can we utilize the VGG16 model?

VGG16 is a convolutional neural network that was trained on the ImageNet dataset. ImageNet contains images across many categories, including different types of vehicles. We need to import the model that was pre-trained on ImageNet.

There are two approaches to using Keras VGG16:

- Feature extraction approach: We use the pretrained model architecture to create a new dataset of features from the input images. In this approach, we import only the convolutional and pooling layers and pass the images through VGG16.

- Fine-tuning approach: The goal of this strategy is to allow some of the pretrained layers to be retrained. In the feature extraction approach, the pretrained layers are used as they are: the image dataset is passed through their weights, which output the transformed features, and no training happens on those layers. In fine-tuning, some of those layers are unfrozen so that their weights are updated as well.
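The difference between the two approaches comes down to which pretrained layers are frozen. The sketch below expresses that choice in plain Python. The layer names follow the block naming Keras uses for the VGG16 convolutional base (the input layer is left out for brevity), while trainable_plan and the choice of fine_tune=2 are hypothetical and only for illustration.

```python
# Build the names of the 13 conv and 5 pooling layers of the VGG16 base.
CONV_BASE = []
for block, n_convs in enumerate([2, 2, 3, 3, 3], start=1):
    CONV_BASE += [f"block{block}_conv{i}" for i in range(1, n_convs + 1)]
    CONV_BASE.append(f"block{block}_pool")


def trainable_plan(layer_names, fine_tune=0):
    """Map each layer name to True (trainable) or False (frozen).

    fine_tune=0 freezes every pretrained layer (feature extraction);
    fine_tune=n leaves only the last n layers trainable (fine-tuning).
    """
    cutoff = len(layer_names) - fine_tune
    return {name: idx >= cutoff for idx, name in enumerate(layer_names)}


feature_extraction = trainable_plan(CONV_BASE, fine_tune=0)
fine_tuning = trainable_plan(CONV_BASE, fine_tune=2)

print(sum(feature_extraction.values()))           # 0
print([n for n, t in fine_tuning.items() if t])   # ['block5_conv3', 'block5_pool']
```

In Keras, a plan like this would be applied by setting each layer's trainable attribute before compiling the model.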

6. Transfer learning for the classification of food

The VGG16 model is easily downloaded through the Keras API. Along with the model, we need to import its pre-processing function, preprocess_input. VGG16 was trained on images with pixel values ranging from 0 to 255, and the pre-processing function prepares new images in the same way. Other models use different normalization schemes.
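For reference, the VGG16 pre-processing in Keras converts images from RGB to BGR and zero-centers each channel with respect to the ImageNet dataset, without rescaling. The sketch below mimics this for a single pixel in plain Python. preprocess_pixel is a hypothetical helper written for this illustration; the real function works on whole arrays of images.

```python
# Per-channel ImageNet means in BGR order, as used by VGG-style pre-processing.
IMAGENET_MEAN_BGR = (103.939, 116.779, 123.68)


def preprocess_pixel(rgb):
    """Convert one (R, G, B) pixel in 0..255 to zero-centered (B, G, R)."""
    r, g, b = rgb
    bgr = (b, g, r)
    return tuple(value - mean for value, mean in zip(bgr, IMAGENET_MEAN_BGR))


# Pure red: after the swap, only the last (red) channel ends up positive.
print(preprocess_pixel((255, 0, 0)))
```

Because the values are centered rather than scaled to 0..1, inputs should not be divided by 255 before this step.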

7. Training and testing data preparation

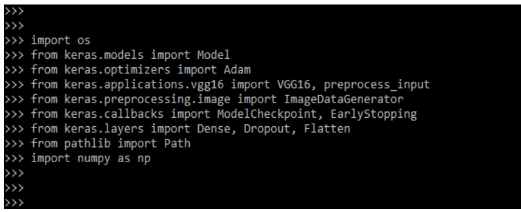

In the example below, we first import the libraries.

Code:

import os

from keras.layers import Flatten

from pathlib import Path

from keras.preprocessing.image import ImageDataGenerator

from keras.models import Model

from keras.optimizers import Adam

from keras.applications.vgg16 import VGG16, preprocess_input

from keras.callbacks import EarlyStopping

import numpy as np

Output:

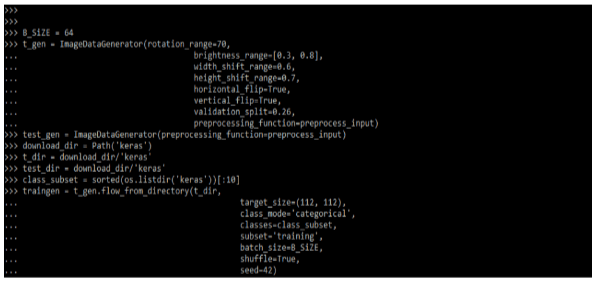

In the example below, we create image data generators and use the image directory to define the paths they read from.

Code:

B_SiZE = 64

t_gen = ImageDataGenerator(rotation_range=70,

brightness_range=[0.3, 0.8],

width_shift_range=0.6,

height_shift_range=0.7,

horizontal_flip=True,

vertical_flip=True,

validation_split=0.26,

preprocessing_function=preprocess_input)

test_gen = ImageDataGenerator(preprocessing_function=preprocess_input)

download_dir = Path('keras')

t_dir = download_dir/'keras'

test_dir = download_dir/'keras'

class_subset = sorted(os.listdir(t_dir))[:10]  # first 10 class folders in the training directory

traingen = t_gen.flow_from_directory(t_dir,

target_size=(112, 112),

class_mode='categorical',

classes=class_subset,

subset='training',

batch_size=B_SiZE,

shuffle=True,

seed=42)

validgen = t_gen.flow_from_directory(t_dir,

target_size=(112, 112),

class_mode='categorical',

classes=class_subset,

subset='validation',

batch_size=B_SiZE,

shuffle=True,

seed=42)

testgen = test_gen.flow_from_directory(test_dir,

target_size=(112, 112),

class_mode=None,

classes=class_subset,

batch_size=1,

shuffle=False,

seed=42)

Output:

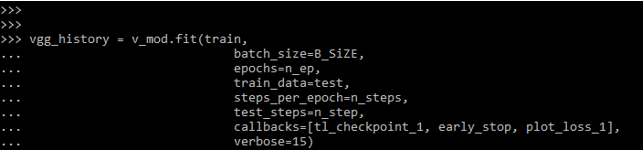

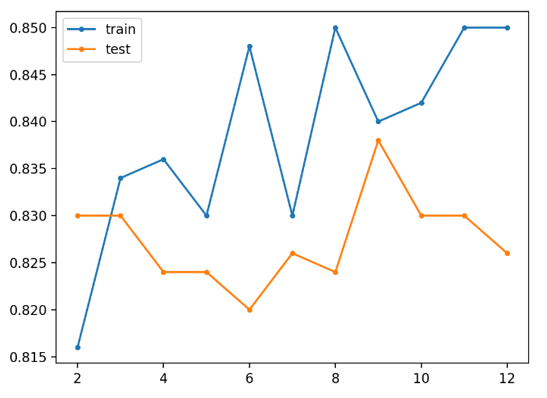

8. Feature extraction pretrained layers

In the example below, we use the pretrained layers for feature extraction. The %%time line is a Jupyter cell magic, so the fit call belongs in its own notebook cell.

Code:

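optim_1 = Adam(learning_rate=0.1)  # note: Adam's default learning rate is 0.001

input_shape = (112, 112, 3)  # matches target_size above, with 3 color channels

n_class = len(class_subset)

n_steps = traingen.samples // B_SiZE  # batches per epoch, not samples

n_step = validgen.samples // B_SiZE

n_ep = 40

# create_model is a helper that is not shown in this article

v_mod = create_model(input_shape, n_class, optim_1)

%%time

# tl_checkpoint_1, early_stop, and plot_loss_1 are callbacks assumed to be defined earlier

# batch_size is not passed here because the generator already yields batches

vgg_history = v_mod.fit(traingen,

epochs=n_ep,

validation_data=validgen,

steps_per_epoch=n_steps,

validation_steps=n_step,

callbacks=[tl_checkpoint_1, early_stop, plot_loss_1],

verbose=1)

Output: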

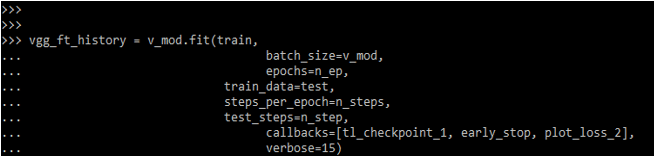

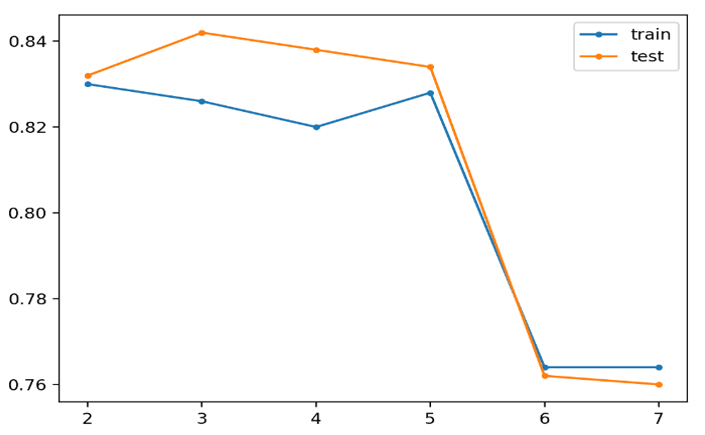
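The step values passed to fit count batches, not samples. A minimal sketch of that arithmetic follows, assuming the batch size of 64 defined earlier. The sample counts are hypothetical; the real ones come from traingen.samples and validgen.samples.

```python
def steps_for(samples, batch_size):
    """Number of full batches in one pass over `samples` items."""
    return samples // batch_size


B_SIZE = 64
train_samples, valid_samples = 7400, 2600  # hypothetical counts for illustration
print(steps_for(train_samples, B_SIZE), steps_for(valid_samples, B_SIZE))  # 115 40
```

Floor division drops the last partial batch; rounding up with math.ceil(samples / batch_size) would include it instead.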

9. Pre-trained layers for fine-tuning

In the example below, we use the pretrained layers for fine-tuning.

Code:

# batch_size is not passed here because the generator already yields batches

vgg_ft_history = v_mod.fit(traingen,

epochs=n_ep,

validation_data=validgen,

steps_per_epoch=n_steps,

validation_steps=n_step,

callbacks=[tl_checkpoint_1, early_stop, plot_loss_2],

verbose=1)

Output:

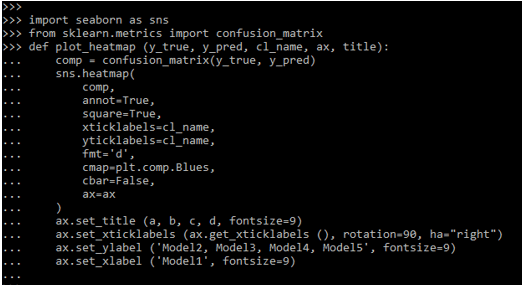

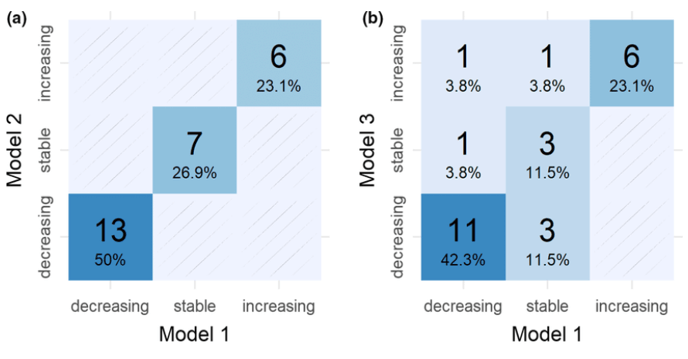
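Both training calls pass an early_stop callback. The idea behind early stopping can be sketched without Keras: training stops once the monitored validation loss has not improved for a set number of epochs. This is a simplified illustration with made-up loss values, not the Keras EarlyStopping implementation; stop_epoch and the patience of 3 are assumptions.

```python
def stop_epoch(val_losses, patience=3):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, wait = loss, 0   # improvement: remember it and reset the counter
        else:
            wait += 1              # no improvement this epoch
            if wait >= patience:
                return epoch
    return None


losses = [1.00, 0.80, 0.90, 0.85, 0.95, 0.70]  # made-up validation losses
print(stop_epoch(losses))  # 4
```

In this run the best loss so far is 0.80 at epoch 1, and three epochs pass without beating it, so training stops at epoch 4 and never reaches the later, lower value.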

10. Comparing models

In the example below, we compare the models generated above by plotting a confusion-matrix heatmap for each.

Code:

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import confusion_matrix

def plot_heatmap(y_true, y_pred, cl_name, ax, title):

    # Rows of the confusion matrix are true labels, columns are predictions

    comp = confusion_matrix(y_true, y_pred)

    sns.heatmap(comp, annot=True, square=True,

                xticklabels=cl_name,

                yticklabels=cl_name,

                fmt='d',

                cmap=plt.cm.Blues,

                cbar=False, ax=ax)

    ax.set_title(title, fontsize=9)

    ax.set_xticklabels(ax.get_xticklabels(), rotation=90, ha="right")

    ax.set_ylabel('True Label', fontsize=9)

    ax.set_xlabel('Predicted Label', fontsize=9)

# …

plt.show()

Output:

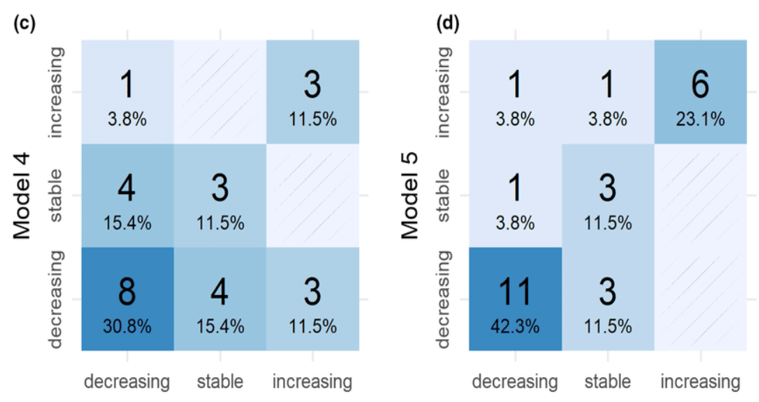
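The heatmap is drawn from a confusion matrix. A minimal pure-Python sketch of what that matrix contains follows, with rows as true labels and columns as predicted labels, which is the convention scikit-learn uses. The function name and the sample labels are made up for illustration.

```python
def confusion(y_true, y_pred, n_classes):
    """Count how often each true class (row) was predicted as each class (column)."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


y_true = [0, 0, 1, 1, 2, 2]  # made-up true labels
y_pred = [0, 1, 1, 1, 2, 0]  # made-up predictions
matrix = confusion(y_true, y_pred, 3)

# Correct predictions lie on the diagonal, so accuracy is the diagonal sum over the total.
accuracy = sum(matrix[i][i] for i in range(3)) / len(y_true)
print(matrix)  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
```

A model with a darker diagonal and lighter off-diagonal cells in its heatmap is making fewer misclassifications.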

Keras VGG16 Architecture

The VGG16 architecture has 13 convolutional layers, five max pooling layers, and three dense layers. The 13 convolutional and three dense layers are the 16 layers that carry weights. The figure below shows the Keras VGG16 architecture.

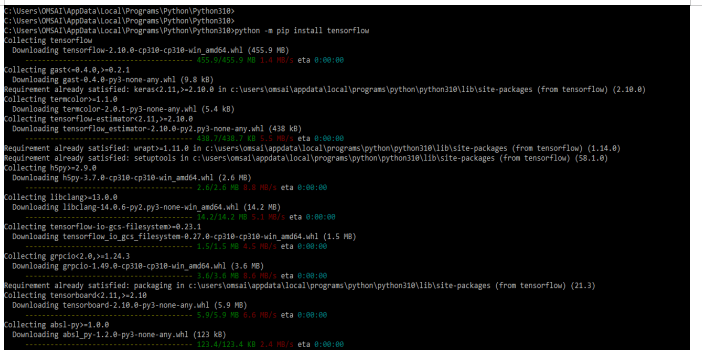
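To see how the five pooling stages shrink an image, the sketch below traces the spatial size and channel count through the convolutional part of the network. It assumes padded 3x3 convolutions that keep the size unchanged and 2x2 pooling with stride 2 that halves it, rounding down. trace_shapes is a hypothetical helper, not a Keras function.

```python
BLOCK_CHANNELS = [64, 128, 256, 512, 512]  # output channels of the five conv blocks


def trace_shapes(size):
    """Return the (height, width, channels) shape after each pooling stage."""
    shapes = []
    for channels in BLOCK_CHANNELS:
        size //= 2  # convolutions keep the size; each max pool halves it
        shapes.append((size, size, channels))
    return shapes


print(trace_shapes(224)[-1])  # (7, 7, 512)
print(trace_shapes(112)[-1])  # (3, 3, 512)
```

With the 112 x 112 target size used in the data generators, the convolutional base therefore outputs 3 * 3 * 512 = 4608 values per image.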

The training data is fed forward through the network and stopped before the fully connected (FC) layers. In some cases the network already obtains good accuracy this way. To use it, TensorFlow must be installed on the system.

Code:

python -m pip install tensorflow

Next, we import the Keras modules with the import keyword.

Code:

from keras.layers import Flatten

from pathlib import Path

from keras.preprocessing.image import ImageDataGenerator

from keras.applications.vgg16 import VGG16

FAQ

Given below are the FAQs mentioned:

Q1. What is the use of keras VGG16?

Answer: Keras VGG16 is a pretrained CNN used for image classification. In this article, it is used to classify images of food.

Q2. What is the use of transfer learning in keras VGG16?

Answer: Transfer learning is an approach in which a model trained on one machine learning task, such as Keras VGG16, is reused for a different task instead of being trained from scratch.

Q3. What is the use of the convolutional layer in keras VGG16?

Answer: The convolutional layer computes the output of nodes that are connected to local regions of the input.

Conclusion

The Keras VGG16 network is very large, with about 138 million parameters. It is available in Python through Keras as a deep learning model with pre-trained weights, and it is used for feature extraction, fine-tuning, and prediction.
