Updated March 22, 2023

Introduction to Kernel Methods in Machine Learning

Kernel methods in machine learning are a class of algorithms for pattern analysis, the task of finding and studying general types of relations (such as correlations, classifications, rankings, clusters, and principal components) in datasets. Instead of explicitly transforming the raw representation of the data into feature vectors through a user-specified feature map, they operate in a high-dimensional, implicit feature space without ever computing the coordinates of the data in that space.

Kernel methods are used for pattern analysis. In general, pattern analysis is done to find relations in datasets; these relations can be clusters, classifications, principal components, correlations, and so on. Many algorithms that solve these tasks need the data in its raw representation to be explicitly transformed into a feature vector representation, which is done via a user-specified feature map. Kernel methods, in contrast, require only a user-specified kernel, that is, a similarity function over pairs of data points.

The name kernel method comes from the fact that these algorithms use a kernel function, which allows them to operate in a high-dimensional, implicit feature space without computing the coordinates of the data in that space. Instead, they simply compute the inner products between the images of all pairs of data points in the feature space.

These operations are often computationally cheaper than explicitly computing the coordinates. This technique is known as the ‘kernel trick’. A linear model whose computations depend on the data only through inner products can be converted into a non-linear model by applying the kernel trick, that is, by replacing those inner products with a kernel function.
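To make the kernel trick concrete, here is a minimal sketch that compares an explicit feature map with the matching kernel. The degree-2 polynomial kernel, the feature map `phi`, and the two sample points are illustrative assumptions, not something prescribed by a particular library.

```python
import math

def dot(a, b):
    """Ordinary inner product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))

def phi(x):
    """Explicit degree-2 feature map for a 2-D point (illustrative choice)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly_kernel(x, y):
    """Homogeneous polynomial kernel of degree 2: k(x, y) = (x . y)^2."""
    return dot(x, y) ** 2

x, y = (1.0, 2.0), (3.0, 4.0)
explicit = dot(phi(x), phi(y))  # computes feature-space coordinates first
implicit = poly_kernel(x, y)    # stays in the 2-D input space

print(explicit, implicit)
```

Both values agree up to floating-point rounding, yet the kernel version never builds the 3-D feature vectors. For kernels whose feature space is very high-dimensional or infinite-dimensional, this shortcut is the only practical route.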

Algorithms that can operate with kernels include principal components analysis (PCA), spectral clustering, support vector machines (SVM), canonical correlation analysis, the kernel perceptron, Gaussian processes, ridge regression, linear adaptive filters, and many others. Let’s get a high-level understanding of a few of these kernel methods.

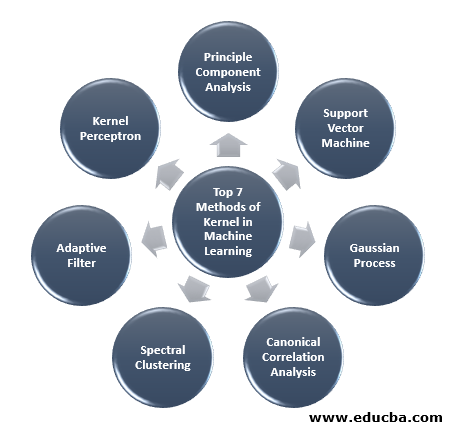

Top 7 Kernel Methods in Machine Learning

Here are the kernel methods in machine learning covered below:

1. Principal Component Analysis

Principal component analysis (PCA) is a technique for extracting structure from possibly high-dimensional data sets. It is readily performed by solving an eigenvalue problem or by using iterative algorithms that estimate the principal components. PCA is an orthogonal transformation of the coordinate system in which we describe our data. The new coordinate system is obtained by projecting onto the principal axes of the data. A small number of principal components is often sufficient to account for most of the structure in the data. One of its major applications is exploratory data analysis as a step toward building a predictive model. It is also often used to visualize genetic distance and relatedness between populations.
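As a rough sketch of the iterative route mentioned above, the following example estimates the first principal axis with power iteration on the sample covariance matrix. The function names, the iteration count, and the made-up 2-D data are assumptions for illustration; this is plain linear PCA, not a kernelized version.

```python
import math

def covariance_matrix(rows):
    """Sample covariance matrix of a list of equal-length data rows."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def first_principal_axis(cov, iterations=200):
    """Estimate the leading eigenvector and eigenvalue by power iteration."""
    d = len(cov)
    v = [1.0] + [0.5] * (d - 1)  # arbitrary non-zero starting vector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / norm for wi in w]
    # Rayleigh quotient v^T C v gives the variance along the axis.
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d))
                     for i in range(d))
    return v, eigenvalue

# Made-up points that lie close to the diagonal x1 = x2.
data = [[2.0, 1.9], [0.5, 0.7], [-1.0, -1.1], [-1.5, -1.5], [0.0, 0.0]]
cov = covariance_matrix(data)
axis, variance = first_principal_axis(cov)
explained = variance / (cov[0][0] + cov[1][1])  # share of total variance

print(axis, explained)
```

For this data the estimated axis points roughly along the diagonal and a single component accounts for nearly all of the variance, which is the sense in which a few components can capture most of the structure.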

2. Support Vector Machine

An SVM can be defined as a classifier based on a separating hyperplane, where a hyperplane is a subspace whose dimension is one less than that of its ambient space. The dimension of a mathematical space is the minimum number of coordinates required to specify any point in it, while the ambient space is the space that surrounds a mathematical object. A mathematical object, in turn, can be understood as an abstract object, which does not exist at any particular time or place but rather exists as a type of thing.
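The role of the hyperplane can be shown with a small sketch. It does not train an SVM; it only classifies points by the side of a given hyperplane they fall on. The weight vector `w`, the offset `b`, and the sample points are hand-picked, hypothetical values.

```python
def decision_value(w, b, x):
    """Signed score w . x + b; it is zero exactly on the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, b, x):
    """Assign +1 or -1 depending on the side of the hyperplane."""
    return 1 if decision_value(w, b, x) >= 0 else -1

# Hypothetical hyperplane in a 2-D ambient space: the line x1 + x2 = 1,
# which is a 1-D subspace (one dimension less than the ambient space).
w, b = (1.0, 1.0), -1.0
labels = [classify(w, b, p) for p in [(2.0, 2.0), (0.0, 0.0), (0.5, 0.5)]]

print(labels)
```

An actual SVM would choose `w` and `b` from training data so that the margin between the two classes is as large as possible, and a kernelized SVM would replace the inner product with a kernel function.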

3. Gaussian Process

The Gaussian process is named after Carl Friedrich Gauss because it is based on the notion of the Gaussian distribution (normal distribution). It is a stochastic process, which means a collection of random variables indexed by time or space. In a Gaussian process, every finite collection of those random variables has a multivariate normal distribution, i.e. every finite linear combination of them is normally distributed. Gaussian processes inherit their properties from the normal distribution and are therefore useful in statistical modeling. A machine learning algorithm that involves a Gaussian process uses lazy learning and a measure of the similarity between points (the kernel function) to predict the value at an unseen point from the training data. The prediction is not only an estimate for that point but also includes the uncertainty at that point.
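The idea of a prediction that carries its own uncertainty can be sketched with a small Gaussian process regression example. It assumes a zero-mean prior, a squared-exponential (RBF) kernel with length scale 1, and a tiny noise term for numerical stability; the helper names and the sine-wave training data are illustrative.

```python
import math

def rbf(a, b, length_scale=1.0):
    """Squared-exponential kernel: similarity decays with distance."""
    return math.exp(-((a - b) ** 2) / (2.0 * length_scale ** 2))

def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x

def gp_predict(train_x, train_y, query, noise=1e-6):
    """Posterior mean and variance of a zero-mean GP at one query point."""
    n = len(train_x)
    K = [[rbf(train_x[i], train_x[j]) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    k_star = [rbf(xi, query) for xi in train_x]
    alpha = solve(K, train_y)   # K^{-1} y
    v = solve(K, k_star)        # K^{-1} k*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    variance = rbf(query, query) - sum(k * vi for k, vi in zip(k_star, v))
    return mean, variance

train_x = [-2.0, -1.0, 0.0, 1.0, 2.0]
train_y = [math.sin(x) for x in train_x]
mean_near, var_near = gp_predict(train_x, train_y, 0.5)  # inside the data
mean_far, var_far = gp_predict(train_x, train_y, 6.0)    # far from the data

print(mean_near, var_near, mean_far, var_far)
```

Close to the training points the variance is small, while far away it returns to the prior variance of the kernel. The training data is consulted only at prediction time, which is the lazy-learning aspect described above.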

4. Canonical Correlation Analysis

Canonical correlation analysis (CCA) is a way of inferring information from cross-covariance matrices. It is also known as canonical variates analysis. Suppose we have two vectors of random variables, X = (X1, …, Xn) and Y = (Y1, …, Ym), and there are correlations among the variables. CCA then finds a linear combination of the components of X and a linear combination of the components of Y that have maximum correlation with each other.
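Written as an optimization problem, this objective can be stated as follows. The weight vectors a and b and the covariance symbols Σ_XX, Σ_YY, and Σ_XY are notation assumed here for illustration.

```latex
(a^{*}, b^{*})
  = \arg\max_{a \in \mathbb{R}^{n},\; b \in \mathbb{R}^{m}}
    \operatorname{corr}\!\left(a^{\top} X,\; b^{\top} Y\right)
  = \arg\max_{a,\, b}
    \frac{a^{\top} \Sigma_{XY}\, b}
         {\sqrt{a^{\top} \Sigma_{XX}\, a}\;\sqrt{b^{\top} \Sigma_{YY}\, b}}
```

Here Σ_XY is the cross-covariance matrix of X and Y, which is why CCA can be described as extracting information from cross-covariance matrices.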

5. Spectral Clustering

In image segmentation, spectral clustering is known as segmentation-based object categorization. Spectral clustering performs dimensionality reduction before clustering in fewer dimensions, using the spectrum (eigenvalues) of the similarity matrix of the data. It has its roots in graph theory, where the approach is used to identify communities of nodes in a graph based on the edges connecting them. The method is flexible and also allows us to cluster non-graph data.
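A minimal sketch of the idea for two clusters is shown below. It is one common variant, assumed here for illustration: build a Gaussian similarity matrix, form the unnormalized graph Laplacian L = D - W, and split the points by the sign of the eigenvector belonging to the second-smallest eigenvalue (often called the Fiedler vector). The function names, the shifted power iteration, and the 1-D sample points are all assumptions.

```python
import math

def gaussian_similarity(points, sigma=1.0):
    """Pairwise similarity matrix W for 1-D points (zero on the diagonal)."""
    n = len(points)
    return [[0.0 if i == j
             else math.exp(-((points[i] - points[j]) ** 2) / (2.0 * sigma ** 2))
             for j in range(n)] for i in range(n)]

def fiedler_vector(W, iterations=300):
    """Approximate the second-smallest eigenvector of L = D - W.

    Power iteration on (shift * I - L) finds the smallest eigenvectors of L;
    subtracting the mean removes the trivial constant eigenvector."""
    n = len(W)
    degree = [sum(row) for row in W]
    shift = 2.0 * max(degree)  # upper bound on the eigenvalues of L
    v = [i - (n - 1) / 2.0 for i in range(n)]  # any non-constant start works
    for _ in range(iterations):
        mean = sum(v) / n
        v = [vi - mean for vi in v]
        Lv = [degree[i] * v[i] - sum(W[i][j] * v[j] for j in range(n))
              for i in range(n)]
        w = [shift * v[i] - Lv[i] for i in range(n)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / norm for wi in w]
    return v

points = [0.0, 0.5, 1.0, 4.0, 4.5, 5.0]  # two visibly separate groups
W = gaussian_similarity(points)
v = fiedler_vector(W)
clusters = [0 if vi < 0 else 1 for vi in v]

print(clusters)
```

The input here is ordinary numeric data rather than a graph; the similarity matrix plays the role of a weighted graph. For more than two clusters, a typical approach is to keep several eigenvectors and run a standard clustering algorithm such as k-means on them.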

6. Adaptive Filter

An adaptive filter consists of a linear filter whose transfer function is controlled by variable parameters, together with a method for adjusting those parameters according to an optimization algorithm. Because of the complexity of these optimization algorithms, almost all adaptive filters are digital filters. An adaptive filter is needed in applications where some parameters of the desired processing operation are not known in advance or are changing.

A closed-loop adaptive filter uses a cost function, which serves as the criterion for the optimum performance of the filter. The cost function determines how to modify the filter transfer function so as to reduce the cost on the next iteration. One of the most common cost functions is the mean square of the error signal.
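The closed loop can be sketched with the least-mean-squares (LMS) update, a widely used rule that takes a small gradient step on the squared error at each sample. The function name `lms_filter`, the tap count, the step size, and the synthetic signals are assumptions for illustration; this is the plain linear filter, without a kernel.

```python
def lms_filter(inputs, desired, num_taps=2, step_size=0.1):
    """Least-mean-squares adaptive FIR filter.

    Returns the final tap weights and the error signal at every step."""
    weights = [0.0] * num_taps
    errors = []
    for n in range(num_taps - 1, len(inputs)):
        window = [inputs[n - k] for k in range(num_taps)]  # x[n], x[n-1], ...
        output = sum(w * x for w, x in zip(weights, window))
        error = desired[n] - output  # feedback signal of the closed loop
        # Gradient step that reduces the instantaneous squared error.
        weights = [w + step_size * error * x for w, x in zip(weights, window)]
        errors.append(error)
    return weights, errors

# Hypothetical unknown system to identify: d[n] = 0.5 * x[n] - 0.3 * x[n-1].
inputs = [((7 * n) % 11 - 5) / 5.0 for n in range(500)]  # deterministic signal
desired = [0.0] + [0.5 * inputs[n] - 0.3 * inputs[n - 1]
                   for n in range(1, len(inputs))]
weights, errors = lms_filter(inputs, desired)

print(weights, errors[-1])
```

In this noise-free setup the weights approach 0.5 and -0.3 and the error shrinks toward zero. The step size trades convergence speed against stability, so it usually has to be tuned for the signal at hand.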

7. Kernel Perceptron

In machine learning, the kernel perceptron is a variant of the popular perceptron learning algorithm that can learn kernel machines, i.e. non-linear classifiers that use a kernel function to compute the similarity between unseen samples and training samples. The algorithm was invented in 1964, making it the first kernel classification learner.
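The following is a minimal sketch of a kernel perceptron on the XOR problem, which no linear classifier can solve in the input space. The RBF kernel, its `gamma` value, the epoch limit, and the function names are illustrative assumptions.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel between two points given as tuples."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def score(x, points, labels, alpha, kernel):
    """Weighted similarity of x to the training points seen as mistakes."""
    return sum(a * yj * kernel(xj, x)
               for a, xj, yj in zip(alpha, points, labels))

def train_kernel_perceptron(points, labels, kernel, epochs=20):
    """Return the mistake counts alpha, one per training point."""
    alpha = [0] * len(points)
    for _ in range(epochs):
        mistakes = 0
        for i, (x, y) in enumerate(zip(points, labels)):
            if y * score(x, points, labels, alpha, kernel) <= 0:
                alpha[i] += 1  # misclassified: give this sample more weight
                mistakes += 1
        if mistakes == 0:      # a full pass without errors: stop early
            break
    return alpha

def predict(x, points, labels, alpha, kernel):
    return 1 if score(x, points, labels, alpha, kernel) > 0 else -1

# XOR labels: the two classes cannot be separated by a line in 2-D.
points = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]
labels = [-1, -1, 1, 1]
alpha = train_kernel_perceptron(points, labels, rbf_kernel)
predictions = [predict(p, points, labels, alpha, rbf_kernel) for p in points]

print(alpha, predictions)
```

Unlike the ordinary perceptron, no weight vector is stored. The model is the set of training samples together with their mistake counts, and every prediction is a kernel-weighted vote over those samples.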

Most of the kernel algorithms discussed here are based on convex optimization or eigenproblems and are statistically well-founded. Their statistical properties are typically analyzed using statistical learning theory.

The application areas of kernel methods are diverse and include geostatistics, kriging, inverse distance weighting, 3D reconstruction, bioinformatics, chemoinformatics, information extraction, and handwriting recognition.

Conclusion

I have summarized some of the terminology and types of kernel methods in machine learning. Due to lack of space, this article is by no means comprehensive; it is meant to give you an understanding of what a kernel method is and a short summary of the main types. Working through it should nevertheless help you take a first step into the field of machine learning.
