Updated April 13, 2023

Introduction to Kubernetes Load Balancer

Load balancing is the method of distributing network traffic or client requests across multiple servers. It is a critical part of a solution and must be set up properly; otherwise, clients cannot reach the servers even when every server is working fine, because the problem lies at the load balancer. When used efficiently, a load balancer helps maximize scalability and high availability.

Kubernetes offers many choices for load balancing, each with a tradeoff, so decide on your priorities and choose wisely. Load balancing is a simple, straightforward concept in many environments, but with containers it calls for more precise decisions and special care.

What is Kubernetes Load Balancer?

In Kubernetes, you must understand a few basic concepts before moving on to advanced concepts like load balancing. These basic concepts include:

- A Pod is a set of containers that are functionally related to each other.

- A Service is a set of related Pods that provide the same functionality.

- Kubernetes creates and destroys Pods automatically, so a Pod's IP address is not stable.

- Because Pods don't have stable IP addresses, Services must have a stable IP address.

- A request from any external resource is directed toward a Service.

- A Service dispatches each request it receives to an available Pod.

- Use NodePort instead of a load balancer if you only need to allow external traffic to specific ports on the Pods running an application.

- Consider Ingress when you are optimizing traffic to many servers but need to control the cost charged by external load balancer providers such as AWS, Azure, and GCP. In return, you must accept that Ingress has a more complex configuration and that you will be managing the Ingress controllers that implement your rules.

In Kubernetes, we have two different types of load balancing.

- Internal load balancing balances traffic across the containers that provide the same functionality. The most basic load balancing in Kubernetes is load distribution, which is done at the dispatch level by kube-proxy, the component that manages the virtual IPs assigned to Services. Its default mode is iptables, which uses rule-based random selection. That is not really a load balancer, though, unlike Kubernetes Ingress, which works internally with a controller running in a customized Kubernetes Pod: a set of rules plus a daemon that applies them.

- Because Ingress is internal to Kubernetes, it has access to Kubernetes functionality. As a result, the configurable rules defined in an Ingress resource allow a high level of detail and granularity, and they can be modified to suit the requirements of an application and its prerequisites.

- External load balancing distributes external traffic directed at a Service among the available Pods, because an external load balancer cannot reach Pods or containers directly. An external load balancer is available either in the cloud, if your environment runs there, or in any other environment that supports one. Clouds such as AWS, Azure, and GCP provide external load balancers.
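To make the configurable Ingress rules described in the list above more concrete, here is a minimal sketch that writes an Ingress manifest to a file. The file name `ingress-file.yaml`, the host `app.example.com`, and the Service names `shop-service` and `blog-service` are all assumptions made for illustration; none of them come from this article, and the manifest only has an effect on a cluster where an Ingress controller is installed.

```shell
# Write a hypothetical Ingress manifest that routes two URL paths
# to two different Services (all names here are illustrative).
cat > ingress-file.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-ingress
spec:
  rules:
  - host: app.example.com
    http:
      paths:
      # Requests whose path starts with /shop go to shop-service.
      - path: /shop
        pathType: Prefix
        backend:
          service:
            name: shop-service
            port:
              number: 80
      # Requests whose path starts with /blog go to blog-service.
      - path: /blog
        pathType: Prefix
        backend:
          service:
            name: blog-service
            port:
              number: 80
EOF
```

A file like this would be applied with `kubectl apply -f ingress-file.yaml`. Routing two paths through one Ingress is what lets a single entry point stand in for several external load balancers.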

How to Use Kubernetes Load Balancer?

To use a load balancer available in your host environment, update the Service configuration file so that its type field is set to LoadBalancer, and specify a value for the port field. When you deploy this configuration file, you are given an externally accessible IP address that sends traffic to the designated port on your cluster nodes. The nodes are reached through the external load balancer supplied by an external source such as a cloud provider.

Alternatively, you can pass the --type=LoadBalancer flag when creating the Service on the command line with kubectl. We will see some examples in this section.

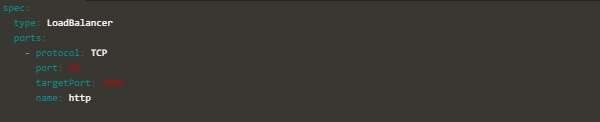
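For the command-line route, one likely candidate is `kubectl expose`, which accepts a `--type` flag. The sketch below wraps it in a small helper function; the function name `expose_with_load_balancer`, the Deployment name `sample-app`, and the port numbers are assumptions chosen for illustration, not values taken from this article.

```shell
# Expose an existing Deployment through a LoadBalancer Service.
# Arguments: deployment name, Service name, Service port, container port.
expose_with_load_balancer() {
  kubectl expose deployment "$1" \
    --name="$2" \
    --type=LoadBalancer \
    --port="$3" \
    --target-port="$4"
}

# Example call (hypothetical names and ports):
#   expose_with_load_balancer sample-app sample-load-balancer 80 8080
```

The helper only assembles the command, so the Deployment it names must already exist in the cluster before you call it.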

You can add an external load balancer to the cluster either by creating a new configuration file or by adding the specification to your existing Service configuration file. In the example below, the type and ports are defined, with type: LoadBalancer specified.

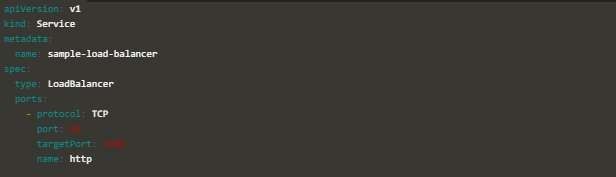

Now take an example of a Service file like the one below, where the type is specified in the Service configuration file:

Now apply your configuration file as shown below:
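As a text sketch of such a Service file, the snippet below writes a minimal manifest to `service-file.yaml`. The file name and the Service name `sample-load-balancer` match the kubectl commands used in this section; the selector label `app: sample-app` and the port numbers 80 and 8080 are assumptions for illustration and should be replaced with your application's values.

```shell
# Write a minimal LoadBalancer Service manifest to service-file.yaml.
cat > service-file.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: sample-load-balancer
spec:
  # Ask the host environment (for example, a cloud) for an external load balancer.
  type: LoadBalancer
  # Assumed label: must match the labels on the Pods that should receive traffic.
  selector:
    app: sample-app
  ports:
  - protocol: TCP
    port: 80          # port exposed by the Service
    targetPort: 8080  # assumed container port inside the Pods
EOF
```

The `port` value is what external clients connect to, while `targetPort` is the port the containers listen on. Keeping both explicit makes the mapping easy to check later in the Service details.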

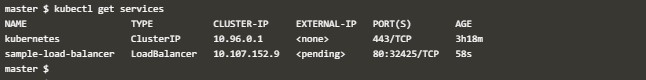

kubectl apply -f service-file.yaml

You can then check the available load balancers and related Services as shown below. Note that in this example the load balancer's EXTERNAL-IP is shown as pending.

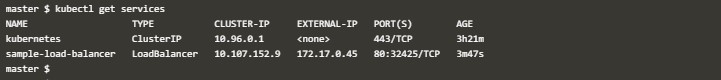

kubectl get services

When the creation of the load balancer is complete, the EXTERNAL-IP column shows an external IP address, as below. Also note that the PORTS column uses the format incoming port:node-level port. The container port mentioned in the specification file is not shown here.

kubectl get services

To get more details about a load balancer created from the configuration file, use kubectl as below:

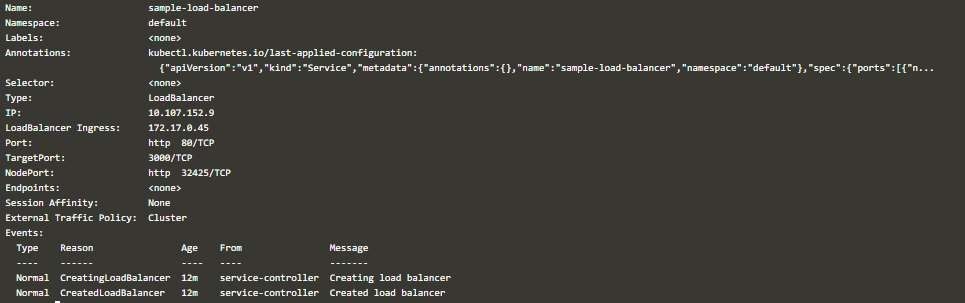

kubectl describe service sample-load-balancer

The output shows all the details of the Service, such as:

- Load Balancer Name

- Selector

- Ingress IP

- Internal IP

- Node Port

- Target Port for containers

- Service Port

- Namespace

- Any label specified

- Annotations
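Some of the fields listed above can also be read individually with kubectl's `-o jsonpath` output option, which is handy while the external IP is still pending. The helper names below (`service_external_ip`, `service_node_port`, `wait_for_external_ip`) are hypothetical, and the sketch assumes the provider reports an IP address; some providers, such as AWS, report a hostname instead, in which case `.hostname` would replace `.ip` in the query.

```shell
# Print the external (ingress) IP of a Service; prints nothing while it is pending.
service_external_ip() {
  kubectl get service "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Print the node port assigned to the Service's first port entry.
service_node_port() {
  kubectl get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Poll until an external IP is assigned, or give up.
# Arguments: Service name, max attempts (default 30), delay in seconds (default 5).
wait_for_external_ip() {
  name=$1
  max_tries=${2:-30}
  delay=${3:-5}
  tries=0
  while [ "$tries" -lt "$max_tries" ]; do
    ip=$(service_external_ip "$name")
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    tries=$((tries + 1))
    sleep "$delay"
  done
  echo "no external IP for $name after $max_tries attempts" >&2
  return 1
}
```

For example, `wait_for_external_ip sample-load-balancer` would print the address once the load balancer has been provisioned, and return a non-zero status if it never appears within the allowed attempts.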

Importance of Kubernetes Load Balancer

A LoadBalancer Service is the standard way to expose your Service to external clients. On cloud platforms such as GCP and AWS, we can use their external load balancer services. These platforms also let you create a Network Load Balancer, which gives you a single IP address through which all external traffic is forwarded to your Services.

However, there is no filtering and no routing of traffic, which means any kind of traffic can pass through the load balancer. This is useful because the load balancer is not restricted to a single protocol or set of protocols.

Conclusion

A load balancer plays an important role in mixed environments where traffic is both external and internal and where, besides handling external traffic for Services, traffic must also be routed from one Service to another within the same network block. Choose either an external load balancer, according to what your cloud provider supports, or Ingress as an internal load balancer to save the cost of multiple external load balancers.
