Updated November 20, 2023

What is Load Balancing in Cloud Computing?

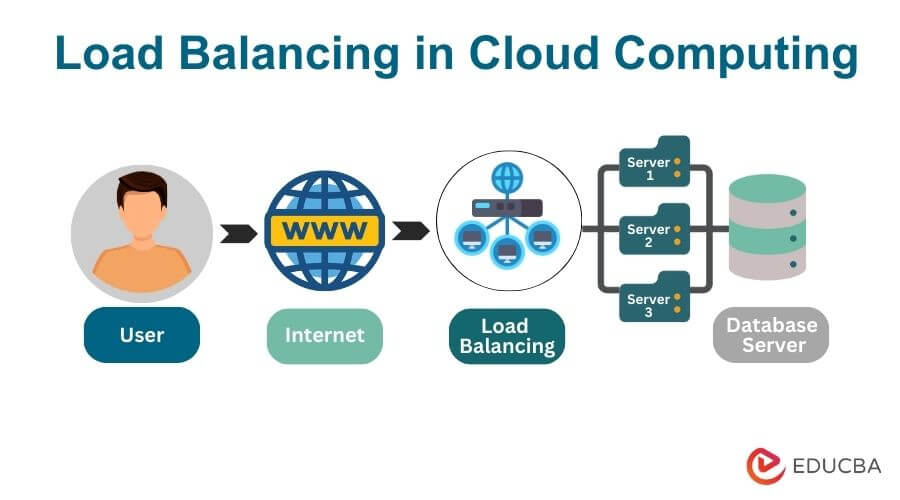

Load balancing in cloud computing is a technique for distributing incoming network traffic or workload across multiple servers or resources within a cloud infrastructure. Its primary goal is to spread workloads evenly among servers so that no server is overloaded while others sit underutilized. This distribution helps optimize resource utilization, improve system performance, and enhance the overall reliability and availability of applications and services.

Load balancing plays a crucial role in managing the distribution of tasks and requests efficiently in a cloud computing environment where resources are often dynamic and scalable. This process helps prevent any individual server or component from becoming a bottleneck, improving application reliability and responsiveness.

Table of Contents

- Introduction

- How does it work?

- Implementation

- Load balancing techniques and algorithms

- Cloud load balancing as a service

- Advantages

How does Load Balancing work in Cloud Computing?

In cloud computing, load balancing allocates incoming network traffic or computational workloads among several servers or resources to optimize performance and ensure high availability. Here’s a step-by-step overview:

- Incoming Traffic: When a user requests to access an application or service hosted in the cloud, the traffic is directed to a load balancer.

- Load Balancer Evaluation: The load balancer receives the incoming traffic and evaluates various factors, such as the current state of servers, their health, and the load balancing algorithm in use.

- Load Balancing Algorithm: The load balancer applies a load balancing algorithm (e.g., Round Robin, Least Connections) to determine which server should handle the incoming request. This decision is based on server availability, response time, or session persistence.

- Traffic Distribution: The load balancer distributes the incoming traffic to the selected server. This ensures an even distribution of workloads, preventing any single server from overloading while others remain underutilized.

- Server Processing: The selected server processes the incoming request, executing the necessary tasks or computations associated with the user’s query.

- Response to the User: The server generates a response to the user’s request and sends it back through the load balancer to reach the user.

- Dynamic Adjustment: In dynamic cloud environments, the load balancer continuously monitors the health and performance of individual servers. If a server becomes unavailable or experiences issues, the load balancer redirects traffic to healthy servers to maintain high availability.

- Scaling: In response to changing workloads, load balancing works alongside auto-scaling, which adds or removes servers based on demand. The load balancer then includes new servers in its distribution and stops sending traffic to removed ones, so resources are dynamically adjusted to handle varying levels of traffic.

- Monitoring and Analytics: Load balancers frequently integrate monitoring tools and analytics to track server and system performance. This data supports optimization, troubleshooting, and performance analysis.

- Security Measures: Load balancers may implement security measures such as SSL/TLS termination, in which the load balancer decrypts client traffic before passing requests to the servers. Encrypting traffic between clients and the load balancer is crucial for protecting sensitive data in transit.
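The request flow above can be sketched in a few lines of Python. This is a minimal illustration, not any provider's implementation: the `Server` and `LoadBalancer` classes, the server names, and the `healthy` flag are all assumptions made for the example. It uses Round Robin selection and skips servers marked unhealthy.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Server:
    name: str              # hypothetical server name
    healthy: bool = True   # would be set by health checks in a real system

    def handle(self, request: str) -> str:
        # Stand-in for real application work.
        return f"{self.name} handled {request}"


class LoadBalancer:
    """Toy round-robin balancer that skips unhealthy servers."""

    def __init__(self, servers: list[Server]) -> None:
        self.servers = servers
        self._rotation = cycle(servers)

    def pick(self) -> Server:
        # Try each server at most once per request.
        for _ in range(len(self.servers)):
            server = next(self._rotation)
            if server.healthy:
                return server
        raise RuntimeError("no healthy servers available")

    def route(self, request: str) -> str:
        # Forward the request and relay the server's response to the caller.
        return self.pick().handle(request)


servers = [Server("app-1"), Server("app-2"), Server("app-3")]
lb = LoadBalancer(servers)
first_three = [lb.route(f"req-{i}") for i in range(3)]

servers[1].healthy = False  # simulate a failed health check on app-2
after_failure = [lb.route(f"req-{i}") for i in range(3, 7)]
print(first_three)
print(after_failure)
```

Once `app-2` is marked unhealthy, later requests alternate between `app-1` and `app-3`, which mirrors the dynamic adjustment step.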

Implementation of Load Balancing in Cloud Computing

Implementing load balancing in cloud computing involves several essential steps. Here’s a quick rundown.

- Select a Load Balancer: Choose a load balancing service or solution provided by the cloud platform (e.g., AWS Elastic Load Balancing, Azure Load Balancer, Google Cloud Load Balancing).

- Deploy Application Servers: Set up multiple instances of your application or service across different servers or virtual machines within the cloud environment.

- Configure Health Checks: Define health checks to monitor the status of each application server. The load balancer regularly checks the servers to ensure they are responsive and healthy.

- Define Load Balancing Algorithm: Choose a load-balancing algorithm that suits your application requirements. Common algorithms include round robin, least connections, weighted round robin, and least response time.

- Configure Load Balancer Settings: Set up the load balancer with the necessary parameters, such as port configurations, protocols (HTTP, HTTPS, TCP), and any SSL certificates, if required.

- Adjust Scaling Policies: Integrate load balancing with auto-scaling policies to dynamically adjust the number of application instances based on traffic or resource utilization.

- Testing and Optimization: Conduct thorough testing to ensure the load-balancing configuration works effectively under different conditions. Optimize settings based on performance and resource utilization metrics.

- Monitor and Analyze: Implement monitoring tools to track the performance of the load balancers and individual servers. Monitor metrics such as response time, error rates, and server health.

- Security Considerations: Implement security measures such as firewall rules and access controls to protect the load balancer and the underlying servers from potential threats.

- Documentation: Document the load balancing configuration, including details such as the chosen algorithm, server configurations, and any special considerations. This documentation is crucial for troubleshooting and future maintenance.

It is essential to remember that the steps required to configure load balancing may differ depending on the cloud provider and the type of load-balancing solution you opt for. Many cloud providers offer managed load-balancing services that streamline configuration and maintenance processes. To obtain detailed and platform-specific instructions, it is always recommended to refer to the documentation provided by your chosen cloud provider.
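As one illustration of the health check step, managed load balancers commonly let you require several consecutive failed probes before a server is taken out of rotation, and several consecutive successes before it is added back. The sketch below models that idea in Python. The class name, field names, and default thresholds are hypothetical and do not correspond to any specific provider's settings.

```python
from dataclasses import dataclass


@dataclass
class HealthTracker:
    """Tracks one server's health from a stream of probe results."""

    unhealthy_threshold: int = 3  # consecutive failures before removal (assumed)
    healthy_threshold: int = 2    # consecutive successes before re-adding (assumed)
    healthy: bool = True
    _streak: int = 0              # consecutive probes that disagree with the current state

    def record(self, probe_ok: bool) -> bool:
        if probe_ok == self.healthy:
            # The probe agrees with the current state, so reset the streak.
            self._streak = 0
            return self.healthy
        self._streak += 1
        needed = self.healthy_threshold if probe_ok else self.unhealthy_threshold
        if self._streak >= needed:
            # Enough consecutive contrary probes: flip the state.
            self.healthy = probe_ok
            self._streak = 0
        return self.healthy


tracker = HealthTracker()
probes = [True, False, False, True, False, False, False, True, True]
states = [tracker.record(ok) for ok in probes]
print(states)
```

Requiring a streak rather than reacting to a single probe keeps one slow response from removing an otherwise healthy server.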

Load balancing techniques and algorithms

Load balancing techniques can be broadly categorized into static and dynamic approaches:

Static Load Balancing Techniques

1. Round Robin Load Balancing:

- Description: Requests are distributed to servers in a circular sequence.

- Adaptability: Static, as it doesn’t consider server load dynamically.

2. Weighted Round Robin Load Balancing:

- Description: Servers are assigned different weights, determining the proportion of requests they receive.

- Adaptability: Generally static, as administrators typically set the weights in advance and they do not change at runtime.

3. Random Load Balancing:

- Description: Requests are sent to random servers.

- Adaptability: Static, as server load changes do not influence the randomness.

4. IP Hash Load Balancing:

- Description: The source IP address determines which server receives the request.

- Adaptability: Static, as it relies on fixed IP addresses for routing decisions.
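Three of the static techniques above can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the server names and the client IP are made up, the weighted variant uses naive list expansion rather than the smoother interleaving some production balancers use, and SHA-256 is just one possible hash choice.

```python
import hashlib
from itertools import cycle

SERVERS = ["app-1", "app-2", "app-3"]  # hypothetical server names


def round_robin(servers):
    """Yield servers in a fixed circular order."""
    return cycle(servers)


def weighted_round_robin(weights):
    """Yield servers in proportion to their weights.

    Naive expansion: a server with weight 3 appears three times per cycle.
    """
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


def ip_hash(client_ip, servers):
    """Map a client IP to a server; the same IP always maps to the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(SERVERS)
rr_picks = [next(rr) for _ in range(5)]

wrr = weighted_round_robin({"app-1": 3, "app-2": 1})
wrr_picks = [next(wrr) for _ in range(8)]

# Repeated lookups for one (made-up) client IP land on a single server.
sticky = {ip_hash("203.0.113.7", SERVERS) for _ in range(10)}
print(rr_picks, wrr_picks, sticky)
```

None of these functions looks at server load, which is what makes them static: the outcome depends only on the request order, the configured weights, or the client address.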

Dynamic Load Balancing Techniques

1. Least Connections Load Balancing:

- Description: Traffic is directed to the server with the fewest active connections.

- Adaptability: Dynamic, as it requires continuous monitoring of connections and adjusts based on real-time conditions.

2. Least Response Time Load Balancing:

- Description: Traffic is directed to the server with the fastest response time.

- Adaptability: Dynamic, as it adjusts routing based on measured server response times.

3. Adaptive Load Balancing:

- Description: Dynamically adjusts the load balancing algorithm based on server performance.

- Adaptability: Highly dynamic, as it continuously adapts to changes in server load and network conditions.

4. Content-based Load Balancing:

- Description: Routing decisions depend on the type or content of the incoming traffic.

- Adaptability: Dynamic, as it involves inspecting the content of each request and making decisions based on real-time factors.

Briefly, static load balancing techniques use predetermined rules that do not change during runtime, while dynamic load balancing techniques adapt to real-time conditions and adjust routing decisions based on current server states.
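To make the contrast concrete, here is a minimal Python sketch of two dynamic techniques, Least Connections and Least Response Time. The class names, server names, and the smoothing factor `alpha` are assumptions chosen for illustration. Real load balancers track this state per connection and often combine several signals.

```python
class LeastConnections:
    """Pick the server with the fewest in-flight requests."""

    def __init__(self, servers):
        self.active = {name: 0 for name in servers}

    def acquire(self):
        # min() returns the first server on ties (dict insertion order).
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when the request finishes so the count stays accurate.
        self.active[server] -= 1


class LeastResponseTime:
    """Pick the server with the lowest smoothed response time."""

    def __init__(self, servers, alpha=0.5):
        self.alpha = alpha  # weight of the newest sample (assumed value)
        self.avg_ms = {name: 0.0 for name in servers}

    def pick(self):
        return min(self.avg_ms, key=self.avg_ms.get)

    def observe(self, server, elapsed_ms):
        # Exponentially weighted moving average of observed latency.
        old = self.avg_ms[server]
        self.avg_ms[server] = (1 - self.alpha) * old + self.alpha * elapsed_ms


lc = LeastConnections(["app-1", "app-2"])
a = lc.acquire()
b = lc.acquire()
lc.release(a)       # the first request completes
c = lc.acquire()    # goes back to the server that just freed up

lrt = LeastResponseTime(["app-1", "app-2"])
lrt.observe("app-1", 120.0)
lrt.observe("app-2", 40.0)
fastest = lrt.pick()
print(a, b, c, fastest)
```

Both classes update their state on every request, so the same input sequence can produce different routing depending on how quickly servers finish their work. Note that in this sketch servers start with an average of zero, so untried servers are preferred until they have been measured.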

Cloud load balancing as a service

Cloud Load Balancing as a Service (LBaaS) is a comprehensive solution provided by cloud providers to distribute incoming network traffic across multiple servers automatically and efficiently, optimizing performance and ensuring high availability. This service eliminates the need for users to manage the underlying load-balancing infrastructure, offering a seamless and scalable solution. With LBaaS, users can easily configure load balancing settings, such as protocols, ports, and health checks, through the cloud provider’s interface. The service supports various load-balancing algorithms, including Round Robin and Least Connections, enabling users to tailor traffic distribution to specific application requirements.

Additionally, LBaaS often includes monitoring tools and analytics, allowing users to track performance metrics and make data-driven optimizations. With a pay-as-you-go pricing model, users benefit from cost efficiency, paying only for the load-balancing resources they consume. Cloud LBaaS abstracts the complexities of load balancing, making it accessible to users without extensive networking expertise. It is an integral part of creating resilient and high-performance applications in the cloud.

Advantages of Load Balancing in Cloud Computing

Load balancing in cloud computing provides various advantages for application efficiency, performance, and dependability. Here are some significant advantages:

- Enhanced Performance: Load balancing evenly distributes workloads across multiple servers, preventing any one server from becoming a bottleneck. As a result, overall application performance and response times improve.

- Optimized Resource Utilization: By distributing tasks intelligently, load balancing maximizes the use of available resources. It helps avoid situations where some servers are underutilized while others are overloaded, optimizing resource efficiency.

- Scalability: Load balancing supports the dynamic allocation of resources based on demand. As traffic increases, the system can seamlessly add new servers, and load balancers can distribute incoming requests, ensuring scalability without requiring manual intervention.

- High Availability: Load balancing contributes to increased availability by redirecting traffic to healthy servers in the event of a server failure. This keeps applications running and minimizes the impact of potential outages.

- Fault Tolerance: Load balancing enhances fault tolerance by providing redundancy. If one server fails, the system redirects traffic to other healthy servers, thereby reducing the risk of service disruptions.

- Improved User Experience: Consistent and efficient load distribution leads to a more stable and responsive user experience. Users are less likely to experience delays or errors due to uneven server loads.

- Cost Efficiency: Efficient resource utilization and the ability to scale dynamically contribute to cost efficiency. Users only pay for the resources they consume, and the infrastructure can be adjusted based on actual demand.

- Adaptability to Dynamic Workloads: Load balancing dynamically allocates resources based on real-time demand, adapting to changing workloads. This flexibility is especially valuable in environments with variable traffic patterns.

- Global Load Distribution: Load balancing can be configured for global distribution, allowing applications to be deployed across multiple data centers or regions. This improves availability, reduces latency, and enhances the user experience globally.

- Simplified Management: Load balancing services in the cloud often come with easy-to-use interfaces and automated management features. This simplifies the configuration and maintenance of load-balancing settings, reducing the administrative burden on users.

Conclusion

Load balancing is vital in optimizing performance, ensuring resource efficiency, and maintaining high availability in dynamic cloud computing environments. By intelligently distributing workloads across multiple servers, load balancing improves the overall user experience, adapts to changing demands, and contributes to cost efficiency. The advantages of load balancing, including fault tolerance and scalability, make it an integral component for building resilient and responsive applications in the cloud. As cloud technology continues to evolve, effective load balancing remains a key strategy for organizations seeking to maximize the benefits of their cloud infrastructure.

FAQ

Q1. How does load balancing contribute to high availability?

Answer: Load balancing supports high availability by redirecting traffic to healthy servers when one fails. This reduces downtime and service interruptions, giving users a more dependable experience.

Q2. What are the security considerations for load balancing in the cloud?

Answer: Security measures for load balancing include firewall rules, SSL termination, and access restrictions. Ensuring secure communication between the load balancer and servers is critical to the overall system’s security.

Q3. How does load balancing contribute to cost efficiency in the cloud?

Answer: Load balancing improves resource utilization, so users get more out of the resources they pay for. Combined with dynamic scaling, it helps avoid over-provisioning and lowers unnecessary costs associated with underutilized servers.

Q4. What is the role of load balancing in hybrid cloud environments?

Answer: Load balancing facilitates the smooth allocation of traffic between on-premises and cloud-based resources in hybrid cloud systems. This contributes to the overall performance and availability of the hybrid infrastructure.
