Updated March 15, 2023

Introduction to Logstash AWS

Logstash is a data processing pipeline that collects data from several sources, transforms it on the fly, and sends it to our preferred destination. Elasticsearch, an open-source search and analytics engine, is a common destination for such a pipeline. On AWS, Amazon OpenSearch Service (the successor to Amazon Elasticsearch Service) provides fully managed OpenSearch and Elasticsearch clusters that connect easily to Logstash.

What is Logstash AWS?

- We can create an Amazon OpenSearch Service domain and start loading data into it from our Logstash server. The AWS Free Tier lets us try Logstash with Amazon OpenSearch Service at no cost.

- To make importing data easier, Amazon OpenSearch Service has built-in integrations with Amazon Kinesis Data Firehose, Amazon CloudWatch Logs, and AWS IoT.

- We can also build our own data pipeline with open-source tools such as Apache Kafka and Fluentd.

- All common Logstash input plugins are supported, including the Amazon S3 input plugin. Depending on our Logstash version, authentication method, and whether our domain runs Elasticsearch or OpenSearch, OpenSearch Service also supports the following Logstash output plugins:

- logstash-output-amazon_es, which signs Logstash events with IAM credentials and exports them to OpenSearch Service.

- logstash-output-opensearch, which at the time of writing supports only basic authentication. To allow OpenSearch domains to use it, select Enable compatibility mode in the console when creating a domain or upgrading it to an OpenSearch version.

- This setting makes the domain report its version as 7.10 so that the plugin keeps working. If we use the AWS CLI or the configuration API instead, we set override_main_response_version to true in the advanced settings.

- On existing domains, we can also use the OpenSearch cluster settings API to enable or disable compatibility mode.

- For an Elasticsearch OSS domain, we can use either the standard Elasticsearch output plugin or the logstash-output-amazon_es plugin, depending on our authentication strategy.
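The plugin choice in the list above depends on two inputs: the engine the domain runs and how it authenticates. The following is a minimal sketch that encodes those rules and builds the request body for the cluster settings API that toggles compatibility mode. The helper names choose_output_plugin and compatibility_mode_body are hypothetical; the setting name compatibility.override_main_response_version is the cluster setting behind the console's compatibility mode option.

```python
import json


def choose_output_plugin(engine: str, auth: str) -> str:
    """Pick a Logstash output plugin following the rules in the list above.

    engine: "opensearch" or "elasticsearch" (an Elasticsearch OSS domain)
    auth:   "iam" (signed requests) or "basic" (HTTP basic authentication)
    """
    if auth == "iam":
        # IAM-based access: every request must be signed with IAM credentials.
        return "logstash-output-amazon_es"
    if auth == "basic":
        if engine == "opensearch":
            # Per the text above, the domain needs compatibility mode enabled.
            return "logstash-output-opensearch"
        if engine == "elasticsearch":
            return "logstash-output-elasticsearch"
    raise ValueError(f"unsupported combination: engine={engine!r}, auth={auth!r}")


def compatibility_mode_body(enabled: bool = True) -> str:
    """JSON body for PUT _cluster/settings that turns compatibility mode on or off."""
    return json.dumps(
        {"persistent": {"compatibility.override_main_response_version": enabled}}
    )
```

The body is meant to be sent as a `PUT _cluster/settings` request to the domain endpoint by a user who is allowed to change cluster settings; sending `compatibility_mode_body(False)` turns the setting off again.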

Step-by-Step Guide to Logstash AWS

- If our OpenSearch Service domain uses fine-grained access control with HTTP basic authentication, the configuration is the same as for any other OpenSearch cluster.

- The input in this sample configuration file comes from the open-source version of Filebeat.

- The following configuration sends events to the OpenSearch Service domain:

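```
input {
  # Receive events from Filebeat on port 5044
  beats {
    port => 5044
  }
}

output {
  # Send events to the domain using HTTP basic authentication
  opensearch {
    hosts => ["https://domain-endpoint:443"]
    index => "message"
    user => "logstash"
    password => "Logstash@123"
  }
}
```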
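Before starting Logstash, it can help to confirm that the user and password in the configuration above are accepted by the domain. The following is a minimal sketch that builds a GET request to the domain root with an HTTP basic authentication header, the same scheme the opensearch output uses for user and password. The function name build_auth_check is hypothetical, and domain-endpoint is the placeholder from the sample configuration, not a real host.

```python
import base64
import urllib.request


def build_auth_check(endpoint: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request to the domain root carrying HTTP basic auth credentials."""
    # Basic auth is base64("user:password") in the Authorization header.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        endpoint.rstrip("/") + "/",
        headers={"Authorization": f"Basic {token}"},
        method="GET",
    )


# Builds the request only; nothing is sent over the network here.
req = build_auth_check("https://domain-endpoint:443", "logstash", "Logstash@123")
# To actually test the credentials, send it: urllib.request.urlopen(req, timeout=10)
```

A 200 response to the sent request suggests the credentials work; a 401 or 403 points to a problem with the user, the password, or the user's permissions.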

- The configuration varies depending on the Beats application and the use case.

- All requests to OpenSearch Service must be signed using IAM credentials if our domain has an IAM-based domain access policy or fine-grained access control with an IAM master user.

- In this scenario, the logstash-output-amazon_es plugin is the simplest way to sign requests from Logstash OSS.

- After configuring the OpenSearch Service domain, we install the logstash-output-amazon_es plugin (for example, with bin/logstash-plugin install logstash-output-amazon_es).

- Next, we export the IAM credentials (for example, as the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables). Finally, we change the configuration file to use the plugin.
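Signing with IAM credentials means AWS Signature Version 4 (SigV4), which logstash-output-amazon_es performs for every request. The following is a minimal sketch of the key-derivation part of SigV4, to show what "signed using IAM credentials" involves; it is not the plugin's code. It assumes the service name "es" used for OpenSearch Service domains and omits building the canonical request and string to sign, which the plugin handles. The function names are hypothetical.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    """HMAC-SHA256 of a UTF-8 message, returned as raw bytes."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key: str, date_stamp: str, region: str, service: str = "es") -> bytes:
    """Derive a SigV4 signing key by chaining HMACs: date, region, service, 'aws4_request'.

    date_stamp is the request date as YYYYMMDD; "es" is assumed as the service
    name for Amazon OpenSearch Service domains.
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def signature(string_to_sign: str, key: bytes) -> str:
    """Hex-encoded HMAC-SHA256 signature that goes into the Authorization header."""
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


# Uses the example secret key from AWS documentation, not a real credential.
example_key = signing_key("wJalrXUtnFEMI/K7MDENG+bPxRfiCYEXAMPLEKEY", "20230315", "eu-west-1")
```

Because the key is scoped to a date, region, and service, a signature computed for one region is rejected in another, which is why the plugin needs the region setting alongside the credentials.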

How to Use Logstash AWS?

- Logstash provides many output plugins that let us route data wherever we want, which opens up many downstream possibilities.

- Logstash's pluggable architecture offers more than 200 plugins. We can mix, match, and orchestrate different inputs, filters, and outputs so that they work together in one pipeline.

- While Elasticsearch is our preferred output for searching and analyzing data, it is far from the only option.

- Logstash offers an API for plugin development and a plugin generator that help us get started and share our work.

- The following steps show how to use Logstash with AWS:

- First, forward logs to OpenSearch Service over the secure port 443.

- Second, update the configuration for Logstash, Filebeat, and OpenSearch Service.

- Third, set up Filebeat on the Amazon Elastic Compute Cloud (Amazon EC2) instance that we want to use as the log source, and check that its YAML configuration file is installed and set up correctly.

- Fourth, set up Logstash on a separate Amazon EC2 instance to send the logs.
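Since the logs travel to OpenSearch Service over port 443, a quick check from the Logstash instance that the domain endpoint accepts TCP connections on that port can rule out security group or network ACL problems early. The following is a minimal sketch using only the Python standard library; the function name can_reach is hypothetical. A successful connection only shows that the network path is open, not that authentication will succeed.

```python
import socket


def can_reach(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the host name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

For example, `can_reach("domain-endpoint")` run on the Logstash instance, with the placeholder replaced by the real domain endpoint host name, returns False when the domain's security group does not allow inbound traffic on port 443 from that instance.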

Install Logstash on AWS

The following steps show how to install Logstash on AWS.

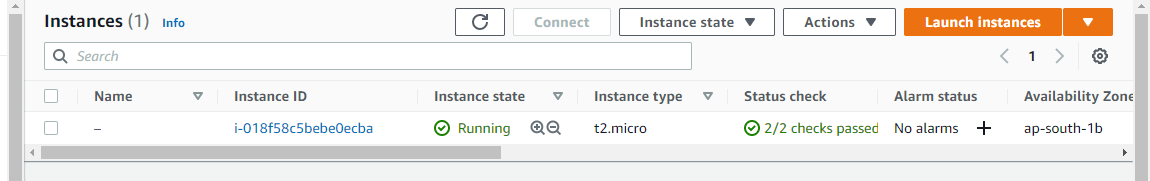

- Create a new EC2 instance –

In this step, we create a new EC2 instance to install Logstash on. In the example below, we have created a t2.micro instance.

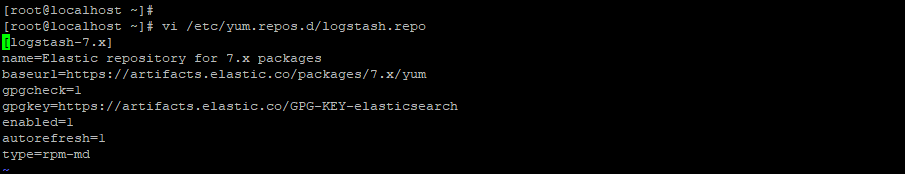

- Create a yum repository for installing Logstash –

In this step, we create a yum repository definition so that we can install Logstash on the Amazon EC2 instance.

```
# vi /etc/yum.repos.d/logstash.repo
```

```
[logstash-7.x]
name=Elastic repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
```

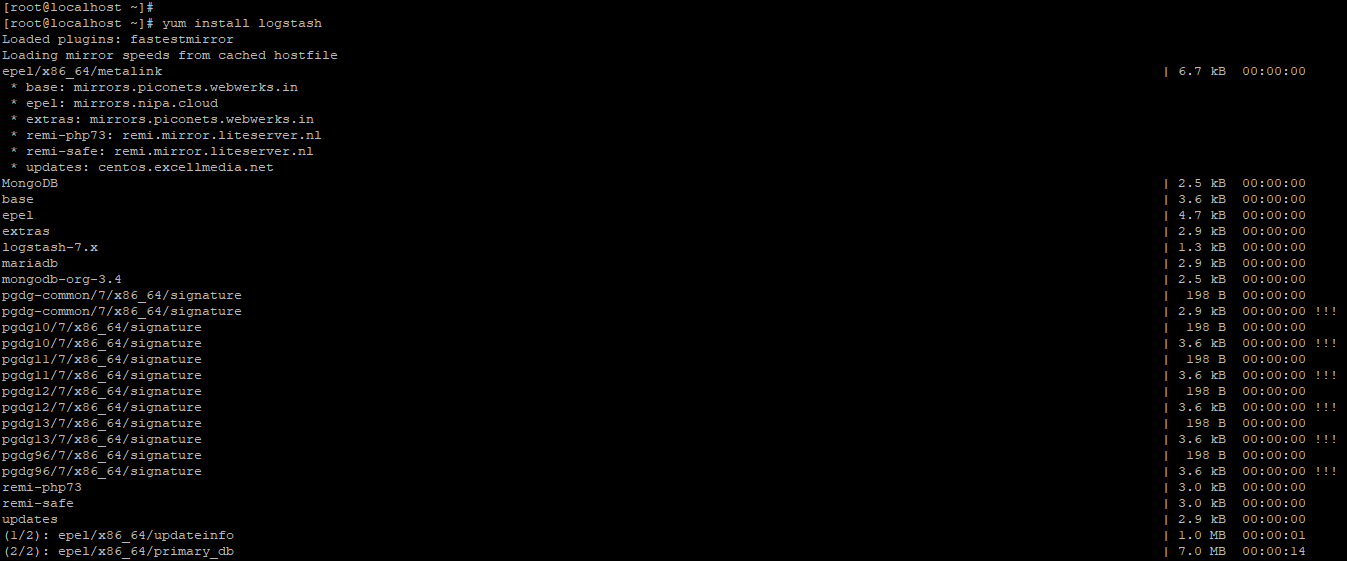
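When EC2 instances are provisioned by a script (for example, user data) rather than by hand in vi, the same repository definition can be generated. The following is a minimal sketch that renders the repository file shown above with Python's configparser; the name render_repo is hypothetical, and writing the result to /etc/yum.repos.d/logstash.repo still requires root.

```python
import configparser
import io

REPO_SECTION = "logstash-7.x"


def render_repo() -> str:
    """Render the Elastic 7.x yum repository definition as INI text."""
    parser = configparser.ConfigParser()
    # Same keys and values as the repository file above.
    parser[REPO_SECTION] = {
        "name": "Elastic repository for 7.x packages",
        "baseurl": "https://artifacts.elastic.co/packages/7.x/yum",
        "gpgcheck": "1",
        "gpgkey": "https://artifacts.elastic.co/GPG-KEY-elasticsearch",
        "enabled": "1",
        "autorefresh": "1",
        "type": "rpm-md",
    }
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()
```

Running as root, `pathlib.Path("/etc/yum.repos.d/logstash.repo").write_text(render_repo())` would create the file; configparser writes `key = value` lines, which yum reads the same way as `key=value`.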

- Install Logstash on the newly created EC2 instance –

In this step, we install Logstash on the new instance using the yum command.

```
# yum install logstash
```

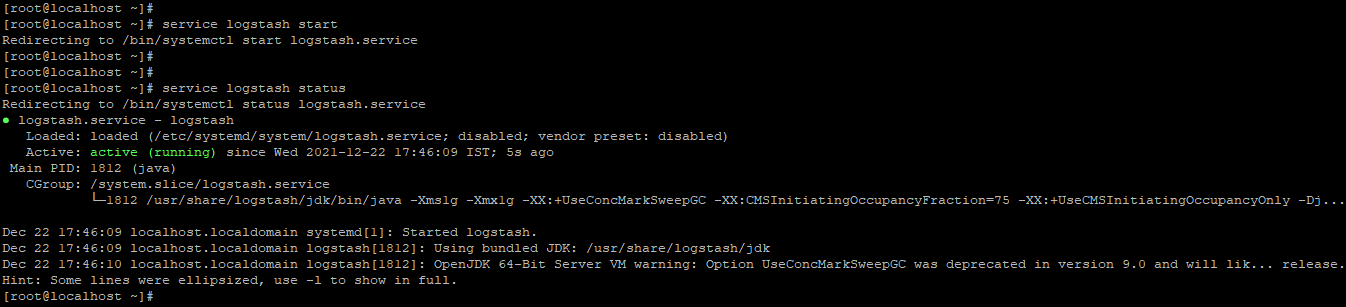

- Start Logstash and check its status –

In this step, we start Logstash and check its status as follows.

```
# service logstash start
# service logstash status
```
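Besides `service logstash status`, Logstash serves a monitoring API, by default on http://localhost:9600, which only answers once Logstash has finished starting. The following is a minimal sketch that polls that API until it responds and returns the JSON it sends back; the function name wait_for_logstash and the timeout values are hypothetical choices.

```python
import json
import time
import urllib.request


def wait_for_logstash(url: str = "http://localhost:9600/", timeout: float = 60.0,
                      interval: float = 2.0) -> dict:
    """Poll the Logstash monitoring API until it responds, then return its JSON body.

    Raises TimeoutError if no successful response arrives within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                return json.load(resp)
        except OSError:
            # URLError, HTTPError, and refused connections are all OSError subclasses;
            # Logstash may still be starting, so wait and retry until the deadline.
            if time.monotonic() >= deadline:
                raise TimeoutError(f"no response from {url} within {timeout} seconds")
            time.sleep(interval)
```

Run on the EC2 instance right after `service logstash start`, the returned dictionary contains node details such as the Logstash version, which also confirms that the expected package was installed.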

Set Up a Logstash Server for AWS

The following steps show how to set up Logstash for AWS.

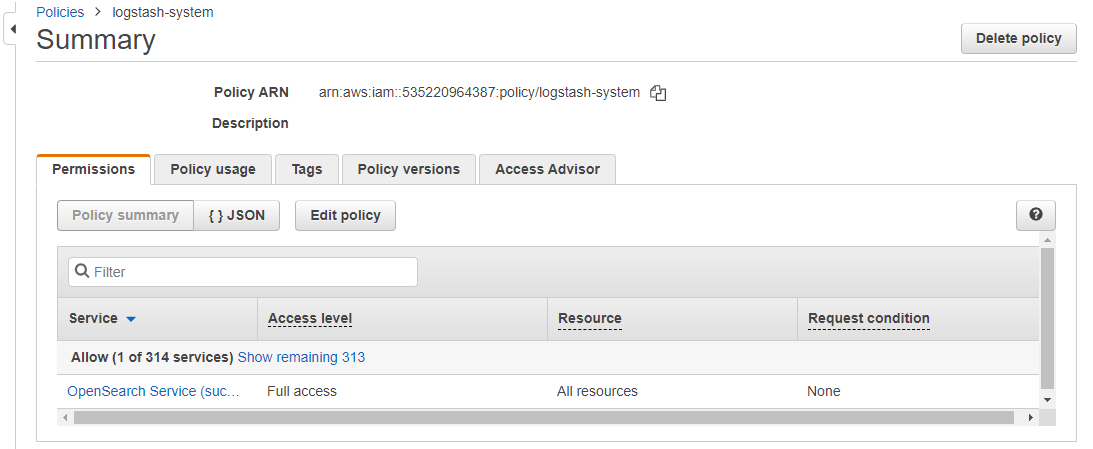

- Create an IAM policy –

In this step, we create an IAM policy for the Logstash system.

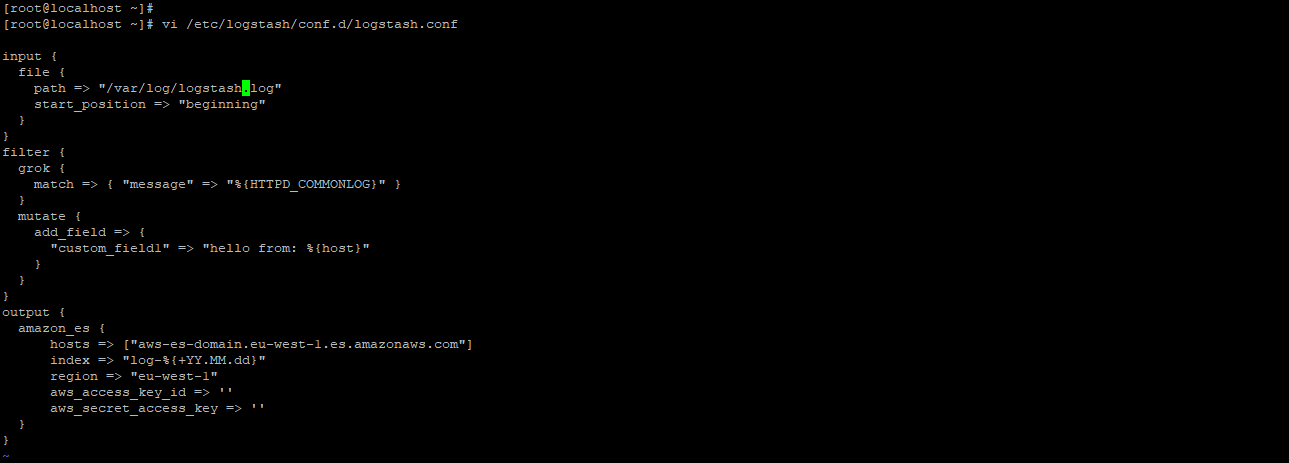
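The IAM policy is what allows the signed requests from Logstash to reach the domain. The following is a minimal sketch of an identity-based policy document that allows HTTP GET, HEAD, POST, and PUT requests to a single domain. The function name, the account ID 123456789012, and the domain name my-domain are hypothetical placeholders, and the action list is a starting point to adjust rather than a definitive set.

```python
import json


def logstash_domain_policy(region: str, account_id: str, domain: str) -> dict:
    """Identity-based policy that lets Logstash send HTTP requests to one domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # HTTP methods Logstash typically needs for reading metadata and indexing.
                "Action": ["es:ESHttpGet", "es:ESHttpHead", "es:ESHttpPost", "es:ESHttpPut"],
                # The trailing /* covers all paths (indices, _bulk, ...) on the domain.
                "Resource": f"arn:aws:es:{region}:{account_id}:domain/{domain}/*",
            }
        ],
    }


# Paste this output into the JSON editor when creating the policy in the IAM console.
policy_json = json.dumps(logstash_domain_policy("eu-west-1", "123456789012", "my-domain"), indent=2)
```

Attaching the policy to the EC2 instance's role lets the amazon_es output pick up credentials from the instance profile instead of storing access keys in the configuration file.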

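- Create a Logstash configuration file –

```
# vi /etc/logstash/conf.d/logstash.conf
```

```
input {
  file {
    path => "/var/log/logstash.log"
    # Read the file from the start the first time it is seen
    start_position => "beginning"
  }
}

filter {
  grok {
    # No match pattern is set yet, so this filter currently has no effect
  }
  mutate {
    add_field => {
      "custom_field1" => "hello from: %{host}"
    }
  }
}

output {
  amazon_es {
    hosts => ["aws-es-domain.eu-west-1.es.amazonaws.com"]
    index => "log-%{+YY.MM.dd}"
    region => "eu-west-1"
    # Optional: set both keys, or leave them out to use the default AWS
    # credential chain (environment variables, credentials file, instance profile)
    # aws_access_key_id => ""
    # aws_secret_access_key => ""
  }
}
```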

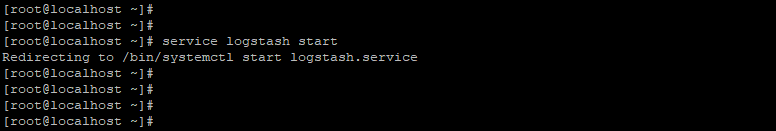
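The setting index => "log-%{+YY.MM.dd}" creates one index per day, named from each event's @timestamp, which Logstash keeps in UTC. The following is a minimal sketch that computes the index name a given event time should land in, which helps when searching for a specific day's data. It assumes the Joda-Time pattern YY.MM.dd corresponds to the strftime pattern %y.%m.%d (two-digit year); the function name daily_index is hypothetical.

```python
from datetime import datetime, timezone


def daily_index(event_time: datetime, prefix: str = "log-") -> str:
    """Return the index name that index => "log-%{+YY.MM.dd}" should produce.

    The date part is taken from the event time converted to UTC, which is
    assumed to match how Logstash formats @timestamp.
    """
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    return prefix + event_time.astimezone(timezone.utc).strftime("%y.%m.%d")
```

Because the conversion is to UTC, an event logged late in the evening in a timezone west of UTC lands in the next day's index, which can look like missing data when searching only the local date.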

- Start Logstash and check the logs –

```
# service logstash start
# tail -f /var/log/logstash/logstash-plain.log
```
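With a package install, Logstash writes its own log to /var/log/logstash/logstash-plain.log by default, and with the default log layout each line starts with `[timestamp][LEVEL][logger]`, where the level is padded to five characters (for example `[WARN ]`). The following is a minimal sketch that pulls out WARN, ERROR, and FATAL lines, which is where problems such as rejected credentials or an unreachable domain usually show up. The function names are hypothetical, and the sample lines in the test are illustrative rather than real Logstash output.

```python
import re
from pathlib import Path

# Matches the level field of lines like "[2023-03-15T10:00:00,000][WARN ][logger] message".
LEVEL_RE = re.compile(r"^\[[^\]]+\]\[(?P<level>[A-Z]+)\s*\]")


def problem_lines(lines, levels=("WARN", "ERROR", "FATAL")):
    """Yield log lines whose level is one of `levels`, without the trailing newline."""
    for line in lines:
        m = LEVEL_RE.match(line)
        if m and m.group("level") in levels:
            yield line.rstrip("\n")


def scan_log(path="/var/log/logstash/logstash-plain.log"):
    """Return all warning and error lines from a Logstash log file."""
    with Path(path).open(encoding="utf-8", errors="replace") as fh:
        return list(problem_lines(fh))
```

Lines that do not start with the bracketed header, such as continuation lines of a stack trace, are skipped, so the result is a short summary rather than the full context around each error.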

Conclusion

Logstash is a data processing pipeline that collects data from several sources, transforms it on the fly, and sends it to our preferred destination, such as an Amazon OpenSearch Service domain. For an Elasticsearch OSS domain, we can use either the standard Elasticsearch output plugin or the logstash-output-amazon_es plugin, depending on our authentication strategy.
