Updated March 16, 2023

Introduction to Logstash CSV

Logstash CSV is a filter plugin for Logstash. Its latest version at the time of writing is v3.1.1, released in June 2021. The filter takes a field that contains CSV data, parses it, and stores the values as individual fields, using the column names if they are specified. In this article, we take a closer look at Logstash CSV through the following subtopics: an introduction to CSV, how to use Logstash CSV, creating Logstash CSV, CSV configuration, and a conclusion.

The CSV filter takes an event field containing CSV data, parses it, and stores the values as individual fields. It can parse data that uses any specified separator, not only commas; the comma is simply the default.

The CSV filter plugin behaves the same way regardless of ECS (Elastic Common Schema) compatibility. The one difference is that when ECS compatibility is enabled and the target option is not set, the plugin issues a warning. To avoid conflicts with the schema, set the target option explicitly.
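As a rough sketch of this advice, the filter below turns on ECS compatibility and sets a target so that the parsed columns are nested under one field instead of landing at the root of the event. The field name `[article]` is a hypothetical choice made for this illustration, not something the plugin requires.

```conf
filter {
  csv {
    ecs_compatibility => "v1"
    # Hypothetical target field; pick a name that does not clash with ECS fields.
    target => "[article]"
  }
}
```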

How to use Logstash CSV?

The Logstash CSV filter plugin is used by configuring it in a Logstash pipeline configuration file (for example, logstash.conf), which has three sections (input, filter, and output) that we fill in as per our processing requirement. The standard steps to follow are:

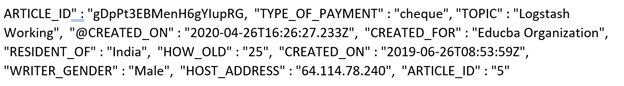

- Create a CSV file in which the values are separated by commas (or another separator of your choice) and fields are optionally enclosed in quote characters. For demonstration purposes, we have created the file educba_articles, whose contents are shown in the image:

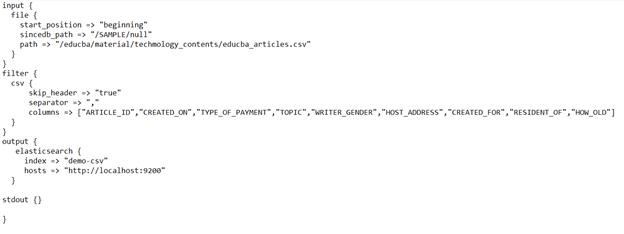

- Describe in a Logstash configuration file how the CSV data should be imported. Create a config file and use its three sections (input, filter, and output) to give the details of each step. The contents of the configuration file that we have created are shown below:

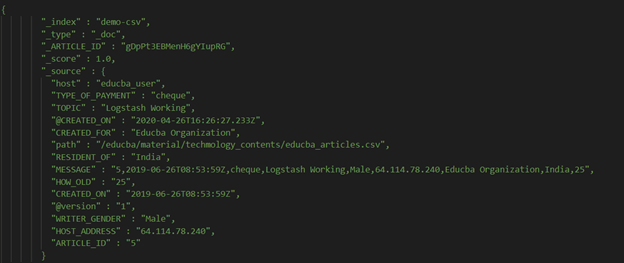

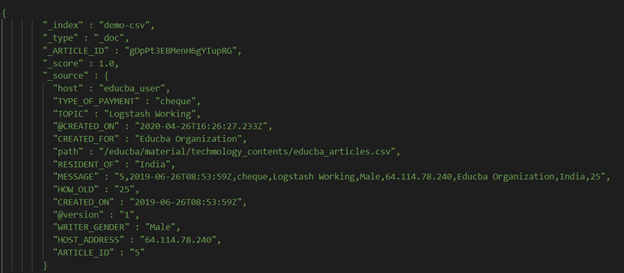

- Run Logstash, passing the configuration file you have created for CSV processing on the command line. Logstash then applies those settings while reading, parsing, and storing the data.
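For a quick experiment with the three sections, a minimal sketch such as the one below may help. It assumes you paste CSV lines into the terminal rather than reading a file, and the file name csv-test.conf is a hypothetical name used only for this example. Assuming an archive installation, it can be started with `bin/logstash -f csv-test.conf` from the Logstash directory.

```conf
# Read lines typed or pasted into the terminal.
input {
  stdin {}
}

# Parse each line as CSV using the filter's default settings.
filter {
  csv {}
}

# Print the resulting event in a readable form.
output {
  stdout { codec => rubydebug }
}
```

Because no columns option is set here, the filter falls back to generated field names such as column1 and column2.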

Creating Logstash CSV

We have a sample CSV file named educba_articles.CSV, which contains details about articles. Let us write a configuration that uses the CSV filter to parse this data. We put the following content in our configuration file:

input {

file {

start_position => "beginning"

sincedb_path => "/SAMPLE/null"

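# Note: the file input reads from the local filesystem, so replace this URL with the absolute path to a local copy of the file.

path => "https://cdn.educba.com/educba/material/techmology_contents/educba_articles.CSV"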

}

}

filter {

csv {

skip_header => "true"

separator => ","

columns => ["ARTICLE_ID","CREATED_ON","TYPE_OF_PAYMENT","TOPIC","WRITER_GENDER","HOST_ADDRESS","CREATED_FOR","RESIDENT_OF","HOW_OLD"]

}

}

output {

elasticsearch {

index => "demo-csv"

hosts => "http://localhost:9200"

}

stdout {}

}

Running Logstash with this configuration prints each parsed event to the console through the stdout output and also sends it to the Elasticsearch instance at localhost:9200.
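If some columns hold numbers, the filter's convert option can store them as numbers rather than strings. The fragment below is only a sketch: it assumes that ARTICLE_ID and HOW_OLD contain whole numbers, which is a guess based on the column names rather than something confirmed by the sample file.

```conf
filter {
  csv {
    skip_header => true
    separator => ","
    columns => ["ARTICLE_ID","CREATED_ON","TYPE_OF_PAYMENT","TOPIC","WRITER_GENDER","HOST_ADDRESS","CREATED_FOR","RESIDENT_OF","HOW_OLD"]
    # Assumption: these two columns contain whole numbers.
    convert => {
      "ARTICLE_ID" => "integer"
      "HOW_OLD" => "integer"
    }
  }
}
```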

Logstash CSV Configuration

The CSV filter supports the following configuration settings:

| Configuration Setting | Optional/Required | Type of input |
| --- | --- | --- |
| Columns | Optional | Array |
| Autogenerate column names | Optional | Boolean |
| Convert | Optional | Hash |
| Auto-detect column names | Optional | Boolean |
| Separator | Optional | String |
| Quote char | Optional | String |
| ECS compatibility | Optional | String |
| Target | Optional | String |
| Skip empty rows | Optional | Boolean |
| Source | Optional | String |
| Skip header | Optional | Boolean |
| Skip empty columns | Optional | Boolean |
| Id | Optional | String |
| Add field | Optional | Hash |
| Enable metric | Optional | Boolean |
| Add tag | Optional | Array |
| Remove tag | Optional | Array |
| Periodic flush | Optional | Boolean |
| Remove field | Optional | Array |

As the table shows, none of the configuration options is required. Set an option only when you want to change its default value and behavior. Let us look at some of the most important options in detail:

- Separator – This option takes a string value and defaults to a comma (","). It specifies the character or string that separates the columns in the CSV data. To use a tab as the separator, set the value to the actual tab character rather than the escape sequence \t.

- Skip empty rows or columns – These two options each accept a Boolean value and default to false. To skip empty rows or empty columns, set the corresponding option to true.

- Target – This option takes a string value and has no default. It names the field in which the parsed CSV data is placed. When it is not set, the data is written to the root of the event.

- Quote char – This option takes a string value and defaults to the double quote ("). It specifies the character used to quote fields in the CSV data.

- Source – This option takes a string value and defaults to "message". It names the field whose CSV content is parsed and expanded into a data structure.
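The options described above can be combined in one filter block. The following is a hedged sketch rather than a recommended setup: the semicolon separator, the single-quote quote character, and the target field name `[parsed]` are all hypothetical values chosen for illustration.

```conf
filter {
  csv {
    source => "message"          # field holding the raw CSV line (this is the default)
    separator => ";"             # for tab-separated data, type a literal tab between the quotes
    quote_char => "'"            # fields are wrapped in single quotes instead of double quotes
    skip_empty_columns => true   # drop columns that have no value
    skip_empty_rows => true      # ignore rows that are empty
    target => "[parsed]"         # hypothetical field that receives the parsed columns
  }
}
```

Leaving out any of these lines keeps that option at its default value.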

Conclusion

Logstash CSV is a filter plugin for Logstash that takes input containing CSV data, parses it, and stores the values as fields as required.
