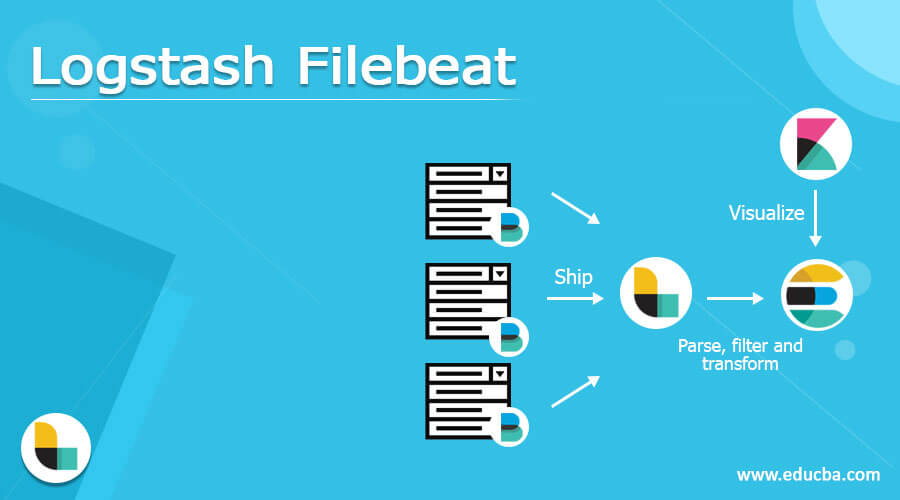

Introduction to Logstash Filebeat

Filebeat and Logstash can be used together to centralize and forward log data. Filebeat is a lightweight shipper that reads log files from the files, directories, and folders you point it at and forwards their contents with minimal overhead. Logstash, in turn, gathers that data, parses it, and augments (enriches) it before it is stored.

What is Logstash Filebeat?

Filebeat is a lightweight shipper for forwarding and centralizing log data. It runs as an agent on your servers, monitors the log files or locations that you specify, collects log events, and forwards them to Elasticsearch or Logstash for indexing. Filebeat is based on the Logstash Forwarder source code and replaces Logstash Forwarder as the method for tailing log files and forwarding them to Logstash. This introduced some major changes; in particular, Logstash needs the Beats input plugin to receive data from Filebeat.

Installation of Logstash Filebeat

Filebeat is a log shipper that belongs to the Beats family: lightweight shippers that are installed on hosts to send different kinds of data into the ELK Stack for analysis. Each Beat is dedicated to shipping a different type of data, such as log events, host metrics, and so forth. Installing and using Filebeat involves the following steps.

1. Install Filebeat on each system that you want to monitor.

2. Specify the locations of the log files in the Filebeat configuration file.

3. Parse the log data into fields and send it to the Elastic Stack.

4. On Windows, download and install Filebeat with the following steps.

5. Download Filebeat as a zip file from the following URL:

https://www.elastic.co/downloads/beats/filebeat

6. For older versions, use the past-releases page instead, for example:

https://www.elastic.co/downloads/past-releases/filebeat-7-0-0

7. Extract the contents of the zip file to a local drive.

8. Rename the extracted filebeat-<version> directory to Filebeat.

9. Open a PowerShell prompt with Administrator rights.

10. From the PowerShell prompt, change to the Filebeat directory and install Filebeat as a Windows service:

PS <drive path>\Filebeat> .\install-service-filebeat.ps1

11. This script registers Filebeat as a Windows service, so it can then be run as a service.

12. If script execution is disabled on the system, set the execution policy for the current session when running the script:

PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

Logstash Filebeat Configuration

Next, we configure Filebeat to send data to Logstash. The prerequisite is a Logstash pipeline configuration that listens for incoming Beats connections and indexes the received events into Elasticsearch, where they are stored and can later be retrieved by index. Logstash provides input plugins for reading many different types of input; for this guide, we create a pipeline that receives events through the Beats input and sends them to the Elasticsearch output.

To use Logstash for additional processing of the collected data, we then configure Filebeat to use Logstash as its output. We do this in the Filebeat configuration file by disabling the Elasticsearch output (commenting it out) and enabling the Logstash output (uncommenting the Logstash section).

We enable the Logstash output in filebeat.yml as follows:

```yaml
# Send events to Logstash listening on port 5044 on this machine
output.logstash:
  hosts: ["127.0.0.1:5044"]
```

This configures Filebeat to send its output to Logstash. The hosts option lists the Logstash servers to connect to, each with its port; if no port is given, Filebeat uses the default port 5044. Logstash, in turn, must be configured to listen on that port for incoming Beats connections.
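Because Filebeat allows only one output to be enabled at a time, switching to Logstash amounts to commenting out one section of filebeat.yml and uncommenting the other. A minimal sketch of that part of the file, assuming Logstash runs on the same machine as Filebeat and listens on port 5044:

```yaml
# Elasticsearch output disabled by commenting it out
#output.elasticsearch:
#  hosts: ["localhost:9200"]

# Logstash output enabled; Filebeat would use port 5044 if none were given
output.logstash:
  hosts: ["127.0.0.1:5044"]
```

After editing the file, running `.\filebeat.exe test config` and `.\filebeat.exe test output` from the Filebeat directory is one way to check that the file parses and that the Logstash host is reachable.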

Open the filebeat.yml file. In the inputs section, set the paths of the log files that you want to send to Elasticsearch, and set the input's enabled option to true, as shown below:

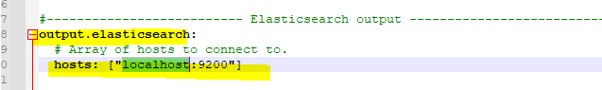
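The input settings in the screenshot can also be written out as text. A sketch of the relevant part of filebeat.yml for Filebeat 7.x, which uses the log input type; the Windows path is a hypothetical example and should be replaced with the folders your application actually writes to:

```yaml
filebeat.inputs:
- type: log
  # The default filebeat.yml ships this input with enabled: false
  enabled: true
  # Glob patterns for the files to read, one entry per location
  paths:
    - /var/log/*.log
    - C:\myapp\logs\*.log   # hypothetical Windows path
```

In later Filebeat releases the filestream input type is recommended over log, so the type line may differ depending on the version you install.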

Finally, check the output section. If Filebeat sends data directly to Elasticsearch rather than through Logstash, set the Elasticsearch output hosts here, as shown below:

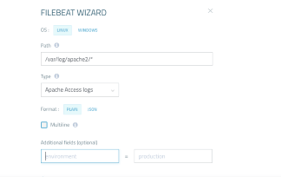
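For the direct-to-Elasticsearch case, the output section looks roughly like the following sketch. localhost:9200 assumes a local Elasticsearch node; the commented credentials are placeholders, and only one output section may be active in the file at a time:

```yaml
# Send events straight to Elasticsearch (the Logstash output must stay commented out)
output.elasticsearch:
  hosts: ["localhost:9200"]
  # Uncomment and adjust if the cluster uses TLS and authentication
  #protocol: "https"
  #username: "elastic"
  #password: "changeme"
```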

Logstash Filebeat Wizard

The Filebeat wizard, provided by Logz.io, makes it easier to define a Filebeat configuration file and to understand the workflow. Its main purpose is to avoid syntax errors and other common configuration issues: the result is generated as an automatically formatted YAML file. The wizard is accessed via the Log Shipping -> Filebeat page. There, users enter the paths of the files they want to ship, which can be logs or other text formats such as CSV or TXT files, and specify a log type for each.

We also need to specify the operating system, the log types, the file paths, and so on, so that the logs can be read from those folders. On Windows, the installer package is in .msi format.
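Whatever tool generates it, the result is an ordinary filebeat.yml input section. As a rough illustration only, here is a hand-written input that tags events with a log type using Filebeat's fields option; the field name log_type, its value, and the path are hypothetical, and the file that the Logz.io wizard actually produces may use different settings:

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - C:\myapp\exports\*.csv     # hypothetical CSV export folder
  # Custom metadata added to every event from this input
  fields:
    log_type: myapp_csv          # hypothetical log type label
  # Put log_type at the top level of the event instead of under "fields"
  fields_under_root: true
```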

Logstash Filebeat Modules

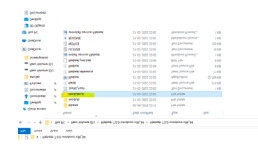

Filebeat modules provide the easiest way to get started with common log formats, because they come with default configurations. A module also brings Elasticsearch ingest pipeline definitions, Kibana dashboards, and other assets for monitoring the logs, and it is enabled through a configuration file. Modules are configured in the modules.d directory.

The screenshot above shows the list of modules in the modules.d directory. We can enable or disable a specific module with the modules enable and modules disable commands.
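Filebeat reads the files in modules.d because filebeat.yml points at that directory. A sketch of the corresponding setting as it appears in the default 7.x filebeat.yml; disabled modules are the files ending in .yml.disabled, which this glob does not match:

```yaml
filebeat.config.modules:
  # Load every enabled module file from modules.d
  path: ${path.config}/modules.d/*.yml
  # Set to true to pick up changes to module files without restarting Filebeat
  reload.enabled: false
```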

From PowerShell, we can enable a module with a command like the following:

PS > .\filebeat.exe modules enable nginx

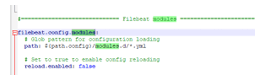
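The modules enable command renames modules.d/nginx.yml.disabled to modules.d/nginx.yml. A sketch of that file with both nginx filesets turned on, assuming the module's default log locations match your installation:

```yaml
# modules.d/nginx.yml
- module: nginx
  # Access log fileset
  access:
    enabled: true
  # Error log fileset
  error:
    enabled: true
```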

The default module configurations assume that the logs are in the usual locations for the environment, and the module sends the collected data to the configured output. We can also configure modules directly in the filebeat.yml configuration file, for example:

```yaml
filebeat.modules:
# The nginx module has two filesets: access and error
- module: nginx
  access:
    enabled: true
  error:
    enabled: true
```

This configuration enables the nginx module's access and error filesets from the filebeat.yml file.
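When the logs are not in the default locations a module assumes, each fileset accepts a var.paths override. A sketch for nginx; the paths below are hypothetical examples. It is generally simplest to configure a given module in only one place (either modules.d or filebeat.yml) so the same files are not read twice:

```yaml
filebeat.modules:
- module: nginx
  access:
    enabled: true
    # Override the default access log location (hypothetical path)
    var.paths: ["/opt/nginx/logs/access.log*"]
  error:
    enabled: true
    # Override the default error log location (hypothetical path)
    var.paths: ["/opt/nginx/logs/error.log*"]
```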

Conclusion

Filebeat guarantees that log events are delivered to the configured output at least once and with no data loss. It achieves this by storing the delivery state of each event in its registry file, so it can resume from where it left off and keep retrying until the output acknowledges the events.
