Updated March 14, 2023

Definition of Logstash Filter JSON

The Logstash json filter is a parsing filter: it takes an existing event field that contains JSON and expands it into a real data structure within the Logstash event. By default, the parsed JSON is placed at the root of the event, but the target option can be used to store it in any arbitrary event field. The plugin also includes a few fallback scenarios for when parsing goes wrong. JSON is a popular log format because it allows users to create structured, standardized messages that are simple to read and analyze.
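As a minimal sketch of the source and target options described above, assume the message field holds a JSON string such as {"user": "alice", "status": 200}. The field name parsed and the sample keys are hypothetical and chosen only for illustration.

```
filter {
  json {
    # Field that holds the raw JSON string
    source => "message"
    # Without target, the parsed keys (user, status) land at the root of the event.
    # With target, they are nested instead: [parsed][user] and [parsed][status].
    target => "parsed"
  }
}
```

Using a target is one way to keep parsed keys from overwriting fields that already exist at the root of the event.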

What is logstash filter json?

- If the parsed data contains an @timestamp field, the json filter tries to use it as the @timestamp of the event.

- If parsing that timestamp fails, the field is renamed to _@timestamp and the event is tagged with _timestampparsefailure.

- Logstash manages the resource-intensive activity of gathering and processing logs in ELK.

- Logstash’s processing ensures that our log messages are correctly parsed and formatted, and it is this structure that allows us to analyze and display the data more readily after indexing in Elasticsearch.

- We decide how the data is processed in the filter portion of our Logstash configuration files.

- To determine how to change the logs, we can choose from a huge variety of officially supported and community Logstash filter plugins.

- The Logstash json filter plugin extracts and maintains the JSON data structure within the log message, allowing us to keep the JSON structure of a complete message or a specific field.

- The source configuration option specifies which field in the log should be parsed for JSON.

- When the entire message field is JSON, we can use the target option to expand it into a data structure under a field of our choice, for example a field called log.

- Logstash is a data processing pipeline that collects data from a variety of sources, transforms it, and sends it to a specific location.

- Logstash is most typically used to deliver data to Elasticsearch, which can then be viewed in Kibana. The ELK stack is made up of three components: Elasticsearch, Logstash, and Kibana.

- To configure how incoming events are processed, Logstash employs configuration files.

- The json filter is the Logstash filter for parsing JSON. Within the Logstash event, it expands an existing field that contains JSON into a real data structure.
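The fallback behavior mentioned in the list above can be sketched as follows. When the JSON itself cannot be parsed, the filter tags the event with _jsonparsefailure by default, and when only the embedded @timestamp is unparseable, it tags the event with _timestampparsefailure. The parse_status field is a hypothetical name used here only to make the two cases visible.

```
filter {
  json {
    source => "message"
    # Default value, written out here for visibility
    tag_on_failure => ["_jsonparsefailure"]
  }

  # Hypothetical handling: mark events whose JSON could not be parsed
  if "_jsonparsefailure" in [tags] {
    mutate {
      add_field => { "parse_status" => "invalid_json" }
    }
  }

  # Events whose embedded @timestamp could not be parsed keep the raw value in _@timestamp
  if "_timestampparsefailure" in [tags] {
    mutate {
      add_field => { "parse_status" => "invalid_timestamp" }
    }
  }
}
```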

How to use logstash filter JSON?

The steps below show how to use the Logstash json filter.

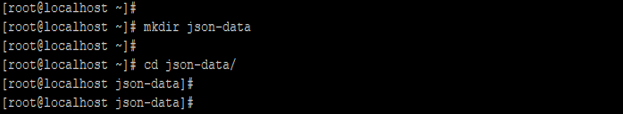

1) Create json data directory –

In this step, we create a data directory named json-data using the mkdir command.

# mkdir json-data

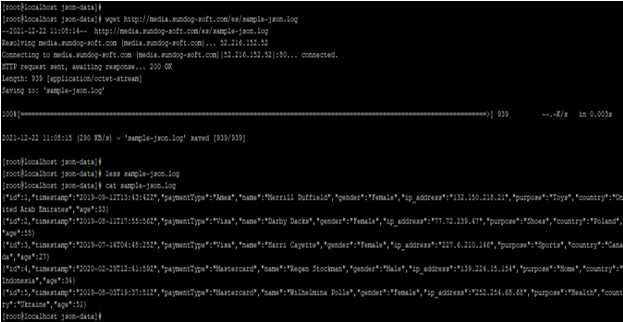

2) Create or download sample json.log file –

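In this step, we download the sample JSON file using the wget command. We can also create it with an editor. The file needs to end up in the json-data directory, because the configuration in the next step reads it from /root/json-data/sample-json.log.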

# wget http://media.sundog-soft.com/es/sample-json.log

# cat sample-json.log

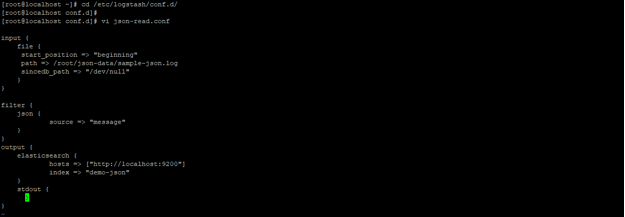

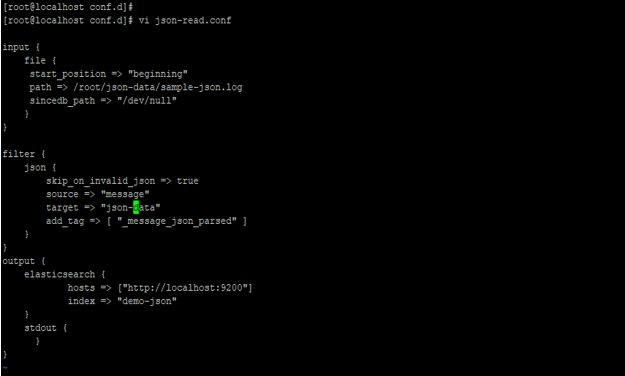

3) Configure the logstash config file –

In this step, we configure Logstash by creating the json-read.conf file in the /etc/logstash/conf.d directory.

# cd /etc/logstash/conf.d/

# vi json-read.conf

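    input {
      file {
        # Read the file from the start instead of tailing it
        start_position => "beginning"
        # The path must be a quoted, absolute path
        path => "/root/json-data/sample-json.log"
        # Do not remember the read position between runs
        sincedb_path => "/dev/null"
      }
    }

    filter {
      json {
        # Parse the JSON string held in the message field
        source => "message"
      }
    }

    output {
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "demo-json"
      }
      stdout {
      }
    }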

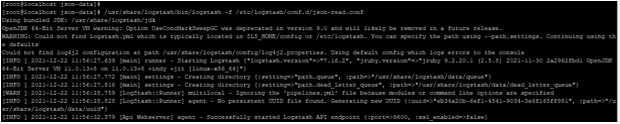

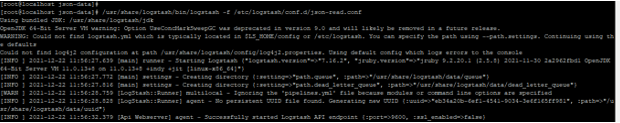

4) Run the json configuration file using logstash –

In this step, we run Logstash with the json configuration file as follows.

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/json-read.conf

5) After adding the json filter, we can also add other filters, such as mutate, to the configuration file. After each change, we need to run Logstash with the configuration file again.
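A minimal sketch of step 5, assuming we want to drop the raw message string once it has been parsed. The conditional is an assumption added here so that events that failed to parse keep their original message.

```
filter {
  json {
    source => "message"
  }

  # Only remove the raw string when parsing succeeded
  if "_jsonparsefailure" not in [tags] {
    mutate {
      remove_field => ["message"]
    }
  }
}
```

Filters run in the order in which they appear, so mutate has to come after json to see the parsed fields.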

Logstash filter json Plugin

- The json filter is a plugin for Logstash. It is completely free and open source, licensed under Apache 2.0.

- Logstash offers an infrastructure for this plugin’s documentation to be generated automatically. Because we write documentation in the asciidoc format, any comments in the source code will be transformed first to asciidoc, then to html. All plugin documentation is kept in one place.

- Elasticsearch indexes events and log messages that Logstash collects, processes, and transmits. Kibana offers a professional dashboard for searching and navigating the data, along with the attractive dataviz that has become typical in professional products.

- Logstash allows users to receive and publish event streams in a variety of formats, as well as perform various enrichment and transformation operations on their data. As we might expect, Logstash provides a substantial amount of help for that transformation.

- The path field in the input plugin specifies one or more JSON files to be read. The JSON filter converts JSON data into a Java object, which can be a map or an ArrayList depending on the file structure.

- The output plugin tells Logstash to send the device detection result to standard output.

- The wurfl device detection filter JSON snippet contains all of the settings required for the WURFL micro service plugin to function.

- Logstash can be used to enrich data before it is sent to Elasticsearch. Logstash has a number of lookup plugin filters for enhancing data. For storing enrichment data, many of these rely on components outside of the Logstash pipeline.
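The point above about the path field accepting one or more files can be sketched as follows. The second entry is a hypothetical glob pattern added for illustration; only sample-json.log comes from this article.

```
input {
  file {
    # path accepts an array, and each entry may be a glob pattern
    path => ["/root/json-data/sample-json.log", "/root/json-data/*.json"]
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}
```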

Examples

The steps below walk through a Logstash json filter example. We edit the configuration file created earlier.

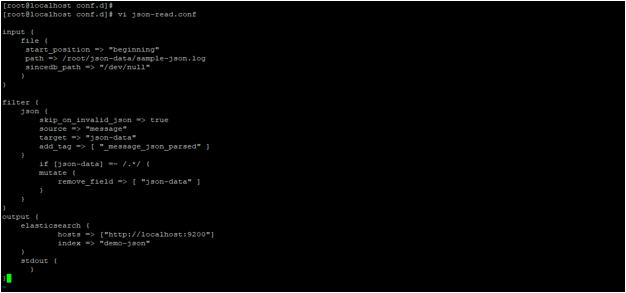

1) Edit the logstash configuration file –

# vi json-read.conf

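    input {
      file {
        start_position => "beginning"
        # The path must be a quoted, absolute path
        path => "/root/json-data/sample-json.log"
        sincedb_path => "/dev/null"
      }
    }

    filter {
      json {
        # Skip lines that are not valid JSON instead of tagging them as failures
        skip_on_invalid_json => true
        source => "message"
        # Store the parsed structure under json-data instead of the event root
        target => "json-data"
        # Tag added only when the filter succeeds
        add_tag => [ "_message_json_parsed" ]
      }
    }

    output {
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "demo-json"
      }
      stdout {
      }
    }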

2) Run the json configuration file using logstash –

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/json-read.conf
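With skip_on_invalid_json enabled, lines that are not valid JSON pass through without a failure tag, so the _message_json_parsed tag set by add_tag is what distinguishes parsed events. As a sketch under that assumption, the output section could route on the tag. Sending unparsed events only to stdout is a hypothetical choice for illustration, not part of the original example.

```
output {
  if "_message_json_parsed" in [tags] {
    # Successfully parsed events go to the index
    elasticsearch {
      hosts => ["http://localhost:9200"]
      index => "demo-json"
    }
  } else {
    # Everything else is printed for inspection
    stdout {
      codec => rubydebug
    }
  }
}
```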

3) Update the logstash configuration file –

# vi json-read.conf

    # Add inside the filter block, after the json filter
    if [json-data] =~ /.*/ {
      mutate {
        remove_field => [ "json-data" ]
      }
    }

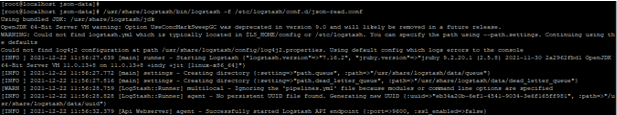

4) Run the json configuration file using logstash –

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/json-read.conf

Conclusion

The Logstash json filter is used for parsing: within the Logstash event, it expands an existing field that contains JSON into a real data structure. If the parsed data contains an @timestamp field, the filter attempts to use it as the timestamp of the event.
